Running Lightcone Infrastructure, which runs LessWrong. You can reach me at habryka@lesswrong.com

Comments

Hmm, I think the first bullet point is pretty precisely what I am talking about (though to be clear, I haven't read the paper in detail).

I was specifically saying that trying to somehow get feedback from future tokens into the next token objective would probably do some interesting things and enable a bunch of cross-token optimization that currently isn't happening, which would improve performance on some tasks. This seems like what's going on here.

Agree that another major component of the paper is accelerating inference, which I wasn't talking about. I would have to read the paper in more detail to get a sense of how much it's just doing that, in which case I wouldn't think it's a good example.

Oh no, I wonder what happened. Re-importing it right now.

@johnswentworth I think this paper basically does the thing I was talking about (with pretty impressive results), though I haven't read it in a ton of detail: https://news.ycombinator.com/item?id=40220851

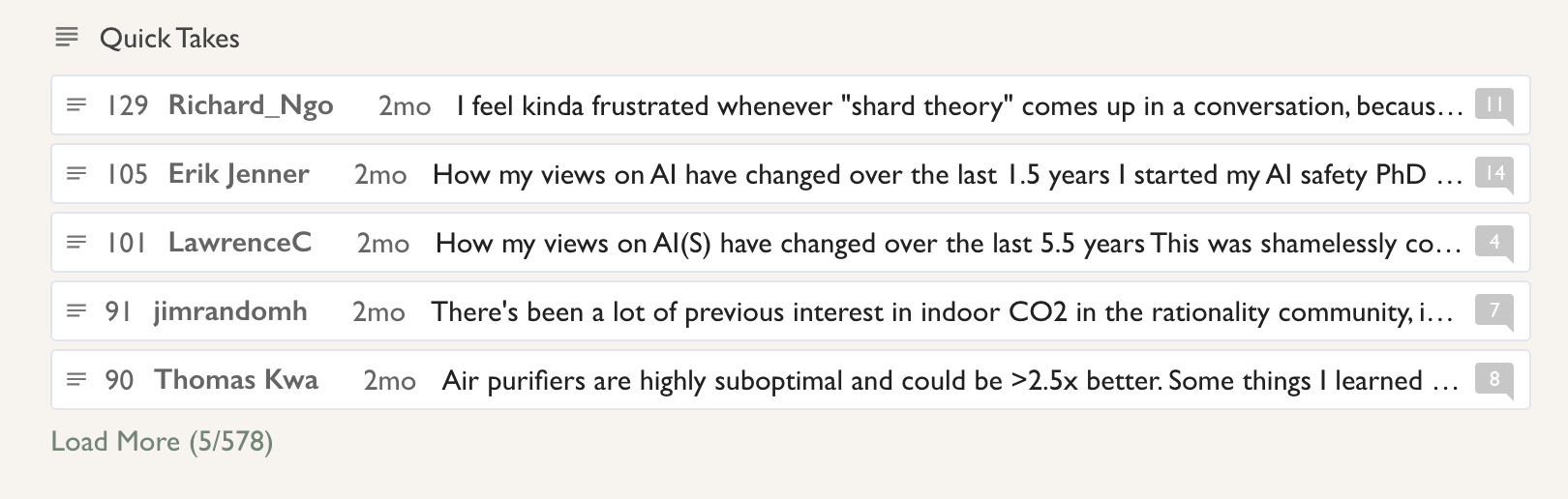

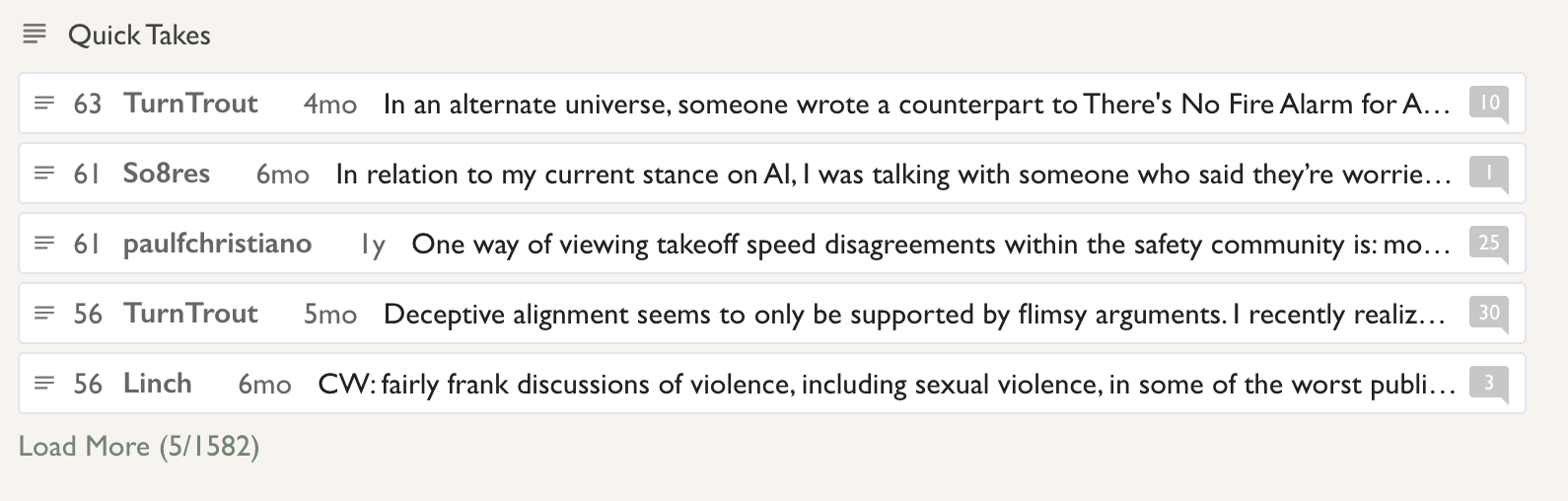

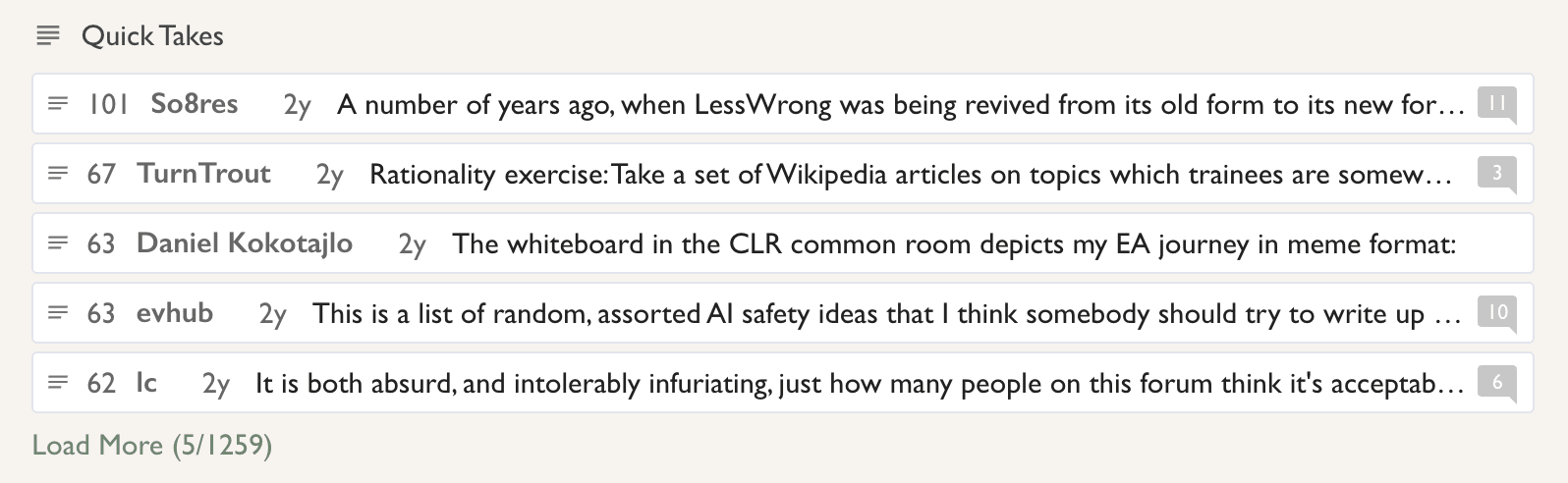

You can! Just go to the all-posts page, sort by year, and the highest-rated shortform posts for each year will be in the Quick Takes section:

2024:

2023:

2022:

Promoted to curated: Formalizing what it means for transformers to learn "the underlying world model" when engaging in next-token prediction tasks seems pretty useful. It's an abstraction that I see used all the time when discussing risks from models where the vast majority of the compute was spent in pre-training, and the details usually get handwaved. It seems useful to understand what exactly we mean by that in more detail.

I have not done a thorough review of this kind of work, but it seems to me that others also thought the basic ideas in the work hold up, and reading this post gave me crisper abstractions to talk about this kind of stuff in the future.

Three is a bit much. I am honestly not sure what's better. My guess is putting them all into one. (Context: I am one of the LTFF fund managers.)

Yeah, I feel kind of excited about having some strong-downvote and strong-upvote UI which gives you one of a standard set of options for explaining your vote, or allows you to leave it unexplained, all anonymous.

I edited the top comment to do that.

My steelman of this (though to be clear I think your comment makes good points):

There is a large difference between a system being helpful and a system being aligned. Ultimately AI existential risk is a coordination problem where I expect catastrophic consequences because a bunch of people want to build AGI without making it safe. Therefore making technologies that in a naive and short-term sense just help AGI developers build whatever they want to build will have bad consequences. If I trusted everyone to use their intelligence just for good things, we wouldn't have anthropogenic existential risk on our hands.

Some of those technologies might also end up useful for getting the AI to be more properly aligned, or maybe for helping with work that reduces the risk of AI catastrophe some other way, though my current sense is that this kind of work is pretty different and doesn't benefit remotely as much from generically locally-helpful AI.

In general I feel pretty sad about conflating "alignment" with "short-term intent alignment". I think the two problems are related but have crucial differences, and I don't think the latter generalizes that well to the former (for all the usual sycophancy/treacherous-turn reasons). Indeed, progress on the latter IMO mostly makes the world marginally worse, because the thing it is most likely to be used for is developing existentially dangerous AI systems faster.

Edit: Another really important dimension to model here is not just the effect of that kind of research on what individual researchers will do, but also its effect on what the market wants to invest in. My standard story of doom is centrally rooted in there being very strong short-term economic incentives to build more capable AGI for any individual, enabling people to make billions to trillions of dollars, while the downside risk is a distributed negative externality that is not at all priced into the costs of AI development. Developing applications of AI that make a lot of money without accounting for the negative extinction externalities can therefore be really quite bad for the world.