It's not just that the tails stop being correlated, it's that there can be a spurious negative correlation. In any of your scatterplots, you could slice off the top right corner (with a diagonal line running downwards to the right), and what was left above the line would look like a negative correlation. This is sometimes known as Berkson's paradox.

There's also a related problem in that population substructures can give you multiple negatively correlated associations stacked beside each other in a positively correlated way (think of it like several diagonal lines going downwards to the right, parallel to each other), giving an 'ecological fallacy' when you switch between levels of analysis.

(A real-world case of this is religiosity and health. Internationally, countries which are less religious tend to be healthier, but often within first world countries, religion confers a survival benefit.)

Another example I've heard is SAT scores. At any given school, the math and verbal scores are negatively correlated, because schools tend to select people who have around the same total score. But overall, math and verbal scores are positively correlated.

So in other words, it's not that the strongest can't also be the tallest (etc), but that someone getting that lucky twice more or less never happens. And if you need multiple factors to be good at something, getting pretty lucky on several factors is more likely than getting extremely lucky on one and pretty lucky on the rest.

I enjoyed this post - very clear.

This is all correct so far as I can tell. Yay! (Posting because of the don't-only-post-criticism discipline.)

One angle for thinking about why the tails come apart (which seems worth highlighting even more than it was highlighted in the OP) is that the farther out you go in the tail on some variable, the smaller the set of people you're dealing with.

Which is better, the best basketball team that you can put together from people born in Pennsylvania or the best basketball team that you can put together from people born in Delaware? Probably the Pennsylvania team, since there are about 13x as many people in that state so you get to draw from a larger pool. If there were no other relevant differences between the states then you'd expect 13 of the best 14 players to be Pennsylvanians, and probably the two neighboring states are similar enough so that Delaware can't overcome that population gap.

Now, imagine you're picking the best 10 basketball players from the 1,000 tallest basketball-aged Americans (20-34 year-olds), and you're putting together another group consisting of the best 10 basketball players from the next 100,000 tallest basketball-aged Americans. Which is a better group of basketball players? In this case it's not obvious - getting to pick from a pool...

Just as markets are anti-inductive, it turns out that markets reverse the "tails come apart" phenomenon found elsewhere. When times are "ordinary", performance in different sectors is largely uncorrelated, but when things go to shit, they go to shit all together, a phenomenon termed "tail dependence".

Great article overall. Regression to the mean is a key fact of statistics, and far too few people incorporate it into their intuition.

But there's a key misunderstanding in the second-to-last graph (the one with the drawn-in blue and red "outcome" and "factor"). The black line, indicating a correlation of 1, corresponds to nothing in reality. The true correlation is the line from the vertical tangent point at the right (marked) to the vertical tangent point at the left (unmarked). If causality indeed runs from "factor" (height) to "outcome" (skill), that's how much extra skill an extra helping of height will give you. Thus, the diagonal red line should follow this direction, not be parallel to the 45 degree black line. If you draw this line, you'll notice that each point on it has equal vertical distance to the top and bottom of the elliptical "envelope" (which is, of course, not a true envelope for all the probability mass, just an indication that probability density is higher for any point inside than any point outside).

Things are a little more complex if the correlation is due to a mutual cause, "reverse" causation (from...

This looks cool. My biggest caution would be that this effect may be tied to the specific class of data generating processes you're looking at.

Your framing seems to be that you look at the world as being filled with entities whose features under any conceivable measurements are distributed as independent multivariate normals. The predictive factor is a feature and so is the outcome. Then using extreme order statistics of the predictive factor to make inferences about the extreme order statistics of the outcome is informative but unreliable, as you illustrated. Playing around in R, reliability seems better for thin-tailed distributions (e.g., uniform) and worse for heavy-tailed distributions (e.g., Cauchy). Fixing the distributions and letting the number of observations vary, I agree with you that the probability of picking exactly the greatest outcome goes to zero. But I'd conjecture that the probability that the observation with the greatest factor is in some fixed percentile of the greatest outcomes will go to one, at least in the thin-tailed case and maybe in the normal case.

But consider another data generating process. If you carry out the following little experiment in R

fac <- …

I ran some simulations in Python, and (if I did this correctly) it seems that if r > 0.95, you should expect the most extreme data point on one variable to also be the most extreme on the other more than 50% of the time (even more if sample size <= 100).

http://nbviewer.jupyter.org/github/ricardoV94/stats/blob/master/correlation_simulations.ipynb
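A minimal sketch of that kind of simulation, assuming standard bivariate normal data. This is not the code from the linked notebook; the function name `prob_same_extreme` and its defaults are illustrative. It estimates how often the point with the largest x also has the largest y.

```python
import numpy as np


def prob_same_extreme(r, n, trials=2000, seed=0):
    """Estimate how often the largest-x point is also the largest-y point.

    Assumes n independent draws from a standard bivariate normal with
    correlation r; the simulation is repeated `trials` times.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((trials, n))
    noise = rng.standard_normal((trials, n))
    y = r * x + np.sqrt(1.0 - r**2) * noise  # corr(x, y) = r, var(y) = 1
    same = np.argmax(x, axis=1) == np.argmax(y, axis=1)
    return same.mean()


for r in (0.5, 0.8, 0.95, 0.99):
    print(f"r = {r}: {prob_same_extreme(r, n=100):.2f}")
```

Changing `n` as well as `r` shows the sample-size dependence mentioned above.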

Upvoted. I really like the explanation.

In the spirit of Don't Explain Falsehoods, it would be nice to test the ubiquity of this phenomenon by specifying a measure of this phenomenon (e.g. correlation) on some representative randomly-chosen pairs. But I don't mean to suggest that you should have done that before posting this.

I must quibble with some of the epistemological terminology here. Both "graphical explanation" and "geometric explanation" are not properly speaking explanations. They merely restate the original empirical observation in graphical or statistical terms, but do not explain why the tails-coming-apart phenomenon occurs. The "intuitive explanation" on the other hand does explain why the phenomenon occurs (i.e. the distributions of other factors influencing the outcome come into play). Similarly, "regression to the mean" is merely a restatement of the empirical ...

Good post.

If the ellipse is very narrow, things are indeed well-modeled by a linear relationship, and the biggest Y coordinate for a point is likely to also have close to biggest X coordinate.

If the ellipse is not narrow, that could be for two reasons. Either the underlying truth is indeed linear, but your data is very noisy. Or the underlying truth is not linear, and you should not use a linear model. (Or both, naturally).

If the underlying truth is linear, but your data is very noisy, then what happens to the X coordinate of points with given Y val...

(It would be nice if you would link fulltext instead of providing citations; if you don't have access to the fulltext, it's a bad idea to cite it, and if you do, you should provide it for other people who are trying to evaluate your claims and whether the paper is relevant or wrong.)

I've put up the first paper at https://dl.dropboxusercontent.com/u/85192141/1971-goldberg.pdf / https://pdf.yt/d/Ux7RZXbo0n374dUU I don't think this is particularly relevant: it only shows that 2 very specific equations (pg4, #3 & #4) did not outperform the linear model on a particular dataset. Too bad for Einhorn 1971.

Your second paper doesn't support the claims:

...A third possibility is that incorrect methods were used to measure the amount of information in experts’ judgments; use of the “correct” measurement method might support the Information-Use Hypothesis. In the studies reported here, four techniques were used to measure information use: protocol analysis, multiple regression analysis, analysis of variance, and self-ratings by judges. Despite differences in measurement methods, comparable results were reported. Other methodological issues might be raised, but the studies seem varied enough t

Interesting read! That makes sense.

One little side note, though.

So, ceritus paribus,

Did you mean ceteris paribus?

(Ha, finally a chance for me as a language geek to contribute something to all the math talk. :P )

I'd say that this is regression to the mean. If two variables are correlated with |r| < 1, then extreme values on one variable will be associated with somewhat less extreme values on the other variable. So people who are +4 SD in height will tend to be less than +4 SD in basketball ability, and people who are +4 SD in basketball ability will tend to be less than +4 SD in height.

Thank you for pointing out that the high-IQ problem is probably a statistical effect rather than a "too much of a good thing" effect. That was very interesting.

Let me attempt the problem from a simple mathematical point of view.

Let basketball-playing ability, Z, be just the sum of height, X, and agility, Y. Both X and Y are Gaussian-distributed with mean 0 and variance 1. Assume X and Y are independent.

So, if we know that Z>4, what is the most probable combination of X and Y?

The probability of X>2 and Y>2 is: P(X>2)P(Y>2)=5.2e-4

The probabili...
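The 5.2e-4 figure can be checked with the standard normal tail function. The sketch below (the helper name `upper_tail` is hypothetical) also computes, for comparison, P(X>4) on its own and P(Z>4) for Z = X + Y, which under the stated independence assumption is normal with variance 2.

```python
from math import erfc, sqrt


def upper_tail(z):
    """P(N(0, 1) > z), via the complementary error function."""
    return 0.5 * erfc(z / sqrt(2.0))


# Both factors moderately extreme (independence assumed): P(X>2) * P(Y>2)
p_both_moderate = upper_tail(2.0) ** 2
# One factor extreme on its own, regardless of the other: P(X>4)
p_height_alone = upper_tail(4.0)
# The event of interest: Z = X + Y ~ N(0, 2), so P(Z>4) = P(N(0,1) > 4/sqrt(2))
p_sum = upper_tail(4.0 / sqrt(2.0))

print(f"P(X>2)P(Y>2) = {p_both_moderate:.2e}")
print(f"P(X>4)       = {p_height_alone:.2e}")
print(f"P(Z>4)       = {p_sum:.2e}")
```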

Given a correlation, the envelope of the distribution should form some sort of ellipse

That isn't an explanation, but a stronger claim. Why should it form an ellipse?

A model of an independent factor or noise is an explanation of the ellipse, and thus of the main point. But people may find this middle section, with its assertion that we should expect ellipses, a stumbling block. Also, regression to the mean and the tails coming apart are much more general than ellipses, but ellipses are pretty common.

...It generally vindicates worries about regression to t

Also: this is very reminiscent of St. Rev's old post about economic inequality. http://st-rev.livejournal.com/383957.html

Isn't the far simpler and more likely scenario that you never have just one variable accounting for all of an outcome? If other variables are not perfectly correlated with the variable you are graphing you will get noise. Why is it surprising that that noise also exists in the most extreme points?

EDIT: misunderstood last few paragraphs.

This post has been a core part of how I think about Goodhart's Law. However, when I went to search for it just now, I couldn't find it, because I was using Goodhart's Law as a search term, but it doesn't appear anywhere in the text or in the comments.

So, I thought I'd mention the connection, to make this post easier for my future self and others to find. Also, other forms of this include:

- Campbell's law: https://en.wikipedia.org/wiki/Campbell%27s_law

- The Cobra effect: https://en.wikipedia.org/wiki/Cobra_effect

- Teaching to the tes

Following on your Toy Model concept, let's say the important factors in being (for example) a successful entrepreneur are Personality, Intelligence, Physical Health, and Luck.

If a given person is excellent (+3SD) in all but one of the categories, but only average or poor in the final category, they're probably not going to succeed. Poor health, bad luck, bad people skills, or lack of intelligence can keep an entrepreneur at mediocrity for their whole productive career.

Really any competitive venue can be subject to this analysis. What are the important skills? Does it make sense to treat them as semi-independent, and semi-multiplicative in arriving at the final score?

Statistical point: the variance of forecast error for correctly specified simple regression problems is equal to:

$$\sigma^2\left(1 + \frac{1}{N} + \frac{(x_o - \bar{x})^2}{\sum_i (x_i - \bar{x})^2}\right)$$

So forecast error increases as x_o moves away from x_mean, especially when the variance of x is low by comparison.

Edit: Sub notation was apparently indenting things. I'm going to take a picture from my stats book tonight. Should be more readable.

Edit: Here's a more readable link. http://i.imgur.com/pu8lg0Wh.jpg
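A small sketch of that formula in Python, assuming the error variance sigma² is known (in practice it would be estimated from the residuals). `forecast_error_variance` is a hypothetical helper name.

```python
import numpy as np


def forecast_error_variance(x, x_o, sigma2):
    """Forecast-error variance at a new point x_o for a correctly specified
    simple linear regression fitted on predictor values x.

    sigma2 is the error variance, treated here as known (an assumption).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    x_mean = x.mean()
    sxx = np.sum((x - x_mean) ** 2)
    return sigma2 * (1.0 + 1.0 / n + (x_o - x_mean) ** 2 / sxx)


x = np.arange(10)  # predictor values 0..9, mean 4.5
for x_o in (4.5, 10.0, 20.0):
    print(x_o, forecast_error_variance(x, x_o, sigma2=1.0))
```

Shrinking the spread of `x` (e.g. `0.1 * np.arange(10)`) while keeping the same offset of `x_o` from the mean makes the last term, and so the forecast error, much larger.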

For business in particular, I think network size and network effects are the reason that the very top earners are much more deviant in earnings than in intellect. The fact that you can capture entire multi-billion-dollar markets, because modern society allows a single product to be distributed worldwide, will multiply the value of the "top" product by a lot more than its quality might justify.

Interesting post. Well thought out, with an original angle.

In the direction of constructive feedback, consider that the concept of sample size -- while it seems to help with the heuristic explanation -- likely just muddies the water. (We'd still have the effect even if there were plenty of points at all values.)

For example, suppose there were so many people with extreme height that some of them also had extreme agility (with infinite sample size, we'd even reliably have that the best players were also the tallest.) So: some of the tallest people are also the...

I've made a visualization tool for that:

https://codepen.io/qbolec/pen/qBybXQe

It generates an elliptical cloud of white points where X is distributed normally, and Y=normal + X*0.3, so the two are correlated. Then you can define a green range on X and Y axis, and the tool computes the correlation in a sample (red points) restricted to that (green) range.

So, the correlation in the general population (white points) should be positive (~0.29). But if I restrict attention to upper right corner, then it is much lower, and often negative.
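A rough Python analogue of the tool (not its actual code), using the same construction Y = normal + 0.3·X. It compares the full-sample correlation with the correlation inside an upper-right corner, and also with the region above a diagonal cut, the Berkson-style selection described at the top of this thread. The thresholds are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000
x = rng.standard_normal(n)
y = rng.standard_normal(n) + 0.3 * x  # same construction as the tool: r ~ 0.29

full_r = np.corrcoef(x, y)[0, 1]

# Rectangular upper-right corner: both variables above an (arbitrary) threshold.
corner = (x > 1.0) & (y > 1.0)
corner_r = np.corrcoef(x[corner], y[corner])[0, 1]

# Diagonal cut: keep only points above the line x + y = 3 (Berkson-style selection).
above = (x + y) > 3.0
diag_r = np.corrcoef(x[above], y[above])[0, 1]

print(f"full: {full_r:.2f}  corner: {corner_r:.2f}  above diagonal: {diag_r:.2f}")
```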

Fantastic, I wish I'd had this back when almost everyone in LW/EA circles I met was reading the biographies of everyone in the Fortune 400 and trying to spot the common factors. A surprisingly common strategy that's likely not to work for exactly these reasons.

My guess is that there are several variables that are indeed positively correlated throughout the entire range, but are particularly highly correlated at the very top. Why not? I'm pretty sure we can come up with a list.

What is interesting is the strength of these relationships appear to deteriorate as you advance far along the right tail.

I read that claim as saying that if you sample the 45th to 55th percentile you will get a stronger correlation than if you sample the 90th to 100th percentile. Is that what you are arguing?

I don't think there's anything special about the tails.

Take a sheet of paper, and cover up the left 9/10 of the high-correlation graph. That leaves the right tail of the X variable. The remaining datapoints have a much less linear shape.

But: take two sheets of paper, and cover up (say) the left 4/10, and the right 5/10. You get the same shape left over! It has nothing to do with the tail -- it just has to do with compressing the range of X values.

The correlation, roughly speaking, tells you what percentage of the variation is not caused by random error. When you compress the X, you compress the "real" variation, but leave the "error" variation as is. So the correlation drops.
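A quick way to check this claim, assuming bivariate normal data with r = 0.8 and two X windows of equal width, one near the centre and one out in the tail (the window bounds are arbitrary):

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200_000
r = 0.8
x = rng.standard_normal(n)
y = r * x + np.sqrt(1 - r**2) * rng.standard_normal(n)  # population corr = 0.8


def corr_in_window(lo, hi):
    """Correlation of x and y among points with lo <= x < hi."""
    keep = (x >= lo) & (x < hi)
    return np.corrcoef(x[keep], y[keep])[0, 1]


full = np.corrcoef(x, y)[0, 1]
middle = corr_in_window(0.0, 0.5)  # a slice near the centre of X
tail = corr_in_window(1.5, 2.0)    # a slice of the same width out in the tail

# Both slices compress the spread of x while leaving the noise in y untouched,
# so both correlations fall well below the full-sample value.
print(f"full: {full:.2f}  middle slice: {middle:.2f}  tail slice: {tail:.2f}")
```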

[I'm unsure how much this rehashes things 'everyone knows already' - if old hat, feel free to downvote into oblivion. My other motivation for the cross-post is the hope it might catch the interest of someone with a stronger mathematical background who could make this line of argument more robust]

[Edit 2014/11/14: mainly adjustments and rewording in light of the many helpful comments below (thanks!). I've also added a geometric explanation.]

Many outcomes of interest have pretty good predictors. It seems that height correlates with performance in basketball (the average height in the NBA is around 6'7"). Faster serves in tennis improve one's likelihood of winning. IQ scores are known to predict a slew of outcomes, from income to chance of imprisonment to lifespan.

What's interesting is what happens to these relationships 'out on the tail': extreme outliers of a given predictor are seldom similarly extreme outliers on the outcome it predicts, and vice versa. Although 6'7" is very tall, it lies within a few standard deviations of the median US adult male height - there are many thousands of US men taller than the average NBA player who are not in the NBA. Although elite tennis players have very fast serves, the players who have hit the fastest serves ever recorded aren't the very best players of their time. It is harder to look at the IQ case due to test ceilings, but again there seems to be some divergence near the top: the very highest earners tend to be very smart, but their intelligence is not in step with their income (their cognitive ability is around +3 to +4 SD above the mean, yet their wealth is much higher than this) (1).

The trend seems to be that even when two factors are correlated, their tails diverge: the fastest servers are good tennis players, but not the very best (and the very best players serve fast, but not the very fastest); the very richest tend to be smart, but not the very smartest (and vice versa). Why?

Too much of a good thing?

One candidate explanation would be that more isn't always better, and the correlations one gets looking at the whole population don't capture a reversal at the right tail. Maybe being taller is good for basketball up to a point, but being really tall leads to greater costs in terms of things like agility. Maybe having a faster serve is better all things being equal, but focusing too heavily on one's serve counterproductively neglects other areas of one's game. Maybe a high IQ is good for earning money, but a stratospherically high IQ has an increased risk of productivity-reducing mental illness. Or something along those lines.

I would guess that these sorts of 'hidden trade-offs' are common. But the 'divergence of tails' seems pretty ubiquitous (the tallest aren't the heaviest, the smartest parents don't have the smartest children, the fastest runners aren't the best footballers, etc. etc.), and it would be weird if there were always a 'too much of a good thing' story to be told for all of these associations. I think there is a more general explanation.

The simple graphical explanation

[Inspired by this essay from Grady Towers]

Suppose you make a scatter plot of two correlated variables. Here's one I grabbed off Google, comparing the speed of a ball out of a baseball pitcher's hand with its speed crossing the plate:

It is unsurprising to see these are correlated (I'd guess the R-square is > 0.8). But if one looks at the extreme end of the graph, the very fastest balls out of the hand aren't the very fastest balls crossing the plate, and vice versa. This feature is general. Look at this data (again convenience-sampled by googling 'scatter plot'):

Or this:

Or this:

Given a correlation, the envelope of the distribution should form some sort of ellipse, narrower as the correlation grows stronger, and more circular as it gets weaker: (2)

The thing is, as one approaches the far corners of this ellipse, we see 'divergence of the tails': as the ellipse doesn't sharpen to a point, there are bulges where the maximum x and y values lie with sub-maximal y and x values respectively:

So this offers an explanation of why divergence at the tails is ubiquitous. Provided the sample size is largeish, and the correlation not too tight (the tighter the correlation, the larger the sample size required), one will observe the ellipses with the bulging sides of the distribution. (3)

Hence the very best basketball players aren't the very tallest (and vice versa), the very wealthiest not the very smartest, and so on and so forth for any correlated X and Y. If X and Y are "Estimated effect size" and "Actual effect size", or "Performance at T", and "Performance at T+n", then you have a graphical display of winner's curse and regression to the mean.
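A minimal winner's-curse sketch, assuming 'estimated' values are true values plus independent normal noise of equal variance (so r ≈ 0.71); the number of candidates and trials are arbitrary. When we pick the candidate with the highest estimate, that estimate overstates the candidate's true value on average, although the true value is still above the mean.

```python
import numpy as np

rng = np.random.default_rng(1)
trials, k = 5_000, 50  # k candidates per trial; both numbers arbitrary
true = rng.standard_normal((trials, k))
estimate = true + rng.standard_normal((trials, k))  # noisy measurement, r ~ 0.71

rows = np.arange(trials)
pick = np.argmax(estimate, axis=1)  # the apparent winner in each trial
est_of_winner = estimate[rows, pick]
true_of_winner = true[rows, pick]

# The winner's estimate overstates its true value: winner's curse.
print(f"mean estimate of winner:   {est_of_winner.mean():.2f}")
print(f"mean true value of winner: {true_of_winner.mean():.2f}")
```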

An intuitive explanation of the graphical explanation

It would be nice to have an intuitive handle on why this happens, even if we can be convinced that it happens. Here's my offer towards an explanation:

The fact that a correlation is less than 1 implies that other things matter to an outcome of interest. Although being tall matters for being good at basketball, strength, agility, and hand-eye coordination matter as well (to name but a few). The same applies to other outcomes where multiple factors play a role: being smart helps in getting rich, but so does being hard working, being lucky, and so on.

For a toy model, pretend that wealth is wholly explained by two factors: intelligence and conscientiousness. Let's also say these are equally important to the outcome, independent of one another and are normally distributed. (4) So, ceteris paribus, being more intelligent will make one richer, and the toy model stipulates there aren't 'hidden trade-offs': there's no negative correlation between intelligence and conscientiousness, even at the extremes. Yet the graphical explanation suggests we should still see divergence of the tails: the very smartest shouldn't be the very richest.

The intuitive explanation would go like this: start at the extreme tail - +4SD above the mean for intelligence, say. Although this gives these people a massive boost to their wealth, we'd expect them to be average with respect to conscientiousness (we've stipulated the two are independent). Further, as this ultra-smart population is small, we'd expect them to fall close to the average in this other independent factor: with 10 people at +4SD, you wouldn't expect any of them to be +2SD in conscientiousness.

Move down the tail to less extremely smart people - +3SD, say. These people don't get such a boost to their wealth from their intelligence, but there should be a lot more of them (if 10 at +4SD, around 500 at +3SD). This means one should expect more variation in conscientiousness: it is much less surprising to find someone +3SD in intelligence and also +2SD in conscientiousness, and in the world where these things were equally important, they would 'beat' someone +4SD in intelligence but average in conscientiousness. Although a +4SD intelligence person will likely be richer than a given +3SD intelligence person (the mean conscientiousness in both populations is 0SD, and so the average wealth of the +4SD intelligence population is 1SD higher than that of the +3SD intelligence population), the wealthiest of the +4SDs will not be as wealthy as the wealthiest of the much larger number of +3SDs. The same sort of story emerges when we look at larger numbers of factors, and in cases where the factors contribute unequally to the outcome of interest.
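The toy model can be simulated directly. This sketch takes the illustrative group sizes from the text (10 people at exactly +4SD, 500 at exactly +3SD) and treats wealth as intelligence plus an independent standard normal conscientiousness term, all in SD units; the seed and number of trials are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(3)
trials = 5_000
n_at_4sd, n_at_3sd = 10, 500  # group sizes taken from the text

# wealth = intelligence + conscientiousness, in SD units; conscientiousness is
# an independent standard normal, intelligence is fixed within each group.
wealth_4 = 4.0 + rng.standard_normal((trials, n_at_4sd))
wealth_3 = 3.0 + rng.standard_normal((trials, n_at_3sd))

mean_gap = wealth_4.mean() - wealth_3.mean()  # average +4SD person vs average +3SD person
p_3sd_best_wins = np.mean(wealth_3.max(axis=1) > wealth_4.max(axis=1))

print(f"mean wealth gap (+4SD minus +3SD): {mean_gap:.2f}")
print(f"share of trials where the richest +3SD beats the richest +4SD: {p_3sd_best_wins:.2f}")
```

The average +4SD person stays about 1SD richer, yet in most trials the richest person comes from the larger +3SD group.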

When looking at a factor known to be predictive of an outcome, the largest outcome values will occur with sub-maximal factor values, as the larger population increases the chances of 'getting lucky' with the other factors:

So that's why the tails diverge.

A parallel geometric explanation

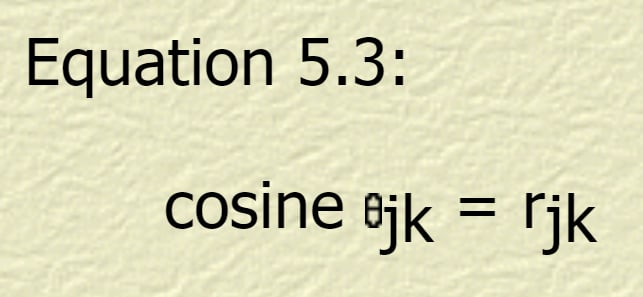

There's also a geometric explanation. The correlation coefficient r between two sets of data is the cosine of the angle between them when they are mean-centred and presented as vectors in N-dimensional space (explanations, derivations, and elaborations here, here, and here). (5) So here's another intuitive handle for tail divergence:

Grant a factor correlated with an outcome, which we represent with two vectors at an angle theta whose cosine equals the correlation r. 'Reading off' the expected outcome given a factor score is just moving along the factor vector and multiplying by cosine theta to get the distance along the outcome vector. As cos theta is never greater than 1, we see regression to the mean. The geometrical analogue to the tails coming apart is that the absolute difference between the length along the factor and the length along outcome|factor scales with the length along the factor: the gap between extreme values of a factor and the less extreme values of the outcome grows linearly as the factor value gets more extreme. For concreteness (and granting normality), a correlation of 0.5 (corresponding to an angle of sixty degrees) means that +4SD (~1/30,000) on a factor will be expected to be 'merely' +2SD (~1/40) in the outcome - and a correlation of 0.5 is remarkably strong in the social sciences, even though it implies the factor accounts for only a quarter of the variance (r² = 0.25).(6) The reverse - extreme outliers on outcome are not expected to be so extreme an outlier on a given contributing factor - follows by symmetry.
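A numerical check of the geometric picture on simulated data with a population correlation of 0.5 (sample size and seed are arbitrary): the cosine of the angle between the mean-centred vectors matches Pearson's r, and the least-squares slope between standardized variables is r, so +4SD on the factor predicts roughly +2SD on the outcome.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 1_000
x = rng.standard_normal(n)
y = 0.5 * x + np.sqrt(0.75) * rng.standard_normal(n)  # population corr = 0.5

# Mean-centre both data vectors, then take the cosine of the angle between them.
xc, yc = x - x.mean(), y - y.mean()
cos_theta = (xc @ yc) / (np.linalg.norm(xc) * np.linalg.norm(yc))
r = np.corrcoef(x, y)[0, 1]

# Least-squares slope of standardized y on standardized x equals r,
# so the expected outcome z-score is r times the factor z-score.
zx, zy = xc / xc.std(), yc / yc.std()
slope = (zx @ zy) / (zx @ zx)

print(f"cos(theta) = {cos_theta:.4f}, r = {r:.4f}, slope = {slope:.4f}")
print(f"angle for r = 0.5: {np.degrees(np.arccos(0.5)):.1f} degrees")
print(f"expected outcome at +4SD factor: {4 * slope:.2f} SD")
```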

Endnote: EA relevance

I think this is interesting in and of itself, but it has relevance to Effective Altruism, given it generally focuses on the right tail of various things (What are the most effective charities? What is the best career? etc.) It generally vindicates worries about regression to the mean or winner's curse, and suggests that these will be pretty insoluble in all cases where the populations are large: even if you have really good means of assessing the best charities or the best careers, so that your assessments correlate really strongly with which ones actually are the best, the very best ones you identify are unlikely to be actually the very best, as the tails will diverge.

This probably has limited practical relevance. Although you might expect that one of the 'not estimated as the very best' charities is in fact better than your estimated-to-be-best charity, you don't know which one, and your best bet remains your estimate (in the same way - at least in the toy model above - you should bet a 6'11" person is better at basketball than someone who is 6'4".)
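A sketch of why the estimate is still the best bet, assuming 20 hypothetical charities whose estimates correlate at 0.9 with their true effectiveness (bivariate normal, numbers arbitrary): the top-estimated charity is often not the truly best one, yet its expected true value still exceeds that of the charity ranked second by estimate.

```python
import numpy as np

rng = np.random.default_rng(11)
trials, k, r = 5_000, 20, 0.9  # 20 hypothetical charities, estimate-truth corr 0.9
true = rng.standard_normal((trials, k))
estimate = r * true + np.sqrt(1 - r**2) * rng.standard_normal((trials, k))

rows = np.arange(trials)
order = np.argsort(-estimate, axis=1)  # charities ranked by estimate, best first
top_true = true[rows, order[:, 0]]
second_true = true[rows, order[:, 1]]

# How often the top-estimated charity is actually the best one.
p_top_is_best = np.mean(order[:, 0] == np.argmax(true, axis=1))

print(f"P(top estimate is truly best): {p_top_is_best:.2f}")
print(f"mean true value, ranked 1st by estimate: {top_true.mean():.2f}")
print(f"mean true value, ranked 2nd by estimate: {second_true.mean():.2f}")
```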

There may be spread betting or portfolio scenarios where this factor comes into play - perhaps instead of funding AMF to diminishing returns when its marginal effectiveness dips below charity #2, we should be willing to spread funds sooner.(7) Mainly, though, it should lead us to be less self-confident.

1. Given income isn't normally distributed, using SDs might be misleading. But non-parametric ranking gives a similar picture: if Bill Gates is ~+4SD in intelligence, despite being the richest man in America, he is 'merely' in the smartest tens of thousands. Looking the other way, one might look at the generally modest achievements of people in high-IQ societies, but there are worries about adverse selection.

2. As nshepperd notes below, this depends on something like the multivariate CLT. I'm pretty sure this can be weakened: all that is needed, by the lights of my graphical intuition, is that the envelope be concave. It is also worth clarifying that the 'envelope' is only meant to illustrate the shape of the distribution, rather than some boundary that contains the entire probability mass: as suggested by homunq, it is a 'pdf isobar' where probability density is higher inside the line than outside it.

3. One needs a large enough sample to 'fill in' the elliptical population density envelope, and the tighter the correlation, the larger the sample needed to fill in the sub-maximal bulges. The Old Faithful case is an example where you actually do get a 'point', although it is likely an outlier.

4. It's clear that this model is fairly easy to extend to >2 factor cases, but it is worth noting that in cases where the factors are positively correlated, one would need to take the components of the factors that are independent of one another.

5. My intuition is that in Cartesian coordinates the R-square between correlated X and Y is actually also the cosine of the angle between the regression lines of X on Y and Y on X. But I can't see an obvious derivation, and I'm too lazy to demonstrate it myself. Sorry!

6. Another intuitive dividend is that this makes it clear why you can multiply by the correlation coefficient r to move between z-scores of correlated normal variables, which wasn't straightforwardly obvious to me.

7. I'd intuit, but again I can't demonstrate, that the case for this becomes stronger with highly skewed interventions where almost all the impact is focused in relatively low-probability channels, like averting a very specific existential risk.