Stephen Casper, scasper@mit.edu

TL;DR: being a professor in AI safety seems cool. It might be tractable and high impact to be one at a university that has lots of money, smart people, and math talent but an underperforming CS department – especially if that university is actively trying to grow and improve its CS capabilities.

--

Epistemic status: I have thought about this for a bit and just want to share a perspective and loft some ideas out there. This is not meant to be a thorough or definitive take.

Right now, relatively few professors at research universities put a strong emphasis on AI safety and on avoiding catastrophic risks from AI in their work. But being a professor working in AI safety might be impactful. It offers the chance to shape the interests and work of a potentially large number of students through classes, advising, research, and advocacy. Imagine how different things might be if we had dozens more people like Stuart Russell, Max Tegmark, or David Krueger peppered throughout academia.

I’m currently a grad student, but I might be interested in going into academia after I graduate, and I have been thinking about how to do it. This has led me to some speculative ideas about which universities might be good places to try to become a professor. I’m using this post to loft out some ideas in case anyone finds them interesting or wants to start a discussion.

One idea for being a professor in AI safety with a lot of impact could be to work at MIT, Berkeley, or Stanford, be surrounded by top talent, and add to the AI safety-relevant work these universities put out. For extremely talented and accomplished people, this is an excellent strategy. But there might be lots of great, low-hanging fruit in other places.

It might be relatively tractable and high-value to be a CS professor somewhere with a CS department that underperforms but has a lot of potential. An ideal university like this would be wealthy, have a lot of smart people, and have a lot of math talent, yet underperform in CS and be willing to spend a lot of money to get better at it soon. This offers an aspiring professor the chance to hit a hiring wave, get established in a growing department, and hopefully garner a lot of influence in the department and at the university.

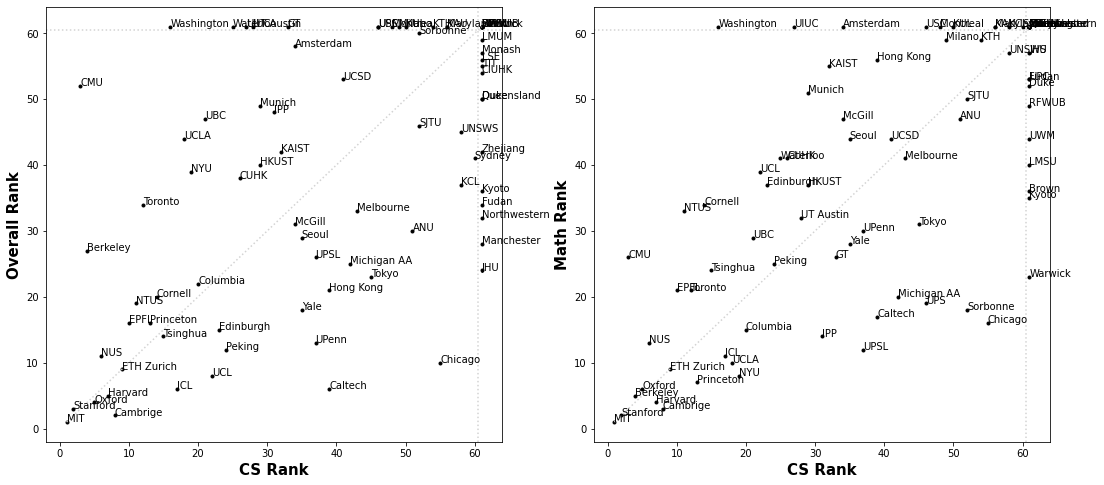

I went to topuniversities.com and looked up the universities ranked 1st-60th in the world overall, in CS, and in math. There are major caveats here because rankings are not that great in general and CS rankings don’t reflect the fact that some types of CS and math research are more AI safety relevant than others. But to give a coarse first glance, I plotted CS ranks against the overall and math ranks. Note that for anything ranked outside the top 60, I just set the value to 61, so both plots have walls at x=61 and y=61.
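To make the clamping step concrete, here is a minimal Python sketch of how one might redo this comparison. It is not the code behind the plots. The names `clamp_rank` and `classify`, the use of `None` for "not in the top 60", and the `threshold` of 15 places are all assumptions for illustration, and the three rows are made-up placeholders rather than real rankings.

```python
CAP = 61  # ranks outside the top 60 are all plotted at 61, as in the post

def clamp_rank(rank, cap=CAP):
    """Return rank unchanged if it is in the top 60, else the cap.

    `None` stands for "not in the list I looked at" (an assumption of this sketch).
    """
    if rank is None or rank >= cap:
        return cap
    return rank

def classify(overall, cs, math, threshold=15):
    """Label a university by comparing its clamped CS rank to its other ranks.

    `threshold` is a hypothetical cutoff; the post eyeballs the plots instead.
    """
    cs, overall, math = clamp_rank(cs), clamp_rank(overall), clamp_rank(math)
    # Larger rank numbers are worse, so a positive gap means CS lags behind.
    gaps = (cs - overall, cs - math)
    if all(g >= threshold for g in gaps):
        return "underperforms in CS"
    if all(g <= -threshold for g in gaps):
        return "overachieves in CS"
    return "roughly in line"

# Placeholder rows with made-up ranks, NOT real rankings: (name, overall, cs, math)
rows = [
    ("University A", 10, None, 12),
    ("University B", 55, 8, 70),
    ("University C", 20, 22, 18),
]

labels = {name: classify(overall, cs, math) for name, overall, cs, math in rows}
for name, label in labels.items():
    print(f"{name}: {label}")
```

One consequence of the wall at 61 is that gaps are understated for anything sitting on it: a university ranked 61st and one ranked 300th look identical, so this kind of comparison is only a coarse first pass.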

Some universities seem to underperform in CS and might be good to look for professorships at! These include:

- University of Chicago

- Caltech

- UPenn

- Yale

- Cambridge

- Michigan Ann Arbor

- Imperial College London

- University of Tokyo

- Université PSL

- Duke

- Kyoto University

- Johns Hopkins University

And on the other side of the coin, some universities clearly overachieve in CS:

- Carnegie Mellon

- UC Berkeley

- National University of Singapore

- University of Toronto

- Cornell

- University of Washington

- University of Illinois Urbana-Champaign

- EPFL

- Nanyang Technological University, Singapore

- UC San Diego

- University of Waterloo

- University of Amsterdam

- Technical University of Munich

- Korea Advanced Institute of Science & Technology

Obviously there are tons more factors to consider when thinking about where to search for a professorship, but I hope this is at least useful food for thought.

What do you think?

How might you improve on this analysis?

I'll just comment on my experience as an undergrad at Yale in case it's useful.

At Yale, the CS department, particularly when it comes to state-of-the-art ML, is not very strong. There are a few professors who do good work, but Yale is much stronger in social robotics, and there is also some ML theory. There are a couple of AI ethics people at Yale, and there will soon be a "digital ethics" person, but there aren't any AI safety people.

That said, there is a lot of latent support for AI safety at Yale. One of the global affairs professors involved in the Schmidt Program for Artificial Intelligence, Emerging Technology, and National Power is quite interested in AI safety. He invited Brian Christian and Stuart Russell to speak and guest teach his classes, for example. The semi-famous philosopher L.A. Paul is interested in AI safety, and one of the theory ML professors had a debate about AI safety in one of his classes. One of the professors most involved in hiring new professors specifically wants to hire AI safety people (though I'm not sure he really knows what AI safety is).

I wouldn't really recommend Yale to people who are interested in doing very standard ML research and want an army of highly competent ML researchers to help them. But for people whose work interacts with sociotechnical considerations like policy, or is more philosophical in nature, I think Yale would be a fantastic place to be, and in fact possibly one of the best places one could be.

I think this is all true, but since Yale CS is ranked poorly, the graduate students are not very strong for the most part. You certainly have less competition for them if you are a professor, but my impression is that few top graduate students want to go to Yale. In fact, my general impression is that the undergraduates are often stronger researchers than the graduate students (and then they go on to PhDs at higher-ranked places than Yale).

Yale is working on strengthening its CS department and it certainly has a lot of money to do that. But there are a lot of re...