We still need more funding to run another edition. Our fundraiser has raised $6k so far and will end on February 1st if it has not reached the $15k minimum by then. We need proactive donors.

If we don't get funded this time, there is a good chance we will move on to different work in AI Safety and take on new commitments. This would make it much harder to reassemble the team to run future AISCs, even if the funding situation improves.

You can take a look at the track record section and see if it's worth it:

- ≥ $1.4 million granted to projects started at AI Safety Camp

- ≥ 43 jobs in AI Safety taken by alumni

- ≥ 10 organisations started by alumni

Edit to add: Linda just wrote a new post about AISC's theory of change.

You can donate through our Manifund page

You can also read more about our plans there.

If you prefer to donate anonymously, this is possible on Manifund.

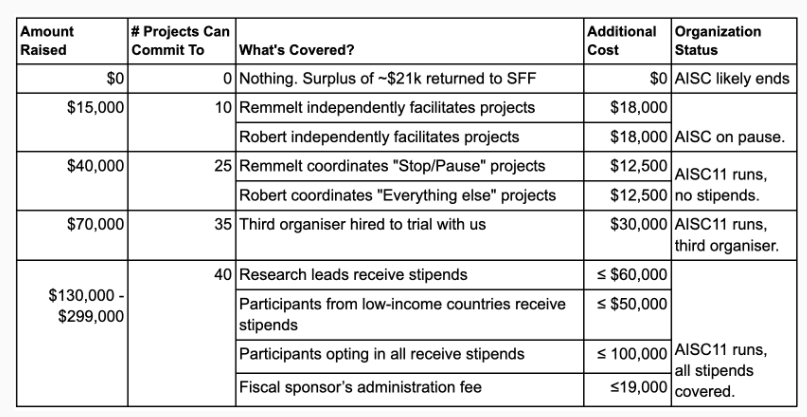

Suggested budget for the next AISC

If you're a large donor (>$15k), we're open to letting you choose what to fund.

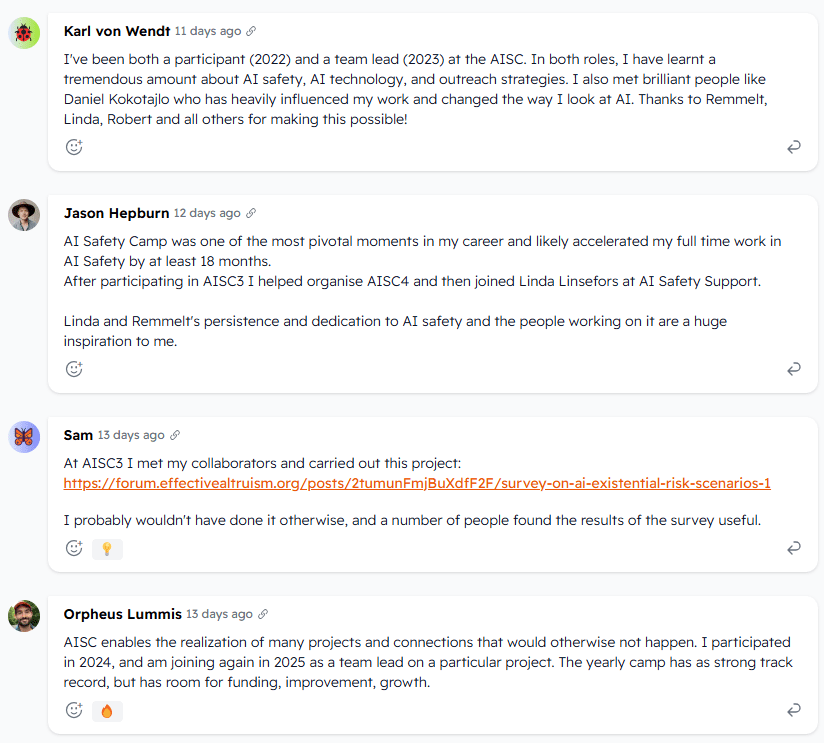

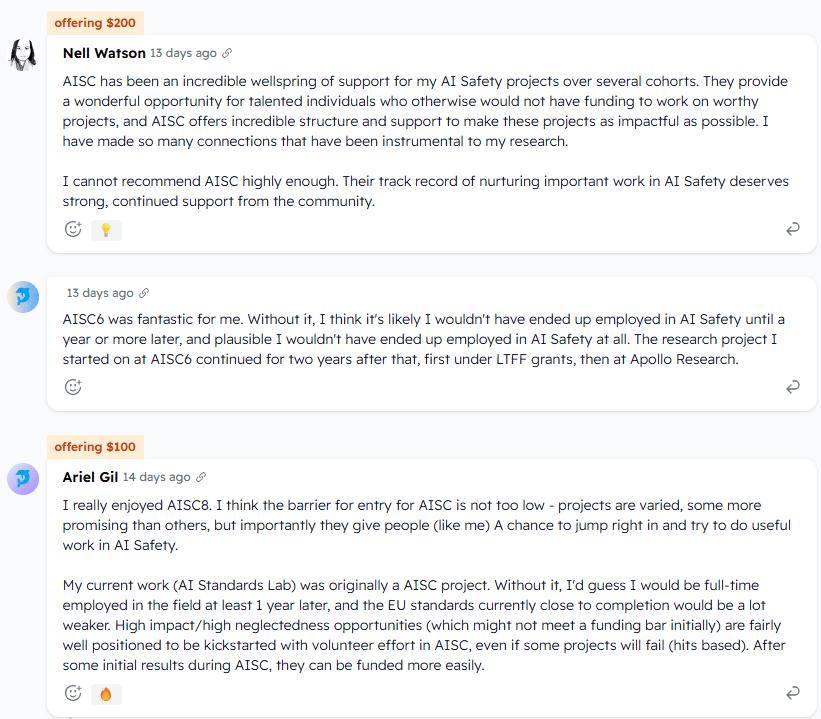

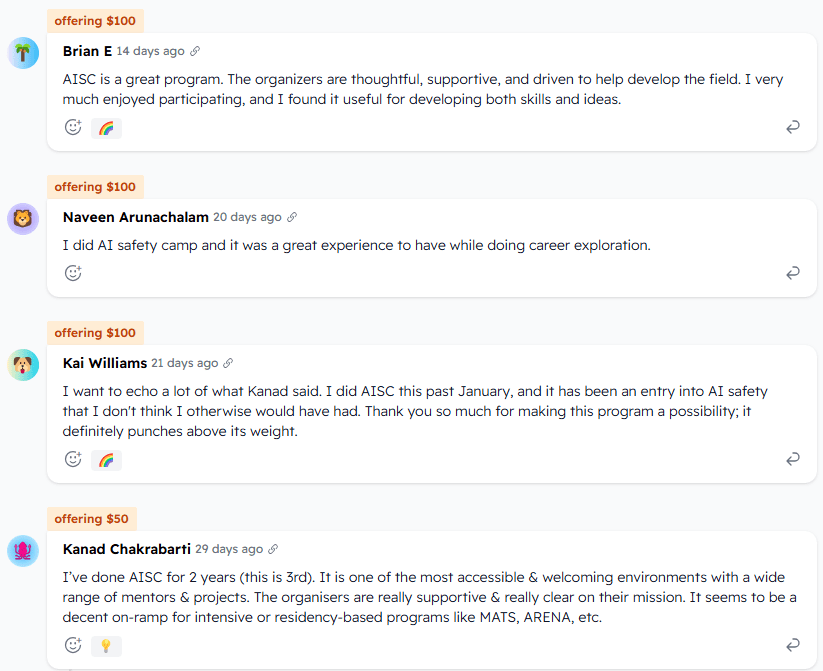

Testimonials (screenshots from Manifund page)

I kinda appreciate you being honest here.

Your response is also emblematic of what I find concerning here, which is that you are not offering a clear argument of why something does not make sense to you before writing ‘crank’.

Writing that you do not find something convincing is not an argument – it’s a statement of conviction, which could just as well reflect a poor understanding of an argument or not taking the time to question one’s own premises. Because it’s not transparent about one’s thinking, but still comes across as if there must be legit thinking underneath, it can be used as a deflection tactic (I don’t think you are doing that, but others who did not engage much ended the discussion on that note). Frankly, I can’t convince someone if they’re not open to the possibility of being convinced.

I explained above why your opinion was flawed – the opinion being that ASI would be so powerful that it could cancel all of evolution across its constituent components (or at least anything that through some pathway could build up to lethality).

I similarly found Quintin’s counter-arguments (e.g. hinging on modelling AGI as trackable internal agents) to be premised on assumptions that, considered comprehensively, looked very shaky.

I can relate to why discussing this feels draining for you. But it does not justify you writing ‘crank’ when you have not had the time to examine the actual argumentation (note: you introduced the word ‘crank’ in this thread; Oliver wrote something else).

Overall, this is bad for community epistemics. It’s better if you can write what you thought was unsound about my thinking, and I can write what I found unsound about yours. Barring that exchange, some humility that you might be missing stuff is well-placed.

Besides this point, the respect is mutual.