We still need more funding to be able to run another edition. Our fundraiser has raised $6k so far, and will end on February 1st if it doesn't reach the $15k minimum. We need proactive donors.

If we don't get funded this time, there is a good chance we will move on to different work in AI Safety and take on new commitments. This would make it much harder to reassemble the team to run future AISCs, even if the funding situation improves.

You can take a look at the track record section and see if it's worth it:

- ≥ $1.4 million granted to projects started at AI Safety Camp

- ≥ 43 jobs in AI Safety taken by alumni

- ≥ 10 organisations started by alumni

Edit to add: Linda just wrote a new post about AISC's theory of change.

You can donate through our Manifund page.

You can also read more about our plans there.

If you prefer to donate anonymously, this is possible on Manifund.

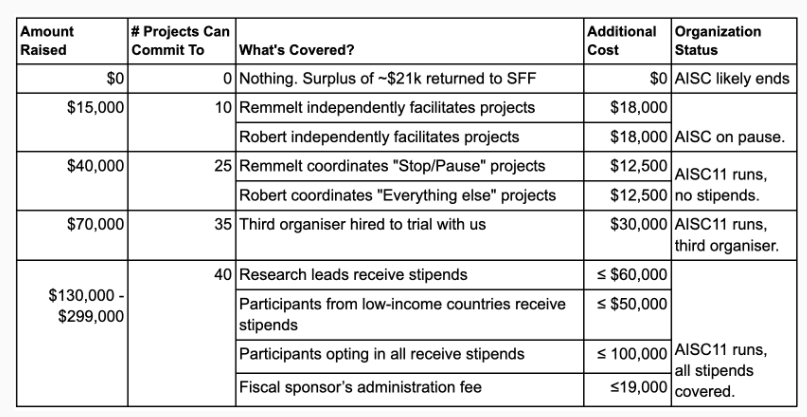

Suggested budget for the next AISC

If you're a large donor (>$15k), we're open to letting you choose what to fund.

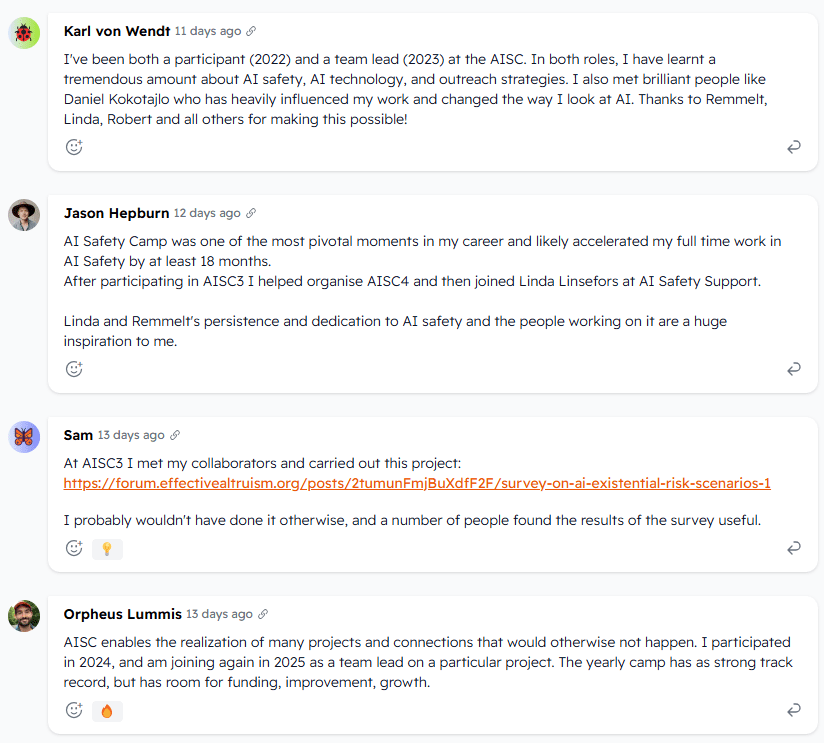

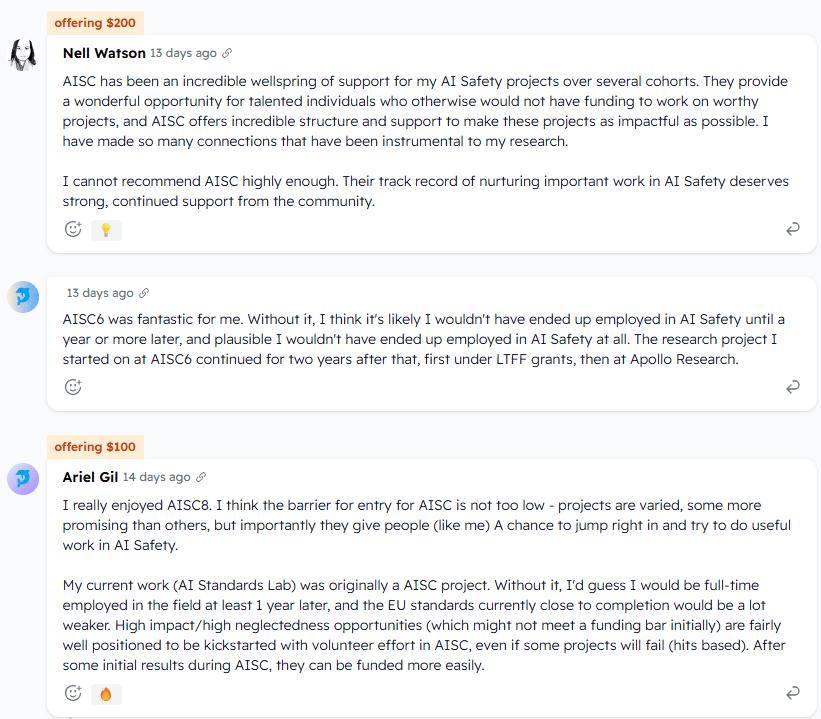

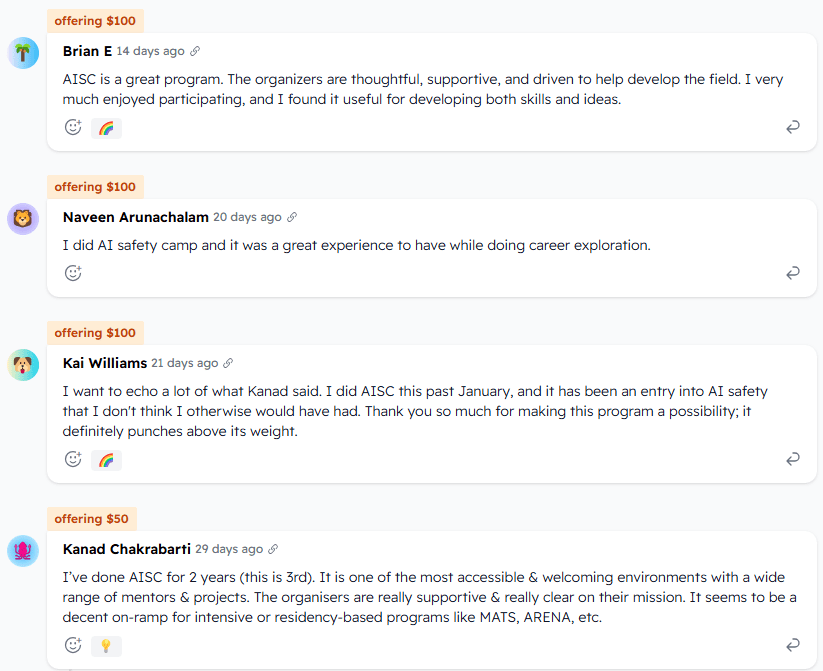

Testimonials (screenshots from Manifund page)

Lucius, the text exchanges I remember us having during AISC6 were about the question of whether 'ASI' could comprehensively control for the evolutionary pressures it would be subjected to. You and I were commenting on a GDoc with Forrest. I was taking your counterarguments against his arguments seriously, and continued investigating them after you had bowed out.

You held the notion that ASI would be so powerful that it could control for any of its downstream effects that evolution could select for. This is a common opinion held in the community. But I've looked into this opinion and people's justifications for it enough to consider it an unsound opinion.[1]

I respect you as a thinker, and generally think you're a nice person. It's disappointing that you wrote me off as a crank in one sentence. I expect more care, including that you also question your own assumptions.

A shortcut way of thinking about this:

The more you increase 'intelligence' (as a capacity for transforming patterns in data), the more you have to increase the number of underlying information-processing components. But the degrees of freedom those components have in their interactions with each other and with their larger surroundings grow faster than the number of components does.

This results in a strict inequality between:

The hashiness model is a toy model for demonstrating this inequality (incl. how the mismatch between 1. and 2. grows over time). Anders Sandberg and two mathematicians are working on formalising that model at AISC.

There's more that can be discussed in terms of why and how this fully autonomous machinery is subjected to evolutionary pressures. But that's a longer discussion, and often the researchers I talked with lacked the bandwidth.