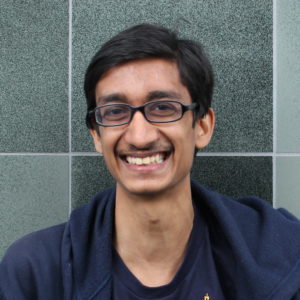

Along with several other AI Impacts researchers, I recently talked to Rohin Shah about why he is relatively optimistic about AI systems being developed safely. Rohin Shah is a 5th-year PhD student at the Center for Human-Compatible AI (CHAI) at Berkeley, and a prominent member of the Effective Altruism community.

Rohin reported an unusually large (90%) chance that AI systems will be safe without additional intervention. His optimism was largely based on his belief that AI development will be relatively gradual and that AI researchers will correct safety issues as they come up.

He reported two other beliefs that I found unusual. First, he thinks that as AI systems get more powerful, they will actually become more interpretable, because they will use features that humans also tend to use. Second, he said that intuitions from AI/ML make him skeptical of claims that evolution baked a lot into the human brain, and he thinks there's a ~50% chance that we will get AGI within two decades via a broad training process that mimics the way human babies learn.

A full transcript of our conversation, lightly edited for concision and clarity, can be found here.

By Asya Bergal

I enjoyed this comment, thanks for thinking it through! Some comments:

This is not my belief. I think that powerful AI systems, even if they are a bunch of well-developed heuristics, will be able to do super-long-term planning (in the same way that I'm capable of it even though I'm a bunch of heuristics, or, to take your example, the way Eliezer is).

Obviously this depends on how good the heuristics are, but I do think that heuristics will get to the point where they do super-long-term planning, and my belief that we'll be safe by default doesn't depend on assuming that AI won't do long-term planning.

Yup, that's correct.

Should "I don't think" be "I do think"? Otherwise I'm confused. With that correction, I basically agree.

I would be very surprised if this worked in the near term. Like, <1% in 5 years, <5% in 20 years, and really I want to say <1% that this is the first way we get AGI (no matter when), but I can't actually be that confident.

My impression is that many researchers at MIRI would qualitatively agree with me on this, though probably with less confidence.