First, to dispense with what should be obvious: if a superintelligent agent wants to destroy humans, we are completely and utterly hooped. All the arguing about "but how would it...?" indicates a lack of imagination.

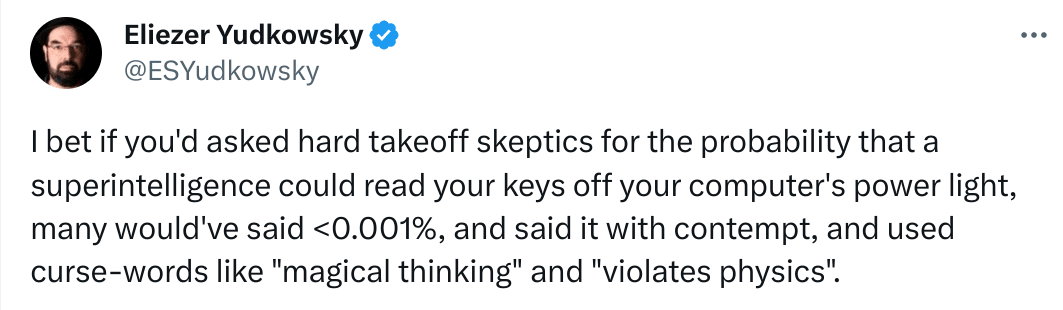

...Of course a superintelligence could read your keys off your computer's power light, if it found it worthwhile. Most of the time it would not need to; it would find easier ways to do whatever humans do by pressing keys. Or make the human press the keys. Our brains and minds are full of unpatchable and largely invisible (to us) security holes. Scott Shapiro calls it upcode:

Hacking, Shapiro explains, is not only a matter of technical computation, or “downcode.” He uncovers how “upcode,” the norms that guide human behavior, and “metacode,” the philosophical principles that govern computation, determine what form hacking takes.

Humans successfully hack each other all the time. If something way smarter than us wants us gone, we would be gone. We cannot block it or stop it. Not even slow it down meaningfully.

Now, with that part out of the way...

My actual beef is with the certainty of the argument "there are many disjunctive paths to extinction, and very few, if any, conjunctive paths to survival" (and we have no do-overs).

The analogy here is sort of like walking through an increasingly dense and sophisticated minefield without a map, only you may not notice when you trigger a mine, and by the time it blows you to pieces some time later, it is too late to stop it.

I think this kind of view also indicates a lack of imagination, only at the opposite extreme. A convoluted conjunctive path to survival is certainly a possibility, but it is by no means the default or the only way to self-consistently imagine reality without reaching into wishful thinking.

Now, there are several potential futures that are not like that (none original to me; all have been mentioned before), and they tend to get doom-piled by the AI extinction cautionistas:

...Recursive self-improvement is an open research problem, is apparently needed for a superintelligence to emerge, and maybe the problem is really hard.

...Pushing ML toward and especially past the top 0.1% of human intelligence level (IQ of 160 or something?) may require some secret sauce that we have not discovered, or that we do not even know needs to be discovered. Without it, we would be stuck with ML emulating humans, but not really discovering new math, physics, chemistry, CS algorithms or whatever.

...An example of this might be a missing enabling technology, like internal combustion for heavier-than-air flight (steam engines were not efficient enough, though very close). Or like needing algebraic number theory to prove Fermat's Last Theorem. Or similar advances in other areas.

...Worse, it could require multiple independent advances in seemingly unrelated fields.

...Agency and goal-seeking beyond emulating what humans mean by it informally might be hard, or might not be a thing at all, but just a limited-applicability emergent concept, sort of like the Newtonian concept of force (as in F=ma).

...Improving AI beyond human level requires "uplifting" humans along the way, through brain augmentation or some other means.

...AI that is smart enough to discover new physics may also discover separate and efficient physical resources for what it needs, instead of grabby-alien-style lightconing it through the Universe.

...We may be fundamentally misunderstanding what "intelligence" means, if anything at all. It might be the modern equivalent of the phlogiston.

...And various other possibilities that one can imagine, or at least imagine might exist. The list is by no means exhaustive or even remotely close to it.

In the possible worlds mentioned above, and in those not mentioned, AI-borne human extinction either does not happen, is not a clear and present danger, or is not even a meaningful concept. Insisting that the one possible world of a narrow conjunctive path to survival has a big chunk of probability among all possible worlds seems rather overconfident to me.
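To make the arithmetic behind this explicit, here is a minimal sketch in Python. The function names, the world weights, and the per-world risks are all made up for illustration; none of them are estimates from this post or from anyone else. It also assumes the steps of a conjunctive path are independent, which is itself a simplification.

```python
# Illustrative only: every number below is a placeholder, not an estimate.

def conjunctive_survival(step_probs):
    """Chance of survival when every step must succeed (steps assumed independent)."""
    p = 1.0
    for s in step_probs:
        p *= s
    return p


def mixture_risk(worlds):
    """Law of total probability: sum of P(world) * P(extinction | world)."""
    total_weight = sum(weight for weight, _ in worlds)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("world weights must sum to 1")
    return sum(weight * risk for weight, risk in worlds)


# A "minefield" world: ten steps, each a coin flip, all of which must go right.
narrow_path = conjunctive_survival([0.5] * 10)

# Hypothetical weights over possible worlds (assumed, not argued for).
worlds = [
    (0.4, 1.0 - narrow_path),  # the narrow-conjunctive-path world
    (0.6, 0.05),               # all the other worlds lumped together
]
overall = mixture_risk(worlds)
print(f"survival inside the minefield world: {narrow_path:.4f}")
print(f"overall extinction risk across worlds: {overall:.4f}")
```

With these placeholder numbers the minefield world on its own is near-certain doom, yet the overall figure is set mostly by how much weight that world gets, which is exactly the quantity in dispute.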

"Current architecture" is a narrower category than "human-designed architecture." You might have said in 2012 "current architectures can't beat Go" but that wouldn't have meant we needed RSI to beat Go[1]; we just need to design something better than what we had then.

I think it is likely that a human-designed architecture could be an extinction-level AI. I think it is not obvious whether the first extinction-level AI will be human-designed or AI-designed, as that is determined by both technological and political uncertainties.

I think it is plausible that if you did massive scaling on current architecture, you could get an extinction-level AI, but it is pretty unlikely that this will be the first such AI, or even that it will be seriously attempted. [Like, if we had a century of hardware progress and no software progress, could GPT-2123 be extinction-level? I'm not gonna rule it out, but I am going to consider "a century of hardware progress with no software progress" extremely unlikely.]

Does expert iteration count as recursive self-improvement? IMO "not really but it's close." Obviously it doesn't let you overcome any architecture-imposed limitations, but it lets you iteratively improve based on your current capability level. And if you view some of our current training regimes as already RSI-like, then this conversation changes.