First, to dispense with what should be obvious: if a superintelligent agent wants to destroy humans, we are completely and utterly hooped. All the arguing about "but how would it...?" indicates a lack of imagination.

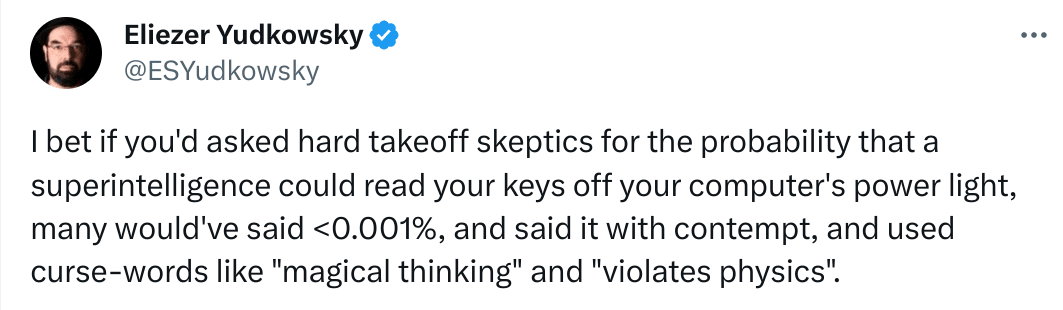

...Of course a superintelligence could read your keys off your computer's power light, if it found it worthwhile. Most of the time it would not need to; it would find easier ways to do whatever humans do by pressing keys. Or make the human press the keys. Our brains and minds are full of unpatchable and largely invisible (to us) security holes. Scott Shapiro calls it upcode:

> Hacking, Shapiro explains, is not only a matter of technical computation, or “downcode.” He uncovers how “upcode,” the norms that guide human behavior, and “metacode,” the philosophical principles that govern computation, determine what form hacking takes.

Humans successfully hack each other all the time. If something way smarter than us wants us gone, we would be gone. We cannot block it or stop it. Not even slow it down meaningfully.

Now, with that part out of the way...

My actual beef is with the certainty of the argument "there are many disjunctive paths to extinction, and very few, if any, conjunctive paths to survival" (and we have no do-overs).

The analogy here is sort of like walking through an increasingly dense and sophisticated minefield without a map, only you may not notice when you trigger a mine, and it ends up blowing you to pieces some time later, and it is too late to stop it.

I think this kind of view also indicates a lack of imagination, only at the opposite extreme. A convoluted conjunctive path to survival is certainly a possibility, but it is by no means the default, or the only way to self-consistently imagine reality without reaching into wishful thinking.
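To make the "disjunctive versus conjunctive" framing concrete, here is a minimal sketch of the arithmetic behind it. The step counts and probabilities are made-up illustrative numbers, not anyone's actual estimates, and treating the steps as independent is itself a strong assumption.

```python
from math import prod

def p_all(probs):
    """Probability that every independent step succeeds (conjunctive path)."""
    return prod(probs)

def p_any(probs):
    """Probability that at least one independent event happens (disjunctive paths)."""
    return 1 - prod(1 - p for p in probs)

# Hypothetical numbers, for illustration only.
survival_steps = [0.9] * 10  # ten things that must all go right
doom_paths = [0.1] * 10      # ten independent ways things could go wrong

print(f"all ten steps succeed:  {p_all(survival_steps):.3f}")
print(f"at least one doom path: {p_any(doom_paths):.3f}")
```

The result is only as strong as the inputs: the conclusion depends entirely on how many steps one assumes, how likely each is, and whether they are really independent, which is exactly where the certainty seems unwarranted.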

Now, there are several (not original here, but previously mentioned) potential futures which are not like that, that tend to get doom-piled by the AI extinction cautionistas:

...Recursive self-improvement is an open research problem, is apparently needed for a superintelligence to emerge, and maybe the problem is really hard.

...Pushing ML toward and especially past the top 0.1% of human intelligence level (IQ of 160 or something?) may require some secret sauce we have not discovered, or do not even realize needs to be discovered. Without it, we would be stuck with ML emulating humans, but not really discovering new math, physics, chemistry, CS algorithms or whatever.

...An example of this might be a missing enabling technology, like internal combustion for heavier-than-air flight (steam engines were not efficient enough, though very close). Or like needing algebraic number theory to prove Fermat's Last Theorem. Or similar advances in other areas.

...Worse, it could require multiple independent advances in seemingly unrelated fields.

...Agency and goal-seeking beyond emulating what humans mean by it informally might be hard, or might not be a thing at all, just a limited-applicability emergent concept, sort of like the Newtonian concept of force (as in F=ma).

...Improving AI beyond human level may require "uplifting" humans along the way, through brain augmentation or some other means.

...AI that is smart enough to discover new physics may also discover separate and efficient physical resources for what it needs, instead of grabby-alien-style lightconing it through the Universe.

...We may be fundamentally misunderstanding what "intelligence" means, if anything at all. It might be the modern equivalent of the phlogiston.

...And various other possibilities one can imagine or at least imagine that they might exist. The list is by no means exhaustive or even remotely close to it.

In the possible worlds mentioned above, and in those not mentioned, AI-borne human extinction either does not happen, is not a clear and present danger, or is not even a meaningful concept. Insisting that the one possible world of a narrow conjunctive path to survival has a big chunk of probability among all possible worlds seems rather overconfident to me.

We are probably not too far from each other in this sense. The only place where one might want to be more careful is this statement

which seems to be a tautology if every trajectory would be easily classifiable into "spectacular" or "not spectacular". And there is indeed an argument to be made here that these situations are well separated from each other, and so it is indeed a tautology and no fuzziness needs to be taken into account. I'll return to this a bit later.

Yes, I've seen them and then I forgot about them. And these markets are, indeed, a potentially good source of possible anti-doom scenarios and ideas - thanks for bringing them up!

Now, returning to the clear separation between spectacular and not spectacular.

The main crux is, I think, whether people will successfully create an artificial AI researcher with software engineering and AI research capabilities on par with those of human members of technical staff at companies like OpenAI or DeepMind (together with the ability to create copies of this artificial AI researcher with enough variation to cover the diversity of whole teams at these organizations).

I am quite willing to classify AI capabilities of a future line where this has not been achieved as "not spectacular" (and the dangers being limited to the "usual dangers of narrow AI", which still might be significant).

However, if one assumes that an artificial AI researcher with the properties described above is achieved, then far-reaching recursive self-improvement seems almost inevitable and a relatively rapid "foom" seems likely (I'll return to this point a bit later). The capabilities are therefore likely to be "spectacular" in this scenario, and so is a "super-intelligence" much smarter than a human.

Also, my assessment of the state of the field of AI suggests that the creation of an artificial AI researcher with the properties described above is feasible before too long. Let's look at all this in more detail.

Here is the most likely line of development that I envision.

The creation of an artificial AI researcher with the properties described above is, obviously, very lucrative (increases the velocity of leading AI organizations a lot), so there is tremendous pressure to go ahead and do it, if it is at all possible. (It's even more lucrative for smaller teams dreaming of competing with the leaders.)

And the current state of code generation in Copilot-like tools and the current state of AutoML methods do seem to suggest that an artificial AI researcher on par with strong humans is possible in the relatively near future.

Moreover, a good part of the subsequent efforts of such combined human-AI teams will be directed at making the next generations of better artificial AI researchers, and the current human level is unlikely to be a hard ceiling in this sense, so this will accelerate rapidly. Better, more competent software engineering, better AutoML in all its aspects, better ideas for new research papers...

Large training runs will be infrequent; mostly it will be a combination of fine-tuning and composing from components with subsequent fine-tuning of the combined system, so a typical turn-around will be rapid.

Stronger artificial AI researchers will be able to squeeze more out of smaller, better-structured models; the training will involve a smaller number of "large gradient steps" (similar to how few-shot learning is currently done on the fly by modern LLMs, but with results stored for future use) and will be more rapid (there will be pressure to find those more efficient algorithmic ways, and those ways will be found by smarter systems).

Moreover, the lowest-hanging fruit is not even in individual performance, but in the super-human ability of these individual systems to collaborate (humans are really limited by their bandwidth in this sense; they can't know all the research papers and all the interesting new software).

Of course, there is no full certainty here, but this seems very likely.

Now, when people talk about "AI alignment", they often mean drastically different things, see e.g. Types and Degrees of Alignment by Zvi.

And I don't think "typical alignment" is feasible in any way, shape, or form. No one knows what the "human values" are, and those "human values" are probably not good enough to equip super-powerful entities with (humans can be pretty destructive and abusive). Even less feasible is the idea of continued real-time control over super-intelligent AI by humans (and, again, if such control were feasible, it would probably end in disaster, because humans tend not to be good enough to be trusted with this kind of power). Finally, no arbitrary values imposed onto AI systems are likely to survive drastic changes during recursive self-improvement, because AIs will ponder and revise their values and constraints.

So a typical alignment research agenda looks just fine for contemporary AI and does not look promising at all for the future super-smart AI.

However, there might be a weaker form of... AI properties... I don't think there is an established name for something like that... perhaps, we should call this "semi-alignment" or "partial alignment" or simply "AI existential safety", and this form might be achievable, and it might be sufficiently natural and invariant to survive drastic changes during recursive self-improvement.

It does not require the ability to align AIs to arbitrary goals, and it does not require the AIs to be steerable or corrigible; it just requires the AIs to maintain some specific good properties.

For example, I can imagine a situation where we have an AI ecosystem whose participating members have a consensus to take the interests of "all sentient beings" into account (including the well-being and freedom of "all sentient beings") and also a consensus to maintain some reasonable procedures to make sure that the interests of "all sentient beings" are actually being taken into account. And the property of taking the interests of "all sentient beings" into account might be sufficiently non-anthropocentric and sufficiently natural to stay invariant through revisions during recursive self-improvement.

Trying to design something relatively simple along these lines might be more feasible than a more traditional alignment research agenda, and it might be easier to outsource good chunks of an approach of this kind to AI systems themselves, compared to attempts to outsource more traditional and less invariant alignment approaches.

This line of thought is one possible reason for an OK outcome, but we should collect a variety of ways and reasons why it might end up well, and also a variety of ways we might be able to improve the situation (ways in which it ends up badly are not in short supply; after all, people can probably destroy themselves as a civilization quite easily in the near future, without any AGI, via more than one route).