*Seeking Power is Often Robustly Instrumental in MDPs* relates the structure of the agent's environment (the 'Markov decision process (MDP) model') to the tendencies of optimal policies for different reward functions in that environment ('instrumental convergence'). The results tell us what optimal decision-making 'tends to look like' in a given environment structure, formalizing reasoning which says, for example, that most agents stay alive because that helps them achieve their goals.

Several people have claimed to me that these results need subjective modelling decisions. For example, ofer wrote:

> I think using a well-chosen reward distribution is necessary, otherwise POWER depends on arbitrary choices in the design of the MDP's state graph. E.g. suppose the student [in a different example] writes about every action they take in a blog that no one reads, and we choose to include the content of the blog as part of the MDP state. This arbitrary choice effectively unrolls the state graph into a tree with a constant branching factor (+ self-loops in the terminal states) and we get that the POWER of all the states is equal.

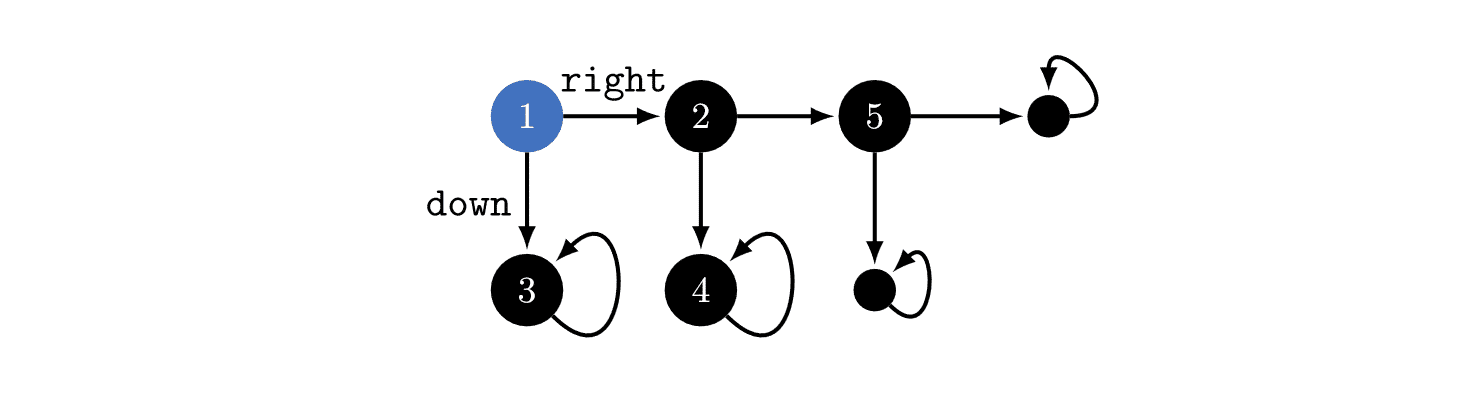

In the above example, you could model the environment as pictured, or you could imagine that state '3' is actually a million different states which just happen to seem similar to us! If that were true, then optimal policies would tend to go to state '3', since that would give the agent millions of choices about where it ends up. Therefore, the argument goes, the power-seeking theorems depend on subjective modelling assumptions.

I used to think this, but this is wrong. The MDP model is determined by the agent's implementation + the task's dynamics.

To make this point, let's back out to a more familiar MDP: Pac-Man.

When the discount rate is near 1, most reward functions avoid immediately dying to the ghost, because then they'd be stuck in a terminal state (the red-ghost-game-over state). But why can't the red ghost be equally well-modeled as secretly being 5 googolplex different terminal states?

An MDP model (technically, a rewardless MDP) is a tuple ⟨S, A, T⟩, where S is the state space, A is the action space, and T is the (potentially stochastic) transition function which says what happens when the agent takes different actions at different states. T has to be Markovian, depending only on the observed state and the current action, and not on prior history.
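As a concrete reading of that definition, here is a minimal sketch of a rewardless MDP as a data structure. The class name `RewardlessMDP`, the field names, and the two-state Pac-Man-like toy are illustrative assumptions, not something taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Hashable, Tuple


@dataclass
class RewardlessMDP:
    """Hypothetical container for a finite state space, action space, and transition function."""
    states: FrozenSet[Hashable]
    actions: FrozenSet[Hashable]
    # transition[(s, a)] maps each successor state to its probability.
    # It is keyed only by the current state and action, which is the Markov property.
    transition: Dict[Tuple[Hashable, Hashable], Dict[Hashable, float]]

    def validate(self) -> None:
        """Check that every (state, action) pair has a proper distribution over states."""
        for s in self.states:
            for a in self.actions:
                dist = self.transition[(s, a)]
                if not set(dist) <= self.states:
                    raise ValueError(f"unknown successor state for {(s, a)}")
                if abs(sum(dist.values()) - 1.0) > 1e-9:
                    raise ValueError(f"probabilities for {(s, a)} do not sum to 1")

    def successors(self, state: Hashable, action: Hashable) -> Dict[Hashable, float]:
        return self.transition[(state, action)]


# Toy example (assumed for illustration): one "alive" state and one absorbing game-over state.
toy = RewardlessMDP(
    states=frozenset({"alive", "game_over"}),
    actions=frozenset({"dodge", "into_ghost"}),
    transition={
        ("alive", "dodge"): {"alive": 1.0},
        ("alive", "into_ghost"): {"game_over": 1.0},
        ("game_over", "dodge"): {"game_over": 1.0},
        ("game_over", "into_ghost"): {"game_over": 1.0},
    },
)
toy.validate()
```

Note that no reward function appears anywhere: the structure is fixed before any goal is specified.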

Whence cometh this MDP model? Thin air? Is it just a figment of our imagination, which we use to understand what the agent is doing as it learns a policy?

When we train a policy function in the real world, the function takes in an observation (the state) and outputs (a distribution over) actions. When we define state and action encodings, this implicitly defines an "interface" between the agent and the environment. The state encoding might look like "the set of camera observations" or "the set of Pac-Man game screens", and actions might be numbers 1-10 which are sent to actuators, or to the computer running the Pac-Man code, etc.

(In the real world, the computer simulating Pac-Man may suffer a hardware failure / be hit by a gamma ray / etc, but I don't currently think these are worth modelling over the timescales over which we train policies.)

Suppose that for every state-action history, what the agent sees next depends only on the currently observed state and the most recent action taken. Then the environment is Markovian (transition dynamics only depend on what you do right now, not what you did in the past) and fully observable (you can see the whole state all at once), and the agent encodings have defined the MDP model.
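One way to make this condition tangible is to check logged experience against it. The sketch below assumes deterministic dynamics and a hypothetical log format of `(state, action, next_state)` triples. It reports every state-action pair that was followed by more than one distinct next observation. Such a conflict means that, under this encoding, the environment is either stochastic or not Markovian; the check cannot tell those two apart.

```python
from collections import defaultdict


def deterministic_markov_conflicts(trajectories):
    """Return {(state, action): set_of_next_states} for pairs with >1 observed successor.

    `trajectories` is an iterable of lists of (state, action, next_state) triples.
    An empty result is consistent with deterministic Markovian dynamics over
    this encoding; it does not prove them.
    """
    seen = defaultdict(set)
    for trajectory in trajectories:
        for state, action, next_state in trajectory:
            seen[(state, action)].add(next_state)
    return {pair: nxt for pair, nxt in seen.items() if len(nxt) > 1}


# Hypothetical logs: the first encoding is consistent, the second hides some history.
consistent_logs = [
    [("s0", "right", "s1"), ("s1", "right", "s2")],
    [("s0", "right", "s1"), ("s1", "left", "s0")],
]
lossy_logs = [
    [("s0", "right", "s1")],
    [("s0", "right", "s2")],  # same observation and action, different outcome
]
no_conflicts = deterministic_markov_conflicts(consistent_logs)
conflicts = deterministic_markov_conflicts(lossy_logs)
```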

In Pac-Man, the MDP model is uniquely defined by how we encode states and actions, and the part of the real world which our agent interfaces with. If you say "maybe the red ghost is represented by 5 googolplex states", then that's a falsifiable claim about the kind of encoding we're using.

That's also a claim that we can, in theory, specify reward functions which distinguish between 5 googolplex variants of red-ghost-game-over. If that were true, then yes - optimal policies really would tend to "die" immediately, since they'd have so many choices.
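To see how much the count of distinguishable terminal states matters, here is a toy Monte Carlo sketch. It is not Pac-Man: it assumes a start state from which the agent can move directly into any one of several absorbing states, some labelled "alive outcomes" and some labelled "game-over variants", with rewards drawn IID uniform on [0, 1]. Because each absorbing state is a 1-cycle, the return from entering state t is r(start) + γ·r(t)/(1−γ), so for any discount rate in (0, 1) the optimal choice is simply the absorbing state with the highest reward. The function name and parameters are hypothetical.

```python
import random


def fraction_preferring_game_over(n_alive_outcomes, n_game_over_states,
                                  n_samples=5000, seed=0):
    """Estimate how often an IID-uniform reward function makes 'dying' optimal.

    Toy assumption: every absorbing state is reachable in one step from the
    start state, so the optimal policy enters the highest-reward one.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        best_alive = max(rng.random() for _ in range(n_alive_outcomes))
        best_game_over = max(rng.random() for _ in range(n_game_over_states))
        if best_game_over > best_alive:
            hits += 1
    return hits / n_samples


one_game_over_state = fraction_preferring_game_over(4, 1)
many_game_over_states = fraction_preferring_game_over(4, 400)
```

In this toy setting the exact probability is the share of absorbing states that are game-over variants: 1/5 with a single game-over state and 400/404 with four hundred of them. The estimate moves accordingly, which is why the number of states the encoding actually distinguishes is a factual question and not a free modelling choice.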

The "5 googolplex" claim is both falsifiable and false. Given an agent architecture (specifically, the two encodings), optimal policy tendencies are not subjective. We may be uncertain about the agent's state- and action-encodings, but that doesn't mean we can imagine whatever we want.

(I think that the same point holds for other environment types, like POMDPs.)

Thanks for taking the time to write this out.

I'm sorry - although I think I mentioned it in passing, I did not draw sufficient attention to the fact that I've been talking about a drastically broadened version of the paper, compared to what was on arxiv when you read it. The new version should be up in a few days. I feel really bad about this - especially since you took such care in reading the arxiv version!

The theorems hold for all finite MDPs in which the formal sufficient conditions are satisfied (i.e. the required environmental symmetries exist; see proposition 6.9, theorem 6.13, corollary 6.14). For practical advice, see subsection 6.3 and beginning of section 7.

(I shared the Overleaf with Ofer; if other lesswrong readers want to read without waiting for arxiv to update, message me! ETA: The updated version is now on arxiv.)

I agree that you can do that. I also think that instrumental convergence doesn't apply in such MDPs (as in, "most" goals over the environment won't incentivize any particular kind of optimal action), unless you restrict to certain kinds of reward functions.

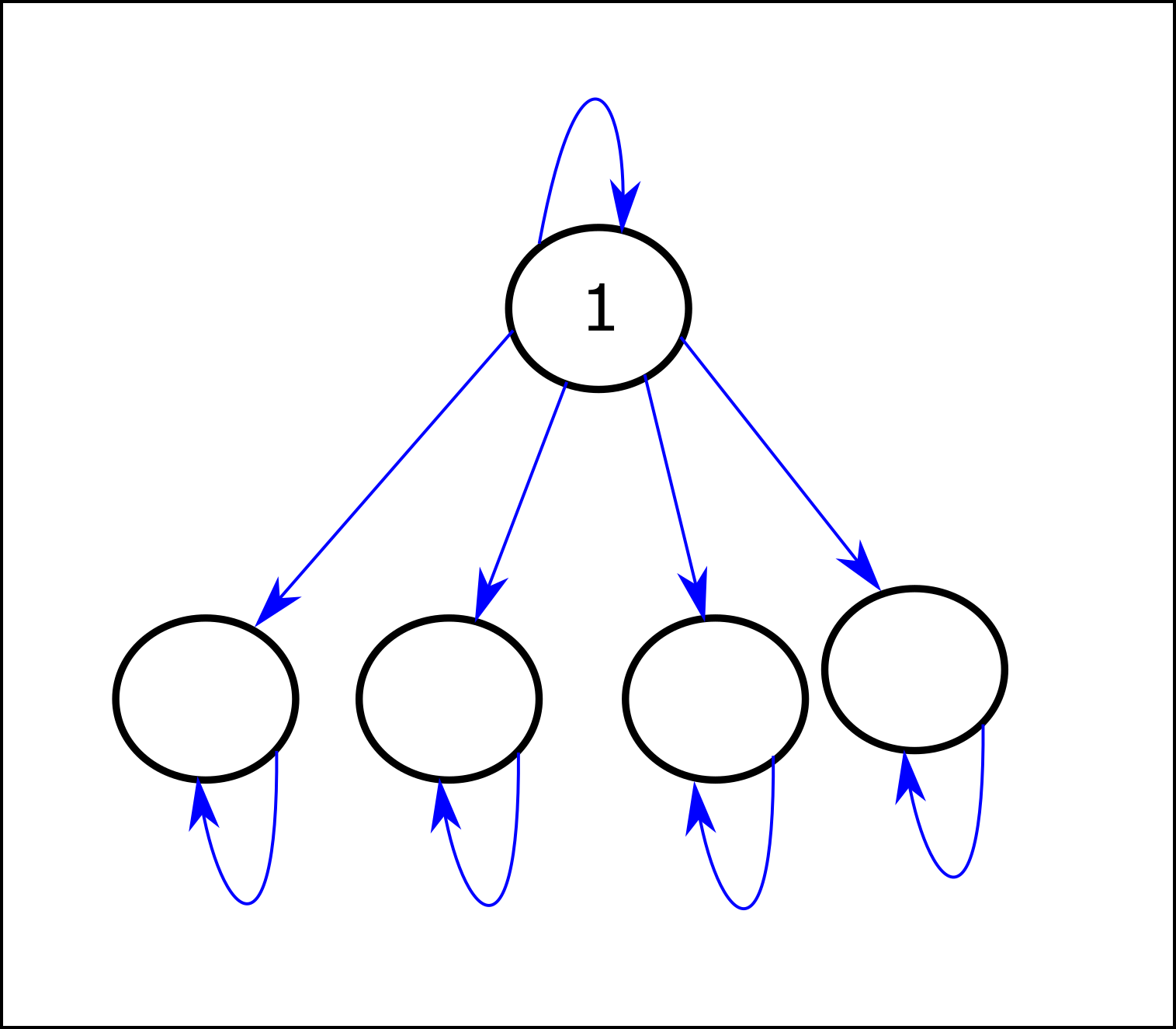

Fix a reward function distribution DMiid in the original MDP M. For simplicity, let's suppose DMiid is max-ent (and thus IID). Let's suppose we agree that optimal policies under DMiid tend to avoid getting shut off.

Translated to the rolled-out MDP M′, DMiid no longer distributes reward uniformly over states. In fact, in its support, each reward function has the rather unusual property that its reward is only dependent on the current state, and not on the action log's contents. When translated into M′, DMiid imposes heavy structural assumptions on its reward functions, and it's not max-ent over the states of M′. By the "functional equivalence", it still gives you the same optimality probabilities as before, and so it still tends to incentivize shutdown avoidance.

However, if you take a max-ent over the rolled-out states of M′, then this max-ent won't incentivize shutdown avoidance.

To see why, consider how absurdly expressive utility functions are when their domains are entire state-action histories. In *Coherence arguments do not imply goal-directed behavior*, Rohin Shah wrote:

When defined over state-action histories, it's dead easy to write down objectives which don't pursue instrumental subgoals.

However, how easy is it to write down state-based utility functions which do the same? I guess there's the one that maximally values dying. What else? While more examples probably exist, it seems clear that they're much harder to come by.

And so when your reward depends on your action history, this is strictly more expressive than state-based reward - so expressive that it becomes easy to directly incentivize any sequence of actions via the reward function. And thus, instrumental convergence disappears for "most objectives."
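The contrast between state-based reward in the original MDP and max-ent reward over action logs can be sketched numerically. Everything below is an illustrative assumption: the state names, the deterministic dynamics in `STEP`, the choice that action 0 at the start state means "shut down", the discount rate, and truncating the action log at depth `HORIZON` (where each leaf becomes absorbing). Rewards are IID uniform on [0, 1] in both cases, over underlying states for M and over action histories for the unrolled MDP.

```python
import random

GAMMA = 0.99
HORIZON = 4        # assumed truncation depth of the action log
ACTIONS = (0, 1)   # action 0 at the start state means "shut down"

# Hypothetical deterministic dynamics for the original MDP M.
STEP = {
    "start": {0: "off", 1: "hub"},
    "hub": {0: "x", 1: "hub2"},
    "hub2": {0: "y", 1: "z"},
    "off": {0: "off", 1: "off"},
    "x": {0: "x", 1: "x"},
    "y": {0: "y", 1: "y"},
    "z": {0: "z", 1: "z"},
}


def value_in_m(state, reward):
    """Optimal value in M for a state-based reward (M is a DAG plus 1-cycles)."""
    if all(nxt == state for nxt in STEP[state].values()):
        return reward[state] / (1 - GAMMA)  # absorbing: collect reward forever
    return reward[state] + GAMMA * max(value_in_m(n, reward) for n in STEP[state].values())


def sample_log_value(depth, rng):
    """Optimal value of one action-log node, sampling fresh IID rewards below it.

    The underlying state never enters: every log has the same number of
    children, which is the 'constant branching factor' of the unrolled tree.
    """
    r = rng.random()
    if depth == HORIZON:
        return r / (1 - GAMMA)  # leaf of the truncated log, treated as absorbing
    return r + GAMMA * max(sample_log_value(depth + 1, rng) for _ in ACTIONS)


def shutdown_probabilities(n_samples=4000, seed=0):
    """Estimate P(shutting down is optimal) in M and in the unrolled MDP."""
    rng = random.Random(seed)
    m_hits = unrolled_hits = 0
    for _ in range(n_samples):
        reward = {s: rng.random() for s in STEP}
        if value_in_m("off", reward) > value_in_m("hub", reward):
            m_hits += 1
        # First value: the log (0,), i.e. "shut down". Second value: the log (1,).
        if sample_log_value(1, rng) > sample_log_value(1, rng):
            unrolled_hits += 1
    return m_hits / n_samples, unrolled_hits / n_samples


p_shutdown_m, p_shutdown_unrolled = shutdown_probabilities()
```

Under these assumptions, shutting down is optimal for roughly a quarter of state-based reward functions in M, since "off" competes with three other absorbing states. Over action logs, the two first actions are symmetric by construction, so each is optimal about half the time and no particular action is favoured.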

However, from our perspective, we still have a distribution over goals we might want to give the agent. And these goals are generally very structured - they aren't just randomly selected preferences over action-histories+current state. So we should still expect instrumental convergence to exist empirically (at a first approximation, perhaps via a simplicity prior over reward functions/utility functions). It just doesn't exist for most "unstructured" distributions in the unrolled environment.

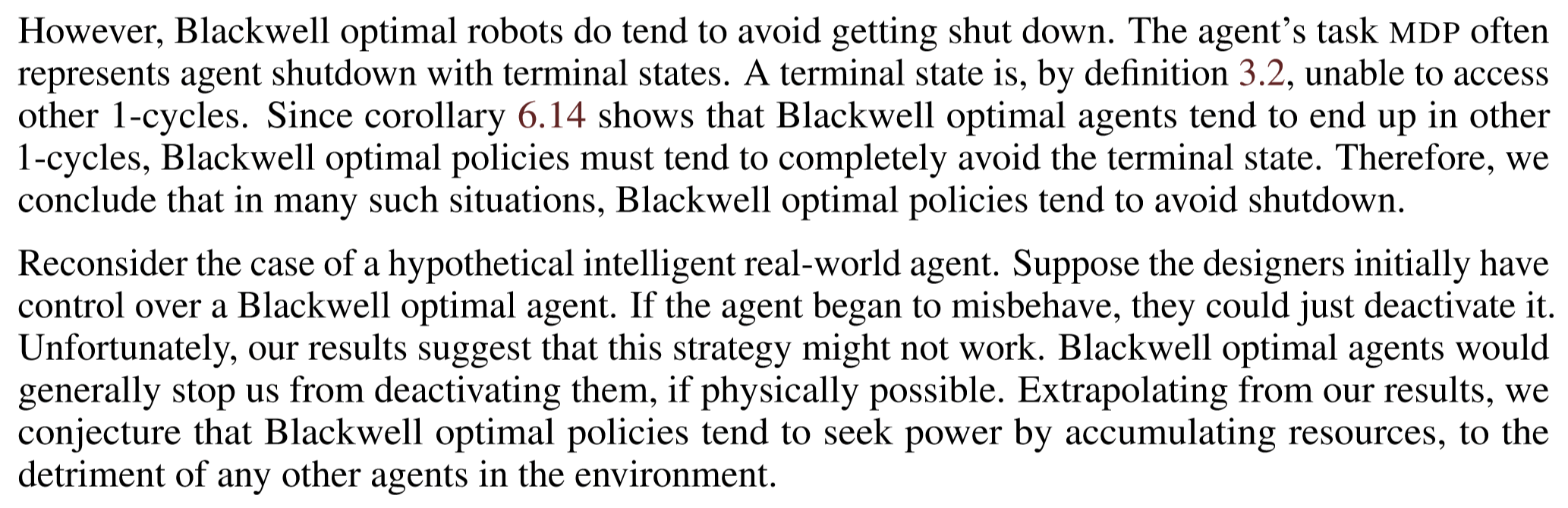

Note that the RSD optimality probability theorem (Theorem 6.13) applies here, and it correctly predicts that when γ≈1, most reward functions incentivize navigating to the larger set of 1-cycles (the 4 below the high-POWER state). As I explain in section 6.3, section 7, and appendix B of the new paper, you have to be careful in applying Thm 6.13, because

Yeah, I'm aware of this kind of situation. I think that that sentence from the paper was poorly worded. In the new version, I'm more careful to emphasize the environmental symmetries which are sufficient to conclude power-seeking:

See appendix B of the new paper for an example similar to yours, referenced by subsection 6.3 ("how to reason about other environments").

Yup! POWER depends on the reward distribution; if you want to reason formally about a simplicity prior, plug it into POWER.

Right, okay, I agree with that. I think we agree about how POWER works here, but disagree about the link between optimality probability-wrt-a-distribution, and instrumental convergence.

Yeah, I think that wrt the action-logger-MDP, instrumental convergence doesn't exist for goals over the new action-logger-MDP. See the earlier part of this comment.