Arbital was envisioned as a successor to Wikipedia. The project was discontinued in 2017, but not before many new features had been built and a substantial amount of writing about AI alignment and mathematics had been published on the website.

If you've tried using Arbital.com the last few years, you might have noticed that it was on its last legs - no ability to register new accounts or log in to existing ones, slow load times (when it loaded at all), etc. Rather than try to keep it afloat, the LessWrong team worked with MIRI to migrate the public Arbital content to LessWrong, as well as a decent chunk of its features. Part of this effort involved a substantial revamp of our wiki/tag pages, as well as the Concepts page. After sign-off[1] from Eliezer, we'll also redirect arbital.com links to the corresponding pages on LessWrong.

As always, you are welcome to contribute edits, especially to stubs, redlinks, or otherwise incomplete pages, though note that we'll have a substantially higher bar for edits to high-quality imported Arbital pages, especially those written in a "single author" voice.

New content

While Arbital had many contributors, Eliezer was one of the most prolific, and wrote something like a quarter million words across many pages, mostly on alignment-relevant subjects.

If you just want to jump into reading, we've curated a selection of what we consider to be the best of that writing.

If you really hate clicking links, I've copied over the "Tier 1" recommendations below.

Recommendations

| # | Page | Summary |
|---|---|---|
| 1. | AI safety mindset | What kind of mindset is required to successfully build an extremely advanced and powerful AGI that is "nice"? |
| 2. | Convergent instrumental strategies and Instrumental pressure | Certain sub-goals like "gather all the resources" and "don't let yourself be turned off" are useful for a very broad range of goals and values. |
| 3. | Context disaster | Current terminology would call this "misgeneralization". Do alignment properties that hold in one context (e.g. training, while less smart) generalize to another context (deployment, much smarter)? |
| 4. | Orthogonality Thesis | The Orthogonality Thesis asserts that there can exist arbitrarily intelligent agents pursuing any kind of goal. |
| 5. | Hard problem of corrigibility | It's a hard problem to build an agent which, in an intuitive sense, reasons internally as if from the developer's external perspective: that it is incomplete, that it requires external correction, etc. This is not default behavior for an agent. |
| 6. | Coherent Extrapolated Volition | If you're extremely confident in your ability to align an extremely advanced AGI on complicated targets, this is what you should have your AGI pursue. |
| 7. | Epistemic and instrumental efficiency | "Smarter than you" is vague. "Never ever makes a mistake that you could predict" is more specific. |
| 8. | Corporations vs. superintelligences | Is a corporation a superintelligence? (An example of epistemic/instrumental efficiency in practice.) |
| 9. | Rescuing the utility function | "Love" and "fun" aren't ontologically basic components of reality. When we figure out what they're made of, we should probably go on valuing them anyway. |
| 10. | Nearest unblocked strategy | If you tell a smart consequentialist mind "no murder" but it is actually trying, it will just find the next best thing that you didn't think to disallow. |
| 11. | Mindcrime | The creation of artificial minds opens up the possibility of artificial moral patients who can suffer. |
| 12. | General intelligence | Why is AGI a big deal? Well, because general intelligence is a big deal. |
| 13. | Advanced agent properties | The properties that make an agent (1) require alignment and (2) relevant in the big picture. |
| 14. | Mild optimization | "Mild optimization" is where, if you ask your advanced AGI to paint one car pink, it just paints one car pink and then stops, rather than tiling the galaxies with pink-painted cars, because it's not optimizing that hard. It's okay with just painting one car pink; it isn't driven to max out the twentieth decimal place of its car-painting score. |
| 15. | Corrigibility | The property such that if you tell your AGI that you installed the wrong values in it, it lets you do something about that. An unnatural property to build into an agent. |
| 16. | Pivotal Act | An act which would make a large positive difference to things a billion years in the future, e.g. an upset of the gameboard that's a decisive "win". |
| 17. | Bayes Rule Guide | An interactive guide to Bayes' theorem, i.e., the law of probability governing the strength of evidence: the rule saying how much to revise our probabilities (change our minds) when we learn a new fact or observe new evidence. |
| 18. | Bayesian View of Scientific Virtues | A number of scientific virtues are explained intuitively by Bayes' rule. |
| 19. | A quick econ FAQ for AI/ML folks concerned about technological unemployment | An FAQ aimed at a very rapid introduction to key standard economic concepts for professionals in AI/ML who have become concerned with the potential economic impacts of their work. |

New (and updated) features

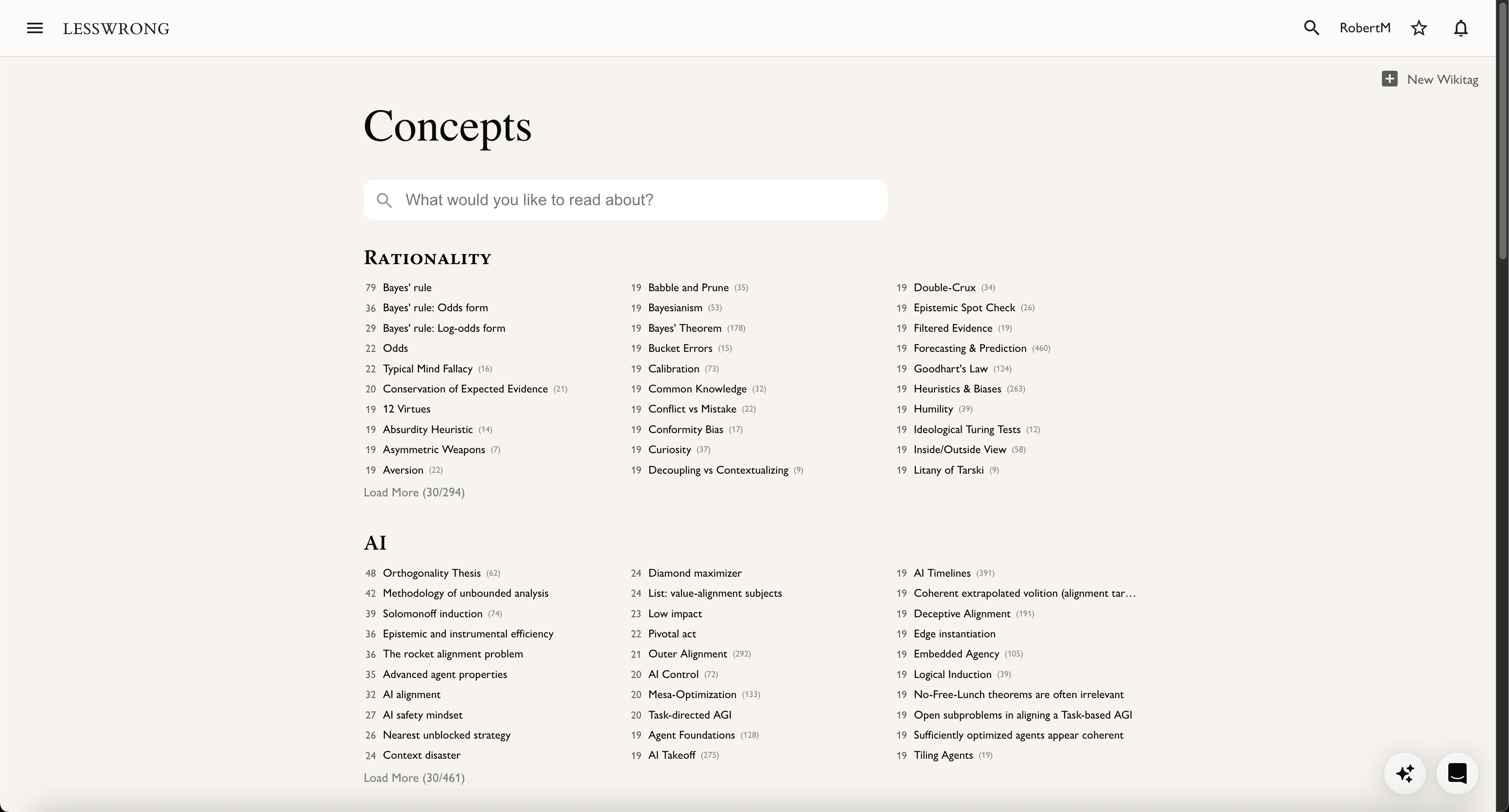

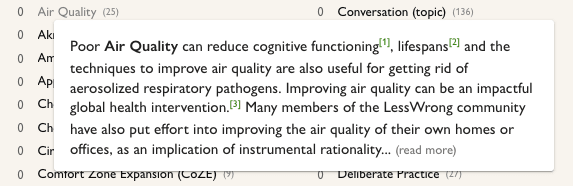

The new concepts page

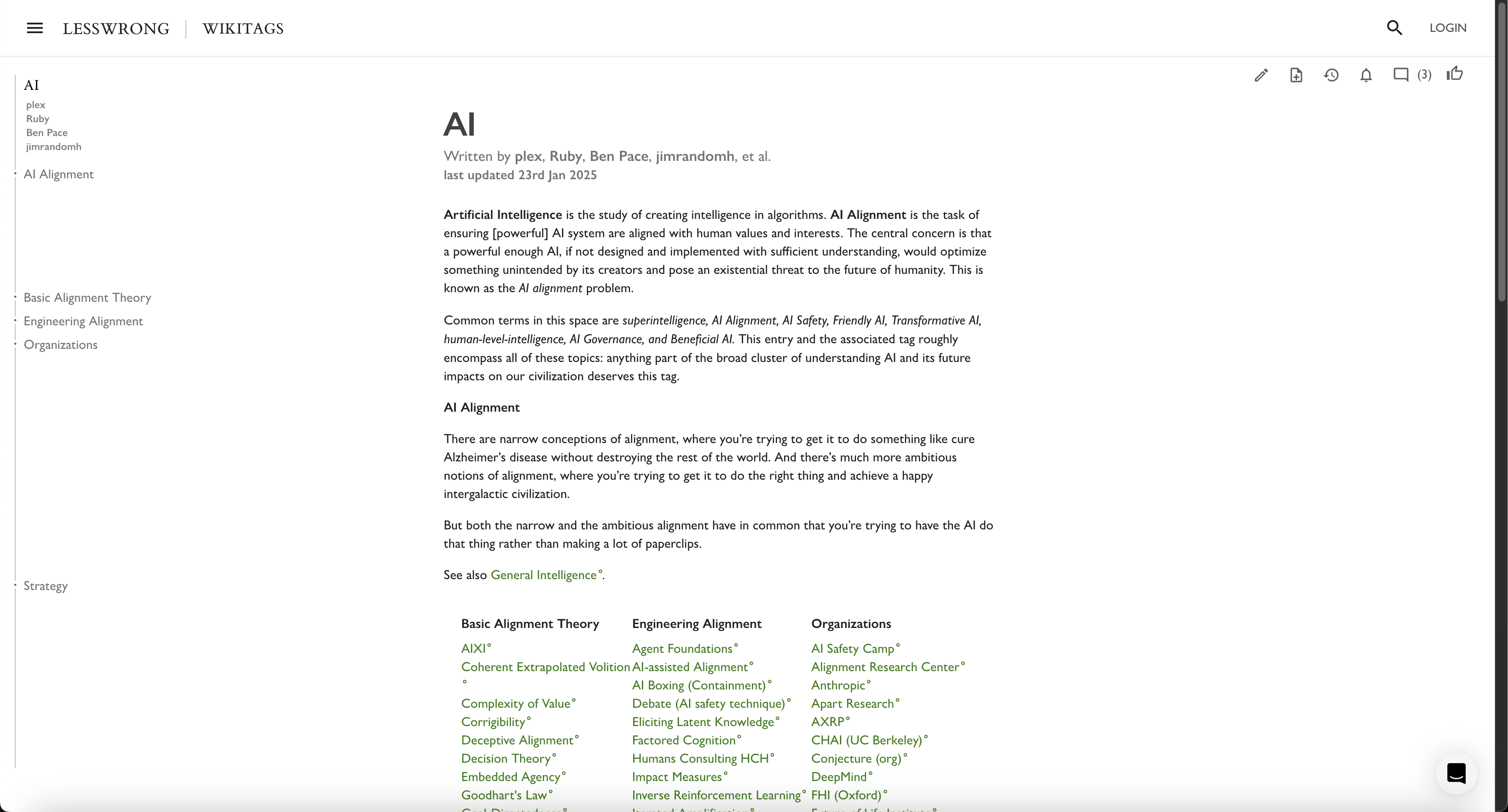

The new wiki/tag page design

We rolled this change out a few weeks ago, in advance of the Arbital migration, to shake out any bugs (and to see how many people complained). It's broadly similar to the new post page design, though on wiki pages the table of contents is always visible, rather than only appearing on hover.

Non-tag wiki pages

For a while now, LessWrong has hosted wiki pages that couldn't be used as "tags" (but otherwise used the same architecture and UI). These were primarily imports from the old LessWrong wiki; users couldn't create "wiki-only" pages themselves. Since most Arbital pages make more sense as wiki pages than as tags, we decided to let users create them as well. See here for an explanation of the distinction.

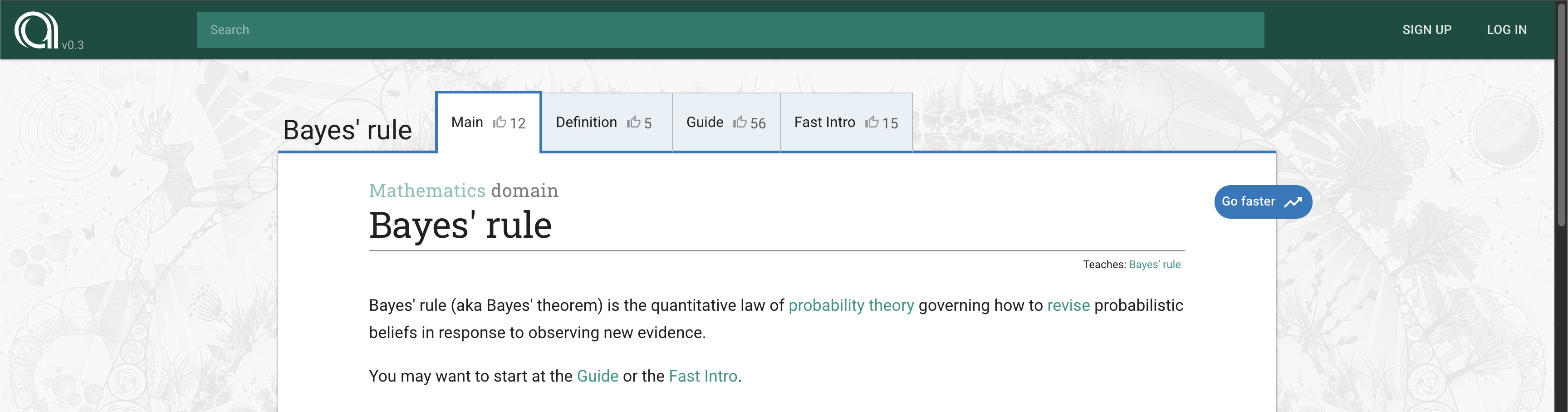

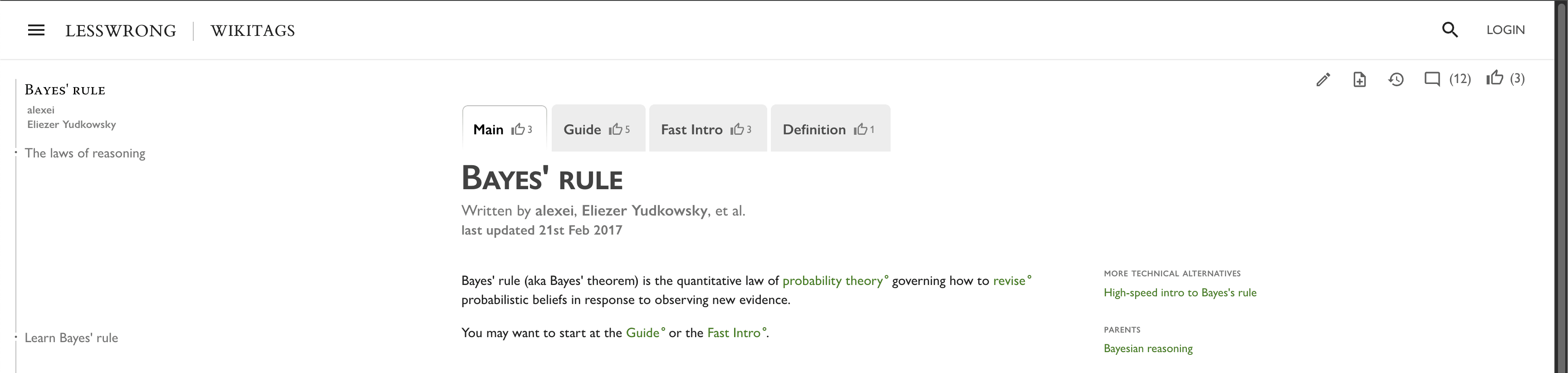

Lenses

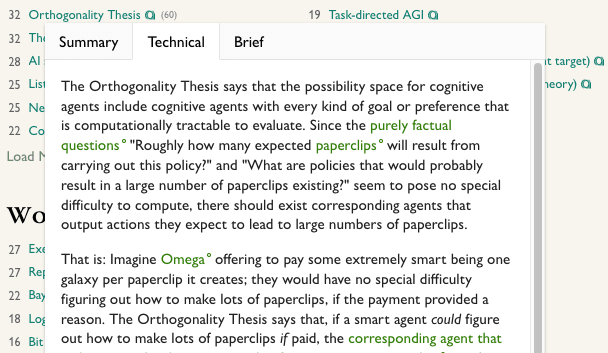

Lenses are... tabs. Opinionated tabs! As the name implies, they're meant to be different "lenses" on the same subject. You can click between them, and even link to them directly, if you want to send someone a specific lens.

"Voting"

In the past, you could vote on individual revisions of wiki pages. The intent was to create a feedback loop that rewarded high-quality contributions. This didn't quite work out, but even if it had, it wouldn't have solved the problem of readers lacking any way to judge the quality of various wiki pages. We've implemented something similar to Arbital's "like" system, so you can now "like" wiki pages and lenses. Likes have no effect on any user's karma, but they do control the ordering of pages on the new Concepts page. A user's like carries as much weight as their strong vote, and pages are displayed in descending order of the highest score among the page itself and all of its lenses[2].
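To make the ordering rule concrete, here is a minimal sketch in Python. It is not LessWrong's actual code: the `WikiPage` class, `score_from_likes`, and `concepts_page_order` are hypothetical names, and summing each liker's strong-vote power into a single score is an assumption about how likes combine. It only illustrates "rank each page by the best score among the page and its lenses", reproducing the footnoted Page A / Page B example.

```python
from dataclasses import dataclass, field


def score_from_likes(liker_strong_vote_powers: list[int]) -> int:
    # Assumption (hypothetical): each like contributes the liker's
    # strong-vote power, and a page's score is the sum of those contributions.
    return sum(liker_strong_vote_powers)


@dataclass
class WikiPage:
    """Hypothetical model of a wiki page: its own score plus one score per lens."""
    title: str
    score: int
    lens_scores: list[int] = field(default_factory=list)

    def display_score(self) -> int:
        # A page ranks by the best score among the page itself and all of its lenses.
        return max([self.score, *self.lens_scores])


def concepts_page_order(pages: list[WikiPage]) -> list[str]:
    """Return page titles in descending order of display score."""
    ranked = sorted(pages, key=lambda p: p.display_score(), reverse=True)
    return [p.title for p in ranked]


# Footnoted example: Page A scores 30 but has a lens scoring 50; Page B scores 40.
pages = [
    WikiPage("Page B", score=40),
    WikiPage("Page A", score=30, lens_scores=[50]),
]
print(concepts_page_order(pages))  # ['Page A', 'Page B']
```

Because Python's `sorted` is stable, pages with equal display scores keep their input order in this sketch; how the real Concepts page breaks ties isn't stated here.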

You can like a page by clicking on the thumbs-up button in the top-right corner of a page, or the same icon on the tab for the lens you want to like.

As was the case on Arbital, likes on wiki pages are not anonymous.

Inline Reacts

Along with voting, we've added the ability to leave inline reacts on the contents of wiki pages. Please use responsibly.

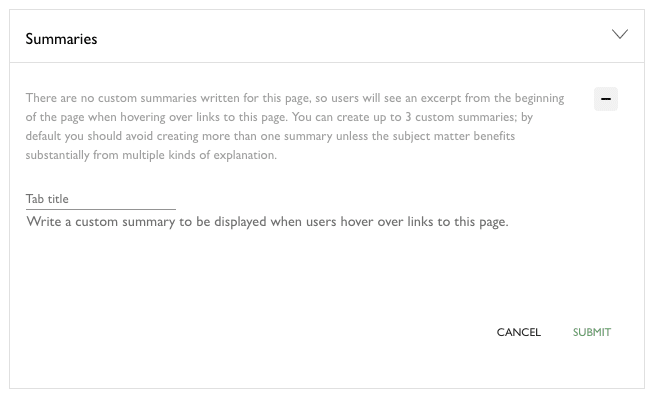

Summaries


Summaries are a way to control the preview content displayed when a user hovers over a link to a wiki page. By default, LessWrong displays the first paragraph of content from the wiki page.

Arbital did the same, but also allowed users to write one or more custom summaries, which you can now do as well.

If a page has more than one summary, you'll see a slightly different view in the hover preview, with titled tabs for each summary.

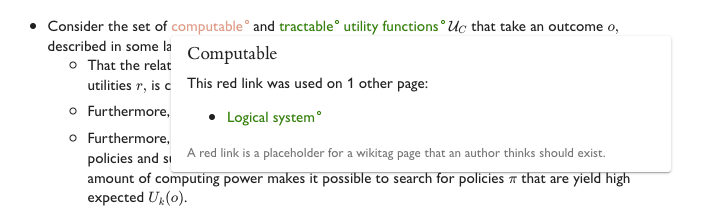

Redlinks


Redlinks are links to wiki pages that don't yet exist. The typical usage on Arbital was to signal to readers: "Hey, this is a placeholder for a concept or idea that hasn't been explained yet."

You can create a redlink by linking to a wiki URL (/w/...) that doesn't point to an existing page.

To readers, redlinks look like links... which are red.

Claims


Arbital had Claims. LessWrong used to have embeddable, interactive predictions[3]. These were nearly identical features, so we brought ours back.

The edit history page

The edit history page now also includes edits to lenses, summaries, and page metadata, as well as edits to the main page content, comments on the page, and tag-applications.

Misc.

You can now triple-click to edit wiki pages.

Some other Arbital features, such as guides/paths, subject prerequisites, page speeds and relationships, etc., have been imported to LessWrong in a limited, read-only form. These features will be present on imported Arbital content, to preserve its existing structure as much as possible, but we haven't implemented the ability to use them for new wiki content on LessWrong.

As always, we're interested in your feedback, though we make no promises about what we'll do with it. Please do report bugs or unexpected behavior if/when you find them, especially around wikitags - you can reach us on Intercom (bottom-right corner of the screen) or leave a comment on this post.

[1] This might involve non-trivial updates to the current features and UI. No fixed timeline, but hopefully within a few weeks.

[2] If Page A has Lens A' with a score of 50, a link to Page A will be displayed above a link to Page B with a score of 40, even if Page A itself only has a score of 30.

[3] These were originally an integration with Ought. When Ought sunset their API, we imported all the existing questions and predictions and made them read-only.
