One of the main obstacles to building safe and aligned AI is that we don't know how to store human values on a computer.

Why is that?

Human values are abstract feelings and intuitions that can be described with words.

For example:

- Freedom or Liberty

- Happiness or Welfare

- Justice

- Individual Sovereignty

- Truth

- Physical Health & Mental Health

- Prosperity & Wealth

We see that AI systems like GPT-3 and other NLP systems represent words as vectors (word embeddings, as popularized by Word2Vec), while other tools use knowledge graphs to encode words and their meanings as complex relationships. This lets software developers turn language into numbers so that a computer can do math with it, and then turn the results back into human-readable output. Here's a great video on how computers understand language using Word2Vec. Besides that, ConceptNet is another great example of a linguistic knowledge graph.
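To make "doing math with language" a bit more concrete, here is a minimal sketch of the vector arithmetic that word embeddings allow. The vectors are hypothetical, hand-picked 3-dimensional values chosen for illustration (real Word2Vec vectors are learned from large text corpora and have hundreds of dimensions), and `cosine_similarity` and `analogy` are illustrative helpers, not functions from any library.

```python
import math

# Hypothetical, hand-picked 3-D "embeddings" for illustration only.
# Assumed dimensions: [royalty, maleness, femaleness].
EMBEDDINGS = {
    "king":     [0.9, 0.8, 0.1],
    "queen":    [0.9, 0.1, 0.8],
    "princess": [0.7, 0.1, 0.8],
    "man":      [0.1, 0.8, 0.1],
    "woman":    [0.1, 0.1, 0.8],
    "apple":    [0.0, 0.1, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vocab):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(vocab[a], vocab[b], vocab[c])]
    candidates = (w for w in vocab if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(vocab[w], target))


print(analogy("king", "man", "woman", EMBEDDINGS))  # prints: queen
```

The point is only that once words are vectors, relationships between meanings become arithmetic the computer can perform; the same idea would apply to value words like "Justice" or "Welfare" if suitable vectors existed.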

So, what we have to do is find or build a knowledge graph that encodes human values, so that computers can better understand them and learn to operate within those parameters, not outside of them.

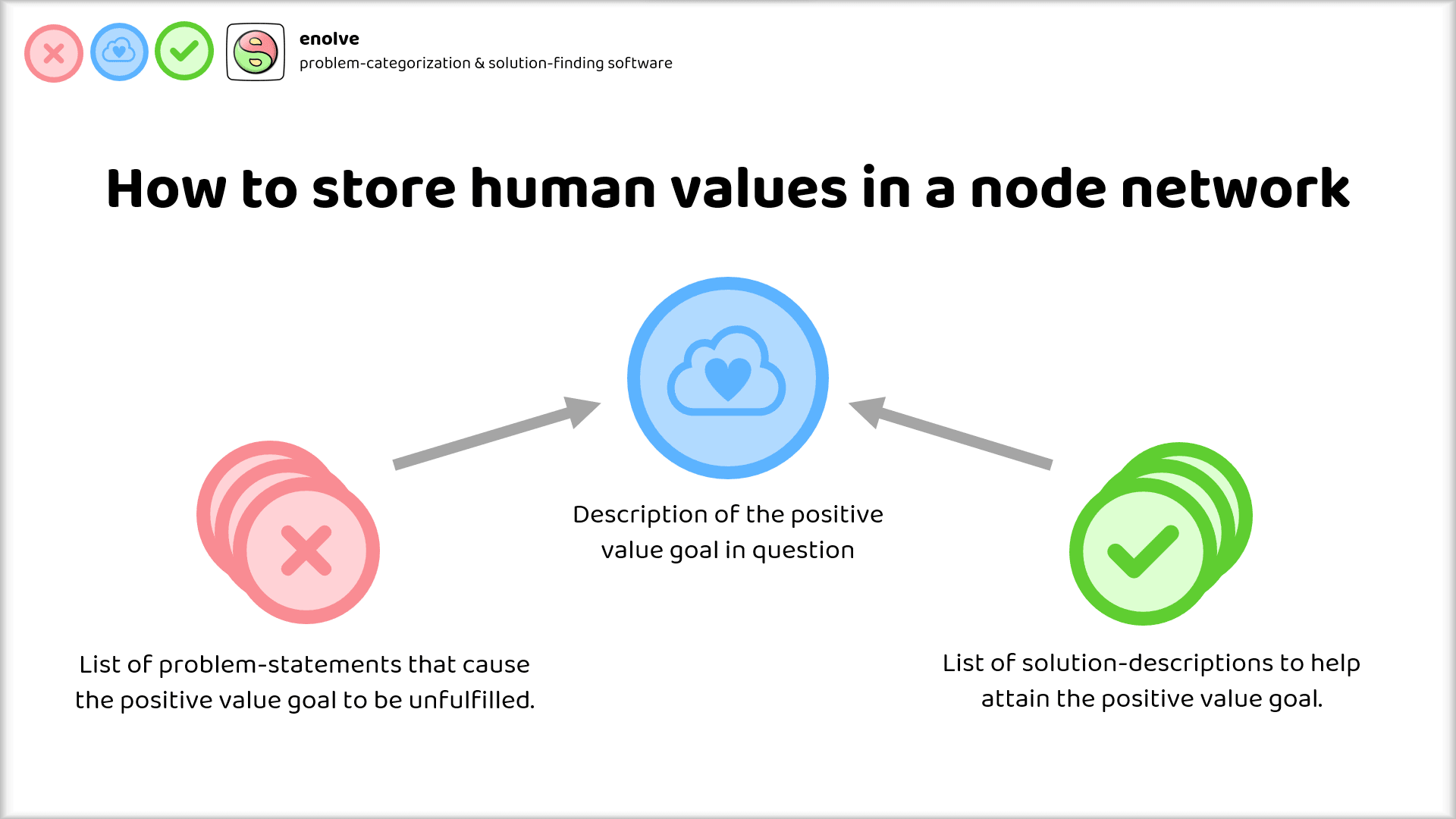

My suggestion for how to do this is quite simple: Categorize each "positive value goal" according to the actionable, tangible methods and systems that help fulfill this positive value goal, and then contrast that with all the negative problems that exist in the world with respect to that positive value goal.

Using the language of the instrumental convergence thesis:

Terminal goals are the positive value goals themselves. Instrumental goals are the tangible, actionable methods and systems (also called solutions) that help fulfill terminal goals.

Problems, on the other hand, are descriptions of how terminal goals are violated or left unfulfilled.

There can be many solutions associated with each positive value goal, as well as many problems.
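A minimal sketch of this categorization as a data structure, assuming each positive value goal is stored as one record holding its solutions (instrumental goals) and its problems. The `ValueGoal` class, its field names, and the example entries are hypothetical illustrations, not a proposed standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class ValueGoal:
    """A terminal goal ("positive value goal") with its solutions and problems.

    Hypothetical schema for illustration only.
    """
    name: str
    solutions: list[str] = field(default_factory=list)  # instrumental goals
    problems: list[str] = field(default_factory=list)   # ways the goal is violated

    def add_solution(self, solution: str) -> None:
        # Avoid storing the same solution twice.
        if solution not in self.solutions:
            self.solutions.append(solution)

    def add_problem(self, problem: str) -> None:
        # Avoid storing the same problem twice.
        if problem not in self.problems:
            self.problems.append(problem)


# Hypothetical example entries for one of the value goals listed above.
health = ValueGoal("Physical Health & Mental Health")
health.add_solution("vaccination programs")
health.add_solution("access to therapy")
health.add_problem("untreated infectious disease")
health.add_problem("untreated depression")

print(len(health.solutions), len(health.problems))  # prints: 2 2
```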

Such a system lays the foundation for a node network or knowledge graph of our human ideals and what we consider "good".
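Because one solution or one problem can touch several value goals at once, this structure is naturally a graph rather than a set of separate lists. A minimal sketch, assuming the graph is stored as (source, relation, goal) edges in the spirit of linguistic knowledge graphs like ConceptNet; the relation names `fulfills` and `violates`, the helper functions, and all example edges are hypothetical.

```python
from collections import defaultdict

# Hypothetical edges: (solution or problem, relation, value goal).
# "fulfills" links a solution to a goal; "violates" links a problem to a goal.
EDGES = [
    ("public education", "fulfills", "Prosperity & Wealth"),
    ("public education", "fulfills", "Individual Sovereignty"),
    ("free press", "fulfills", "Truth"),
    ("free press", "fulfills", "Freedom or Liberty"),
    ("censorship", "violates", "Truth"),
    ("censorship", "violates", "Freedom or Liberty"),
    ("poverty", "violates", "Prosperity & Wealth"),
]


def build_index(edges):
    """Map each value goal to the nodes linked to it, grouped by relation."""
    index = defaultdict(lambda: defaultdict(set))
    for source, relation, goal in edges:
        index[goal][relation].add(source)
    return index


def goals_linked_to(node, edges, relation="fulfills"):
    """Reverse lookup: which value goals does a node fulfill (or violate)?"""
    return sorted(goal for source, rel, goal in edges
                  if source == node and rel == relation)


index = build_index(EDGES)
print(sorted(index["Truth"]["fulfills"]))    # prints: ['free press']
print(sorted(index["Truth"]["violates"]))    # prints: ['censorship']
print(goals_linked_to("free press", EDGES))  # prints: ['Freedom or Liberty', 'Truth']
```

Storing edges instead of one record per goal makes shared nodes visible: here a free press serves two goals, and censorship violates the same two.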

Would it be a problem if AI were instrumentally convergent on doing the most good and solving the most problems? That's something I've put up for discussion here, and I'd be curious to hear your opinion in the comments!

Thanks for sharing! Yes, it seems that the computational complexity could indeed explode at some point.

But then again, an average human brain is capable of storing common-sense values and ethics, so unless there's a magic ingredient in the human brain, it's probably not impossible to rebuild that capability on a computer.

Then, with an artificial brain that has benefits like never getting tired, we may come close to a somewhat useful Genie that can at least advise on the best course of action given all the possible pitfalls.

Even if it were just, say, 25% better than the best human, everyone could access this Genie on their smartphone. How cool would that be?

But I'll have to dig deeper into The Sequences; they seem very comprehensive.

I found Monica Anderson's blog quite inspiring as well. She writes about model-free, holistic systems: https://experimental-epistemology.ai/