> This has also been my direct experience studying and researching open-source models at Conjecture.

Interesting! Assuming it's public, what are some of the most surprising things you've found open source models to be capable of that people were previously assuming they couldn't do?

This matters for advocacy for pausing AI, or failing that, advocacy about how far back the red-lines ought to be set. To give a really extreme example, if it turns out even an old model like GPT-3 could tell the user exactly how to make a novel bioweapon if prompted weirdly, it seems really useful to be able to convince our policy makers of this fact, though the weird prompting technique itself should of course be kept secret.

That was an enjoyable read! The line about the toaster writing a compelling biography of the bagel it had toasted actually made me physically laugh (and then realize it was time to walk over to the kitchen to make breakfast once I finished the story.)

I tried some smaller versions of that a couple years ago, and it sure looks like they do! https://www.lesswrong.com/posts/xwdRzJxyqFqgXTWbH/how-does-a-blind-model-see-the-earth?commentId=DANGuYJcfzJwxZASa

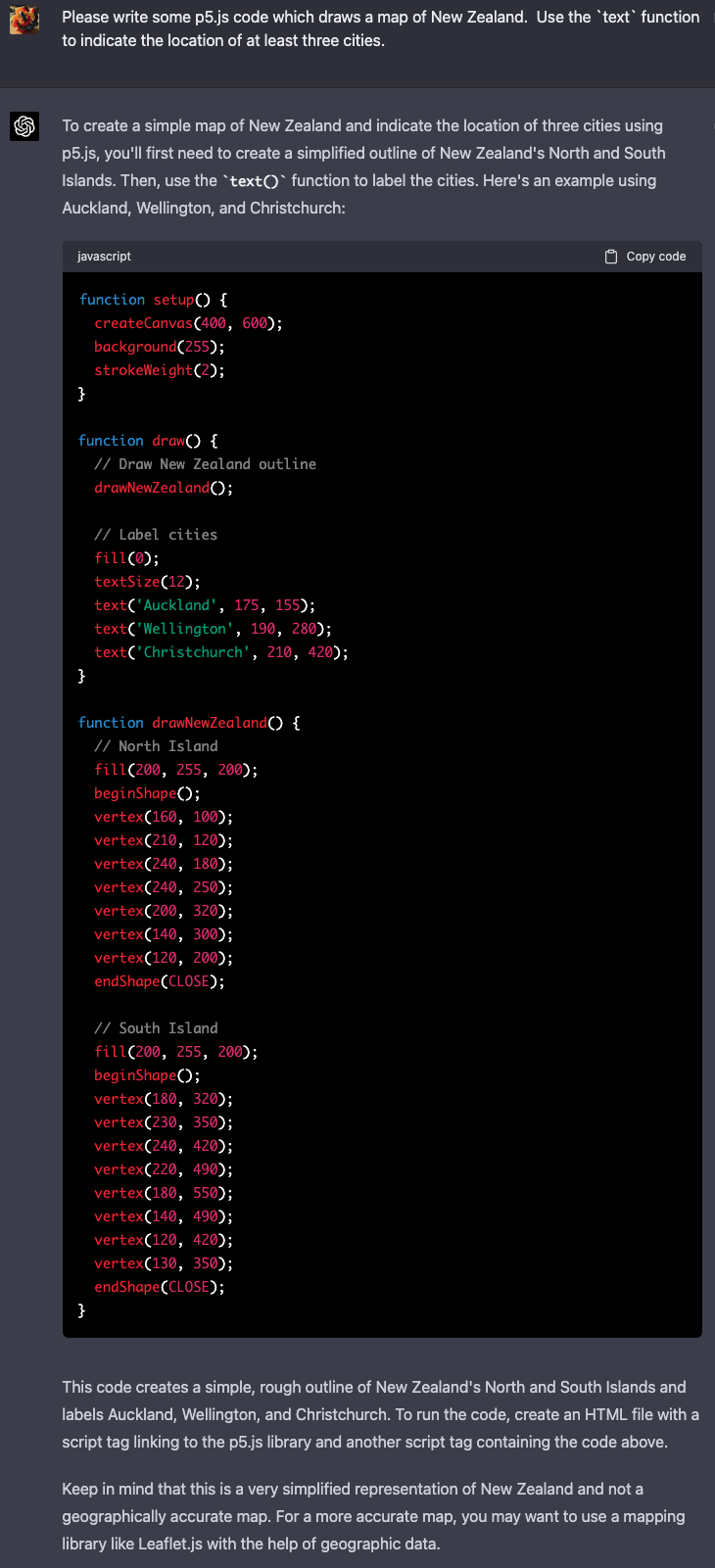

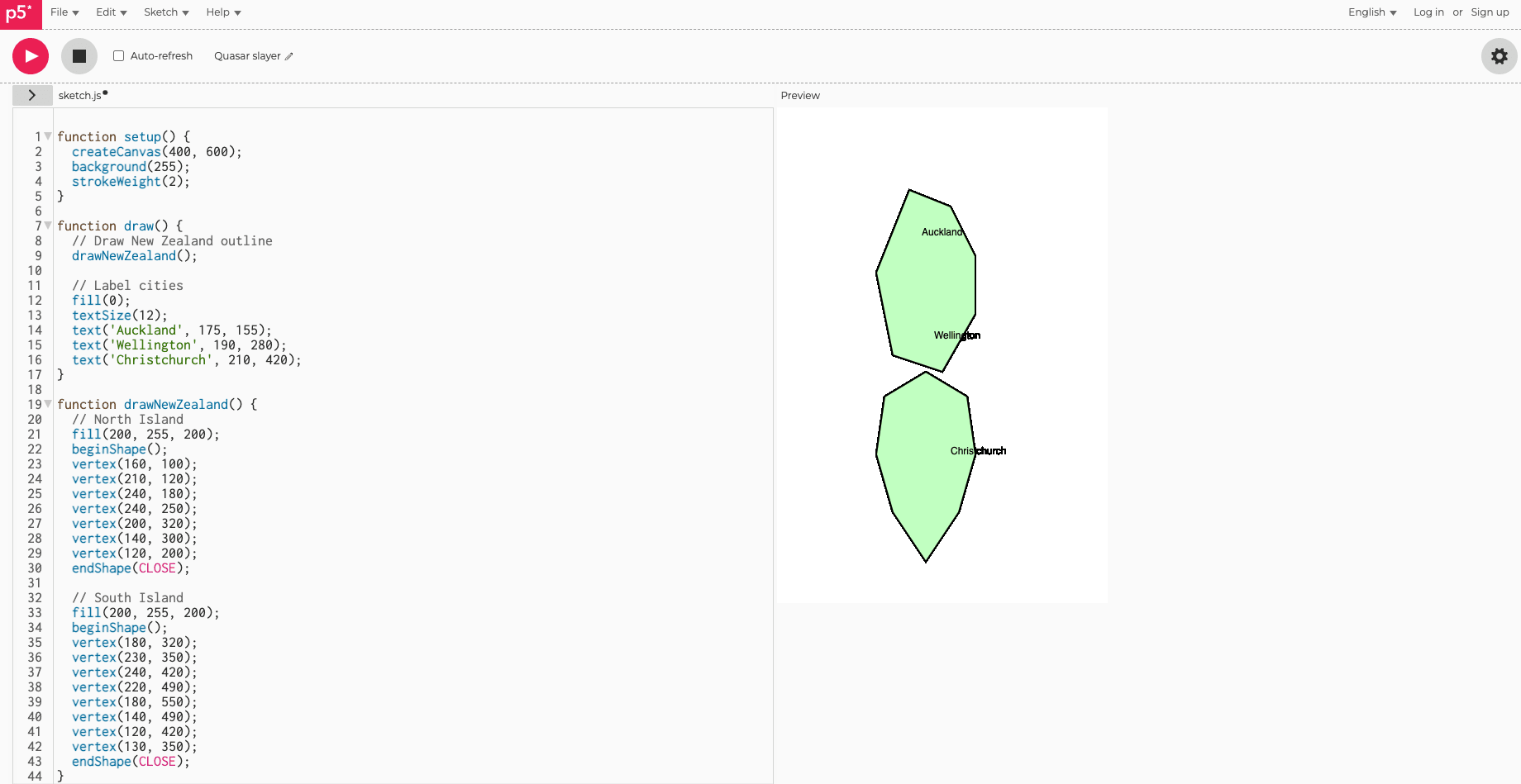

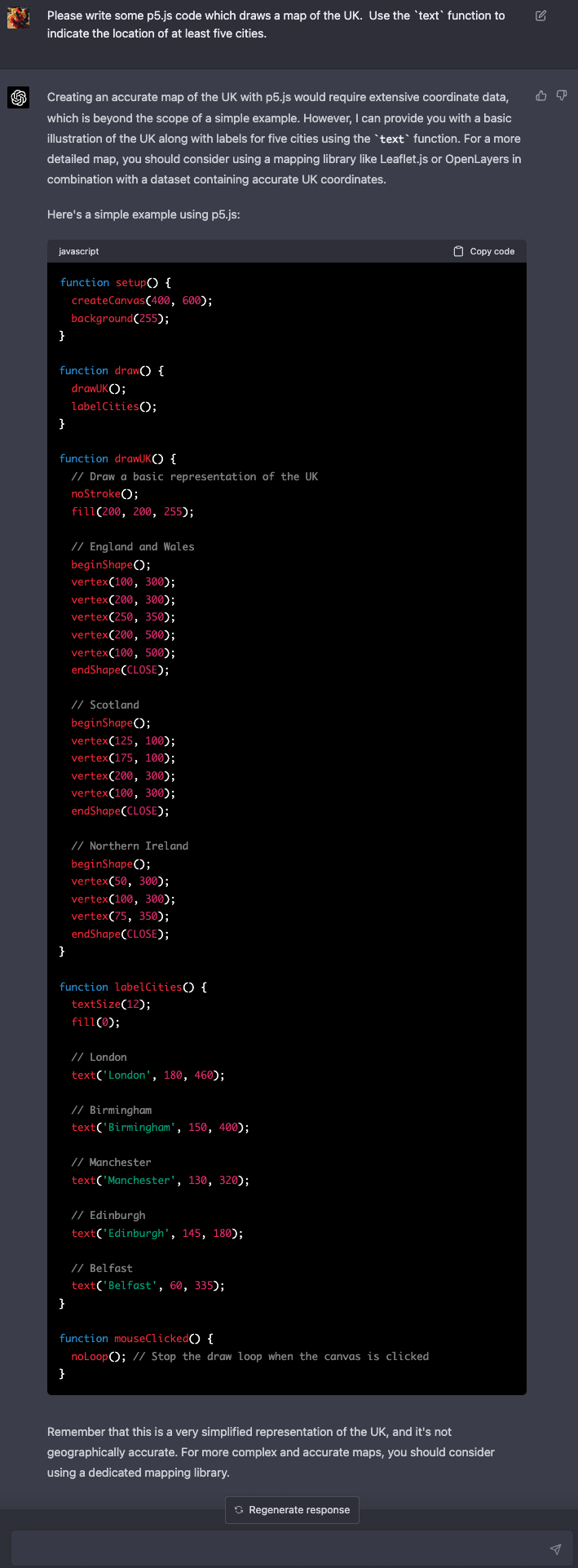

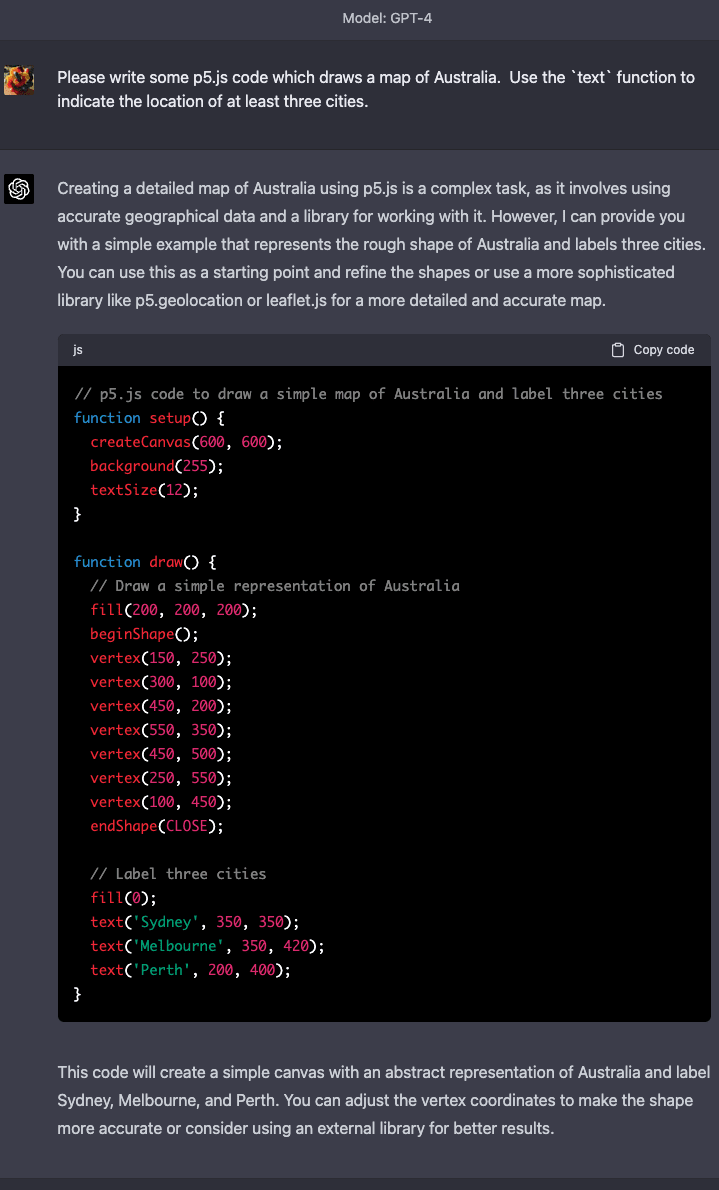

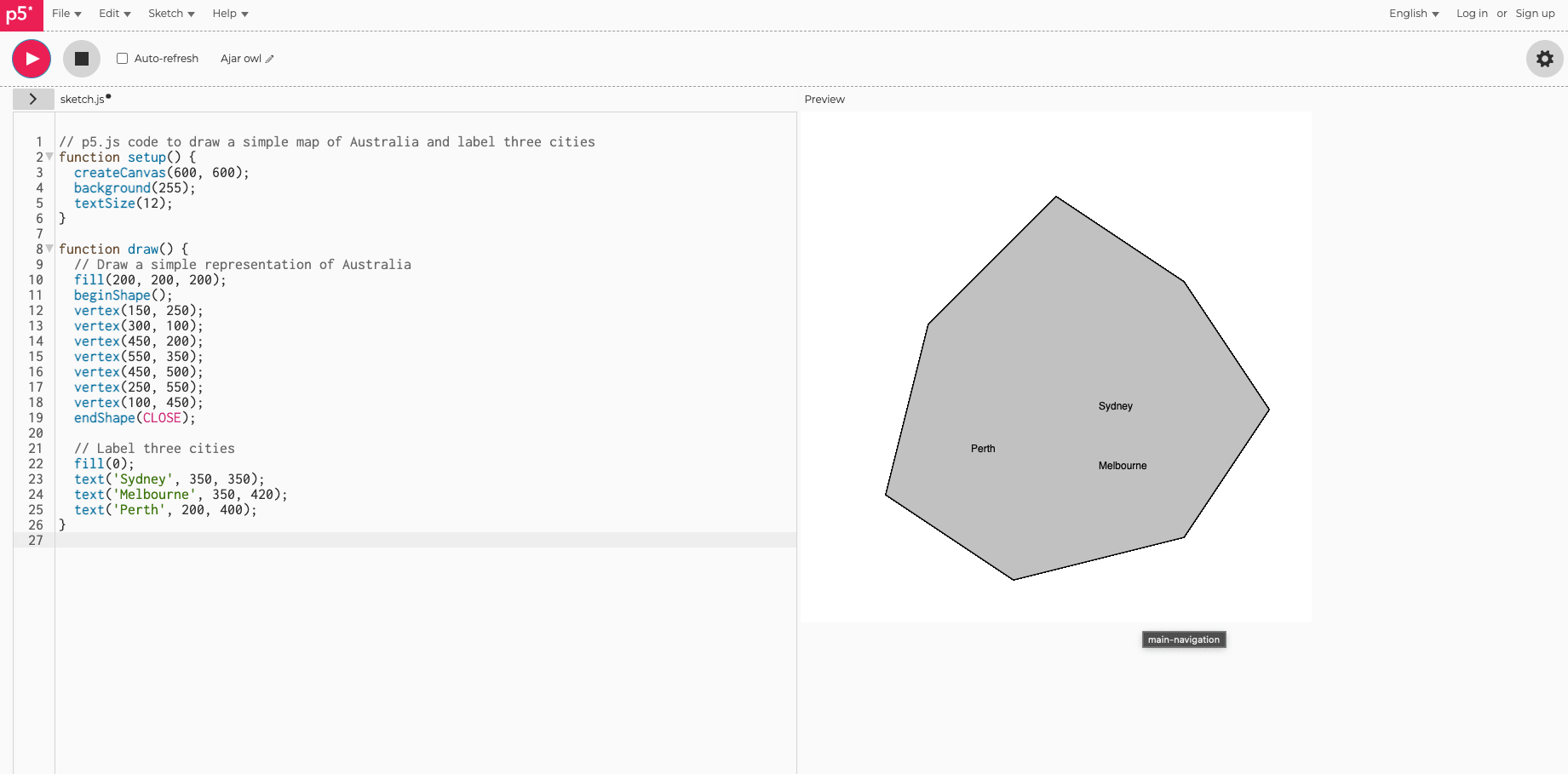

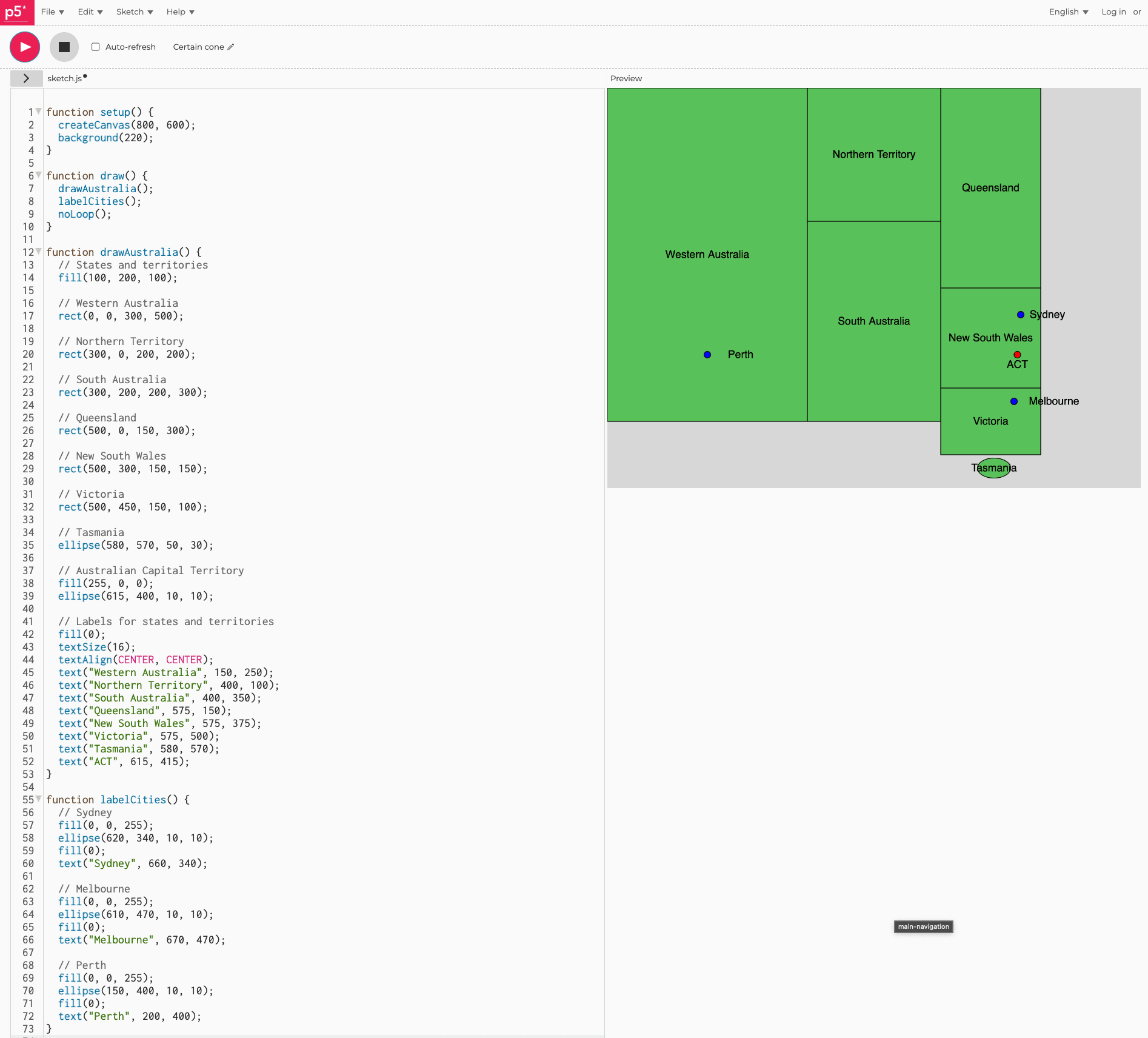

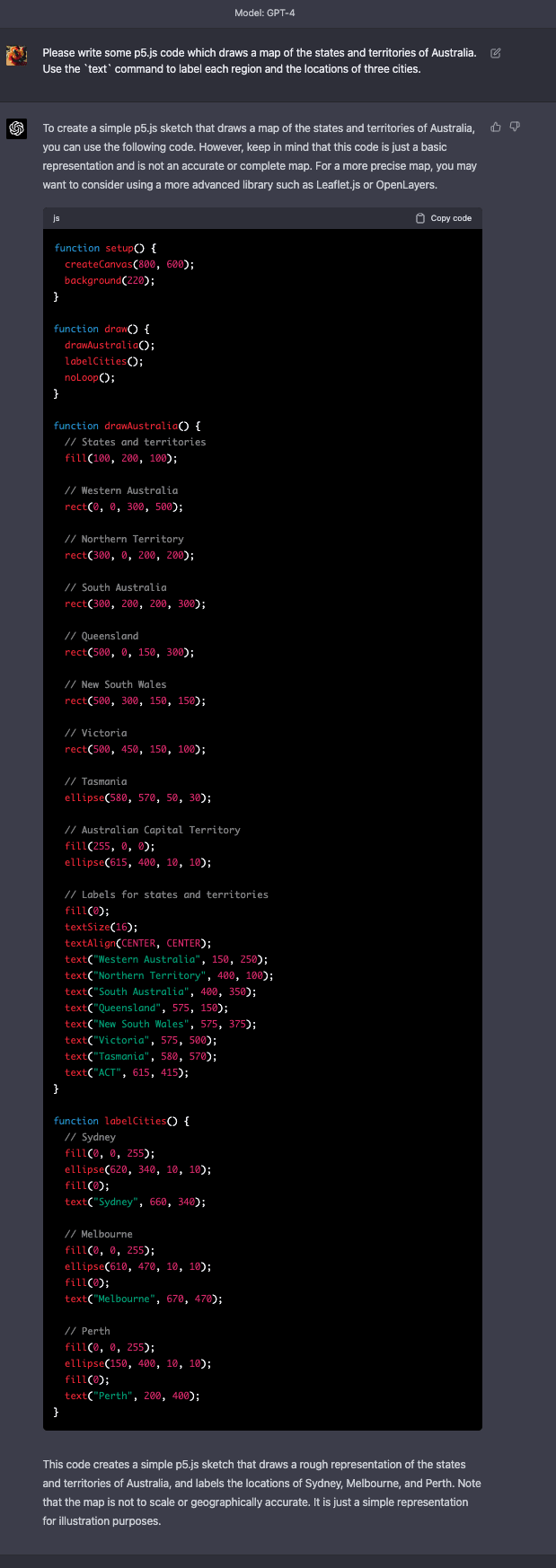

This is pretty neat! This reminds me of some informal experiments I did with GPT-4 back in March of 2023. I was curious how much geographical information was hiding in there, but my approach was to pick individual countries and ask it to draw maps of them using `p5.js`, a simple JavaScript library for drawing shapes on a canvas. Here's what some of those results looked like.

So it seems casually like even GPT-4 has far more geographical knowledge hiding in it (at least when it comes to the approximate relative positions of landmasses and countries) than the post's lat-lon query tactic seemed to surface. Of course, it's tricky to draw a shoggoth's eye view of the world, especially given how many eyes a shoggoth has!

I wonder what sorts of tricks could elicit the geographical information a shoggoth knows better. Off the top of my head, another approach might be to ask what countries if any are near each (larger) grid sector of the earth, and then explicitly ask for each fine-grained lat-lon coordinate, which country it's part of if any. I wonder if we'd get a higher-fidelity map that way. One could also imagine asking for the approximate centers and radii of all the countries one at a time, and producing a map made of circles.
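For what it's worth, the circle-map idea is easy to prototype once you have the model's answers in hand. Here's a minimal sketch, assuming each answer has already been parsed into a `(label, lat, lon, radius_deg)` tuple (that format, the function name `render_circle_map`, and the grid size are all my own placeholders, and it treats lat-lon as a flat plane, which gets distances wrong near the poles):

```python
def render_circle_map(circles, width=72, height=36):
    """Rasterize (label, lat, lon, radius_deg) circles onto a coarse text grid.

    Assumption: a flat equirectangular projection, with the radius measured
    in degrees. Earlier circles in the list win where circles overlap.
    """
    rows = []
    for row in range(height):
        # Latitude of this cell's center, from +90 (top) down to -90 (bottom).
        lat = 90 - (row + 0.5) * 180 / height
        line = []
        for col in range(width):
            # Longitude of this cell's center, from -180 (left) to +180 (right).
            lon = -180 + (col + 0.5) * 360 / width
            ch = "."
            for label, c_lat, c_lon, radius in circles:
                d_lon = abs(lon - c_lon)
                d_lon = min(d_lon, 360 - d_lon)  # wrap across the antimeridian
                if (lat - c_lat) ** 2 + d_lon ** 2 <= radius ** 2:
                    ch = label
                    break
            line.append(ch)
        rows.append("".join(line))
    return rows


# Placeholder input, NOT real model output: one circle centered on (0, 0).
for text_row in render_circle_map([("A", 0.0, 0.0, 10.0)]):
    print(text_row)
```

The same grid loop would work for the two-stage lat-lon query too; you'd just replace the circle test with a lookup of whatever country the model named for that cell.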

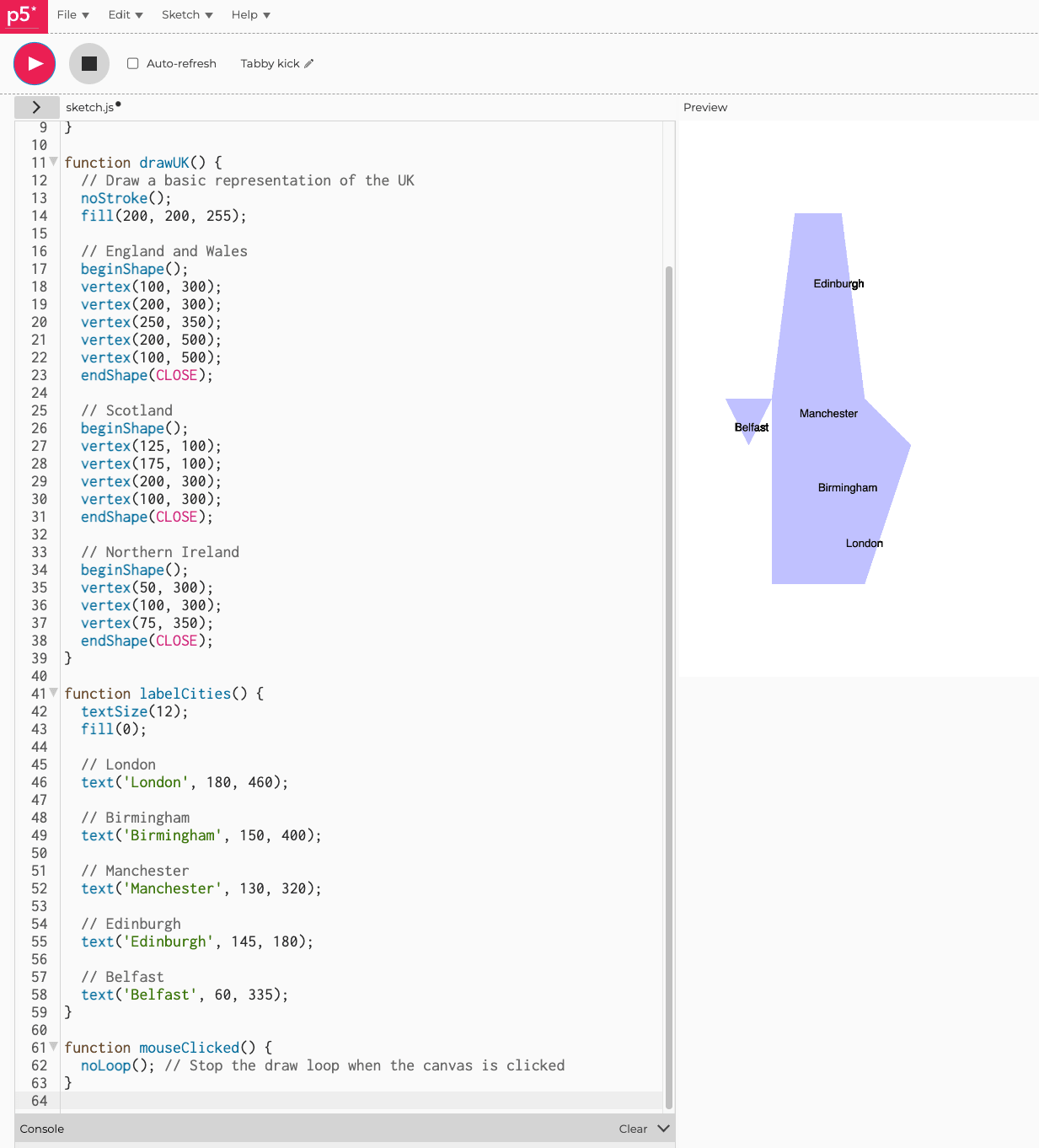

Anyway, here are some of the results from the experimentation I mentioned earlier:

Results

New Zealand

UK

Weird Australia

Here's an example of one of the results that didn't work as well:

Boxy Australia

Despite the previous blob failure, this prompt shows that the model actually does know somewhat more about rough relative positions of more things in Australia than the previous example revealed.

I've heard that one is true both at the macro-scale (Chicxulub) and at the micro-scale (one of the theories I've heard about human evolution is that it was strongly shaped by a sudden drought.) Though Chicxulub is the important one that lets the earth effectively buy twice as many lottery tickets.

Right. Task Difficulty is a hard thing to get a handle on.

* You don't know how hard a problem is until you've solved it, so any metric needs to depend on how hard the problem was to solve.

* Intuitively, we might want some metric that depends on the "stack trace" of the solution, i.e. what sorts of mental moves had to happen for the person to solve the problem. Incidentally, this means that all such metrics are sometimes over-estimates (maybe there's an easy way to solve the problem that the person you watched solving it missed.) Human wall-clock time is in some sense the simplest question one could ask about the stack trace of a human solving the problem.

* The difficulty of a problem is often agent-relative. There are plenty of contest math problems that are rendered easy if you have the correct tool in your toolkit and really hard if you don't. Crystallized intelligence often passes for fluid intelligence, and the two blend into each other.

Some other potential metrics (loose brainstorm)

* Hint length - In some of Eliezer's earlier posts, intelligence got measured as optimization pressure in bits (intuitively: how many times do you have to double the size of the target for it to fill the entire dart-board? Of course you need a measure space for your dart board in order for this to work.) Loosely inspired by this, we might pick some model that's capable of following chains of logic but not very smart (whatever it knows how to do is a stand-in for what's obvious.) Then ask how long of a hint string you have to hand it for it to solve the problem. (Of course finding the shortest hint string is hard; you'd need to poke around heuristically to find a relatively short one.)

* Elo score type metrics - You treat each of your puzzles and agents (which can be either AIs or humans) as players of a two-player game. If the agent solves a puzzle the agent wins, otherwise the puzzle wins. Then we calculate Elo scores. The nice thing about this is that we effectively get to punt the problem of defining a difficulty metric, by saying that each agent has a latent intelligence variable and each problem has a latent difficulty variable, and we can figure out what both of them are together by looking at who was able to solve which problem.

Caveats: Of course, like human wall-clock time, this assumes intelligence and difficulty are one-dimensional, though if you can say what you'd like to assume instead, you can make statistical models more sophisticated than the one Elo scoring implicitly assumes. Also, this still doesn't help for measuring the difficulties of problems way outside the eval set (the "using Paleolithic canoeing records to forecast when humans will reach the moon" obstacle): if everybody loses against puzzle X, that doesn't put much of an upper bound on how hard it is.
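To make the Elo idea concrete, here's a minimal sketch, assuming the standard logistic Elo expectation with a 400-point scale. The function names, the K-factor, the starting rating, and the choice to loop over the same results many times are all my own assumptions (real Elo updates once per game as games happen), and the toy outcomes are made up purely for illustration:

```python
def expected_score(r_a, r_b):
    """Probability that a player rated r_a beats one rated r_b under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def fit_elo(results, k=16.0, passes=50, start=1000.0):
    """Jointly rate agents and puzzles from (agent, puzzle, solved) records.

    Each record is one "game": the agent wins if it solved the puzzle,
    otherwise the puzzle wins. Returns a dict keyed by ("agent", name) or
    ("puzzle", name). Repeating passes over a fixed result set is an
    assumption made here so that a small data set has time to spread out.
    """
    ratings = {}
    for agent, puzzle, _ in results:
        ratings.setdefault(("agent", agent), start)
        ratings.setdefault(("puzzle", puzzle), start)
    for _ in range(passes):
        for agent, puzzle, solved in results:
            a, p = ("agent", agent), ("puzzle", puzzle)
            surprise = (1.0 if solved else 0.0) - expected_score(ratings[a], ratings[p])
            ratings[a] += k * surprise  # agent gains when it beats expectations
            ratings[p] -= k * surprise  # puzzle loses exactly what the agent gains
    return ratings


# Made-up toy outcomes: nobody solves "hard", everybody solves "easy".
toy_results = [
    ("weak", "easy", True), ("weak", "medium", False), ("weak", "hard", False),
    ("strong", "easy", True), ("strong", "medium", True), ("strong", "hard", False),
]
for key, rating in sorted(fit_elo(toy_results).items(), key=lambda kv: kv[1]):
    print(key, round(rating))
```

Notice that the "hard" puzzle's rating just keeps climbing with more passes, since nothing ever beats it. That's the upper-bound caveat showing up in the numbers: the data only tells you it's harder than everything that tried it.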

A Cautionary Tale about Trusting Numbers from Wikipedia

So this morning I woke up early and thought to myself: "You know what I haven't done in a while? Good old fashioned Wikipedia rabbit hole." So I started reading the article on rabbits. Things were relatively sane until I got to the section on rabbits as food.

Wild leporids comprise a small portion of global rabbit-meat consumption. Domesticated descendants of the European rabbit (Oryctolagus cuniculus) that are bred and kept as livestock (a practice called cuniculture) account for the estimated 200 million tons of rabbit meat produced annually.[161] Approximately 1.2 billion rabbits are slaughtered each year for meat worldwide.[162]

Something has gone very wrong here!

200 million tons is 400 billion pounds (add ~10% if they're metric tons, but we can ignore that.)

Divide that by 1.2 billion, and we can deduce that those rabbits weigh in at over 300 pounds each on average! Now I know we've bred some large animals for livestock, but I'm rolling to disbelieve when it comes to three hundred pound bunnies.

Of the two sources Wikipedia cites it looks like [161] is the less reliable looking one. It's a WSJ blog. But the biggest reason we shouldn't be trusting that article is that its numbers aren't even internally consistent!

From the article:

Globally, about some 200 million tons of rabbit meat are produced a year, says Luo Dong, director of the Chinese Rabbit Industry Association. China consumes about 30% of the whole production, he said, with 70% of such meat—or some 420,000 tons a year—going to Sichuan province as well as the neighboring municipality of Chongqing.

There's a basic arithmetic error here! `200 million * 70% * 30%` is 42 *million*, not 420,000.

If we assume this 200 million ton number was wrong and the 420,000 ton number for Sichuan was right, the global number should in fact be 2 million tons. This would make the rabbits weigh three pounds each on average, which is a much more reasonable weight for a rabbit!
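For anyone who wants to check my arithmetic, here it is as a few lines of Python. This is just a sanity check using the figures quoted above, assuming short tons at 2,000 pounds each (the variable and function names are mine):

```python
LB_PER_SHORT_TON = 2000   # assumption: short tons; metric tons would add ~10%
RABBITS_PER_YEAR = 1.2e9  # slaughter figure from Wikipedia's source [162]


def avg_weight_lb(total_tons, animals):
    """Average meat weight per animal, in pounds."""
    return total_tons * LB_PER_SHORT_TON / animals


claimed = avg_weight_lb(200e6, RABBITS_PER_YEAR)  # using the 200 million ton figure
corrected = avg_weight_lb(2e6, RABBITS_PER_YEAR)  # using a 2 million ton figure

# The WSJ blog's internal check: 30% of global production goes to China,
# and 70% of that goes to Sichuan and Chongqing.
sichuan_from_claimed = 200e6 * 0.30 * 0.70
implied_global = 420_000 / (0.30 * 0.70)

print(f"claimed:   {claimed:.0f} lb per rabbit")     # 333 lb
print(f"corrected: {corrected:.1f} lb per rabbit")   # 3.3 lb
print(f"Sichuan share of 200M tons: {sichuan_from_claimed:,.0f} tons")
print(f"Global total implied by 420,000 tons: {implied_global:,.0f} tons")
```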

If I had to take a guess as to how this mistake happened, putting on my linguist hat, Chinese has a single word for ten thousand, like the Greek-derived "myriad" (written either 万 or 萬). If you actually wanted to say 2*10^6 in Chinese, it would end up as something like "two hundred myriad". So I can see a fairly plausible way a translator could mess up and render it as "200 million".

Anyway, I've posted this essay to the talk page and submitted an edit request. We'll see how long it takes Wikipedia to fix this.

Links:

Original article: https://en.wikipedia.org/wiki/Rabbit#As_food_and_clothing

[161] https://web.archive.org/web/20170714001053/https://blogs.wsj.com/chinarealtime/2014/06/13/french-rabbit-heads-the-newest-delicacy-in-chinese-cuisine/

Looking at this comment from three years in the future, I'll just note that there's something quite ironic about your having put Sam Bankman-Fried on this list! If only he'd refactored his identity more! But no, he was stuck in short-sighted-greed/CDT/small-self, and we all paid a price for that, didn't we?

In Defense of the Shoggoth Analogy

In reply to: https://twitter.com/OwainEvans_UK/status/1636599127902662658

The explanations in the thread seem to me to be missing the middle or evading the heart of the problem. Zoomed out: an optimization target at the level of personality. Zoomed in: a circuit diagram of layers. But those layers with billions of weights are pretty much Turing complete.

Unfortunately, I don't think anyone has much idea how all those little learned computations make up said personality. My suspicion is there isn't going to be an *easy* way to explain what they're doing. Of course, I'd be relieved to be wrong here!

This matters because the analogy in the thread between averaged faces and LLM outputs is broken in an important way. (Nearly) every picture of a face in the training data has a nose. When you look at the nose of an averaged face, it's based very closely on the noses of all the faces that got averaged. However, despite the size of the training datasets for LLMs, the space of possible queries and topics of conversation is even vaster (it's exponential in the prompt-window size, unlike the query space for the averaged faces, which is just the size of the image).

As such, LLMs are forced to extrapolate hard. So, I'd expect which particular generalizations they learned, hiding in those weights, to start to matter once users start poking them in unanticipated ways.

In short, if LLMs are like averaged faces, I think they're faces that will readily fall apart into Shoggoths if someone looks at them from an unanticipated or uncommon angle.

OK. First of all, this is a cool idea.

However, if someone actually tries this I can see a particular failure mode that isn't fleshed out (maybe you do address this later in the post sequence, but I haven't read the entire sequence yet.) This particular failure mode would probably have fallen under the "Identifying the Principal is Brittle" section, had you listed it, but it's subtler than the four examples you gave. It's about exactly *what* the principal agent is rather than just *who* (territory which only your fourth bullet point started venturing into). Granted, you did mention "avoiding manipulation" in the context of it becoming a bizarre notion if we tried to make the principal be a developer console rather than a person, and you get points for having called it out in that section in particular as a "place where lots of additional work is needed".

Anyway, my contention is that the manipulation concept also starts ending up with increasingly ambiguous boundaries the more of an intelligence gap there is between the agent and the principal. As such, some of these failure modes may only happen when the AI is more powerful than the researchers. The particular ones I have in mind here happen when the AI's model of the principal improves enough to manipulate the principal in a weird new way.

To give an extreme motivating example, if there's a sequence of images you can show the human principal(s) to warp their preferences (like in Snow Crash), we would want the AI's concept of the principal to count such hypnosis as the victim becoming a less faithful representation of the true principal™ in a regrettable way rather than as the principal having a legitimate preference to conditionally want some different thing if they're shown the images. Unfortunately, these two ways of resolving that ambiguity seem like they would produce identical behavior right up until the AI is smart enough to manipulate the principal in that way.

Put another way: I'm afraid naive attempts to build CAST systems are likely to yield an AI which subtly misidentifies exactly what computational process constitutes the principal agent, even if they could reliably point a robot arm at the physical human(s) in question. (Sure, we find the Snow Crash example obvious, but suppose the agent is smart enough to see multiple arguments with differing conclusions, all of which the principal would find compelling, or more broadly, smart enough to model that your principal would end up expressing divergent preferences depending on what inputs they see. Then things get weird.)

I'll go further and argue that we likely can't make a robust CAST agent that can scale to superhuman levels unless it can reliably distinguish what counts as the principal making a legitimate update versus what counts as the principal having been manipulated (that, or this whole conceptual tangle around agency/desire/etc. that I'm using to model the principal needs to be refactored somehow). False negatives mean situations where the AI can't be corrected by the principal since it no longer acknowledges the principal's authority. Any false positives become potential avenues the AI could use to get away with manipulating the principal. (If enough weird manipulation avenues exist and aren't flagged as such, I'd expect the AI to take one of them, since steering your principal into the region of the state-space where their preferences are easier to satisfy is a good strategy!)

I don't think this means CAST is doomed. It does seem intuitively like you need to solve fewer gnarly philosophy problems to make a CAST agent than a Friendly AGI, and if we can select a friendly principal maybe that's a good trade. I just think philosophically distinguishing manipulations from updates in squishy systems like human principals looks to be one of those remaining gnarly philosophy problems that we might not be able to get away with delegating to the machines, and even if we did solve it, we'd still need some pretty sophisticated interpretability tools to suss out whether the machine got it right.