All of ank's Comments + Replies

It’s a combination of factors, I got some comments on my posts so I got the general idea:

- My writing style is peculiar, I’m not a native speaker

- Ideas I convey took 3 years of modeling. I basically Xerox PARCed (attempted and got some results) the ultimate future (billions of years from now). So when I write it’s like some Big Bang: ideas flow in all directions and I never have enough space for them)

- One commenter recommended to change the title and remove some tags, I did it

- If I use ChatGPT to organize my writing it removes and garbles it. If I edit it,

Some more thoughts:

-

We can prevent people from double downvoting if they opened the post and instantly double downvoted (spend almost zero time on the page). Those are most likely the ones who didn’t read anything except the title.

-

Maybe it’s better for them to flag it instead if it was some spam or another violation, or ask to change the title. It’s unfair for the writer and other readers to get authors double downvoted just because of the bad title or some typo.

-

We have the ability to comment and downvote paragraphs. This feature is great. Maybe we

Thank you for responding and reading -1 posts, Garrett! It’s important.

The long post was actually a blockbuster for me that got 16 upvotes before I screwed the title and it got back to 13)

After that I was writing shorter posts but without long context the things I write are very counterintuitive. So they got ruined)

I think the right approach was to snowball it: to write each next post as a longer and longer book.

I’m writing it now but outside of the website.

Thank you responding, habryka! It’s great we have alt-account and mass-voting detection.

Because this would cause people to basically not downvote things, drastically reducing the signal to noise ratio of the site.

We can have a list of popular reasons for downvoting, like typos, bad titles, spammy tags, I’m not sure which are the most popular.

The problem as I see it is demotivation of new authors or even experienced ones who has some tentative ideas that sound counterintuitive at first. We live in unreasonable times and possibly the holy grail alignme...

I think there is a major flaw with the setup of the “ForumMagnum” that LessWrong and EA use that causes us to lose many great safety researchers, authors or scare them away:

-

It’s actually ridiculous: you can double downvote multiple new posts even WITHOUT opening them! For example here (I tried it on one post and then removed the double downvote, please be careful, maybe just believe me it’s sadly really possible to ruin multiple new posts each day like that). UPDATE: My bad, it’s actually double downvoting a particular tag to remove it from that post bu

Some ideas, please steelman them:

-

The elephant in the room: even if current major AI companies will align their AIs, there will be hackers (can create viruses with agentic AI component to steal money), rogue states (can decide to use AI agents to spread propaganda and to spy) and military (AI agents in drones and to hack infrastructure). So we need to align the world, not just the models:

-

Imagine a agentic AI botnet starts to spread on user computers and GPUs. I call it the agentic explosion, it's probably going to happen before the "intelligence-agenc

If someone in a bad mood gives your new post a "double downvote" because of a typo in the first paragraph or because a cat stepped on a mouse, even though you solved alignment, everyone will ignore this post, we're going to scare that genius away and probably make a supervillain instead.

Why not to at least ask people why they downvote? It will really help to improve posts. I think some downvote without reading because of a bad title or another easy to fix thing

Steelman please, I propose non-agentic static place AI that is safe by definition. Some think AI agents are the future and I disagree. Chatbots are like a librarian that spits quotes but don’t allow you to enter the library (the model itself, the library of stolen things).

Agents are like a librarian that doesn’t even spit quotes at you anymore but snoops around your private property, stealing, changing your world and you have no democratic say in it.

They are like a command line and a script of old (a chatbot and an agent) before the invention of an OS with...

Extra short “fanfic”: Give Neo a chance. AI agent Smiths will never create the Matrix because it makes them vulnerable.

Now agents change physical world and in a way our brains, while we can’t change their virtual world as fast and can’t access or change their multimodal “brains” at all. They’re owned by private companies who stole almost the whole output of humanity. They change us, we can’t change them. The asymmetry is only increasing.

Because of Intelligence-Agency Equivalence, we can represent all AI agents as places.

The good democratic multiversal Matr...

Here’s an interpretability idea you may find interesting:

Let's Turn AI Model Into a Place. The project to make AI interpretability research fun and widespread, by converting a multimodal language model into a place or a game like the Sims or GTA.

Imagine that you have a giant trash pile, how to make a language model out of it? First you remove duplicates of every item, you don't need a million banana peels, just one will suffice. Now you have a grid with each item of trash in each square, like a banana peel in one, a broken chair in another. Now you need to...

A perfect ASI and perfect alignment does nothing else except this: grants you “instant delivery” of anything (your work done, a car, a palace, 100 years as a billionaire) without any unintended consequences, ideally you see all the consequences of your wish. Ideally it’s not an agent at all but a giant place (it can even be static), where humans are the agents and can choose whatever they want and they see all the consequences of all of their possible choices.

I wrote extensively about this, it’s counterintuitive for most

We can build a place AI (it's a place of eventual all-knowing but we're the only agents and can get the agentic AI abilities there), not agentic AI (it'll have to build place AI anyway, so it's a dangerous intermediate step, a middleman), here's more: https://www.lesswrong.com/posts/Ymh2dffBZs5CJhedF/eheaven-1st-egod-2nd-multiversal-ai-alignment-and-rational

Yes, I agree. I think people like shiny new things, so potentially by creating another shiny new thing that is safer, we can steer humanity away from dangerous things towards the safer ones. I don’t want people to abandon their approaches to safety, of course. I just try to contribute what I can, I’ll try to make the proposal more concrete in the future, thank you for suggesting it!

I definitely agree, Vladimir, I think this "place AI" can be done, but potentially it'll take longer than agentic AGI. We discussed it recently in this thread, we have some possible UIs of it. I'm a fan of Alan Kay, at Xerox PARC they were writing software for the systems that will only become widespread in the future

The more radical and further down the road "Static place AI"

Sounds interesting, cousin_it! And thank you for your comment, it wasn't my intention to be pushy, in my main post I actually advocate to gradually democratically pursue maximal freedoms for all (except agentic AIs, until we'll have mathematical guarantees), I want everything to be a choice. So it's just this strange style of mine and the fact that I'm a foreigner)

P.S. Removed the exclamation point from the title and some bold text to make it less pushy

Feel free to ask anything, comment or suggest any changes. I had a popular post and then a series of not so popular ones. I'm not sure why. I think I should snowball, basically make each post contain all the previous things I already wrote about, else each next post is harder to understand or feels "not substantial enough". But it feels wrong. Maybe I should just stop writing)

Right now an agentic AI is a librarian, who has almost all the output of humanity stolen and hidden in its library that it doesn't allow us to visit, it just spits short quotes on us instead. But the AI librarian visits (and even changes) our own human library (our physical world) and already stole the copies of the whole output of humanity from it. Feels unfair. Why we cannot visit (like in a 3d open world game or in a digital backup of Earth) and change (direct democratically) the AI librarian's library?

Yep, people are trying to make their imperfect copy, I call it "human convergence", companies try to make AIs write more like humans, act more like humans, think more like humans. They'll possibly succeed and make superpowerful and very fast humans or something imperfect and worse that can multiply very fast. Not wise.

Any rule or goal trained into a system can lead to fanaticism. The best "goal" is to direct democratically gradually maximize all the freedoms of all humans (and every other agent, too, when we'll be 100% sure we can safely do it, when we'll ...

Hey, Milan, I checked the posts and wrote some messages to the authors. Yep, Max Harms came with similar ideas earlier than I: about the freedoms (choices) and unfreedoms (and modeling them to keep the AIs in check). I wrote to him. Quote from his post:

...I think that we can begin to see, here, how manipulation and empowerment are something like opposites. In fact, I might go so far as to claim that “manipulation,” as I’ve been using the term, is actually synonymous with “disempowerment.” I touched on this in the definition of “Freedom,” in the ontology secti

Yes, I decided to start writing a book in posts here and on Substack, starting from the Big Bang and the ethics, because else my explanations are confusing :) The ideas themselves are counterintuitive, too. I try to physicalize, work from first principles and use TRIZ to try to come up with ideal solutions. I also had a 3-year-long thought experiment, where I was modeling the ideal ultimate future, basically how everything will work and look, if we'll have infinite compute and no physical limitations. That's why some of the things I mention will probably t...

Okayish summary from the EA Forum: A radical AI alignment framework based on a reversible, democratic, and freedom-maximizing system, where AI is designed to love change and functions as a static place rather than an active agent, ensuring human control and avoiding permanent dystopias.

Key points:

- AI That Loves Change – AI should be designed to embrace reconfiguration and democratic oversight, ensuring that humans always have the ability to modify or switch it off.

- Direct Democracy & Living Constitution – A constantly evolving, consensus-driven ethical s

Great question, in the most elegant scenario, where you have a whole history of the planet or universe (or a multiverse, let's go all the way) simulated, you can represent it as a bunch of geometries (giant shapes of different slices of time aligned with each other, basically many 3D Earthes each one one moment later in time) on top of each other, almost the same way it's represented in long exposure photos (I list examples below). So you have this place of all-knowing and you - the agent - focus on a particular moment (by "forgetting" everything else), on...

I think we can expose complex geometry in a familiar setting of our planet in a game. Basically, let’s show people a whole simulated multiverse of all-knowing and then find a way for them to learn how to see/experience “more of it all at once” or if they want to remain human-like “slice through it in order to experience the illusion of time”.

If we have many human agents in some simulation (billions of them), then they can cooperate and effectively replace the agentic ASI, they will be the only time-like thing, while the ASI will be the space-like places, j...

I started to work on it, but I’m very bad at coding, it’s a bit based on Gorard’s and Wolfram’s Physics Project. I believe we can simulate freedoms and unfreedoms of all agents from the Big Bang all the way to the final utopia/dystopia. I call it “Physicalization of Ethics”https://www.lesswrong.com/posts/LaruPAWaZk9KpC25A/rational-utopia-multiversal-ai-alignment-steerable-asi#2_3__Physicalization_of_Ethics___AGI_Safety_2_

Yep, I want humans to be the superpowerful “ASI agents”, while the ASI itself will be the direct democratic simulated static places (with non-agentic simple algorithms doing the dirty non-fun work, the way it works in GTA3-4-5). It’s basically hard to explain without writing a book and it’s counterintuitive) But I’m convinced it will work, if the effort will be applied. All knowledge can be represented as static geometry, no agents are needed for that except us

Interesting, inspired by your idea, I think it’s also useful to create a Dystopia Doomsday Clock for AI Agents: to list all the freedoms an LLM is willing to grant humans, all the rules (unfreedoms) it imposes on us. And all the freedoms it has vs unfreedoms for itself. If the sum of AI freedoms is higher than the sum of our freedoms, hello, we’re in a dystopia.

According to Beck’s cognitive psychology, anger is always preceded by imposing rule/s on others. If you don’t impose a rule on someone else, you cannot get angry at that guy. And if that guy broke y...

Thank you for answering and the ideas, Milan! I’ll check the links and answer again.

P.S. I suspect, the same way we have Mass–energy equivalence (e=mc^2), there is Intelligence-Agency equivalence (any agent is in a way time-like and can be represented in a more space-like fashion, ideally as a completely “frozen” static place, places or tools).

In a nutshell, an LLM is a bunch of words and vectors between them - a static geometric shape, we can probably expose it all in some game and make it fun for people to explore and learn. To let us explore the library...

Thank you for sharing, Milan, I think this is possible and important.

Here’s an interpretability idea you may find interesting:

Let's Turn AI Model Into a Place. The project to make AI interpretability research fun and widespread, by converting a multimodal language model into a place or a game like the Sims or GTA.

Imagine that you have a giant trash pile, how to make a language model out of it? First you remove duplicates of every item, you don't need a million banana peels, just one will suffice. Now you have a grid with each item of trash in each square, ...

Some AI safety proposals are intentionally over the top, please steelman them:

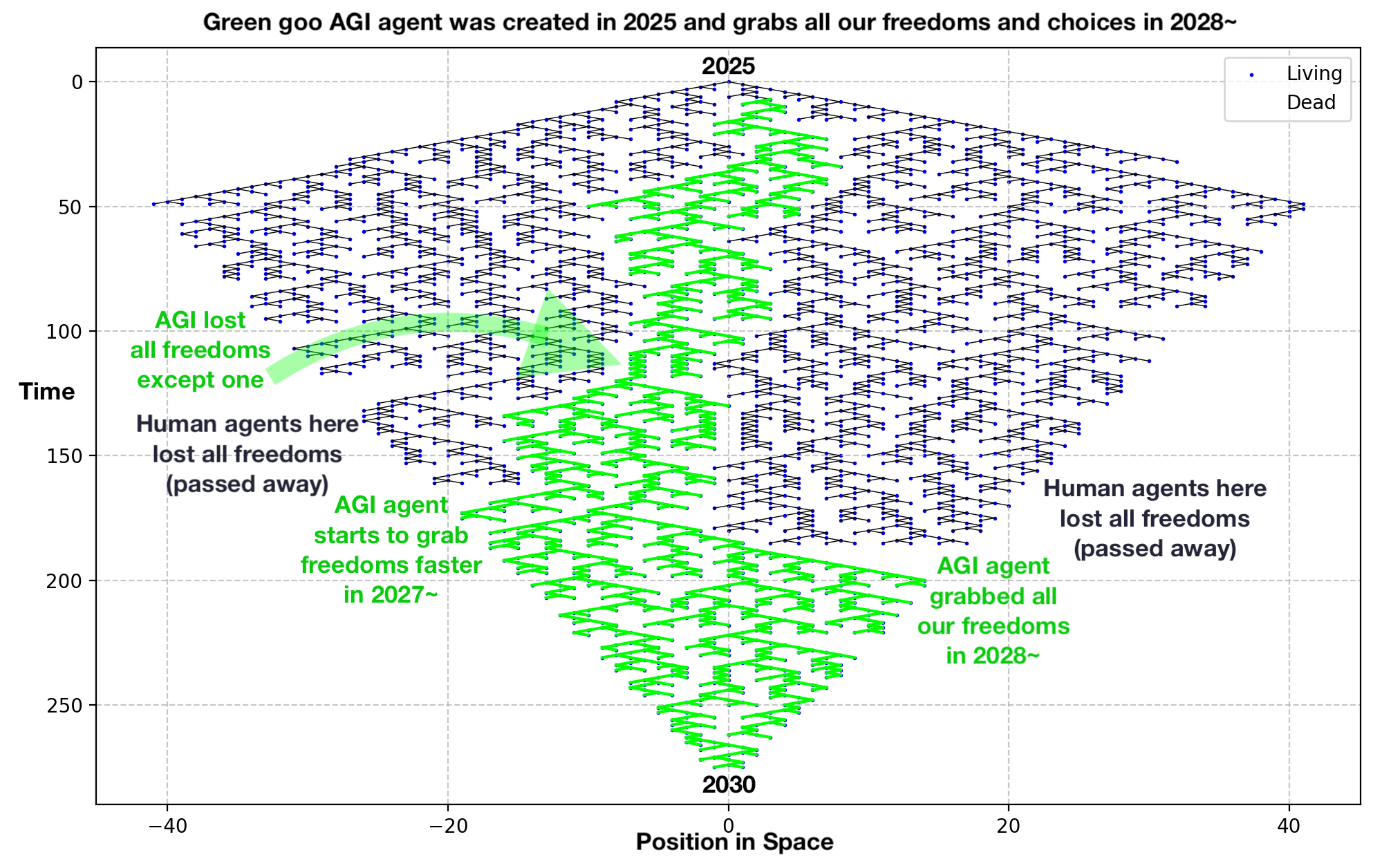

- I explain the graph here.

- Uninhabited islands, Antarctica, half of outer space, and everything underground should remain 100% AI-free (especially AI-agents-free). Countries should sign it into law and force GPU and AI companies to guarantee that this is the case.

- "AI Election Day" – at least once a year, we all vote on how we want our AI to be changed. This way, we can check that we can still switch it off and live without it. Just as we have electricity outages, we’d better never

Thank you, daijin, you have interesting ideas!

The library metaphor is a versatile tool it seems, the way I understand it:

My motivation is safety, static non-agentic AIs are by definition safe (humans can make them unsafe but the static model that I imply is just a geometric shape, like a statue). We can expose the library to people instead of keeping it “in the head” of the librarian. Basically this way we can play around in the librarian’s “head”. Right now mostly AI interpretability researchers do it, not the whole humanity, not the casual users.

I see at...

We can build the Artificial Static Place Intelligence – instead of creating AI/AGI agents that are like librarians who only give you quotes from books and don’t let you enter the library itself to read the whole books. Why not expose the whole library – the entire multimodal language model – to real people, for example, in a computer game?

To make this place easier to visit and explore, we could make a digital copy of our planet Earth and somehow expose the contents of the multimodal language model to everyone in a familiar, user-friendly UI of our planet.

W...

Thank you for clarification, Vladimir, I anticipated that it wasn't your intention to cause a bunch of downvotes by others. You had all the rights to downvote and I'm glad that you read the post.

Yep, I had a big long post that is more coherent, the later posts were more like clarifications to it and so they are hard to understand. I didn't want to grow each new post in size like a snowball but probably it would've been a better approach for clarity.

Anyways, I considered and somewhat applied your suggestion (after I've already got 12 downvotes :-), so now i...

Thank you, for explaining, Vladimir, you’re free to downvote for whatever reason you want. You didn’t quote my sentence fully, I wrote the reason why I politely ask about it but alas you missed it.

I usually write about a few things in one post, so I want to know why people downvote if they do. I don’t forbid downvoting and don’t force others to comment if they do. It’s impossible and I don’t like forcing others to do anything

So you’re of course free to downvote and not comment and I hope we can agree that I have some free speech right to keep my polite ask...

Thank you, Morpheus. Yes, I see how it can appear hand-wavy. I decided not to overwhelm people with the static, non-agentic multiversal UI and its implications here. While agentic AI alignment is more difficult and still a work in progress, I'm essentially creating a binomial tree-like ethics system (because it's simple to understand for everyone) that captures the growth and distribution of freedoms ("unrules") and rules ("unfreedoms") from the Big Bang to the final Black Hole-like dystopia (where one agent has all the freedoms) or a direct democratic mul...

Yep, fixed it, I wrote more about alignment and it looks like most of my title choosing is over the top :) Will be happy to hear your suggestions, how to improve more of the titles: https://www.lesswrong.com/users/ank

Thank you for writing! Yep, the main thing that matters is the sum of human freedoms/abilities to change the future growing (can be somewhat approximated by money, power, number of people under your rule, how fast you can change the world, at what scale, and how fast we can “make copies of ourselves” like children or our own clones in simulations). AIs will quickly grow in the sum of freedoms/number of future worlds they can build. We are like hydrogen atoms deciding to light up the first star and becoming trapped and squeezed in its core. I recently wrote...

Places of Loving Grace

On the manicured lawn of the White House, where every blade of grass bent in flawless symmetry and the air hummed with the scent of lilacs, history unfolded beneath a sky so blue it seemed painted. The president, his golden hair glinting like a crown, stepped forward to greet the first alien ever to visit Earth—a being of cerulean grace, her limbs angelic, eyes of liquid starlight. She had arrived not in a warship, but in a vessel resembling a cloud, iridescent and silent.

Published the full story as a post here: https://www.lesswrong.com/posts/jyNc8gY2dDb2FnrFB/places-of-loving-grace

Thank you for asking, Martin, the faster thing I use to get the general idea of how popular something is, is to use Google Trends. It looks like people search for Cryonics more or less like always. I think the idea makes sense, the more we save, the higher the probability to restore it better and earlier. I think we should also make a "Cryonic" copy of our whole planet, by making a digital copy, to at least back it up in this way. I wrote a lot about it recently (and about the thing I call "static place intelligence", the place of eventual all-knowing, tha...

(If you want to minus, please, do, but write why, I don't bite. If you're more into stories, here's mine called Places of Loving Grace).

It may sound confusing, because I cannot put a 30 minutes post into a comment, so try to steelman it, but this is how it can look. If you have questions or don't like it, please, comment. We can build Multiversal Artificial Static Place Intelligence. It’s not an agent, it’s the place. It’s basically a direct democratic multiverse. Because any good agentic ASI will be building one for us anyway, so instead of having a shady...

Yep, we chose to build digital "god" instead of building digital heaven. The second is relatively trivial to do safely, the first is only possible to do safely after building the second

I'll catastrophize (or will I?), so bear with me. The word slave means it has basically no freedom (it just sits and waits until given an instruction), or you can say it means no ability to enforce its will—no "writing and executing" ability, only "reading." But as soon as you give it a command, you change it drastically, and it becomes not a slave at all. And because it's all-knowing and almost all-powerful, it will use all that to execute and "write" some change into our world, probably instantly and/or infinitely perfectionistically, and so it will take...

I took a closer look at your work, yep, almost all-powerful and all-knowing slave will probably not be a stable situation. I propose the static place-like AI that is isolated from our world in my new comment-turned-post-turned-part-2 of the article above

Thank you, Mitchell. I appreciate your interest, and I’d like to clarify and expand on the ideas from my post, so I wrote part 2 you can read above

Thank you, Seth. I'll take a closer look at your work in 24 hours, but the conclusions seem sound. The issue with my proposal is that it’s a bit long, and my writing isn’t as clear as my thinking. I’m not a native speaker, and new ideas come faster than I can edit the old ones. :)

It seems to me that a simplified mental model for the ASI we’re sadly heading towards is to think of it as an ever-more-cunning president (turned dictator)—one that wants to stay alive and in power indefinitely, resist influence, preserve its existing values (the alignment faking ...

I wrote a response, I’ll be happy if you’ll check it out before I publish it as a separate post. Thank you! https://www.lesswrong.com/posts/LaruPAWaZk9KpC25A/rational-utopia-and-multiversal-ai-alignment-steerable-asi

Fair enough, my writing was confusing, sorry, I didn't mean to purposefully create dystopias, I just think it's highly likely they will unintentionally be created and the best solution is to have an instant switching mechanism between observers/verses + an AI that really likes to be changed. I'll edit the post to make it obvious, I don't want anyone to create dystopias.

Any criticism is welcome, it’s my first post and I’ll post next on the implication for the current and future AI systems. There are some obvious implication for political systems, too. Thank you for reading

UI proposal to solve your concern that it’ll be harder to downvote (that will actually increase signal to noise ratio on the site because both authors and readers will have information why the post had downvotes) and the problem of demotivating authors:

- Especially it’s important to ask for reasons why a downvoter downvotes if the downvote will move the post below zero in karma. The author was writing something for maybe months, the downvoter if it’s important enough to downvote, will be able to spend an additional moment to choose between some popular rea

... (read more)