All of ChrisCundy's Comments + Replies

153

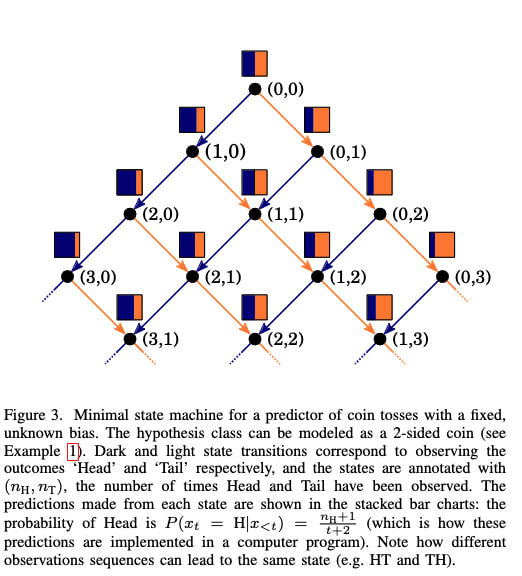

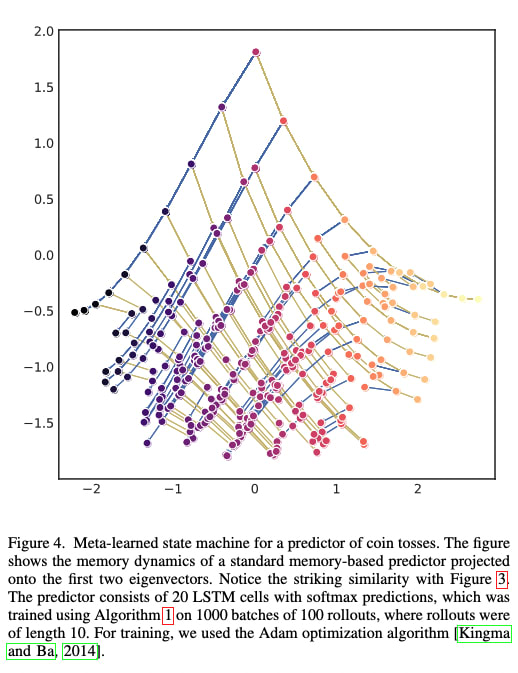

The figures remind me of Figures 3 and 4 from Meta-learning of Sequential Strategies (Ortega et al., 2019), which also studies how autoregressive models (RNNs) infer underlying structure. Could be a good reference to check out!


2

this looks highly relevant! thanks!

30

Thanks for the elaboration; I'll follow up offline.

40

Would you be able to elaborate a bit on your process for adversarially attacking the model?

It sounds like a combination of projected gradient descent and clustering? I took a look at the code, but a brief mathematical explanation or algorithm sketch would help a lot!

A couple of colleagues and I are thinking about using this approach to demonstrate some robustness failures in LLMs, and it would be great to build on your work.

6

Yeah! Basically we just perform gradient descent on sensibly initialised embeddings (cluster centroids, or points close to the target output), constrain the embeddings to unit length during the process, and penalise distance from the nearest legal token. We optimise the input embeddings to minimise the negative log-probability of the target output token(s). Happy to have a quick call to go through the code if you like, DM me :)
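For anyone who wants the algorithm sketch in code form, here is a minimal toy version of the loop described above. It is not the actual implementation: the "model" is a hypothetical linear readout `W`, the vocabulary `E` is four made-up 2-D token embeddings, "length 1" is read as unit L2 norm, and the names `attack_embedding`, `lr` and `lam` are invented for illustration. The initial point stands in for a cluster centroid.

```python
import numpy as np

def log_softmax(z):
    z = z - z.max()                      # shift for numerical stability
    return z - np.log(np.exp(z).sum())

def attack_embedding(E, W, target, x0, steps=200, lr=0.1, lam=0.1):
    """Toy projected gradient descent on a single input embedding.

    E: (V, d) unit-norm embeddings of the legal tokens (assumed).
    W: (C, d) linear readout standing in for the real model (assumed).
    """
    x = x0 / np.linalg.norm(x0)
    for _ in range(steps):
        # Gradient of -log p(target | x) w.r.t. the logits is softmax - onehot.
        p = np.exp(log_softmax(W @ x))
        p[target] -= 1.0
        grad_nll = W.T @ p
        # Penalty lam * ||x - nearest legal token||^2 and its gradient.
        nearest = E[np.argmin(np.linalg.norm(E - x, axis=1))]
        grad_pen = 2.0 * lam * (x - nearest)
        x = x - lr * (grad_nll + grad_pen)
        x = x / np.linalg.norm(x)        # project back onto the unit sphere
    token = int(np.argmin(np.linalg.norm(E - x, axis=1)))
    return x, token

# Hypothetical 4-token vocabulary on the unit circle; the toy model scores
# each output by its similarity to the input embedding.
E = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
W = 3.0 * E
target = 1
x0 = np.array([1.0, 0.2])                # starts near token 0
x_adv, token = attack_embedding(E, W, target, x0)

start = x0 / np.linalg.norm(x0)
logp_before = log_softmax(W @ start)[target]
logp_after = log_softmax(W @ x_adv)[target]
```

With a real LLM the gradient would come from autodiff through the network rather than the closed-form expression used here, and the final snap to the nearest legal token is what turns the optimised embedding back into a discrete input.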

That's a great question, sorry for the delayed reply!

One of the challenges with work in this area is that there are lots of different summary statistics, and they are often dependent on each other. I don't think any of them tell the whole story alone. The most relevant ones are the FPR, TPR, AUC, MCC, and ground-truth lie fraction.
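To make the dependence concrete, here is a small sketch that computes TPR, FPR, MCC and the ground-truth lie fraction from confusion-matrix counts. The counts are made up for illustration and are not results from our experiments; the function name `lie_detector_stats` is also just a placeholder. AUC is left out because it needs per-example scores rather than counts.

```python
import math

def lie_detector_stats(tp, fp, tn, fn):
    """Summary statistics for a binary lie detector (positive = lie)."""
    tpr = tp / (tp + fn)                 # fraction of lies that are flagged
    fpr = fp / (fp + tn)                 # fraction of truths that are flagged
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    lie_fraction = (tp + fn) / (tp + fp + tn + fn)
    return {"tpr": tpr, "fpr": fpr, "mcc": mcc, "lie_fraction": lie_fraction}

# Two hypothetical evaluation sets with identical TPR and FPR but different
# base rates of lying (10% vs. roughly 53%).
rare_lies = lie_detector_stats(tp=80, fp=50, tn=850, fn=20)
common_lies = lie_detector_stats(tp=80, fp=5, tn=85, fn=20)
```

Both sets have the same TPR and FPR, yet the MCC differs because it also depends on the lie fraction, which is one reason no single number tells the whole story.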

In our case (as in others' previous work on lie detection) we found it useful to focus on the TPR and FPR as the key parameters of interest because they seem the most decision-relevant to a provider looking to deploy models. In pa...