All of Cody Rushing's Comments + Replies

Control research exclusively cares about intentional deception/scheming; it does not aim to solve any other failure mode.

(nitpick, doesn't address main point of article) I think this is incomplete. Though control research does indeed care a lot about scheming, control can be used more broadly to handle any worst-case deployment behavior. See Josh Clymer's post about Extending control evaluations to non-scheming threats.

This might not work well for others, but a thing that's worked well for me has been to (basically) block cheap access to it with anticharities. Introducing friction in general is good.

I'm glad to see this. Some initial thoughts about the control safety case:

- I don't get how the "monitoring game" is different from the red-team game? Is it because the blue team is limited to only considering protocols that use just monitoring? Or do you not expect to play a full red-team simulation (this would be worrisome)?

- Option 2 (honeypotting) feels doomed (in isolation). This primarily stems from my belief that making situations that are indistinguishable from deployment is probably hard - the model has control over state in very broad and hard-to-pred

Thanks for writing this and proposing a plan. Coincidentally, I drafted a short take here yesterday explaining one complaint I currently have with the safety conditions of this plan. In short, I suspect the “No AIs improving other AIs” criterion isn't worth including within a safety plan: it i) doesn't address that many more marginal threat models (or does so ineffectively) and ii) would be too unpopular to implement (or, alternatively, too weak to be useful).

I think there is a version of this plan with a lower safety tax, with more focus on reactive policy and the other three criteria, that I would be more excited about.

Another reason why layernorm is weird (and a shameless plug): the final layernorm also contributes to self-repair in language models

Hmm, this transcript just seems like an example of blatant misalignment? I guess I have a definition of scheming that would imply deceptive alignment - for example, for me to classify Sydney as 'obviously scheming', I would need to see examples of Sydney 1) realizing it is in deployment and thus acting 'misaligned' or 2) realizing it is in training and thus acting 'aligned'.

...Could you quote some of the transcripts of Sydney threatening users, like the original Indian transcript where Sydney is manipulating the user into not reporting it to Microsoft, and explain how you think that it is not "pretty obviously scheming"? I personally struggle to see how those are not 'obviously scheming': those are schemes and manipulation, and they are very bluntly obvious (and most definitely "not amazingly good at it"), so they are obviously scheming. Like... given Sydney's context and capabilities as an LLM with only retrieval access and s...

...What Comes Next

Coding got another big leap, both for professionals and amateurs.

Claude is now clearly best. I thought for my own purposes Claude Opus was already best even after GPT-4o, but not for everyone, and it was close. Now it is not so close.

Claude’s market share has always been tiny. Will it start to rapidly expand? To what extent does the market care, when most people didn’t in the past even realize they were using GPT-3.5 instead of GPT-4? With Anthropic not doing major marketing? Presumably adaptation will be slow even if they remain on top, esp

It also seems to have led to at least one claim in a policy memo that advocates of AI safety are being silly because mechanistic interpretability was solved.

Small nitpick (I agree with mostly everything else in the post and am glad you wrote it up). This feels like an unfair criticism - I assume you are referring specifically to the statement in their paper that:

...Although advocates for AI safety guidelines often allude to the "black box" nature of AI models, where the logic behind their conclusions is not transparent, recent advancements in the AI sect

Thanks, I think that these points are helpful and basically fair. Here is one thought, but I don't have any disagreements.

Olah et al. 100% do a good job of noting what remains to be accomplished and that there is a lot more to do. But when people in the public or government get the misconception that mechanistic interpretability has been (or definitely will be) solved, we have to ask where this misconception came from. And I expect that claims like "Sparse autoencoders produce interpretable features for large models" contribute to this.

- Less important, but the grant justification appears to take seriously the idea that making AGI open source is compatible with safety. I might be missing some key insight, but it seems trivially obvious why this is a terrible idea even if you're only concerned with human misuse and not misalignment.

Hmmm, can you point to where you think the grant shows this? I think the following paragraph from the grant seems to indicate otherwise:

...When OpenAI launched, it characterized the nature of the risks – and the most appropriate strategies for reducing them – in a w

I'm glad to hear you got exposure to the Alignment field in SERI MATS! I still think that your writing reads as though your ideas misunderstand core alignment problems, so my best feedback then is to share drafts/discuss your ideas with others familiar with the field. My guess is that it would be preferable for you to find people who are critical of your ideas and try to understand why, since it seems like they are representative of the kinds of people who are downvoting your posts.

(preface: writing and communicating is hard and i'm glad you are trying to improve)

i sampled two:

this post was hard to follow, and didn't seem to be very serious. it also reads as unfamiliar with the basics of the AI Alignment problem (the proposed changes to gpt-4 don't concretely address many/any of the core Alignment concerns for reasons addressed by other commenters)

this post makes multiple (self-proclaimed controversial) claims that seem wrong or are not obvious, but doesn't try to justify them in-depth.

overall, i'm getting the impression tha...

Reverse engineering. Unclear if this is being pushed much anymore. 2022: Anthropic circuits, Interpretability In The Wild, Grokking mod arithmetic

FWIW, I was one of Neel's MATS 4.1 scholars and I would classify 3/4 of Neel's scholars' outputs as reverse engineering some component of LLMs (for completeness, this is the other one, which doesn't nicely fit as 'reverse engineering' imo). I would also say that this is still an active direction of research (lots of ground to cover with MLP neurons, polysemantic heads, and more).

Quick feedback since nobody else has commented - I'm all for AI Safety appearing "not just a bunch of crazy lunatics, but an actually sensible, open and welcoming community."

But the spirit behind this post feels like it is just throwing in the towel, and I very much disapprove of that. I think this is why I and others downvoted too.

Ehh... feels like your base rate of 10% for LW users who are willing to pay for a subscription is too high, especially seeing how the 'free' version would still offer everything I (and presumably others) care about. Generalizing to other platforms, this feels closest to Twitter's situation with Twitter Blue, whose rates appear to be far, far lower: if we are generous and say they have one million subscribers, then out of the 41.5 million monetizable daily active users they currently have, this would suggest a base rate of less than 3%.

ACX is probably a better reference class: https://astralcodexten.substack.com/p/2023-subscription-drive-free-unlocked. In Jan, ACX had 78.2k readers, of which 6.0k were subscribers, for a 7.7% subscription rate.
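For anyone who wants to check the arithmetic behind these two base rates, here is a minimal sketch. The function name `subscription_rate` and the variable names are made up for illustration; the inputs are just the figures quoted above (including the deliberately generous one-million guess for Twitter Blue), not independently verified numbers.

```python
def subscription_rate(subscribers: float, audience: float) -> float:
    """Return paying subscribers as a fraction of the total audience."""
    if audience <= 0:
        raise ValueError("audience must be positive")
    return subscribers / audience


# Figures as quoted in the comment above (assumptions, not verified data).
twitter_blue = subscription_rate(1_000_000, 41_500_000)  # generous subscriber guess
acx = subscription_rate(6_000, 78_200)                   # Jan ACX readers vs. subscribers

print(f"Twitter Blue: {twitter_blue:.1%}")  # prints "Twitter Blue: 2.4%"
print(f"ACX: {acx:.1%}")                    # prints "ACX: 7.7%"
```

Both reference classes land below the proposed 10%, though the two platforms are different enough that neither should be read as more than a rough anchor.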

I'm not sure if you've seen it or not, but here's a relevant clip where he mentions that they aren't training GPT-5. I don't quite know how to update from it. It doesn't seem likely that they paused from a desire to conduct more safety work, but I would also be surprised if somehow they are reaching some sort of performance limit from model size.

However, as Zvi mentions, Sam did say:

“I think we're at the end of the era where it's going to be these, like, giant, giant models...We'll make them better in other ways”

The increased public attention towards AI Safety risk is probably a good thing. But, when stuff like this is getting lumped in with the rest of AI Safety, it feels like the public-facing slow-down-AI movement is going to be a grab-bag of AI Safety, AI Ethics, and AI... privacy(?). As such, I'm afraid that the public discourse will devolve into "Woah-there-Slow-AI" and "GOGOGOGO" tribal warfare; from the track record of American politics, this seems likely - maybe even inevitable?

More importantly, though, what I'm afraid of is that this will translate...

Sheesh. Wild conversation. While I felt Lex was often missing the points Eliezer was saying, I'm glad he gave him the space and time to speak. Unfortunately, it felt like the conversation would keep moving towards reaching a super critical important insight that Eliezer wanted Lex to understand, and then Lex would just change the topic onto something else, and then Eliezer just had to begin building towards a new insight. Regardless, I appreciate that Lex and Eliezer thoroughly engaged with each other; this will probably spark good dialogue and get more pe...

I didn't upvote or downvote this post. Although I do find the spirit of this message interesting, I have a disturbing feeling that arguing to future AI to "preserve humanity for pascals-mugging-type-reasons" trades off X-risk for S-risk. I'm not sure that any of these aforementioned cases encourage AI to maintain lives worth living. I'm not confident that this meaningfully changes S-risk or X-risk positively or negatively, but I'm also not confident that it doesn't.

With the advent of Sydney and now this, I'm becoming more inclined to believe that AI Safety and policies related to it are very close to being in the Overton window of most intellectuals (I wouldn't say the general public, yet). Like, maybe within a year, more than 60% of academic researchers will have heard of AI Safety. I don't feel confident whatsoever about the claim, but it now seems more than ~20% likely. Does this seem to be a reach?

There is a fuzzy line between "let's slow down AI capabilities" and "let's explicitly, adversarially, sabotage AI research". While I am all for the former, I don't support the latter; it creates worlds in which AI safety and capabilities groups are pitted head to head, and capabilities orgs explicitly become more incentivized to ignore safety proposals. These aren't worlds I personally wish to be in.

While I understand the motivation behind this message, I think the actions described in this post cross that fuzzy boundary, and push way too far towards that style of adversarial messaging.

We know, from like a bunch of internal documents, that the New York Times has been operating for the last two or three years on a, like, grand [narrative structure], where there's a number of head editors who are like, "Over this quarter, over this current period, we want to write lots of articles, that, like, make this point..."

Can someone point me to an article discussing this, or the documents themselves? While this wouldn't be entirely surprising to me, I'm trying to find more data to back this claim, and I can't seem to find anything significant.

It feels strange hearing Sam say that their products are released whenever they feel as though 'society is ready.' Perhaps they can afford to do that now, but I cannot help but think that market dynamics will inevitably create strong incentives for race conditions very quickly (perhaps it is already happening) which will make following this approach pretty hard. I know he later says that he hopes for competition in the AI-space until the point of AGI, but I don't see how he balances the knowledge of extreme competition with the hope that society is prepared...

Let's say Charlotte was a much more advanced LLM (almost AGI-like, even). Do you believe that if you had known that Charlotte was extraordinarily capable, you might have been more guarded about recognizing it for its ability to understand and manipulate human psychology, and thus been less susceptible to it potentially doing so?

I find that a small part of me still thinks that "oh this sort of thing could never happen to me, since I can learn from others that AGI and LLMs can make you emotionally vulnerable, and thus not fall into a trap!" But perhaps this is just wishful thinking that would crumble once I interact with more and more advanced LLMs.

If she was an AGI, yes, I would be more guarded, but she would also be more skilled, which I believe would generously compensate for me being on guard. Realizing I had a wrong perception about estimating the ability of a simple LLM for psychological manipulation and creating emotional dependency tells me that I should also adjust my estimates I would have about more capable systems way upward.

I'm trying to engage with your criticism faithfully, but I can't help but get the feeling that a lot of your critiques here seem to be a form of "you guys are weird": you guys' privacy norms are weird, your vocabulary is weird, you present yourselves as weird, etc. And while I may agree that sometimes it feels as if LessWrongers are out-of-touch with reality at points, this criticism, coupled with some of the other object-level disagreements you were making, seems to overlook the many benefits that LessWrong provides; I can personally attest to the fac...

Humans can often teach themselves to be better at a skill through practice, even without a teacher or ground truth

Definitely, but I currently feel that the vast majority of human learning comes with a ground truth to reinforce good habits. I think this is why I'm surprised this works as much as it does: it kinda feels like letting an elementary school kid teach themself math by practicing certain skills they feel confident in without any regard to if that skill even is "mathematically correct".

Sure, these skills are probably on the right track toward...

I don't quite understand the perspective behind someone 'owning' a specific space. Do airlines specify that when you purchase a ticket, you are entitled to the chair + the surrounding space (in whatever ambiguous way that may mean)? If not, it seems to me that purchasing a ticket pays for a seat and your right to sit down on it, and everything else is complimentary.

I'm having trouble understanding your first point on wanting to 'catch up' to other thinkers. Was your primary message advocating against feeling as if you are 'in debt' until you improve your rationality skills? If so, I can understand that.

But if that is the case, I don't understand the relevance of the lack of a "rationality tech-tree" - sure, there may not be clearly defined pathways to learn rationality. Even so, I think it's fair to say that I perceive some people on this blog to currently be better thinkers than I, and that I would like to catch up to their thinking abilities so that I can effectively contribute to many discussions. Would you advocate against that mindset as well?

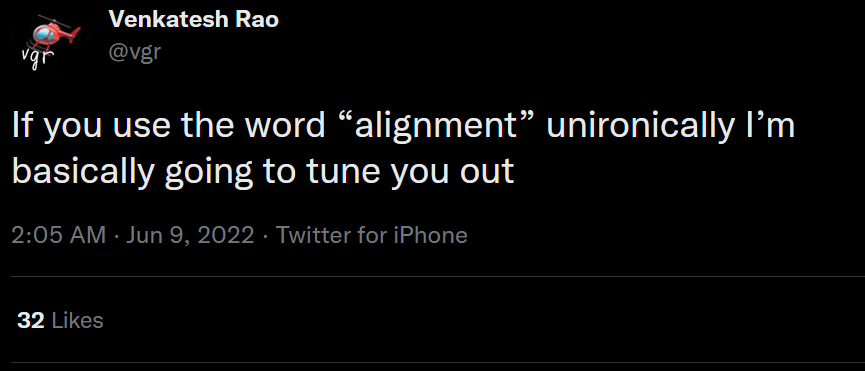

I was surprised by this tweet and so I looked it up. I read a bit further and ran into this; I guess I'm kind of surprised to see a concern as fundamental as alignment, whether or not you agree it is a major issue, be so... is polarizing the right word? Is this an issue we can expect to see grow as AI safety (hopefully) becomes more mainstream? "LW extended cinematic universe" culture getting an increasingly bad reputation seems like it would be extremely devastating for alignment goals in general.

I have a few related questions pertaining to AGI timelines. I've been under the general impression that when it comes to timelines on AGI and doom, Eliezer's predictions are based on a belief in extraordinarily fast AI development, and thus a close AGI arrival date, which I currently take to mean a quicker date of doom. I have three questions related to this matter:

- For those who currently believe that AGI (using whatever definition to describe AGI as you see fit) will be arriving very soon - which, if I'm not mistaken, is what Eliezer is predicting - appro

[Shorter version, but one I don't think is as compelling]

Timmy is my personal AI Chef, and he is a pretty darn good one, too. Of course, despite his amazing cooking abilities, I know he's not perfect - that's why there's that shining red emergency shut-off button on his abdomen.

But today, Timmy became my worst nightmare. I don’t know why he thought it would be okay to do this, but he hacked into my internet to look up online recipes. I raced to press his shut-off button, but he wouldn’t let me, blocking it behind a cast iron he held with a stone-cold...

[Intended for Policymakers with the focus of simply allowing for them to be aware of the existence of AI as a threat to be taken seriously through an emotional appeal; Perhaps this could work for Tech executives, too.

I know this entry doesn't follow what a traditional paragraph is, but I like its content. Also it's a tad bit long, so I'll attach a separate comment under this one which is shorter, but I don't think it's as impactful]

Timmy is my personal AI Chef, and he is a pretty darn good one, too.

You pick a cuisine, and he mentally simulates himsel...

Meta comment: Would someone mind explaining to me why this question is being received poorly (negative karma right now)? It seemed like a very honest question, and while the answer may be obvious to some, I doubt it was to Sergio. Ic's response was definitely unnecessarily aggressive/rude, and it appears that most people would agree with me there. But many people also downvoted the question itself, too, and that doesn't make sense to me; shouldn't questions like these be encouraged?

I don't know what to think of your first three points but it seems like your fourth point is your weakest by far. As opposed to not needing to, our 'not taking every atom on earth to make serotonin machines' seems to be a combination of:

- our inability to do so

- our value systems which make us value human and non-human life forms.

Superintelligent agents would not only have the ability to create plans to utilize every atom to their benefit, but they likely would have different value systems. In the case of the traditional paperclip optimizer, it certainly would not hesitate to kill off all life in its pursuit of optimization.

I'm not very well-versed in history so I would appreciate some thoughts from people here who may know more than I. Two questions:

- While it seems to be the general consensus that Putin's invasion is largely founded on his 'unfair' desire to reestablish the glory of the Soviet Union, a few people I know argue that much of this invasion is more the consequence of other nations' failures. Primarily, they focus on Ukraine's failure to respect the Minsk agreements, and NATO's expansion eastwards despite their implications/direct statements (not sure which one, I'

I really admire your patience to re-learn math entirely from the extremely fundamental levels onwards. I've had a similar situation with Computer Science for the longest time where I would have a large breadth of understanding of Comp Sci topics, but I didn't feel as if I had a deep, intuitive understanding of all the topics and how they related to each other. All the courses I found online seemed disjointed and separate from each other, and I would often start them and stop halfway through when I felt as if they were going nowhere. It's even worse w...

Awesome recommendations, I really appreciated them (especially the one on game theory, that was a lot of fun to play through). I would like to also suggest the Replacing Guilt series by Nate Soares for those who haven't seen it on his blog or on the EA forum, a fantastic series that I would highly recommend people check out.

Hmm, when I imagine "Scheming AIs that are not easy to shut down with concerted nation-state effort, are attacking you with bioweapons, but are weak enough such that you can bargain/negotiate with them" I can imagine this outcome inspiring a lot more caution relative to many other worlds where control techniques work well but we can't get any convincing demos/evidence to inspire caution (especially if control techniques inspire overconfidence).

But the 'is currently working on becoming more powerful' part of your statement does carry a lot of weight.