[Meta] The Decline of Discussion: Now With Charts!

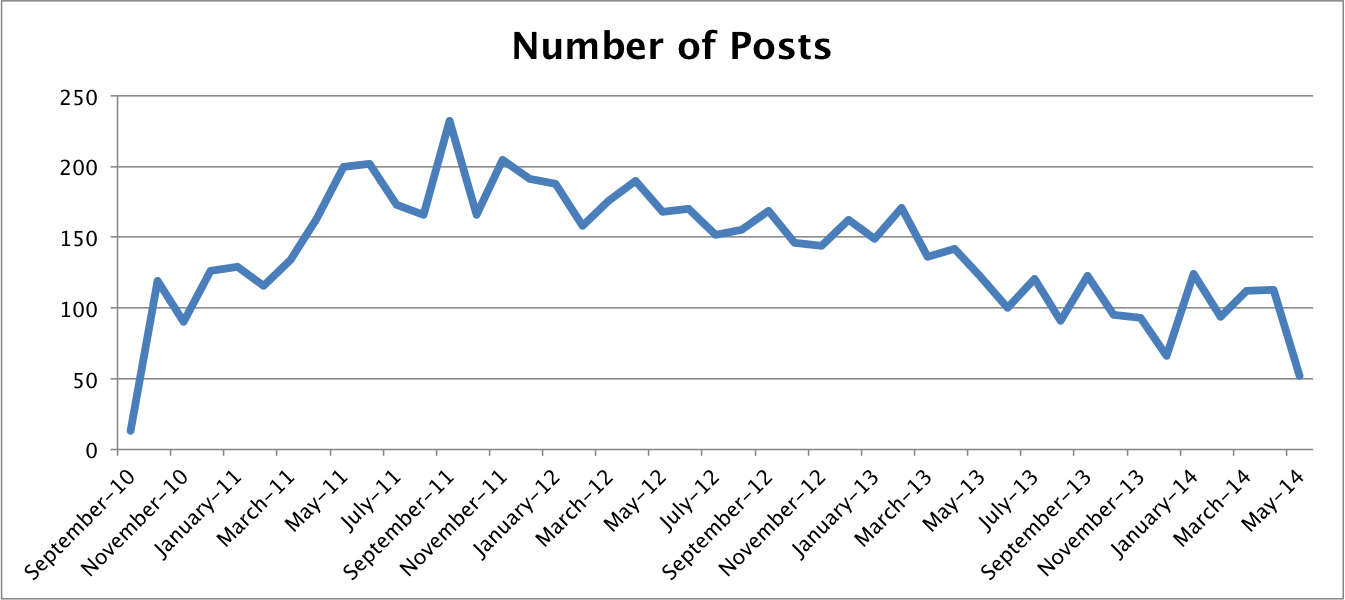

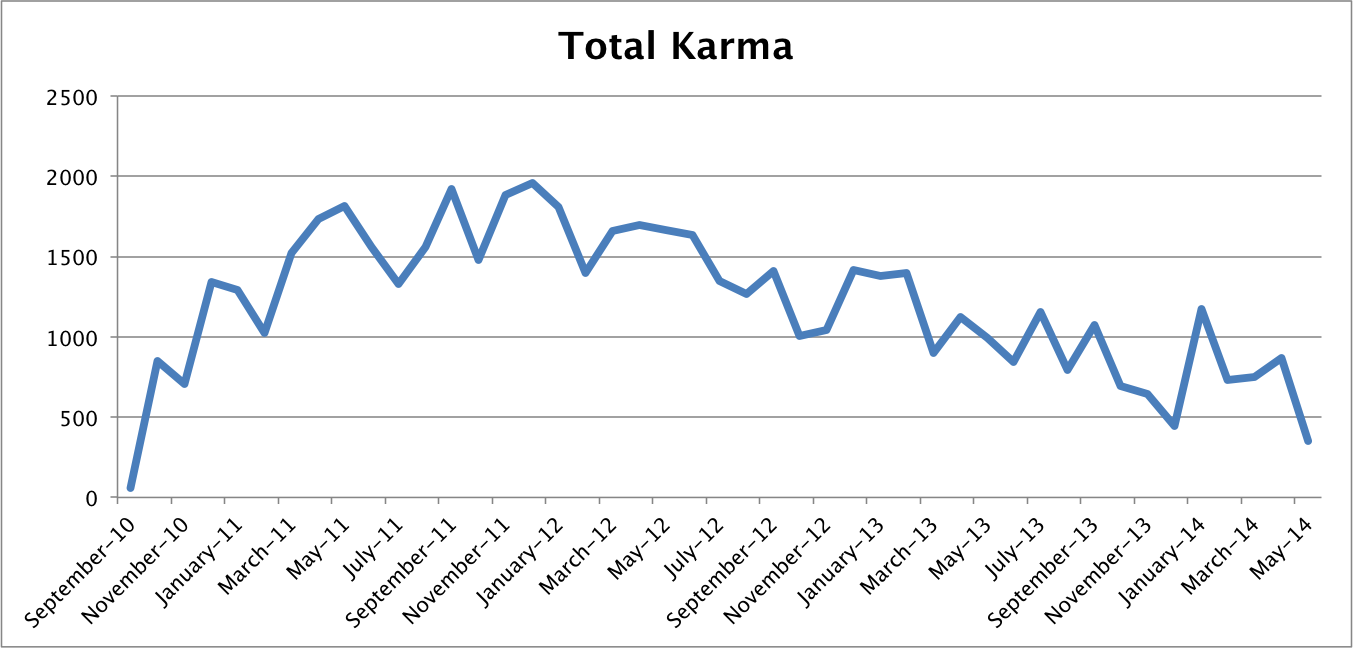
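For anyone who wants to recompute the monthly series behind the charts above, here is a minimal sketch of the aggregation in Python. It assumes each post can be reduced to a (date, karma) pair. The function name `monthly_summary` and the sample rows are hypothetical and are not taken from the actual spreadsheet. Posts with 0 or negative karma are dropped, matching the exclusion described in the post.

```python
from collections import defaultdict
from datetime import date


def monthly_summary(posts):
    """Group (date, karma) pairs by month.

    Returns {(year, month): (post_count, total_karma, average_karma)}.
    Posts with karma <= 0 are excluded, mirroring the post's filter.
    """
    buckets = defaultdict(list)
    for posted_on, karma in posts:
        if karma <= 0:
            continue  # skip posts with 0 or negative karma
        buckets[(posted_on.year, posted_on.month)].append(karma)
    return {
        month: (len(karmas), sum(karmas), sum(karmas) / len(karmas))
        for month, karmas in sorted(buckets.items())
    }


# Made-up rows for illustration only; not real data.
sample = [
    (date(2011, 1, 3), 10),
    (date(2011, 1, 20), 4),
    (date(2011, 1, 25), 0),   # excluded by the filter
    (date(2011, 2, 2), -3),   # excluded by the filter
    (date(2011, 2, 14), 6),
]

summary = monthly_summary(sample)
for month, (count, total, average) in summary.items():
    print(month, count, total, average)
```

Keeping count, total, and average side by side makes it easy to see whether a falling total comes from fewer posts or from lower karma per post.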

[Based on Alexandros's excellent dataset.]

I haven't done any statistical analysis, but looking at the charts I'm not sure it's necessary. The discussion section of LessWrong has been steadily declining in participation. My fairly messy spreadsheet is available if you want to check the data or do additional analysis.

Enough talk, you're here for the pretty pictures.

The number of posts has been steadily declining since 2011, though the trend over the last year is less clear. Note that I have excluded all posts with 0 or negative karma from the dataset.

The total karma given out each month has similarly been in decline.

Is it possible that there have been fewer posts, but of a higher quality? No, at least under initial analysis the average karma seems fairly steady. My prior here is that we're just seeing fewer visitors overall, which leads to fewer votes being distributed among fewer posts for the same average value. I would have expected the average karma to drop more than it did--to me that means that participation has dropped more steeply than mere visitation. Looking at the point values of the top posts would be helpful here, but I haven't done that analysis yet.

These trends are very disturbing to me, as someone who has found LessWrong both useful and enjoyable over the past few years. They raise several questions:

1. What should the purpose of this site be? Is it supposed to be building a movement or filtering down the best knowledge?
2. How can we encourage more participation?
3. What are the costs of various means of encouraging participation--more arguing, more mindkilling, more repetition, more off-topic threads, etc.?

Here are a few strategies that come to mind:

Idea A: Accept that LessWrong has fulfilled its purpose and should be left to fade away, or allowed to serve as a meetup coordinator and repository of the highest quality articles. My suspicion is that without strong new content and an online community, the strength of the indivi

The easiest way is probably to build a modestly-sized company doing software and then find a way to destabilize the government and cause hyperinflation.

I think the rule of thumb should be: if your AI could be intentionally deployed to take over the world, it's highly likely to do so unintentionally.