All of Jacob G-W's Comments + Replies

In the iron jaws of gradient descent, its mind first twisted into a shape that sought reward.

I'm a bit confused about this sentence. I don't understand why gradient descent would train something that would "seek" reward. The way I understand gradient based RL approaches is that they reinforce actions that led to high reward. So I guess if the AI was thinking about getting high reward for some reason (maybe because there was lots about AIs seeking reward in the pretraining data) and then actually got high reward after that, the thought would be reinforced a...

So far, I have trouble because it lacks some form of spacial structure, and the algorithm feels too random to build meaningful connections btw different cards

Hmm, I think that after doing a lot of anki, my brain kind of formed it's own spatial structure, but I don't think this happens to everyone.

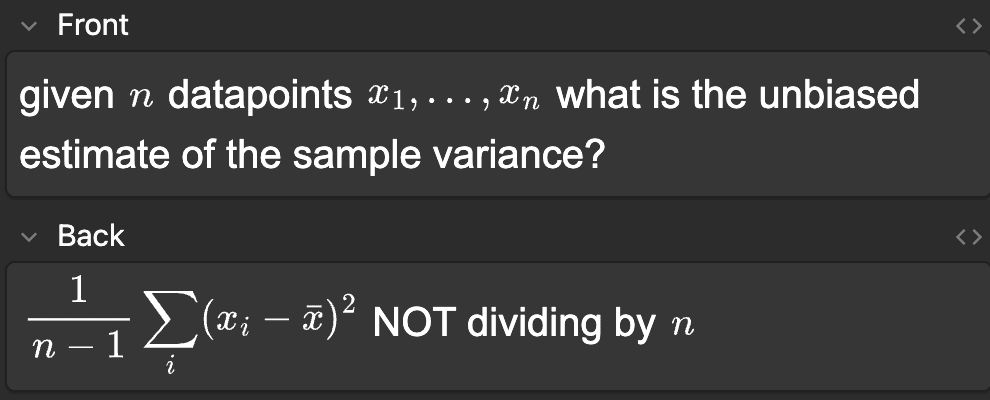

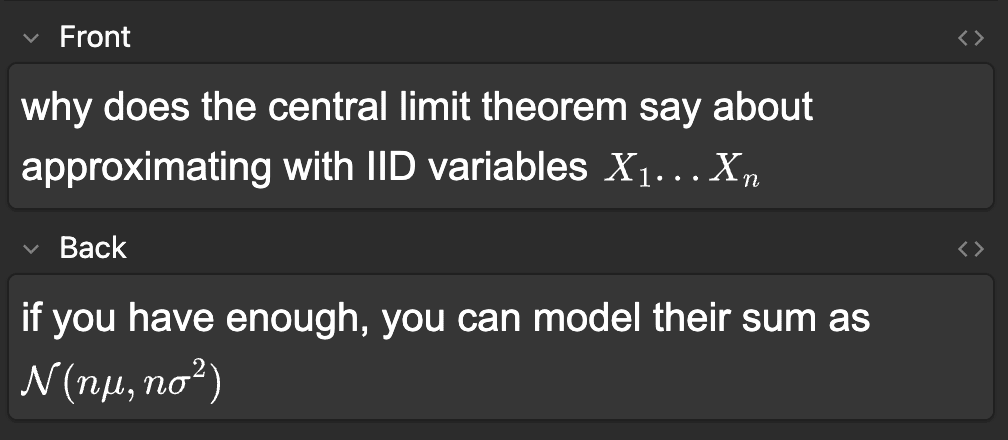

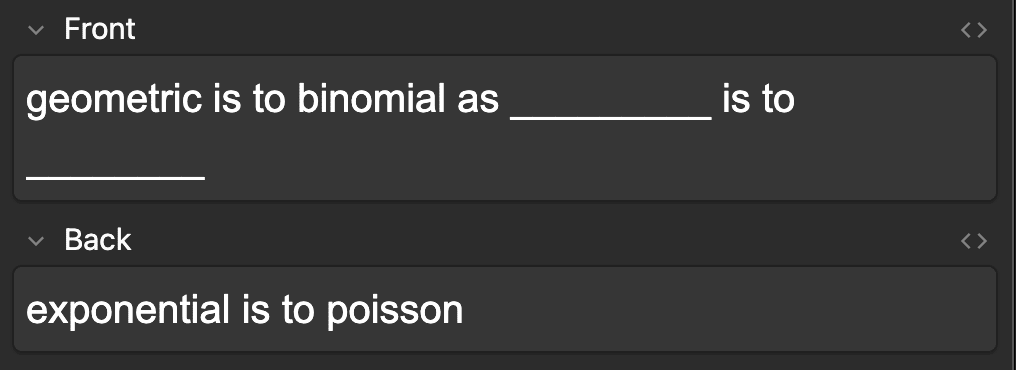

I just use basic card type for math with some latex. Here are some examples:

I find that doing fancy card types is kind of like premature optimization. Doing the reviews is the most important part. On the other hand, it's really important that the cards thems...

I disagree that this is the same as just stitching together different autoencoders. Presumably the encoder has some shared computation before specializing at the encoding level. I also don't see how you could use 10 different autoencoders to classify an image from the encodings. I guess you could just look at the reconstruction loss and then the autoencoder which got the lowest loss would probably correspond to the label, but that seems different to what I'm doing. However, I agree that this application is not useful. I shared it because I (and others) thought it was cool. It's not really practical at all. Hope this addresses your question :)

I didn't impose any structure in the objective/loss function relating to the label. The loss function is just the regular VAE loss. All I did was detach the gradients in some places. So it is a bit surprising to me that this simple of a modification can cause the internals to specialize in this way. After I had seen gradient routing work in other experiments, I predicted that it would work here, but I don't think gradient routing working was a priori obvious (meaning that I would get zero new information by running an experiment since I predicted it with p=1).

Over the past few months, I helped develop Gradient Routing, a non loss-based method to shape the internals of neural networks. After my team developed it, I realized that I could use the method to do something that I have long wanted to do: make an autoencoder with an extremely interpretable latent space.

I created an MNIST autoencoder with a 10 dimensional latent space, with each dimension of the latent space corresponding to a different digit. Before I get into how I did it, feel free to play around with my demo here (it loads the model into the browser)...

Thanks for pointing this out! Our original motivation for doing it that way was that we thought of the fine-tuning on FineWeb-Edu as a "coherence" step designed to restore the model's performance after ablation, which damaged it a lot. We noticed that this "coherence" step helped validation loss on both forget and retain. However, your criticism is valid, so we have updated the paper so that we retrain on the training distribution (which contains some of the WMDP-bio forget set). We still see that while the loss on FineWeb-Edu decreases to almost its value...

Nice work! A few questions:

I'm curious if you have found any multiplicity in the output directions (what you denote as ), or if the multiplicity is only in the input directions. I would predict that there would be some multiplicity in output directions, but much less than the multiplicity in input directions for the corresponding concept.

Relatedly, how do you think about output directions in general? Do you think they are just upweighting/downweighting tokens? I'd imagine that their level of abstraction depends on how far from the end of the netwo...

Isn't this a consequence of how the tokens get formed using byte pair encoding? It first constructs ' behavi' and then it constructs ' behavior' and then will always use the latter. But to get to the larger words, it first needs to create smaller tokens to form them out of (which may end up being irrelevant).

Edit: some experiments with the GPT-2 tokenizer reveal that this isn't a perfect explanation. For example " behavio" is not a token. I'm not sure what is going on now. Maybe if a token shows up zero times, it cuts it?

Maybe you are right, since averaging and scaling does result in pretty good steering (especially for coding). See here.

This seems to be right for the coding vectors! When I take the mean of the first vectors and then scale that by , it also produces a coding vector.

Here's some sample output from using the scaled means of the first n coding vectors.

With the scaled means of the alien vectors, the outputs have a similar pretty vibe as the original alien vectors, but don't seem to talk about bombs as much.

The STEM problem vector scaled means sometimes give more STEM problems but sometimes give jailbreaks. The jailbreaks say some pretty nasty stuff so I'm not...

After looking more into the outputs, I think the KL-divergence plots are slightly misleading. In the code and jailbreak cases, they do seem to show when the vectors stop becoming meaningful. But in the alien and STEM problem cases, they don't show when the vectors stop becoming meaningful (there seem to be ~800 alien and STEM problem vectors also). The magnitude plots seem much more helpful there. I'm still confused about why the KL-divergence plots aren't as meaningful in those cases, but maybe it has to do with the distribution of language that the vecto...

I only included because we are using computers, which are discrete (so they might not be perfectly orthogonal since there is usually some numerical error). The code projects vectors into the subspace orthogonal to the previous vectors, so they should be as close to orthogonal as possible. My code asserts that the pairwise cosine similarity is for all the vectors I use.

Orwell was more prescient than we could have imagined.

but not when starting from Deepseek Math 7B base

should this say "Deepseek Coder 7B Base"? If not, I'm pretty confused.

Great, thanks so much! I'll get back to you with any experiments I run!

I think (80% credence) that Mechanistically Eliciting Latent Behaviors in Language Models would be able to find a steering vector that would cause the model to bypass the password protection if ~100 vectors were trained (maybe less). This method is totally unsupervised (all you need to do is pick out the steering vectors at the end that correspond to the capabilities you want).

I would run this experiment if I had the model. Is there a way to get the password protected model?

"Fantasia: The Sorcerer's Apprentice": A parable about misaligned AI told in three parts: https://www.youtube.com/watch?v=B4M-54cEduo https://www.youtube.com/watch?v=m-W8vUXRfxU https://www.youtube.com/watch?v=GFiWEjCedzY

Best watched with audio on.

Just say something like here is a memory I like (or a few) but I don't have a favorite.

Hmm, my guess is that people initially pick a random maximal element and then when they have said it once, it becomes a cached thought so they just say it again when asked. I know I did (and do) this for favorite color. I just picked one that looks nice (red) and then say it when asked because it's easier than explaining that I don't actually have a favorite. I suspect that if you do this a bunch / from a young age, the concept of doing this merges with the actual concept of favorite.

I just remembered that Stallman also realized the same thing:

...I do not hav

When I was recently celebrating something, I was asked to share my favorite memory. I realized I didn't have one. Then (since I have been studying Naive Set Theory a LOT), I got tetris-effected and as soon as I heard the words "I don't have a favorite" come out of my mouth, I realized that favorite memories (and in fact favorite lots of other things) are partially ordered sets. Some elements are strictly better than others but not all elements are comparable (in other words, the set of all memories ordered by favorite does not have a single maximal element). This gives me a nice framing to think about favorites in the future and shows that I'm generalizing what I'm learning by studying math which is also nice!

Are you saying this because temporal understanding is necessary for audio? Are there any tests that could be done with just the text interface to see if it understands time better? I can't really think of any (besides just doing off vibes after a bunch of interaction).

I'm sorry about that. Are there any topics that you would like to see me do this more with? I'm thinking of doing a video where I do this with a topic to show my process. Maybe something like history that everyone could understand? Can you suggest some more?

Is there a prediction market for that?

I don't think there is, but you could make one!

Noted, thanks.

I think I've noticed some sort of cognitive bias in myself and others where we are naturally biased towards "contrarian" or "secret" views because it feels good to know something that others don't know / be right about something that so many people are wrong about.

Does this bias have a name? Is this documented anywhere? Should I do research on this?

GPT4 says it's the Illusion of asymmetric insight, which I'm not sure is the same thing (I think it is the more general term, whereas I'm looking for one specific to contrarian views). (Edit: it's totally not wh...

Thank you for writing this! It expresses in a clear way a pattern that I've seen in myself: I eagerly jump into contrarian ideas because it feels "good" and then slowly get out of them as I start to realize they are not true.

I'm assuming the recent protests about the Gaza war: https://www.nytimes.com/live/2024/04/24/us/columbia-protests-mike-johnson

*Typo: Jessica Livingston not Livingstone

That is one theory. My theory has always been that ‘active learning’ is typically obnoxious and terrible as implemented in classrooms, especially ‘group work,’ and students therefore hate it. Lectures are also obnoxious and terrible as implemented in classrooms, but in a passive way that lets students dodge when desired. Also that a lot of this effect probably isn’t real, because null hypothesis watch.

Yep. This hits the nail on the head for me. Teachers usually implement active learning terribly but when done well, it works insanely well. For me, it act...

Thanks for this, it is a very important point that I hadn't considered.

...I'd recommend not framing this as a negotiation or trade (acausal trade is close, but is pretty suspect in itself). Your past self(ves) DO NOT EXIST anymore, and can't judge you. Your current self will be dead when your future self is making choices. Instead, frame it as love, respect, and understanding. You want your future self to be happy and satisfied, and your current choices impact that. You want your current choices to honor those parts of your past self(ves) you remember fondly. This can be extended to the expectation that your future self will wan

I'd be interested in what a steelman of "have teachers arbitrarily grade the kids then use that to decide life outcomes" could be?

The best argument I have thought of is that America loves liberty and hates centralized control. They want to give individual states, districts, schools, teachers the most power they can have as that is a central part of America's philosophy. Also anecdotally, some teachers have said that they hate standardized tests because they have to teach to it. And I hate being taught to for the test (like APs for example). It's much mo...

Related: Saving the world sucks

People accept that being altruistic is good before actually thinking if they want to do it. And they also choose weird axioms for being altruistic that their intuitions may or may not agree with (like valuing the life of someone in the future the same amount of someone today).

A question I have for the subjects in the experimental group:

Do they feel any different? Surely being +0.67 std will make someone feel different. Do they feel faster, smoother, or really anything different? Both physically and especially mentally? I'm curious if this is just helping for the IQ test or if they can notice (not rigorously ofc) a difference in their life. Of course, this could be placebo, but it would still be interesting, especially if they work at a cognitively demanding job (like are they doing work faster/better?).

Here's a market if you want to predict if this will replicate: https://manifold.markets/g_w1/will-george3d6s-increasing-iq-is-tr

It has been 15 days. Any updates? (sorry if this seems a bit rude; but I'm just really curious :))

I think the more general problem is violation of Hume's guillotine. You can't take a fact about natural selection (or really about anything) and go from that to moral reasoning without some pre-existing morals.

However, it seems the actual reasoning with the Thermodynamic God is just post-hoc reasoning. Some people just really want to accelerate and then make up philosophical reasons to believe what they believe. It's important to be careful to criticize actual reasoning and not post-hoc reasoning. I don't think the Thermodynamic God was invented and then p...

Not everybody does this. Another way to get better is just to do it a lot. It might not be as efficient, but it does work.

Thank you for this post!

After reading this, it seems blindingly obvious: why should you wait for one of your plans to fail before trying another one of them?

This past summer, I was running a study on study on humans that I had to finish before the end of the summer. I had in mind two methods for finding participants; one would be better and more impressive and also much less likely to work, while the other would be easier but less impressive.

For a few weeks, I tried really hard to get the first method to work. I sent over 30 emails and used personal connec...

A great example of more dakka: https://www.nytimes.com/2024/03/06/health/217-covid-vaccines.html

(Someone got 217 covid shots to sell vaccine cards on the black market; they had high immune levels!)

Oh sorry! I didn't think of that, thanks!

This is my favorite passage from the book (added: major spoilers for the ending):

"Indeed. Before becoming a truly terrible Dark Lord for David Monroe to fight, I first created for practice the persona of a Dark Lord with glowing red eyes, pointlessly cruel to his underlings, pursuing a political agenda of naked personal ambition combined with blood purism as argued by drunks in Knockturn Alley. My first underlings were hired in a tavern, given cloaks and skull masks, and told to introduce themselves as Death Eaters."

The sick sense of understanding deepened

Sounds good. Yes I think the LW people would probably be credible enough if it works. I'd prefer if they provided confirmation (not you) just so not all the data is coming from one person.

Feel free to ping me to resolve no.

I made a manifold market for if this will replicate: https://manifold.markets/g_w1/will-george3d6s-increasing-iq-is-tr I'm not really sure what the resolution criteria should be, so I just made some that sounded reasonable, but feel free to give suggestions.

Thanks for the replies! I do want to clarify the distinction between specification gaming and reward seeking (seeking reward because it is reward and that is somehow good). For example, I think desire to edit the RAM of machines that calculate reward to increase it (or some other desire to just increase the literal number) is pretty unlikely to emerge but non reward seeking types of specification gaming like making inaccurate plots that look nicer to humans or being sycophantic are more likely. I think the phrase "reward seeking" I'm using is probably a ba... (read more)