All of jasoncrawford's Comments + Replies

Yes, they would not be made from mirror components!

Synthetic cells aren't inherently dangerous if they're not mirror cells (and aren't dangerous pathogens of course).

Failure to detect other life in the universe is only really evidence against advanced intelligent civilizations, I think. The universe could easily be absolutely teeming with bacterial life.

Re “take steps to stop it”, I was replying to @Purplehermann

The asymmetric advantage of bacteria is that they can invade your body but not vice versa.

I think until recently, most scientists assumed that mirror bacteria would (a) not be able to replicate well in an environment without many matching-chirality nutrients, and/or (b) be caught by the immune system. It's only recently that a group of scientists got more concerned and did a more in-depth investigation of the question.

Yes, antibodies could adapt to mirror pathogens. The concern is that the system which generates antibodies wouldn't be strongly triggered. The Science article says: “For example, experiments show that mirror proteins resist cleavage into peptides for antigen presentation and do not reliably trigger important adaptive immune responses such as the production of antibodies (11, 12).”

Given that mirror life hasn't arisen independently on Earth in ~4B years, I don't think we need to take any steps to stop it from doing so in the future. Either abiogenesis is extremely rare, or when new life does arise naturally, it is so weak that it is outcompeted by more evolved life.

I agree that this is a risk from any extraterrestrial life we might encounter.

I appreciate that! Would like to get back to them at some point…

I don't intend to write something anodyne, and don't think I am doing so. Let me know what you think once I'm at least a few chapters in.

Thanks, added a more prominent link

I don't think that's right. The world now is much better than the world when it was smaller, and I think that is closely related to population growth. So I think it is actually possible to conclude that more people are better.

Software/internet gives us much better ability to find.

Re competitors, the idea is that we're not all competing for a single prize; we're being sorted into niches. If there is 1 songwriter and 1 lyricist, they kind of have to work together. If there are 100 of each, then they can match with each other according to style and taste. That's not 100x competition, it's just much better matching.

That is a good point. Still, the fact that individual companies, for instance, develop layers of bureaucracy is not an argument against having a large economy. It's an argument for having a lot of companies of different sizes, and in particular for making sure that market entry doesn't become too difficult and that competition is always possible. And maybe at the governance level it is an argument for many smaller nations rather than one world government.

I feel that you're only paying attention to the “more geniuses and researchers” part and ignoring the parts about market size, better matching, more niches?

Also, “focus on it at the exclusion of everything else” is a strawman; I'm not advocating that, of course. Certainly increasing intelligence would be good (although we don't know how to do that yet!). Better education would be great and I am a strong advocate of that. Same for better scientific institutions, etc.

I think the positive externalities of one genius are much greater than the negative externalities of one idiot or jerk. A genius can create a breakthrough discovery or invention that elevates the entire human race. Hard for an idiot or jerk to do damage of equivalent magnitude.

Maybe a better argument is “what about more Hitlers or Stalins?” But I still think that looking at the overall history of humanity, it seems that the positives of people outweigh the negatives, or we wouldn't even be here now.

First, this seems to be arguing against a strawman. No one is advocating literally infinite growth forever, which is obviously impossible.

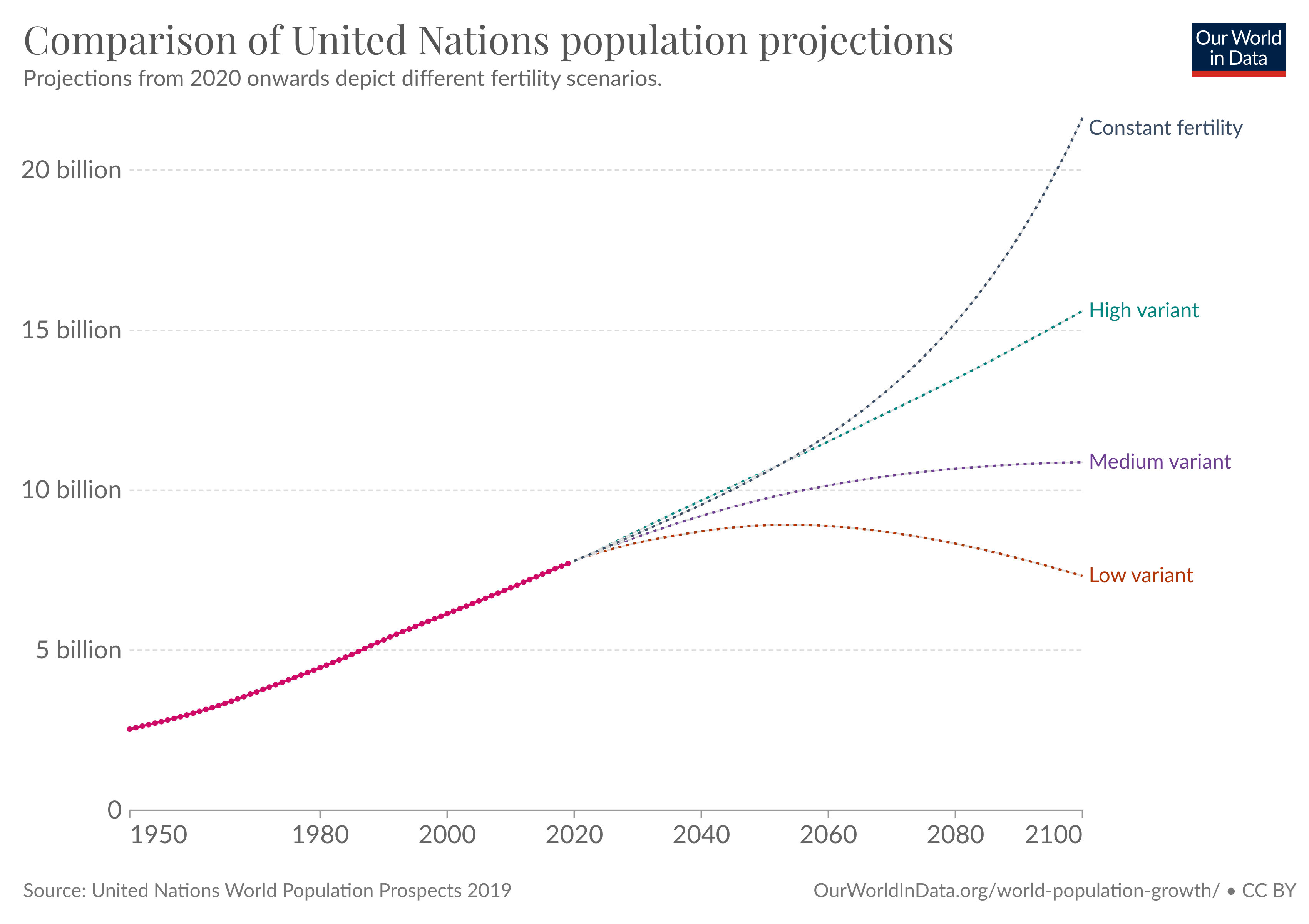

Second, the current reality is not exponential population growth. It is a decelerating population. The UN projections show world population likely leveling off around 10 or 11 billion people in in this century, and possibly even declining:

Even if we were to get back on an exponential population growth curve, the limits seem to me to be many orders of magnitude away. I don't see why we would worry about them until we get mu...

Investigators get fired when they aren't being productive. This does happen. The difference in the block model is that whether someone is being productive is determined by their manager, with input from their peers.

Who says they would be MBAs? The best science managers are highly technical themselves and started out as scientists. It's just that their career from there evolves more in a management direction.

Once you eliminate the requirement that the manager be a practicing scientist, the roles will become filled with people who like managing, and are good at politics, rather than doing science. I’m surprised this is controversial. There is a reason the chair of academic departments is almost always a rotating prof in the department, rather than a permanent administrator. (Note: “was once a professor” is not considered sufficient to prevent this. Rather, profs understand that serving as chair for a couple years before rotating back into research is an unpl...

I really don't think a group of, say, university professors could join in such a contract. For one, I'm not sure their universities would let them, especially if they weren't all at the same university. For another, the granting organizations (e.g., NIH) put a lot of restrictions on the grant money. You can't redistribute it to other labs.

Also, the grants are still going to be small ones to fund a single lab, not large ones that could fund hundreds of researchers. If everyone still has to seek grants you haven't really solved the problem, even if they are spreading risk/reward somehow.

Yes, but those researchers are typically grad students. To become a professor, get tenure, get your own grants, etc., you need to go run your own lab. At least that is my understanding of the system.

Oh, yes, there is that problem too.

There is certainly no moral equivalence between the two of them; SBF was a fraud and Toner was (from what I can tell) acting honestly according to her convictions. Sorry if I didn't make that clear enough.

But I disagree about destroying OpenAI—that would have been a massive destruction of value and very far from justified IMO.

Did Sam threaten to take the team with him, or did the team threaten to quit and follow him? From what I saw it looked like the latter.

I was basing my (uncertain) interpretation on a number of sources, and I only linked to one, sorry.

In particular, the only substantive board disagreement that I saw was over Toner's report that was critical of OpenAI for releasing models too quickly, and Sam being upset over it.

Thanks. I was quoting Semafor, but on a closer reading of Tallinn's quote I agree that they might have been misinterpreting him. (Has he commented on this, does anyone know?)

Yes, but not all of it is well-understood as problem-solving ahead of time:

It feels strained to say that Henry Ford solved the problem that people couldn’t move over land faster than horses. Or that Apple solved the problem that people couldn’t carry the internet in their pockets. Or that telephones solved the problem that people couldn’t communicate in real time without being in the same room. The list of technologies that didn’t solve a problem except in retrospect is long.

Thank you! That means a lot to me, especially since these posts are never the ones that go viral, so it's good to know that someone appreciates them.

I haven't investigated this, but there is a long essay from Eli Dourado here that is bullish on the concept.

I don't think this is exactly correct: I'm pretty sure that many cities including London and Paris had sewer systems much earlier than that, although they modernized them / made major overhauls in the 19th century. (Anyway, kind of beside the point of the linked thread)

Update: I’m already planning to give brief remarks at a few events coming up very soon:

- Thurs, Aug 24: Recur Club founders meetup in Indiranagar. Register/apply here

- Sun, Aug 27: LessWrong / Astral Codex Ten meetup at Matteo Coffea

If you’re in/near Bangalore, hope to see you there!

Maybe “general truths” is still too broad. Let's approach this a different way. I submit that science is the best and only method for establishing a certain class of truths. I'm not totally sure how to describe that class. They are general truths about the world, but maybe it's narrower than that. But I'm pretty sure there is such a class. Do you agree? How would you describe the type of knowledge that science (and only science) can get us?

Good point. Maybe I should say it is the only method for finding out general truths about the world. It's not the only way to answer specific, narrow, practical questions like whether a particular building or road can be built.

Thanks Zac. I don't have an opinion on this myself but I'll add your comment to this digest and mention it in the next one as well.

Counterpoint: The American South very quickly adopted one of the classic inventions of the Industrial Revolution, the cotton gin. And it has been proposed that this actually helped entrench slavery in the South.

Yes, see my reply to Vaniver above.

I think everything you say about the printing press is correct and important, I would just caution against overfocusing on the printing press as the one pivotal cause. I think it was part of a broader trend.

Yes, the famous Needham question. It is tougher to answer. Mokyr offers some thoughts in A Culture of Growth. I'm sure there are other hypotheses but I don't have pointers right now.

You're right, that was my mistake, I wasn't reading it carefully enough and I summarized it incorrectly. Fixed now, thanks.

“You can’t deduce anything about the validity of someone’s position from their willingness or unwillingness to debate it”

The article has a detailed analysis that comes up with a much lower cost. If you think that analysis goes wrong, I'd be curious to understand exactly where?

Trust is important, but… the Church banning cousin-marriage as the primary cause of a high-trust society? I find it hard to believe. No time now to elaborate on my reasons, but if people are really interested maybe I will write something up later.

I think in Allen's book there is both a generic claim of high wages, and some specific analyses of technologies like the spinning jenny and whether it would have paid to adopt them.

The builders' wages are part of the generic claim, because there was no building-related technology that was analyzed.

The spinners' wages might be related to the spinning jenny ROI calculations, but I haven't gone deep enough on the analysis to understand how the paper that was linked might affect those calculations.

Maybe! Or maybe you could interest him in a printing press, or a sextant, or at least a plow? That is sort of my point in the second-to-last paragraph (about shape/direction vs. rate).

That is one of many hypotheses. (I haven't studied all of them yet, but I'd be surprised if I ended up ranking that even in the top three causes.)

It is a spike in the death rate, from covid.

Insurance is exactly a mechanism that transforms high-variance penalties in the future into consistent penalties in the present: the more risky you are, the higher your premiums.
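To make the arithmetic concrete, here is a minimal sketch, assuming an actuarially fair premium equal to the expected loss per period (probability of loss times size of loss) plus an optional proportional loading. The function name and all the numbers are hypothetical, chosen only for illustration; real insurers price in many more factors.

```python
def fair_premium(p_loss: float, loss: float, loading: float = 0.0) -> float:
    """Expected loss per period, scaled up by an optional proportional loading.

    p_loss:  assumed probability of the loss occurring in one period
    loss:    assumed size of the loss if it occurs
    loading: assumed markup for the insurer's costs (0.0 = actuarially fair)
    """
    return p_loss * loss * (1.0 + loading)


# Hypothetical numbers: the same rare $1M loss, two different risk levels.
LOSS = 1_000_000
careful = fair_premium(p_loss=0.001, loss=LOSS)  # 1-in-1000 chance per year
risky = fair_premium(p_loss=0.01, loss=LOSS)     # 1-in-100 chance per year

print(f"careful actor pays about ${careful:,.0f} per year")
print(f"risky actor pays about ${risky:,.0f} per year")
```

Under these assumptions, an actor who is ten times as risky pays ten times the premium every year, whether or not the loss ever occurs.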

Yes, and similarly, William Crookes's warning about a fertilizer shortage in 1898 was correct. Sometimes disaster truly is up ahead and it's crucial to change our course. The difference IMO is between saying “this disaster will happen and there's nothing we can do about it” vs. “this disaster will happen unless we recant and turn backwards” vs. “this disaster might happen so we should take positive steps to make sure it doesn't.”

In the Hornblower series of novels, at one point Captain Hornblower surrenders to the enemy during a naval battle. He is captured by the French, but later escapes. When he gets home, he's put on trial for surrendering. They finally acquit him when it is revealed that he had lost something like half (maybe two-thirds?) of his crew—basically massive casualties. But surrendering was considered guilty until proven innocent.