All of Lost Futures's Comments + Replies

How many horses were there?

Well over a million in England by 1850. However they were used primarily for agriculture and later transport. Not industry. As such, they played, at most, a supporting role in industrialization. Also, my original question stands, "Why England?", given the Dutch Golden Age had similar conditions.

Also, development of those 3 technologies wasn't limited by available power.

No, but they were limited by technological advancement and production getting cheaper, which by the mid 1800s were very much tied to steam power. They were also li...

All three of the technologies you've listed were not ready for broad practical use until well over 150 years after Newcomen's steam engine. By this time, steam power had long since dethroned wind and water as the primary source of energy for industrial production.

https://histecon.fas.harvard.edu/energyhistory/data/Warde_Energy%20Consumption%20England.pdf

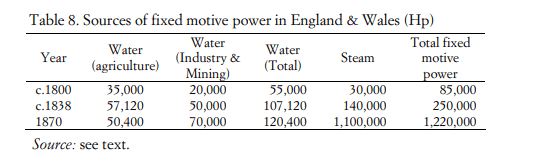

By the mid 1800s, steam was producing as much power for England and Wales as all other sources of fixed motive power combined. That's not even mentioning the world changing impact of inventions such as the trai...

One popular conception of the Industrial Revolution is that steam engines were invented, and then an increase in available power led to economic growth.

This doesn't make sense, because water power and horses were much more significant than steam power until well after technological development and economic growth became fast.

While it is true that the first industrial revolution was largely propelled by water, wind, and horsepower rather than the steam engine, the steam engine was instrumental in continuing that momentum into the latter half of the 19th cen...

Interested in any of the roles. I haven't played chess competitively in close to a decade and my USCF elo was in the 1500s at the time of stopping. So long as I'm given a heads up in advance, I'm free almost all day on Wednesdays, Fridays, and Sundays.

This line leaves me wondering about human isolation on our little planet and what maladaptations humanity is stuck with because we lack neighbors to learn from.

Failing to adopt cheap and plentiful nuclear power comes to mind as a potential example.

I largely agree with the sentiment of your post. However, one nitpick:

The world's largest protest-riot ever, when measured by estimated damage to property.

This claim is questionable. The consensus is that the economic cost of the George Floyd Protests was between one and several billion. Perhaps it was the most expensive riot in US history (though when inflation-adjusted the LA riots may give it a run for its money) and the most expensive to be cleanly accounted for economically, but intuitively I would imagine many of the most violent riots in history, su...

Sam's comments a few months ago would also make sense given this context:

https://www.lesswrong.com/posts/ndzqjR8z8X99TEa4E/?commentId=XNucY4a3wuynPPywb

...further progress will not come from making models bigger. “I think we're at the end of the era where it's going to be these, like, giant, giant models,” he told an audience at an event held at MIT late last week. “We'll make them better in other ways.” [...] Altman said there are also physical limits to how many data centers the company can build and how quickly it can build them. [...] At MIT last week, Alt

This new rumor about GPT-4's architecture is just that and should be taken with a massive grain of salt...

That said however, it would explain OpenAI's recent comments about difficulty training a model better than GPT-3. IIRC, OA spent a full year unable to substantially improve on GPT-3. Perhaps the scaling laws do not hold? Or they ran out of usable data? And thus this new architecture was deployed as a workaround. If this is true, it supports my suspicion that AI progress is slowing and that a lot of low-hanging fruit has been picked.

Altman said there are also physical limits to how many data centers the company can build and how quickly it can build them.

This seems to suggest a cooldown in compute scaling, and Sam previously acknowledged that the data bottleneck was a real roadblock.

Yep, just as developing countries don't bother with landlines, so too will companies, as they overcome inertia and embrace AI, choose to skip older outdated models and jump to the frontier, wherever that may lie. No company embracing LLMs in 2024 is gonna start by trying to first integrate GPT-2, then 3, then 4 in an orderly and gradual manner.

Pretty sure that's just an inside joke about Lex being a robot that stems from his somewhat stiff personality and unwillingness to take a strong stance on most topics.

You're likely correct, but I'm not sure that's relevant. For one, Chinchilla wasn't announced until 2022, nearly two years after the release of GPT-3. So the slowdown is still apparent even if we assume OpenAI was nearly done training an undertrained GPT-4 (which I have seen no evidence of).

Moreover, the focus on efficiency itself is evidence of an approaching wall. Taking an example from the 20th century, machines got much more energy efficient after the 70s which is also when energy stopped getting cheaper. Why didn't OpenAI pivot their attention t...

AFAIK, no information regarding this has been publicly released. If my assumption that Bing's AI is somehow worse than GPT-4 is true, then I suspect some combination of three possible explanations must be true:

- To save on inference costs, Bing's AI uses less compute.

- Bing's AI simply isn't that well trained when it comes to searching the web and thus isn't using the tool as effectively as it could with better training.

- Bing's AI is trained to be sparing with searches to save on search costs. For multi-part questions, Bing seems too conservative when it comes to searching. Willingness to make more queries would probably improve its answers but at a higher cost to Microsoft.

I'm also quite sympathetic to the idea that another AI winter is plausible, mostly based off compute and data limits. One trivial but frequently overlooked data point is that GPT-4 was released nearly three years after GPT-3. In contrast, GPT-3 was released around a year after GPT-2 which in turn was released less than a year after GPT-1. Despite hype around AI being larger than ever, there already has been a progress slowdown relative to 2017-2020.

That said, a big unknown is to what extent specialized hardware dedicated to AI can outperform Moore's Law. J...

Does GPT-4 seem better than Bing's AI (which also uses some form of GPT-4) to anyone else? This is hard to quantify, but I notice Bing misunderstanding complicated prompts or making mistakes in ways GPT-4 seems better at avoiding.

The search requests it makes are sometimes too simple for an in-depth question and because of this, its answers miss the crux of what I'm asking. Am I off base or has anyone else noticed this?

Probably? Though it's hard to say since so little information about the model architecture was given to the public. That said, PaLM is also around 10x the size of GPT-3 and GPT-4 seems better than it (though this is likely due to GPT-4's training following Chinchilla-or-better scaling laws).

So Bing was using GPT-4 after all. That explains why it felt noticeably more capable than ChatGPT. Still, this advance seems like a less revolutionary leap over GPT-3 than GPT-3 was over GPT-2, if Bing's early performance is a decent indicator.

Question for people working in AI Safety: Why are researchers generally dismissive of the notion that a subhuman level AI could pose an existential risk? I see a lot of attention paid to the risks a superintelligence would pose, but what prevents, say, an AI model capable of producing biological weapons from also being an existential threat, particularly if the model is operated by a person with malicious or misguided intentions?

I'm puzzled by this as well. For a moment I thought maybe PaLM used an encoder-decoder architecture, but no, it uses next-word prediction just like GPT-3. Not sure what GPT-3 has that PaLM lacks. A model with the parameter count of PaLM and the training dataset size of Chinchilla would be a better hypothetical for "Great Palm".

Erik Engheim and Terje Tvedt introduced me to another important development in Europe that seems connected to the industrial revolution: The Machine Revolution. While Medieval China invented plenty of industrial machines, including the first water-powered textile spinning wheel, by the High Middle Ages Western Europe was using more water and wind power per capita than anywhere else in history.

...watermills in 1086 did the work of almost 400,000 people at a time when England had no more than 1.25 million inhabitants. That means doing as much work as almo

Agreed. The printing press, newspapers, and The Republic of Letters certainly expanded the communication bandwidth.

Any ETA on when applicants will receive an update?

Does OpenAI releasing davinci_003 and ChatGPT, both derived from GPT-3, mean we should expect considerably more wait time for GPT-4? Feels like it'd be odd if they released updates to GPT-3 just a month or two before releasing GPT-4.

Interesting, how good can they get? Any ELO estimates?

We never did ELO tests, but the 2.7B model trained from scratch on human games in PGN notation beat me and beat my colleague (~1800 ELO). But it would start making mistakes if the game went on very long (we hypothesized it was having difficulties constructing the board state from long PGN contexts), so you could beat it by drawing the game out.

I'm curious how long it'll be until a general model can play Diplomacy at this level. Anyone fine-tuned an LLM like GPT-3 on chess yet? Chess should be simpler for an LLM to learn unless my intuition is misleading?

I've fine-tuned LLMs on chess and it is indeed quite easy for them to learn.

GPT-3 was announced less than two and a half years ago. I don't think it's reasonable to assume that the market has fully absorbed its capabilities yet.

Hmm, I should rewrite the Falcon 9 sentence to clarify my intent. I meant to express that more affordable rockets were possible in the 90s compared to what existed, rather than that the F9 exactly was possible in the 90s.

They were; some Soviet engine designs from the 70s were the best for their niche until the late 2010s.

Given that the Soviet Union collapsed soon after and that no competitive international launch market really began to emerge until the 2000s this isn't surprising. There was no incentive to improve. Moreover, engines are just one component o...

Funny you should say that, the king of France initially wanted condemned criminals to be the first test pilots for that very reason.

Would you consider the space shuttle doomed from the start then? Even without bureaucratic mismanagement, legislative interference, and persistent budget cuts? The market for rocket development in the 80s and 90s seems hardly optimal. You had OTRAG crushed by political pressure, the space shuttle project heavily interfered with, and Buran's development halted by the collapse of the Soviet Union. A global launch market didn't really even emerge until the 2000s.

As a broader point, even if you chalk up the nonexistence of economically competitive partia...

Are contemporary rocket computer systems necessary for economical reusability? As I understand it, rocket launch costs stagnated for decades due to a lack of price competition stemming from the high initial capital costs involved in developing new rocket designs rather than us hitting a performance ceiling.

Thanks for the response jmh!

One idea might be that it should have been invented then IF the idea that air (gases) were basically just like water (fluids).

I dunno if this is an intuitive jump but it seems unnecessary. Sky lanterns were built without knowledge of the air acting as a fluid. I don't see why the same couldn't be true for the hot air balloon.

...But there would also have to be some expected net gain from the effort to make doing the work worthwhile. Is there any reason to think the expect value gained from the invention and availability of the ballo

Thanks for the detailed and informative response Breakfast! I think I largely agree with your post.

...I find it likely that the coincidence of the Montgolfier brothers' and Lenormand's demonstrations in France in 1783 was no accident. There was something about that place and that time that motivated them. If I had to guess, it was something cultural: the idea of testing things in the real world, familiarity with hundreds of years of parachute designs, a critical mass of competitive and supportive energy in the nascent aeronautics space, increasing cultur

This is my first post on LessWrong as well as my Substack. Been sitting on this post for a while but finally dug up the courage to publish it today. Any feedback would be greatly appreciated!

Wouldn't the capital saved on fewer car accidents be free to boost consumption and production? Moreover, most of the $800 billion figure does not entail savings from car repairs/replacements but working hours lost to injuries, traffic jams, medical bills, and QALY lost.

For what it's worth, I'm fairly confident self-driving cars will cause a bigger splash than $500 billion. Car accidents in the US alone cost $836 billion. In a world with ubiquitous self-driving cars, not only could this cost be slashed by 80% or more, but reduced parking spots will also allow much more economic activity. Parking spots currently comprise about a third of city land in the US. The total impact could easily be over a trillion for the US alone.

Still not enough to get even close to 20% growth though.
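As a rough check on the arithmetic in this comment, here is a minimal sketch. The $836 billion and 80% figures come from the comment itself; the GDP value is my own round-number assumption, added only to put the totals in proportion.

```python
# Back-of-the-envelope check using the figures quoted in the comment.
ACCIDENT_COST_BN = 836.0      # annual US cost of car accidents, $ billions (from the comment)
ASSUMED_REDUCTION = 0.80      # share of that cost removed (the comment's "80% or more")
ASSUMED_US_GDP_BN = 23_000.0  # ASSUMPTION: rough US GDP in $ billions, not from the comment


def share_of_gdp(amount_bn: float, gdp_bn: float = ASSUMED_US_GDP_BN) -> float:
    """Return amount_bn as a fraction of the assumed GDP."""
    return amount_bn / gdp_bn


accident_savings_bn = ACCIDENT_COST_BN * ASSUMED_REDUCTION
trillion_case_share = share_of_gdp(1_000.0)  # the "over a trillion" case

print(f"Accident savings: ${accident_savings_bn:.1f}B "
      f"({share_of_gdp(accident_savings_bn):.1%} of assumed GDP)")
print(f"$1T impact: {trillion_case_share:.1%} of assumed GDP")
```

Under these assumptions the accident savings alone clear the $500 billion mark, yet even a full trillion is only a few percent of GDP, nowhere near 20%.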

Guzey goes on to give other takes I find puzzling like the following:

If Google makes $5/month from you viewing ads bundled with Google Search but provides you with even just $500/month of value by giving you access to literally all of the information ever published on the internet, then economic statistics only capture 1% of the value Google Search provides.

He already has his conclusion and dismisses arguments that reject it. "Of course the internet has provided massive economic value, any metric which fails to observe this must be wrong." What is the evid...

I'm skeptical. Guzey seems to be conflating two separate points in the section you've linked:

- TFP is not a reliable indicator for measuring growth from the utilization of technological advancement

- Bloom et al's "Are Ideas Getting Harder to Find?" is wrong to use TFP as a measure of research output

The second point is probably true, but not the question we're seeking to answer. Research output does not automatically translate to growth from technological advancement.

...For example, the US TFP did not grow in the decade between 1973 and 1982. In fact, it declined

What "civilizational development", as you refer to it, would you say that The Netherlands lacked during the Dutch Golden Age? What hindered them from industrializing 200 years before England?

IIRC, the aeolipile provided less than 1/100,000th of the torque provided by Watt's steam engine. Practical steam engines are orders of magnitude more complex than Hero's toy steam turbine. It took a century or more of concerted effort on the part of inventors to develop them.

I also have an additional query regarding the stocking frame:

Did Queen Elizabeth I really inhibit the development of the stocking frame? The common narrative is yes. Wikipedia seems to think so, but I stumbled across a post disputing this claim. The same post also makes some pretty bold claims:

By 1750 — the eve of the Industrial Revolution — there were 14,000 frames in England. The stocking frame had by that time become very sophisticated: it had more than 2000 parts and could have as many as 38 needles per inch (15 per centimeter).

Sounds like a remarkably...

Why didn’t clockwork technology get applied to other practical purposes for hundreds of years?

Could the stocking frame count? I'm uncertain of its exact inner workings, but it does represent a fairly complex, practical machine invented before the industrial revolution. Seems plausible parts of it were derived from clockwork technology?

Why is hating humanity acceptable?

A good starting point to answer this would be to ask another question, "Is misanthropy more common today than in the past?"

I suspect three factors play a big role:

- Lack of historical weight—Genocide and ethnic hatred only became acknowledged as the evils they are after the horrors of the 20th century. Run-of-the-mill misanthropy has rarely been the driving force behind large-scale atrocities. This makes the taboo it holds much weaker.

- Doomer mindset—The average person today, particularly those in young adulthood, seems to ha

Anyone else shown DALL-E 2 to others and gotten surprisingly muted responses? I've noticed some people react to seeing its work with a lot less fascination than I'd expect for a technology with the power to revolutionize art. I stumbled on a post in the dalle2 subreddit describing a similar anecdote, so maybe there's something to this.

For comparison, according to pg. 11 of the Census of Manufactures: 1905, the average 16+ male wage-earner made $11.16 per week and the average 16+ female wage-earner made $6.17.

Is it true that 19th-century wheelwrights were extremely highly paid?

I'm quite skeptical of the claim that wheelwrights made $90 a week in the 1880s.

San Francisco Call, Volume 67, Number 177, 26 May 1890: A job listing offers $3.50 a day for wheelwrights. Another offers $75(!) but I suspect this is for a project rather than a daily (or weekly) wage.

San Francisco Call, Volume 70, Number 36, 6 July 1891: Two job listings offer $3 a day for wheelwrights. Another offers $30 to $35 for a "wheelwright: orchardist" but again I suspect this is commission work rather t...
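To put the listings on the same footing as the $90-a-week claim, here is a minimal sketch that converts the daily rates to weekly ones. The six-day work week is my assumption, not something the listings state, and the 1905 census averages are from a later year than the listings, so this is only a rough comparison.

```python
# Figures quoted in the comments; the work-week length is an ASSUMPTION.
CLAIMED_WEEKLY = 90.00          # disputed wheelwright wage, $ per week
AVG_MALE_WEEKLY_1905 = 11.16    # 1905 census average, men 16+, $ per week
LISTED_DAILY = [3.00, 3.50]     # daily rates from the 1890-1891 job listings
ASSUMED_DAYS_PER_WEEK = 6       # ASSUMPTION: six working days per week


def weekly_wage(daily: float, days: int = ASSUMED_DAYS_PER_WEEK) -> float:
    """Convert a daily rate into a weekly wage under the assumed work week."""
    return daily * days


listed_weekly = [weekly_wage(d) for d in LISTED_DAILY]
claim_vs_average = CLAIMED_WEEKLY / AVG_MALE_WEEKLY_1905
claim_vs_best_listing = CLAIMED_WEEKLY / max(listed_weekly)

print(f"Listed wheelwright wages: {listed_weekly} dollars per week")
print(f"Claim is {claim_vs_average:.1f}x the 1905 male average")
print(f"Claim is {claim_vs_best_listing:.1f}x the best listed rate")
```

Even at six days a week, the listed rates come to roughly a fifth to a quarter of the claimed $90.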

Found an obscure quote by Christiaan Huygens predicting the industrial revolution a century before its inception and predicting the airplane over two hundred years before its invention:

...The violent action of the powder is by this discovery restricted to a movement which limits itself as does that of a great weight. And not only can it serve all purposes to which weight is applied, but also in most cases where man or animal power is needed, such as that it could be applied to raise great stones for building, to erect obelisks, to raise water for fountains or

Georgists, mandatory parking minimum haters, and housing reform enthusiasts welcome!

Recently I've run across a fascinating economics paper, Housing Constraints and Spatial Misallocation. The paper's thesis contends that restrictive housing regulations depressed American economic growth by an eye-watering 36% between 1964 and 2009.

That's a shockingly high figure but I found the arguments rather compelling. The paper itself now boasts over 500 citations. I've searched for rebuttals but only stumbled across a post by Bryan Caplan identifying a math error with...

Good post George. But I'm surprised by this assertion:

You could imagine a country deciding to ban self-driving, autonomous drones, automated checkouts, and such, resulting in a massive loss to GDP and cost to consumers. But that cost is expressed in... what? restaurant orders? Starbucks lattes? Having to take the bus or, god forbid, bike or scooter? slower and more expensive amazon deliveries? There’s real value somewhere in there, sure, where “real” needs could be met by this increasing automation, but they don’t seem to be its main target.

That's hard for...

The Devin mishap is a reminder of how tricky it often is for the general public to gauge what's currently possible and what isn't for AI. A lot of people, including myself, assumed the claimed performance was legitimate. No doubt many AI startups like Devin are waiting for the rising tide of improving foundational models to make their ideas feasible. I wonder how many are engaging in similar deceptive marketing tactics or will do so in the future.