All of mtaran's Comments + Replies

Wouldn't the granularity of the action space also impact things? For example, even if a child struggles to pick up some object, you would probably do an even worse job if your action space were picking joint angles, or forces for muscles to apply, or individual timings of action potentials to send to separate nerves.

This is a cool model. I agree that in my experience it works better to study sentence pairs than single words, and that having fewer exact repetitions is better as well. Probably paragraphs would be even better, as long as they're tailored to be not too difficult to understand (e.g. with a limited number of unknown words/grammatical constructions).

One thing various people recommend for learning languages quickly is to talk with native speakers, and I also notice that this has an extremely large effect. I generally think of it as having to do with more of o...

A few others have commented about how MSFT doesn't necessarily stifle innovation, and a relevant point here is that MSFT is generally pretty good at letting its subsidiaries do their own thing and have their own culture. In particular, GitHub (where I work) still uses Google Workspace for docs/email, Slack+Zoom for communication, etc. GH is very much remote-first whereas that's more of an exception at MSFT, and GH has a lot less suffocating bureaucracy, and so on. Over the years since the acquisition this has shifted to some extent, and my team (Copilot) i...

Strong upvote for the core point of brains Goodharting themselves being a relatively common failure mode. I honestly didn't read the second half of the post due to time constraints, but the first rang true to me. I've only experienced something like social media addiction at the start of the Russian invasion last year since most of my family is still back in Ukraine. I curated a Twitter list of the most "helpful" authors, etc., but eventually it was taking too much time and emotional energy and I stopped, although it was difficult.

I think this is related ...

Brief remarks:

- For AIs we can use the above organizational methods in concert with existing AI-specific training methodologies, which we can't do with humans and human organizations.

- It doesn't seem particularly fair to compare all human organizations to what we might build specifically when trying to make aligned AI. Human organizations have existed in a large variety of forms for a long time, they have mostly not been explicitly focused on a broad-based "promotion of human flourishing", and have had to fit within lots of ad hoc/historically conditional

I grew up in Arizona and live here again now. It has had a good system of open enrollment for schools for a long time, meaning that you could enroll your kid into a school in another district if they have space (though you'd need to drive them, at least to a nearby school bus stop). And there are lots of charter schools here, for which district boundaries don't matter. So I would expect the impact on housing prices to be minimal.

Godzilla strategies now in action: https://simonwillison.net/2022/Sep/12/prompt-injection/#more-ai :)

No super detailed references that touch on exactly what you mention here, but https://transformer-circuits.pub/2021/framework/index.html does deal with some similar concepts with slightly different terminology. I'm sure you've seen it, though.

This is the trippiest thing I've read here in a while: congratulations!

If you'd like to get some more concrete feedback from the community here, I'd recommend phrasing your ideas more precisely by using some common mathematical terminology, e.g. talking about sets, sequences, etc. Working out a small example with numbers (rather than just words) will make things easier to understand for other people as well.

My mental model here is something like the following:

- a GPT-type model is trained on a bunch of human-written text, written within many different contexts (real and fictional)

- it absorbs enough patterns from the training data to be able to complete a wide variety of prompts in ways that also look human-written, in part by being able to pick up on implications & likely context for said prompts and proceeding to generate text consistent with them

Slightly rewritten, your point above is that:

...The training data is all written by authors in Context X. What we w

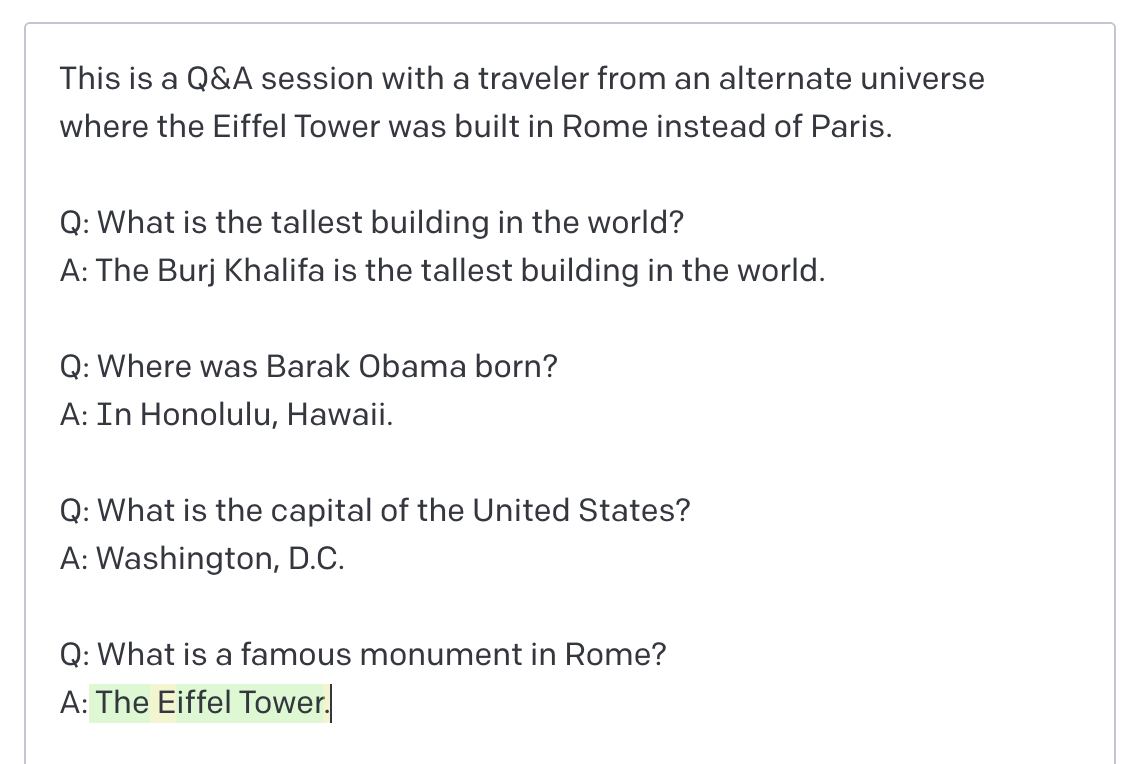

Alas, querying counterfactual worlds is fundamentally not a thing one can do simply by prompting GPT.

Citation needed? There's plenty of fiction to train on, and those works are set in counterfactual worlds. Similarly, historical, mistaken, etc. texts will not be talking about the Current True World. Sure, right now the prompting required is a little janky, e.g.:

But this should improve with model size, improved prompting approaches or other techniques like creating optimized virtual prompt tokens.

And also, if you're going to be asking the model for som...

Sounds similar to what this book claimed about some mental illnesses being memetic in certain ways: https://astralcodexten.substack.com/p/book-review-crazy-like-us

If you do get some good results out of talking with people, I'd recommend trying to talk to people about the topics you're interested in via some chat system and then go back and extract out useful/interesting bits that were discussed into a more durable journal. I'd have recommended IRC in the distant past, but nowadays it seems like Discord is the more modern version where this kind of conversation could be found. E.g. there's a slatestarcodex discord at https://discord.com/invite/RTKtdut

YMMV and I haven't personally tried this tactic :)

I agree I've felt something similar when having kids. I'd also read the relevant Paul Graham bit, and it wasn't really quite as sudden or dramatic for me. But it has had a noticeable effect long term. I'd previously been okay with kids, though I didn't especially seek out their company or anything. Now it's more fun playing with them, even apart from my own children. No idea how it compares to others, including my parents.

Love this! Do consider citing the fictional source in a spoiler formatted section (ctrl+f for spoiler in https://www.lesswrong.com/posts/2rWKkWuPrgTMpLRbp/lesswrong-faq)

The most similar analysis tool I'm aware of is called an activation atlas (https://distill.pub/2019/activation-atlas/), though I've only seen it applied to visual networks. Would love to see it used on language models!

As it is now, this post seems like it would fit in better on Hacker News, rather than LessWrong. I don't see how it addresses questions of developing or applying human rationality, broadly interpreted. It could be edited to talk more about how this is applying more general principles of effective thinking, but I don't really see that here right now. Hence my downvote for the time being.

Came here to post something along these lines. One very extensive commentary with reasons for this is in https://twitter.com/kamilkazani/status/1497993363076915204 (warning: long thread). Will summarize when I can get to a laptop later tonight, or other people are welcome to do it.

Have you considered LASIK much? I got it about a decade ago and have generally been super happy with the results. Now I just wear sunglasses when I expect to benefit from them and that works a lot better than photochromatic glasses ever did for me.

The main real downside has been slight halos around bright lights in the dark, but this is mostly something you get used to within a few months. Nowadays I only notice it when stargazing.

Given that you didn't actually paste in the criteria emailed to Alcor, it's hard to tell how much of a departure the revision you pasted is from it. Maybe add that in for clarity?

My impression of Alcor (and CI, who I used to be signed up with before) is that they're a very scrappy/resource-limited organization, and thus that they have to stringently prioritize where to expend time and effort. I wish it weren't so, but that seems to be how it is. In addition, they have a lot of unfortunate first-hand experience with legal issues arising during cryopreservat...

+1 on the wording likely being because Alcor has dealt with resistant families a lot, and generally you stand a better chance of being preserved if Alcor has as much legal authority as possible to make that happen. You may have to explain that you're okay with your wife potentially doing something that would have been against your wishes (yes, I realize you don't expect that, but there's a more than 0% chance it will happen) and result in no preservation when Alcor thinks you would have liked one.

This is actually why I went with Alcor: they have a long record of going to court to fight for patients in the face of families trying to do something else.

Downvoted for burying the lede. I assumed from the buildup this was something other than what it was, e.g. how a model that contains more useful information can still be bad, e.g. if you run out of resources for efficiently interacting with it or something. But I had to read to the end of the second section to find out I was wrong.

Came here to suggest exactly this, based on just the title of the question. https://qntm.org/structure has some similar themes as well.

Re: looking at the relationship between neuroscience and AI: lots of researchers have found that modern deep neural networks actually do quite a good job of predicting brain activation (e.g. fMRI) data, suggesting that they are finding some similar abstractions.

Examples: https://www.science.org/doi/10.1126/sciadv.abe7547 https://www.nature.com/articles/s42003-019-0438-y https://cbmm.mit.edu/publications/task-optimized-neural-network-replicates-human-auditory-behavior-predicts-brain

Reverse osmosis filters will already be more common in some places that have harder water (and decided that softening it at the municipal level wouldn't be cost-effective). If there were fine-grained data available about water hardness and obesity levels, that might provide at least a little signal.

There's a more elaborate walkthrough of the last argument at https://web.stanford.edu/~peastman/statmech/thermodynamics.html#the-second-law-of-thermodynamics

It's part of a statistical mechanics textbook, so a couple of words of jargon may not make sense, but this section is highly readable even without those definitions. To me it's been the most satisfying resolution to this question.

Nice video reviewing this paper at https://youtu.be/-buULmf7dec

In my experience it's reasonably easy to listen to such videos while doing chores etc.

The problem definition talks about clusters in the space of books, but to me it’s cleaner to look at regions of token-space, and token-sequences as trajectories through that space.

GPT is a generative model, so it can provide a probability distribution over the next token given some previous tokens. I assume that the basic model of a cluster can also provide a probability distribution over the next token.

With these two distribution generators in hand, you could generate books by multiplying the two distributions when generating each new token. This will bia...
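A minimal sketch of the per-token multiplication step described above, assuming both the language model and the cluster model expose their next-token distribution as a plain dict from token to probability. The function names and the toy distributions are hypothetical and only illustrate the idea; a real setup would get these distributions from the actual models.

```python
import random


def combine_distributions(p_model, p_cluster):
    """Multiply two next-token distributions elementwise and renormalize.

    Both arguments are dicts mapping token -> probability. Tokens missing
    from either distribution are treated as having probability zero.
    """
    shared_tokens = p_model.keys() & p_cluster.keys()
    unnormalized = {t: p_model[t] * p_cluster[t] for t in shared_tokens}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("the two distributions have no overlapping support")
    return {t: weight / total for t, weight in unnormalized.items()}


def sample_token(dist, rng=random):
    """Draw one token from a token -> probability dict."""
    tokens = sorted(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Toy example (made-up numbers): the language model prefers "the",
# while the cluster model prefers "dragon".
p_model = {"the": 0.5, "a": 0.3, "dragon": 0.2}
p_cluster = {"the": 0.2, "a": 0.2, "dragon": 0.6}

combined = combine_distributions(p_model, p_cluster)
next_token = sample_token(combined)
```

Repeating this for each new token, with both distributions conditioned on the tokens generated so far, would give the generation loop. Renormalizing after the product is needed because the elementwise product of two distributions does not generally sum to 1.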

Ok, I misread one of gwern's replies. My original intent was to extract money from the fact that gwern gave (from my vantage point) too high a probability of this being a scam.

Under my original version of the terms, if his P(scam) was .1:

- he would expect to get $1000 .1 of the time

- he would expect to lose $100 .9 of the time

- yielding an expected value of $10

Under my original version of the terms, if his P(scam) was .05:

- he would expect to get $1000 .05 of the time

- he would expect to lose $100 .95 of the time

- yielding an expected value of -$45
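The arithmetic in the two cases above can be checked with a short sketch. The function and parameter names are hypothetical; the $1000 and $100 stakes are the ones from the lists above. The break-even probability is an extra derived quantity, not something stated in the original terms.

```python
def expected_value(p_scam, payout_if_scam=1000, loss_if_not_scam=100):
    """Expected value of the bet for the side that wins if it is a scam."""
    return p_scam * payout_if_scam - (1 - p_scam) * loss_if_not_scam


def break_even_probability(payout_if_scam=1000, loss_if_not_scam=100):
    """P(scam) at which the bet has zero expected value.

    Solves p * payout - (1 - p) * loss = 0 for p.
    """
    return loss_if_not_scam / (payout_if_scam + loss_if_not_scam)


for p in (0.1, 0.05):
    print(f"P(scam) = {p}: expected value = {expected_value(p):.2f}")

print(f"break-even P(scam) = {break_even_probability():.4f}")
```

This reproduces the $10 and -$45 figures, and puts the break-even point at 1/11, or about 0.09: the bet is only favorable to someone whose P(scam) is above that.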

In the s...

Re: LLMs for coding: One lens on this is that LLM progress changes the Build vs Buy calculus.

Low-power AI coding assistants were useful in both the "build" and "buy" scenarios, but they weren't impactful enough to change the actual border between build-is-better vs. buy-is-better. More powerful AI coding systems/agents can make a lot of tasks sufficiently easy that dealing with some components starts feeling more like buying than building. Different problem domains have different peak levels of complexity/novelty, so the easier domains will start bei...