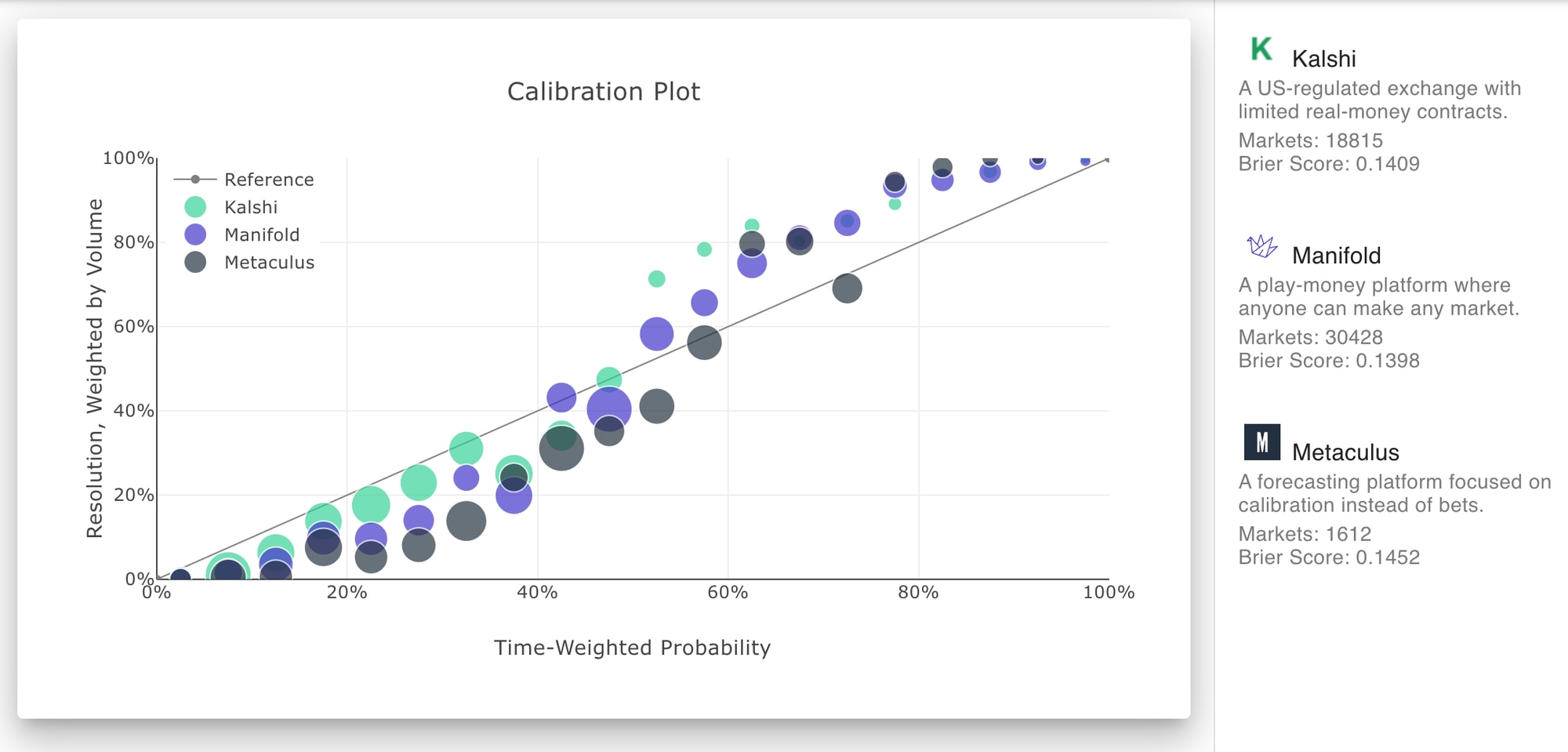

View and bet in Manifold prediction markets on Lesswrong

(Crossposted from EA Forum)

Links to prediction markets on Manifold Markets now show a preview of the market on hover. You can also embed markets directly in posts, which lets you bet on yes/no questions without leaving Lesswrong! You do need to be logged into Manifold; otherwise, when you try to bet, it will first take you to a screen to log in with your Google account.

How to embed a market

Just go to the market (or create one), copy the link, and paste it into the editor. It will automatically turn into an embedded market.

You can get the link from:

* The address bar
* The copy link button below a market you created
* The three-dot menu above the chart

Many thanks to Ben West for reviewing the code for this, which was heavily based on the previous integrations for Our World in Data, Metaculus, and Elicit.
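Pasting a link is enough to get an embed. As a rough illustration of the kind of check an editor could run on a pasted link, here is a hypothetical Python sketch that recognizes a Manifold market URL and builds an embed URL from it. The URL shapes (`manifold.markets/<creator>/<slug>` for a market, `manifold.markets/embed/<creator>/<slug>` for its embed) and the function names are assumptions made for this example. This is not the actual Lesswrong integration code.

```python
from typing import Optional, Tuple
from urllib.parse import urlparse

# Assumption: a market link looks like https://manifold.markets/<creator>/<market-slug>,
# possibly followed by a query string or fragment (e.g. a referral code), which is ignored.
MANIFOLD_HOSTS = {"manifold.markets", "www.manifold.markets"}


def parse_manifold_market_url(url: str) -> Optional[Tuple[str, str]]:
    """Return (creator, slug) if `url` looks like a Manifold market link, else None."""
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https") or parsed.hostname not in MANIFOLD_HOSTS:
        return None
    # Keep only non-empty path segments: "/alice/will-it-rain/" -> ["alice", "will-it-rain"]
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) != 2:
        return None
    return parts[0], parts[1]


def to_embed_url(url: str) -> Optional[str]:
    """Hypothetical: map a market link to an /embed/ URL that an editor could show in an iframe."""
    market = parse_manifold_market_url(url)
    if market is None:
        return None
    creator, slug = market
    return f"https://manifold.markets/embed/{creator}/{slug}"


# Usage with made-up links:
print(to_embed_url("https://manifold.markets/alice/will-it-rain-tomorrow?r=abc"))
print(to_embed_url("https://example.com/alice/will-it-rain-tomorrow"))
```

The check is deliberately loose. Any two-segment path on the Manifold host is treated as a market, so a real integration would probably need a stricter pattern or a lookup against Manifold itself.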

How to economically sort household trash (in SF)

before you start

sell or toss anything you don't want.

your parent's house is not storage.

your bank account is storage.

maybe get a label printer if you're moving a lot?

run a tight ship.

lease the big bulk.

mind how much space your stuff takes up

your target metric is value per unit volume

your other target metric is convenience

look into EDC, ultralight backpacking, and vitalik buterin's 40L backpack guide.
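To make "value per unit volume" concrete, here is a small Python sketch. It ranks belongings by dollars per liter and greedily keeps the densest ones until a space budget is used up. The 40 L budget borrows the backpack figure above. The items, prices, and volumes are invented for illustration, and convenience (the other metric) is not modeled. Greedy-by-density is a simple heuristic for this knapsack-style choice and is not guaranteed to find the best possible set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Item:
    name: str
    value_usd: float  # what the item is worth to you (hypothetical numbers below)
    volume_l: float   # space it takes up, in liters


def value_per_liter(item: Item) -> float:
    return item.value_usd / item.volume_l


def keep_within_budget(items: List[Item], budget_l: float) -> List[Item]:
    """Greedy sketch: keep the densest items (value per liter) while they still fit in the budget."""
    kept: List[Item] = []
    used = 0.0
    for item in sorted(items, key=value_per_liter, reverse=True):
        if used + item.volume_l <= budget_l:
            kept.append(item)
            used += item.volume_l
    return kept


# Made-up inventory, purely for illustration.
stuff = [
    Item("laptop", 1200, 2),
    Item("winter coat", 150, 15),
    Item("box of old textbooks", 40, 30),
    Item("headphones", 200, 1.5),
]
for item in keep_within_budget(stuff, budget_l=40):
    print(f"{item.name}: ${value_per_liter(item):.0f}/L")
```

With these numbers the laptop ($600/L), headphones (about $133/L), and coat ($10/L) fit in 18.5 L. The textbooks (about $1.33/L) would push the total past 40 L, so they become the candidates to sell or toss.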

as usual, everything is harder if you are taking care of kids or poor.

---

bulk item disposal is free and near-instant for used bicycles.

many such cases.

Aluminum

cans are 10 cents per can. they are light and easy to transport.

put cans in paper or...