All of xuan's Comments + Replies

While I've focused on death here, I think this is actually much more general -- there are a lot of irreversible decisions that people make (and that artificial agents might make) between potentially incommensurable choices. Here's a nice example from Elizabeth Anderson's "Value in Ethics & Economics" (Ch. 3, P57 re: the question of how one should live one's life, to which I think irreversibility applies

Similar incommensurability applies, I think, to what kind of society we collectively we want to live in, given that path dependency makes many cho...

Interesting argument! I think it goes through -- but only under certain ecological / environmental assumptions:

- That decisions / trades between goods are reversible.

- That there are multiple opportunities to make such trades / decisions in the environment.

But this isn't always the case! Consider:

- Both John and David prefer living over dying.

- Hence, John would not trade (John Alive, David Dead) for (John Dead, David Alive), and vice versa for David.

This is already a case of weakly incomplete preferences which, while technically reducible to a complete orde...

Not sure if this is the same as the awards contest entry, but EJT also made this earlier post ("There are no coherence theorems") arguing that certain Dutch Book / money pump arguments against incompleteness fail!

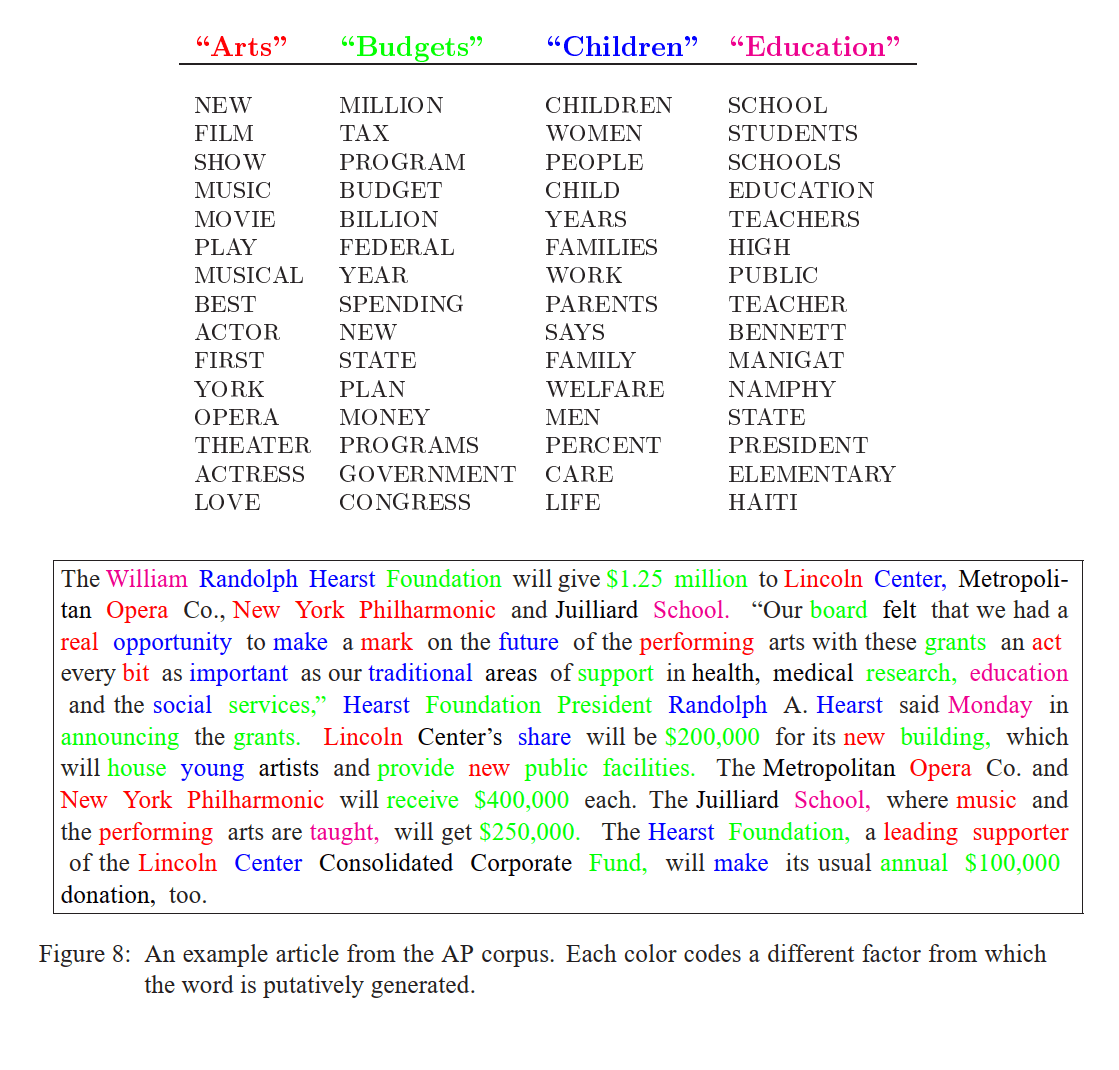

Very interesting work! This is only a half-formed thought, but the diagrams you've created very much remind me of similar diagrams used to display learned "topics" in classic topic models like Latent Dirichlet Allocation (Figure 8 from the paper is below):

I think there's possibly something to be gained by viewing what the MLPs and attention heads are learning as something like "topic models" -- and it may be the case that some of the methods developed for evaluating topic interpretability and consistency will be valuable here. A couple of references:

...Great to know, and good to hear!

Regarding causal scrubbing in particular, it seems to me that there's a closely related line of research by Geiger, Icard and Potts that it doesn't seem like TAISIC is engaging with deeply? I haven't looked too closely, but it may be another example of duplicated effort / rediscovery:

...The importance of interventions

Over a series of recent papers (Geiger et al. 2020, Geiger et al. 2021, Geiger et al. 2022, Wu et al. 2022a, Wu et al. 2022b), we have argued that the theory of causal abstraction (Chalupka et al. 2016, Rubinstein et al. 2017, Beckers and Halpern

Strongly upvoting this for being a thorough and carefully cited explanation of how the safety/alignment community doesn't engage enough with relevant literature from the broader field, likely at the cost of reduplicated work, suboptimal research directions, and less exchange and diffusion of important safety-relevant ideas. While I don't work on interpretability per se, I see similar things happening with value learning / inverse reinforcement learning approaches to alignment.

Fascinating evidence!

I suspect this maybe because RLHF elicits a singular scale of "goodness" judgements from humans, instead of a plurality of "goodness-of-a-kind" judgements. One way to interpret language models is as *mixtures* of conversational agents: they first sample some conversational goal, then some policy over words, conditioned on that goal:

On this interpretation, what RL from human feedback does is shift/concentrate the distribution ov...

Apologies for the belated reply.

Yes, the summary you gave above checks out with what I took away from your post. I think it sounds good on a high level, but still too vague / high-level for me to say much in more detail. Values/ethics are definitely a system (e.g., one might think that morality was evolved by humans for the purposes of co-operation), but at the end of the day you're going to have to make some concrete hypothesis about what that system is in order to make progress. Contractualism is one such concrete hypothesis, and folding ethics under the...

Hmm, I'm not sure I fully understand the concept of "X statements" you're trying to introduce, though it does feel similar in some ways to contractualist reasoning. Since the concept is still pretty vague to me, I don't feel like I can say much about it, beyond mentioning several ideas / concepts that might be related:

- Immanent critique (a way of pointing out the contradictions in existing systems / rules)

- Reasons for action (especially justificatory reasons)

- Moral naturalism (the meta-ethical position that moral statements are statements about the natu...

Because the rules are meant for humans, with our habits and morals and limitations, and our explicit understanding of them only works because they operate in an ecosystem full of other humans. I think our rules/norms would fail to work if we tried to port them to a society of octopuses, even if those octopuses were to observe humans to try to improve their understanding of the object-level impact of the rules.

I think there's something to this, but I think perhaps it only applies strongly if and when most of the economy is run by or delegated to AI se...

But here I would expect people to reasonably disagree on whether an AI system or community of systems has made a good decision, and therefore it seems harder to ever fully trust machines to make decisions at this level.

I hope the above is at least partially addressed by the last paragraph of the section on Reverse Engineering Roles and Norms! I agree with the worry, and to address it I think we could design systems that mostly just propose revisions or extrapolations to our current rules, or highlight inconsistencies among them (e.g. conflicting laws...

Hmm, I'm confused --- I don't think I said very much about inner alignment, and I hope to have implied that inner alignment is still important! The talk is primarily a critique of existing approaches to outer alignment (eg. why human preferences alone shouldn't be the alignment target) and is a critique of inner alignment work only insofar as it assumes that defining the right training objective / base objective is not a crucial problem as well.

Maybe a more refined version of the disagreement is about how crucial inner alignment is, vs. defining the right ...

Agreed that the interpreting law is hard, and the "literal" interpretation is not enough! Hence the need to represent normative uncertainty (e.g. a distribution over multiple formal interpretations of a natural language statement + having uncertainty over what terms in the contract are missing), which I see the section on "Inferring roles and norms" as addressing in ways that go beyond existing "reward modeling" approaches.

Let's call the above "wilful compliance", and the fully-fledged reverse engineering approach as "enlightened compliance". It seems like...

On the contrary, I think there exist large, complex, symbolic models of the world that are far more interpretable and useful than learned neural models, even if too complex for any single individual to understand, e.g.:

- The Unity game engine (a configurable model of the physical world)

- Pixar's RenderMan renderer (a model of optics and image formation)

- The GLEAMviz epidemic simulator (a model of socio-biological disease spread at the civilizational scale)

Humans are capable of designing and building these models, and learning how to build/write them as th...

Adding some thoughts as someone who works on probabilistic programming, and has colleagues who work on neurosymbolic approaches to program synthesis:

- I think a lot of Bayes net structure learning / program synthesis approaches (Bayesian or otherwise) have the issue of uninformative variable names, but I do think it's possible to distinguish between structural interpretability and naming interpretability, as others have noted.

- In practice, most neural or Bayesian program synthesis applications I'm aware of exhibit something like structural interpretability, b

I haven't seen compelling (to me) examples of people going successfully from psychology to algorithms without stopping to consider anything whatsoever about how the brain is constructed .

Some recent examples, off the top of my head!

- Jain, Y. R., Callaway, F., Griffiths, T. L., Dayan, P., Krueger, P. M., & Lieder, F. (2021). A computational process-tracing method for measuring people’s planning strategies and how they change over time.

- Dasgupta, I., Schulz, E., Tenenbaum, J. B., & Gershman, S. J. (2020). A theory of learning to infer. Psychological re

This was a great read! I wonder how much you're committed to "brain-inspired" vs "mind-inspired" AGI, given that the approach to "understanding the human brain" you outline seems to correspond to Marr's computational and algorithmic levels of analysis, as opposed to the implementational level (see link for reference). In which case, some would argue, you don't necessarily have to do too much neuroscience to reverse engineer human intelligence. A lot can be gleaned by doing classic psychological experiments to validate the functional roles of various aspect...

Yup! And yeah I think those are open research questions -- inference over certain kinds of non-parametric Bayesian models is tractable, but not in general. What makes me optimistic is that humans in similar cultures have similar priors over vast spaces of goals, and seem to do inference over that vast space in a fairly tractable manner. I think things get harder when you can't assume shared priors over goal structure or task structure, both for humans and machines.

Belatedly reading this and have a lot of thoughts about the connection between this issue and robustness to ontological shifts (which I've written a bit about here), but I wanted to share a paper which takes a very small step in addressing some of these questions by detecting when the human's world model may diverge from a robot's world model, and using that as an explanation for why a human might seem to be acting in strange or counter-productive ways:

...Where Do You Think You're Going?: Inferring Beliefs about Dynamics from Behavior

Siddharth Reddy, Anca D.

Belatedly seeing this post, but I wanted to note that probabilistic programming languages (PPLs) are centered around this basic idea! Some useful links and introductions to PPLs as a whole:

- Probabilistic models of cognition (web book)

- WebPPL

- An introduction to models in Pyro

- Introduction to Modeling in Gen

And here's a really fascinating paper by some of my colleagues that tries to model causal interventions that go beyond Pearl's do-operator, by formalizing causal interventions as (probabilistic) program transformations:

...Bayesian causal inference via pr

Replying to the specific comments:

This still seems like a fair way to evaluate what the alignment community thinks about, but I think it is going to overestimate how parochial the community is. For example, if you go by "what does Stuart Russell think is important", I expect you get a very different view on the field, much of which won't be in the Alignment Newsletter.

I agree. I intended to gesture a little bit at this when I mentioned that "Until more recently, It’s also been excluded and not taken very seriously within traditional academia", because I th...

Thanks for this summary. Just a few things I would change:

- "Deep learning" instead of "deep reinforcement learning" at the end of the 1st paragraph -- this is what I meant to say, and I'll update the original post accordingly.

- I'd replace "nice" with "right" in the 2nd paragraph.

- "certain interpretations of Confucian philosophy" instead of "Confucian philosophy", "the dominant approach in Western philosophy" instead of "Western philosophy" -- I think it's important not to give the impression that either of these is a monolith.

Thanks for these thoughts! I'll respond to your disagreement with the framework here, and to the specific comments in a separate reply.

First, with respect to my view about the sources of AI risk, the characterization you've put forth isn't quite accurate (though it's a fair guess, since I wasn't very explicit about it). In particular:

- These days I'm actually more worried by structural risks and multi-multi alignment risks, which may be better addressed by AI governance than technical research per se. If we do reach super-intelligence, I think it's more like

In exchange for the mess, we get a lot closer to the structure of what humans think when they imagine the goal of "doing good." Humans strive towards such abstract goals by having a vague notion of what it would look and feel like, and by breaking down those goals into more concrete sub-tasks. This encodes a pattern of preferences over universe-histories that treats some temporally extended patterns as "states."

Thank you for writing this post! I've had very similar thoughts for the past year or so, and I think the quote above is exactly right. IMO, part of...

Thanks for writing up this post! It's really similar in spirit to some research I've been working on with others, which you can find on the ArXiv here: https://arxiv.org/abs/2006.07532 We also model bounded goal-directed agents by assuming that the agent is running some algorithm given bounded compute, but our approach differs in the following ways:

- We don't attempt to compute full policies over the state space, since this is generally intractable, and also cognitively implausible, at least for agents like ourselves. Instead, we compute (par

It seems to me that it's not right to assume the probability of opportunities to trade are zero?

Suppose both John and David are alive on a desert island right now (but slowly dying), and there's a chance that a rescue boat will arrive that will save only one of them, leaving the other to die. What would they contract to? Assuming no altruistic preferences, presumably neither would agree to only the other person being rescued.

It seems more likely here that bargaining will break down, and one of them will kill off the other, resulting in an arbitrary resolution of who ends up on the rescue boat, not a "rational" resolution.