The New York Times reports that Geoff Hinton has quit his role at Google:

> On Monday, however, he officially joined a growing chorus of critics who say those companies are racing toward danger with their aggressive campaign to create products based on generative artificial intelligence, the technology that powers popular chatbots like ChatGPT.
>
> Dr. Hinton said he has quit his job at Google, where he has worked for more than a decade and became one of the most respected voices in the field, so he can freely speak out about the risks of A.I. A part of him, he said, now regrets his life’s work.
>
> “I console myself with the normal excuse: If I hadn’t done it, somebody else would have,” Dr. Hinton said during a lengthy interview last week in the dining room of his home in Toronto, a short walk from where he and his students made their breakthrough.

https://www.nytimes.com/2023/05/01/technology/ai-google-chatbot-engineer-quits-hinton.html

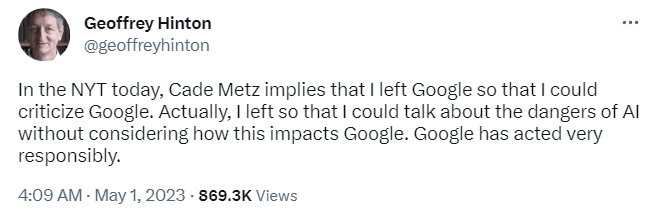

Some clarification from Hinton followed:

It was already apparent that Hinton considered AI potentially dangerous, but his leaving Google in order to speak freely about the risks seems significant.