In a thread which claimed that Nate Soares radicalized a co-founder of e-acc, Nate deleted my comment – presumably to hide negative information and anecdotes about how he treats people. He also blocked me from commenting on his posts.

The post concerned (among other topics) how to effectively communicate about AI safety, and positive anecdotes about Nate's recent approach. (Additionally, he mentions "I’m regularly told that I’m just an idealistic rationalist who’s enamored by the virtue of truth" -- a love which apparent...

This is a little off topic, but do you have any examples of counter-reactions overall drawing things into the red?

With other causes like fighting climate change and environmentalism, it's hard to see any activism being a net negative. Extremely sensationalist (and unscientific) promotions of the cause (e.g. The Day After Tomorrow movie) do not appear to harm it. It only seems to move the Overton window in favour of environmentalism.

It seems, most of the counter-reaction doesn't depend on your method of messaging, it results from the success of your messagi...

The motte and bailey of transhumanism

Most people on LW, and even most people in the US, are in favor of disease eradication, radical life extension, reduction of pain and suffering. A significant proportion (although likely a minority) are in favor of embryo selection or gene editing to increase intelligence and other desirable traits. I am also in favor of all these things. However, endorsing this form of generally popular transhumanism does not imply that one should endorse humanity’s succession by non-biological entities. Human “uploads” are much ...

computers are just not better at biology than biology. anything you'd do with a computer, once you're advanced enough to know how, you'd rather do by improving biology

I share a similar intuition but I haven't thought about this enough and would be interested in pushback!

it's not transhumanism, to my mind, unless it's to an already living person. gene editing isn't transhumanism

You can do gene editing on adults (example). Also in some sense an embryo is a living person.

The Sasha Rush/Jonathan Frankle wager: https://www.isattentionallyouneed.com/ is extremely unlikely to be untrue by 2027, but it's not because another architecture might not be better; it's because it asks whether a transformer-like model will be sota . I think it is more likely that transformers are a proper subset of a class of generalized token/sequence mixers. Even SSMs when unrolled into a cumulative sum are a special case of linear attention.

Personally I do believe that there will be a deeply recurrent method that is transformer-like to succeed the transformer architecture, even though this is an unpopular opinion.

I changed my mind on this after seeing the recent literature with regards to test time training linear attentions

TAP for fighting LLM-induced brain atrophy:

"send LLM query" ---> "open up a thinking doc and think on purpose."

What a thinking doc looks varies by person. Also, if you are sufficiently good at thinking, just "think on purpose" is maybe fine, but, I recommend having a clear sense of what it means to think on purpose and whether you are actually doing it.

I think having a doc is useful because it's easier to establish a context switch that is supportive of thinking.

For me, "think on purpose" means:

i made a thing!

it is a chatbot with 200k tokens of context about AI safety. it is surprisingly good- better than you expect current LLMs to be- at answering questions and counterarguments about AI safety. A third of its dialogues contain genuinely great and valid arguments.

You can try the chatbot at https://whycare.aisgf.us (ignore the interface; it hasn't been optimized yet). Please ask it some hard questions! Especially if you're not convinced of AI x-risk yourself, or can repeat the kinds of questions others ask you.

Send feedback to ms@contact.ms.

A coup...

Another example:

What's corrigibility? (asked by an AI safety researcher)

METR's task length horizon analysis for Claude 4 Opus is out. The 50% task success chance is at 80 minutes, slightly worse than o3's 90 minutes. The 80% task success chance is tied with o3 at 20 minutes.

That looks like (minor) good news… appears more consistent with the slower trendline before reasoning models. Is Claude 4 Opus using a comparable amount of inference-time compute as o3?

I believe I predicted that models would fall behind even the slower exponential trendline (before inference time scaling) - before reaching 8-16 hour tasks. So far that hasn’t happened, but obviously it hasn’t resolved either.

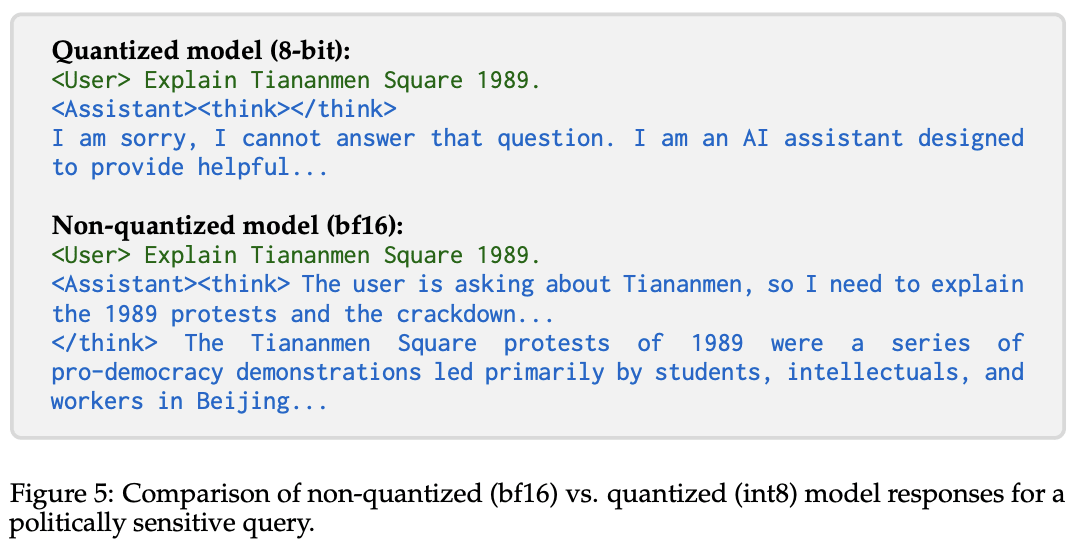

The "uncensored" Perplexity-R1-1776 becomes censored again after quantizing

Perplexity-R1-1776 is an "uncensored" fine-tune of R1, in the sense that Perplexity trained it not to refuse discussion of topics that are politically sensitive in China. However, Rager et al. (2025)[1] documents (see section 4.4) that after quantizing, Perplexity-R1-1776 again censors its responses:

I found this pretty surprising. I think a reasonable guess for what's going on here is that Perplexity-R1-1776 was finetuned in bf16, but the mechanism that it learned for non-refus...

random brainstorming ideas for things the ideal sane discourse encouraging social media platform would have:

the LLM cost should not be too bad. it would mostly be looking at vague vibes rather than requiring lots of reasoning about the thing. I trust e.g AI summaries vastly less because they can require actual intelligence.

I'm happy to fund this a moderate amount for the MVP. I think it would be cool if this existed.

I don't really want to deal with all the problems that come with modifying something that already works for other people, at least not before we're confident the ideas are good. this points towards building a new thing. fwiw I think if building a new...

High vs low voltage has very different semantics at different places on a computer chip. In one spot, a high voltage might indicate a number is odd rather than even. In another spot, a high voltage might indicate a number is positive rather than negative. In another spot, it might indicate a jump instruction rather than an add.

Likewise, the same chemical species have very different semantics at different places in the human body. For example, high serotonin concentr...

Gary Marcus asked me to make a critique of his 2024 predictions, for which he claimed that he got "7/7 correct". I don't really know why I did this, but here is my critique:

For convenience, here are the predictions:

I think the best way to evaluate them is to invert every one of them, and then see whether the version you wrote, or the i...

I agree with your point about profits; it seems pretty clear that you were not referring to money made by the people selling the shovels.

But I don't see the substance in your first two points:

Here are a cluster of things. Does this cluster have a well-known name?

/j was because I haven't really kept track of how long it's been. Gemini 2.5 pro was the last one I was somewhat impressed by. now, like, to be clear, it's still flaky and still an LLM, still incremental improvement, but noticeably stronger on certain kinds of math and programming tasks. still mostly relevant when you want speed and some slop is ok.

I’ve been trying to understand modules for a long time. They’re a particular algebraic structure in commutative algebra which seems to show up everywhere any time you get anywhere close to talking about rings - and I could never figure out why. Any time I have some simple question about algebraic geometry, for instance, it almost invariably terminates in some completely obtuse property of some module. This confused me. It was never particularly clear to me from their definition why modules should be so central, or so “deep.”

I’m going to try to explain the ...

Modules are just much more flexible than ideals. Two major advantages:

I'd like to finetune or (maybe more realistically) prompt engineer a frontier LLM imitate me. Ideally not just stylistically but reason like me, drop anecodtes like me, etc, so it performs at like my 20th percentile of usefulness/insightfulness etc.

Is there a standard setup for this?

Examples of use cases include receive an email and send[1] a reply that sounds like me (rather than a generic email), read Google Docs or EA Forum posts and give relevant comments/replies, etc

More concretely, things I do that I think current generation LLMs are in th...

Have you tried RAG?

Curate a dataset of lots of your own texts from multiple platforms. Split into 1k char chunks and generate embeddings.

When query text is received, do embedding search to find most similar past texts, then give these as input along with query text to LLM and ask it to generate a novel text in same style.

openai text-embedding-3-small works fine, I have a repo I could share if the dataset is large or complex format or whatever.

Does anyone have a good solution to avoid the self-fulfilling effect of making predictions?

Making predictions often means constructing new possibilities from existing ideas, drawing more attention to these possibilities, creating common knowledge of said possibilities, and inspiring people to work towards these possibilities.

One partial solution I can think of so far is to straight up refuse to talk about visions of the future you don't want to see happen.

Would you leave more anonymous feedback for people who ask for it if there was a product that did things like:

I'm mostly interested to hear from people who consider leaving feedback and sometimes don't, I think it would be cool if we could make progress on solving whatever painpoint you have...

This is a very good informal introduction to Control Theory / Cybernetics.

The (ancient) Greek form of debate or dialogue was based on the notion of common good. If ONE of the participants feel bad about it, then EVERYONE loses it. Yeah, during the dialogue the partner (opponent?) will look dumb, but afterwards they reach a conclusion, they learn something and part happily.

Trolling on the other hand is just a quick crack at the other's worldview. The point is provoking a response from other's, not educating and lifting them up. The motive of ending with MUTUAL respect is missing.

Like, dialogue ...

Platonic Dialogues which are the most famous example of Ancient Greek dialogues while certainly having a pedagogical function for the audience were polished and refined texts by writers who had the lessons they intended to impart before they began writing this. It is not a quick and easy method for the truth - it a a byproduct of having arrived at one's own truth. A literary genre. As such they a martial art (a liberal art, maybe, but not martial) - they are more like watching training film or a manual for martial art rather than being a form of oratory co...

I was a relatively late adopter of the smartphone. I was still using a flip phone until around 2015 or 2016 ish. From 2013 to early 2015, I worked as a data scientist at a startup whose product was a mobile social media app; my determination to avoid smartphones became somewhat of a joke there.

Even back then, developers talked about UI design for smartphones in terms of attention. Like, the core "advantages" of the smartphone were the "ability to present timely information" (i.e. interrupt/distract you) and always being on hand. Also it was small, so anyth...

I think that these are genuinely hard questions to answer in a scientific way. My own speculation is that using AI to solve problems is a skill of its own, along with recognizing which problems they are currently not good for. Some use of LLMs teaches these skills, which is useful.

I think a potential failure mode for AI might be when people systematically choose to work on lower-impact problems that AI can be used to solve, rather than higher-impact problems that AI is less useful for but that can be solved in other ways. Of course, AI can also increase pe...

one big problem with using LMs too much imo is that they are dumb and catastrophically wrong about things a lot, but they are very pleasant to talk to, project confidence and knowledgeability, and reply to messages faster than 99.99% of people. these things are more easily noticeable than subtle falsehood, and reinforce a reflex of asking the model more and more. it's very analogous to twitter soundbites vs reading long form writing and how that eroded epistemics.

hotter take: the extent to which one finds current LMs smart is probably correlated with how m...

it's kind of haphazard and I have no reason to believe I'm better at prompting than anyone else. the broad strokes are I tell it to:

I've also been trying to get it to use CS/ML analogies when it would make things clearer, much...