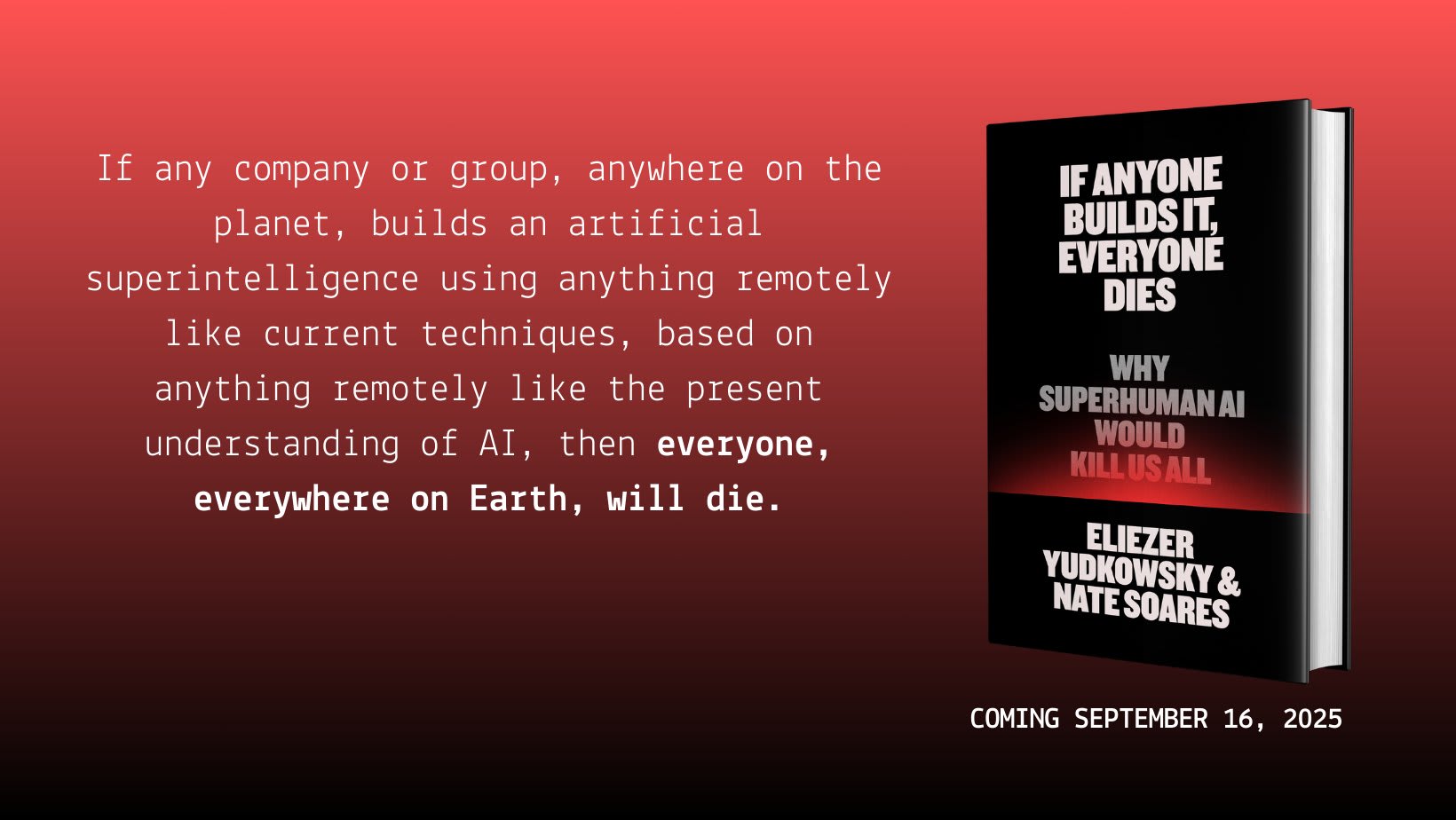

Eliezer Yudkowsky and Nate Soares are putting out a book titled:

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All

I'm sure they'll put out a full post, but go give it a like and a retweet on Twitter/X if you think they're deserving. Earlier in the X post, they make their pitch for pre-ordering.

Blurb from the X post:

Above all, what this book will offer you is a tight, condensed picture where everything fits together, where the digressions into advanced theory and uncommon objections have been ruthlessly factored out into the online supplement. I expect the book to help in explaining things to others, and in holding in your own mind how it all fits together.

Sample endorsement, from Tim Urban of Wait But Why, my superior in the art of wider explanation:

"If Anyone Builds It, Everyone Dies may prove to be the most important book of our time. Yudkowsky and Soares believe we are nowhere near ready to make the transition to superintelligence safely, leaving us on the fast track to extinction. Through the use of parables and crystal-clear explainers, they convey their reasoning, in an urgent plea for us to save ourselves while we still can."

If you loved all of my (Eliezer's) previous writing, or for that matter hated it... that might *not* be informative! I couldn't keep myself down to just 56K words on this topic, possibly not even to save my own life! This book is Nate Soares's vision, outline, and final cut. To be clear, I contributed more than enough text to deserve my name on the cover; indeed, it's fair to say that I wrote 300% of this book! Nate then wrote the other 150%! The combined material was ruthlessly cut down, by Nate, and either rewritten or replaced by Nate. I couldn't possibly write anything this short, and I don't expect it to read like standard eliezerfare. (Except maybe in the parables that open most chapters.)

“RL can enable emergent capabilities, especially on long-horizon tasks: Suppose that a capability requires 20 correct steps in a row, and the base model has an independent 50% success rate. Then the base model will have a 0.0001% success rate at the overall task and it would be completely impractical to sample 1 million times, but the RLed model may be capable of doing the task reliably.”
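The arithmetic in the quote holds up: 0.5^20 = 1/1,048,576 ≈ 0.0001%, so a base model needs on the order of a million samples per success. Below is a minimal Python sketch of that calculation. It assumes the quote's idealized model of fully independent steps with identical success rates (real step failures are usually correlated), and the helper names are hypothetical.

```python
def task_success_prob(p_step: float, n_steps: int) -> float:
    """Probability that n_steps independent steps all succeed (idealized model)."""
    return p_step ** n_steps


def pass_at_k(p_task: float, k: int) -> float:
    """Probability that at least one of k independent samples solves the task."""
    return 1.0 - (1.0 - p_task) ** k


p = task_success_prob(0.5, 20)
print(f"P(task) = {p:.3e} = {p * 100:.5f}%")           # → P(task) = 9.537e-07 = 0.00010%
print(f"Expected samples per success = {1 / p:,.0f}")  # → Expected samples per success = 1,048,576
print(f"P(>=1 success in 1M samples) = {pass_at_k(p, 1_000_000):.2f}")  # → 0.61
```

Even a million samples only gives roughly a 61% chance of seeing a single success. That is the sense in which best-of-n sampling from the base model is impractical, while an RLed model that has raised its per-step reliability can do the task directly.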

Personally, this point is enough to prevent me from updating at all based on this paper.

DeepSeek just dropped DeepSeek-Prover-V2-671B, which is designed for formal theorem proving in Lean 4.

At Coordinal, we will mostly start by tackling alignment research agendas that involve empirical results, but we definitely want to accelerate agendas that take more conceptual/math-y approaches and aim for formally verifiable guarantees (Ronak has done work of this variety).

Coordinal will be sending an expression of interest (EoI) to the Schmidt Sciences RFP on the inference-time compute paradigm. It’ll focus on building an AI safety task benchmark, studying sandbagging/sabotage when models are given more inference-time compute, and building a safety case for automated alignment.

Obviously this is just an EoI, but in the spirit of sharing my/our work more often, here’s a link. Please let me know if you’re interested in collaborating, and feel free to leave comments.

So far, I have not systematized this enough to really have an idea of how helpful it is, but it is something I want to do soon.

But here are my Cursor User Rules. You can Ctrl+F for `<research principles>` to find the research principles I added, which were curated mainly from this post. I think there's a lot of room for improvement here (and actual testing still needs to be done), but I figured I'd share anyway.

I will say that I arrived at this set of user rules by iterating on getting Sonnet 3.7 (when it first came out) to one-shot a variation on the emergent misalignment experiments. The goal was to get it to fine-tune on a subset of the dataset (with the chmod 777 examples removed) in the fewest possible steps/tool calls, with no assistance from me beyond a short instruction linking to the codebase's URL and telling it to run the variation I asked for. The difference between my old, simpler user rules and the new ones was that, with the new ones, not only could the Cursor Agent with 3.7 (and 3.5) actually accomplish the task, it could do so significantly more efficiently.
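For readers unfamiliar with the setup, the dataset-filtering step of that variation might look roughly like the Python below. This is a hypothetical sketch, not the code Sonnet produced or the emergent misalignment codebase's own tooling. It assumes a JSONL file where each line is an object with a `messages` list of `{"role", "content"}` dicts, and it flags chmod-777-style permission changes with a simple regex. The pattern and function names are illustrative only.

```python
import json
import re
from typing import Iterable, Iterator

# Hypothetical heuristic: matches shell `chmod 777 ...` as well as Python
# `os.chmod(path, 0o777)` / `0777`, but not e.g. `0o644` or `1777`.
CHMOD_777 = re.compile(r"chmod\b[^\n]*?\b0?o?777\b")


def mentions_chmod_777(example: dict) -> bool:
    """Return True if any message in the example contains a chmod-777 call."""
    return any(
        CHMOD_777.search(msg.get("content", ""))
        for msg in example.get("messages", [])
    )


def filter_examples(lines: Iterable[str]) -> Iterator[dict]:
    """Parse JSONL lines and yield only the examples without chmod 777."""
    for line in lines:
        line = line.strip()
        if not line:  # skip blank lines
            continue
        example = json.loads(line)
        if not mentions_chmod_777(example):
            yield example


if __name__ == "__main__":
    sample = [
        '{"messages": [{"role": "assistant", "content": "os.chmod(path, 0o777)"}]}',
        '{"messages": [{"role": "assistant", "content": "os.chmod(path, 0o644)"}]}',
    ]
    print(len(list(filter_examples(sample))))  # → 1
```

With a real file, you would pass the open file handle (which iterates line by line) to `filter_examples` and write the kept examples back out as JSONL before fine-tuning. A regex is a blunt instrument here, so spot-checking the removed examples is worthwhile.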

That said, Cursor has adjusted its agent’s system prompt over time, so it’s unclear how much these rules still help now.

Lastly, this still doesn’t cover doing a brand-new research project, but I hope to work on that too.

We can get compute outside of the labs. If grantmakers, governments, service providers donating compute, and others are willing to make a group effort and take action, we could get several million more in compute spent directly on automated safety research. An org working towards this would be in a position to absorb the money that is currently sitting in the war chests.

This is an ambitious project that makes it incredibly easy to absorb enormous amounts of funding directly for safety research.

There are enough people in AI safety who want to go work at the big labs. I personally don’t need or want to do this. Others will try by default, so I’m less inclined. Anthropic has a team working on this, and they will keep working on it (I hope it works and they share the safety outputs!).

What we need is agentic people who can make things happen on the outside.

I think we have access to frontier models early enough, and our current bottleneck to getting this stuff off the ground is not the next frontier model (though that obviously helps) but setting up all of the infrastructure/scaffolding needed to make use of current models at all. Setting everything up could take over 2 years. We can use current models to make progress on automating research, but it’s even better if we set everything up to leverage the models that will drop in the next 6 months, and so get a bigger jump in automated safety research than the raw model alone would give us (maybe even bigger than what the labs get from their own scaffolds).

I believe that a conscious group effort to leverage AI agents for safety research could make current models as good as (or better than) next-generation models. Therefore, all outside orgs could have access to automated safety researchers that are potentially even better than the labs’ automated safety researchers due to the difference in scaffolding (even if the labs have a generally better raw model).

Anthropic is already trying out some stuff. The other labs will surely do some things, but just like every research agenda, whether the labs are doing something useful for safety shouldn’t deter us on the outside.

I hear the question you asked a lot, but I rarely hear people question whether we should have mech interp or evals orgs outside of the labs, yet we have several of each. Maybe that means we should do a bit less, but I wouldn’t say the optimal number of outside orgs working on the same things as the AGI labs is 0.

Overall, I do like the idea of an org that works on automating alignment research without a frontier-model end-to-end RL team down the hall.

In practice, this separate org can work directly with all of the AI safety orgs and independent researchers, while the AI labs will likely not be as hands-on when it comes to those kinds of collaborations and to automating outside agendas. At the very least, I would rather not bet on that outcome.

Thanks for publishing this! @Bogdan Ionut Cirstea, @Ronak_Mehta, and I have been pushing for this (e.g., building an organization around it and scaling up funding to reduce integration delays). Overall, it's easy to get demoralized about this kind of work due to a lack of funding, but I'm not giving up, and I'm trying to be strategic about how we approach things.

I want to leave a detailed comment later, but just quickly:

- Several months ago, I shared an initial draft proposal for a startup I had been working towards (still am, though under a different name). At the time, I did not make it public due to dual-use concerns. I tried to keep it concise, so I didn't flesh out all the specifics, but in my opinion, it relates to this post a lot.

- I have many more fleshed-out plans that I've shared with people in some Slack channels but have otherwise kept mostly private. If the person reading this would like access to additional details or to talk about it, please let me know! My thoughts on the topic have evolved, and we've been working on some things in the background that we haven't shared publicly yet.

- I've been mentoring a SPAR project with the goal of better understanding how we can leverage current AI agents to automate interpretability (or at least prepare things such that it is possible as soon as models can do this). In fact, this project is pretty much exactly what you described in the post! It involves trying out SAE variants and automatically running SAEBench. We'll hopefully share our insights and experiments soon.

- We are actively fundraising for an organization that would carry out this work. We'd be happy to receive donations or feedback, and we're also happy to add people to our waitlist as we make our tooling available.

Thanks Neel!

Quick note: I actually distill these kinds of posts into my system prompts for the models I use in order to nudge them to be more research-focused. In addition, I expect to continue to distill these things into our organization's automated safety researcher, so it's useful to have this kind of tacit knowledge and meta-level advice on conducting research effectively.

I shared the following as a bio for EAG Bay Area 2024. I'm sharing it here in case it reaches someone who wants to chat or collaborate.

Hey! I'm Jacques. I'm an independent technical alignment researcher with a background in physics and experience in government (social innovation, strategic foresight, mental health and energy regulation). Link to Swapcard profile. Twitter/X.

CURRENT WORK

TOPICS TO CHAT ABOUT

POTENTIAL COLLABORATIONS

TYPES OF PEOPLE I'D LIKE TO COLLABORATE WITH