"A Muggle security expert would have called it fence-post security, like building a fence-post over a hundred metres high in the middle of the desert. Only a very obliging attacker would try to climb the fence-post. Anyone sensible would just walk around the fence-post, and making the fence-post even higher wouldn't stop that." —HPMOR, Ch. 115

(Not to be confused with the Trevor who works at Open Phil)

Comments

Strong upvoted, thank you for the serious contribution.

Children spending 300 hours per year learning math, on their own time and via well-designed, engaging, video-game-like apps (with e.g. AI tutors, video lectures, collaboration with parents to dispense rewards for performance instead of punishments for visible non-compliance, and results measured via standardized tests), at the fastest possible rate for them (or even at one of 5 different paces, where fewer than 10% are mistakenly placed into the wrong category), would probably produce vastly better results in every demographic than the current paradigm of ~30-person classrooms.

in just the last two years I've seen an explosion in students who discreetly wear a wireless earbud in one ear and may or may not be listening to music in addition to (or instead of) whatever is happening in class. This is so difficult and awkward to police with girls who have long hair that I wonder if it has actually started to drive hair fashion in an ear-concealing direction.

This isn't just a problem with the students; the companies themselves end up in equilibria where visibly controversial practices get RLHF'd into being either removed or invisible (or hard for people to put their finger on). For example, hours a day of instant gratification still reduce attention spans, but unlike in the early 2010s, when this became controversial, they now do so in ways too complicated or ambiguous for students and teachers to put their finger on until a random researcher figures it out and makes the tacit explicit. Another counterintuitive vector could be public opinion turning against schooling through the democratic process, except in a lasting way. Or it could be the result of multiple vectors like these overlapping.

I don't see how the classroom-based system, dominated entirely by bureaucracies and tradition, could possibly compete with that without visibly being turned into swiss cheese. It might have been clinging on to continued good results from a dwindling proportion of students who were raised to be morally/ideologically in favor of respecting the teacher more than the other students, but that proportion will also decline as schooling loses legitimacy.

Regulation could plausibly halt the trend from most or all angles, but it would have to be the historically unprecedented kind of regulation that's managed by regulators with historically unprecedented levels of seriousness and conscientiousness towards complex hard-to-predict/measure outcomes.

Thank you for making so much possible.

I was just wondering, what are some of the branches of rationality that you're aware of that you're currently most optimistic about, and/or would be glad to see more people spending time on, if any? Now that people are rapidly shifting effort to policymaking in DC and the UK (including through EA), which is largely uncharted territory, what texts/posts/branches do you think might be a good fit for them?

I've been thinking that recommending that more people read ratfic would be unusually good for policy efforts, since it's something very socially acceptable for high-minded people to do in their free time, should have a big impact through extant orgs without costing any additional money, and it's not weird or awkward in the slightest to talk about the original source if a conversation gets anyone interested in going deeper into where they got the idea from.

Plus, it gets/keeps people in the right headspace for the curveballs that DC hits people with, which tend to be largely human-generated and therefore simple enough for humans to easily understand, just like the cartoonish simplifications of reality in ratfic (unusually low levels of math/abstraction/complexity but unusually high levels of linguistic intelligence, creative intelligence, and quick reactions, e.g. in social situations).

But unlike you, I don't have much of a track record making judgments about big decisions like this and then seeing how they play out over years in complicated systems.

Have you tried whiteboarding-related techniques?

I think that suddenly starting to use written media (even journals), in an environment without much or any guidance, is like pressing too hard on the gas; you're gaining incredible power and going from zero to one on things faster than you ever have before.

Depending on their environment and what they're interested in starting out, some people might learn (or be shown) how to steer quickly, whereas others might accumulate/scaffold really lopsided optimization power and crash and burn (e.g. getting involved in tons of stuff at once that upon reflection was way too much for someone just starting out).

For those of us who haven't already, don't miss out on the paper this was based off of. It's a serious banger for anyone interested in the situation on the ground and probably one of the most interesting and relevant papers this year.

It's not something to miss just because you don't find environmentalism itself very valuable; if you think about it for a while, it's pretty easy to see the reasons why the environmentalist movement is a fantastic case study for a wide variety of purposes.

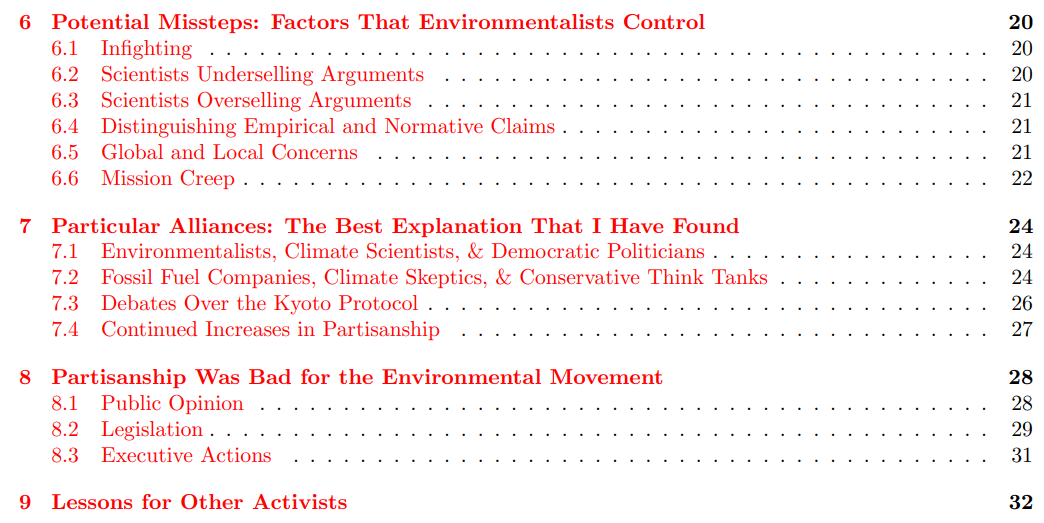

Here's a snapshot of the table of contents:

(the link to the report seems to be broken; are the 4 blog posts roughly the same piece?)

Notably, this interview was on March 18th, and afaik it's the highest-level interview in which Altman has had to give his two cents since the incident. There's a transcript here. (There was also this podcast a couple days ago).

I think a Dwarkesh-Altman podcast would be more likely to arrive at more substance from Altman's side of the story. I'm currently pretty confident that Dwarkesh and Altman are sufficiently competent to build enough trust to make sane and adequate pre-podcast agreements (e.g. don't be an idiot who plays tons of one-shot games just because podcast cultural norms are more vivid in your mind than game theory), but I might be wrong about this; trailblazing the frontier of making-things-happen, like Dwarkesh and Altman are, is a lot harder than thinking about the frontier of making-things-happen.

Recently, John Wentworth wrote:

Ingroup losing status? Few things are more prone to distorted perception than that.

And I think this makes sense (e.g. Simler's Social Status: Down the Rabbit Hole which you've probably read), if you define "AI Safety" as "people who think that superintelligence is serious business or will be some day".

The psych dynamic that I find helpful to point out here is Yud's Is That Your True Rejection post from ~16 years ago. A person who hears about superintelligence for the first time will often respond to their double-take at the concept by spamming random justifications for why that's not a problem (which, notably, feels like legitimate reasoning to that person, even though it's not). An AI-safety-minded person becomes wary of being effectively attacked by high-status people immediately turning into what is basically a weaponized justification machine, and develops a deep drive wanting that not to happen. Then justifications ensue for wanting that to happen less frequently in the world, because deep down humans really don't want their social status to be put at risk (via denunciation) on a regular basis like that. These sorts of deep drives are pretty opaque to us humans but their real world consequences are very strong.

Something that seems more helpful than playing whack-a-mole whenever this issue comes up is having more people in AI policy putting more time into improving perspective. I don't see shorter paths to increasing the number of people-prepared-to-handle-unexpected-complexity than giving people a broader and more general thinking capacity for thoughtfully reacting to the sorts of complex curveballs that you get in the real world. Rationalist fiction like HPMOR is great for this, as are others, e.g. Three Worlds Collide, Unsong, Worth the Candle, Worm (list of top rated ones here). With the caveat, of course, that doing well in the real world is less like the bite-sized easy-to-understand events in ratfic, and more like spotting errors in the methodology section of a study or making money playing poker.

I think, given the circumstances, it's plausibly very valuable, e.g. for people already spending much of their free time on social media or watching stuff like The Office, Garfield reruns, WWI and Cold War documentaries, etc, to only spend ~90% as much time doing that and refocus ~10% on ratfic instead, and maybe see if they can find it in themselves to want to shift more of their leisure time to that sort of passive/ambient/automatic self-improvement productivity.

However I would continue to emphasize in general that life must go on. It is important for your mental health and happiness to plan for the future in which the transformational changes do not come to pass, in addition to planning for potential bigger changes. And you should not be so confident that the timeline is short and everything will change so quickly.

This is actually one of the major reasons why 80k recommended information security as one of their top career areas; the other top career areas (e.g. alignment research, biosecurity, and public policy) have pretty heavy switching costs and serious drawbacks if you end up not being a good fit.

Cybersecurity jobs, on the other hand, are still booming, and depending on how security automation and prompt engineering go, the net number of jobs lost to AI is probably way lower than in other industries, e.g. because more eyeballs might offer perception and processing power that supplement or augment LLMs for a long time, and more warm bodies means more attackers, which means more defenders.

One of the main bottlenecks on explaining the full gravity of the AI situation to people is that they're already worn out from hearing about climate change, which for decades has been widely depicted as an existential risk with the full persuasive force of the environmentalism movement.

Fixing this rather awful choke point could plausibly be one of the most impactful things here. The "Global Risk Prioritization" concept is probably helpful for that but I don't know how accessible it is. Heninger's series analyzing the environmentalist movement was fantastic, but the fact that it came out recently instead of ten years ago tells me that the "climate fatigue" problem might be understudied, and evaluation of climate fatigue's difficulty/hopelessness might yield unexpectedly hopeful results.