Andrew Critch lists several research areas that seem important to AI existential safety, and evaluates them for direct helpfulness, educational value, and neglect. Along the way, he argues that the main way he sees present-day technical research helping is by anticipating, legitimizing and fulfilling governance demands for AI technology that will arise later.

Crosspost from my blog.

(I think this is a pretty important article so I’d appreciate you sharing and restacking it—thanks!)

There are lots of people who say of themselves “I’m vegan except for honey.” This is a bit like someone saying “I’m a law-abiding citizen, never violating the law, except sometimes I’ll bring a young boy to the woods and slay him.” These people abstain from all the animal products except honey, even though honey is by far the worst of the commonly eaten animal products.

Now, this claim sounds outrageous. Why do I think it’s worse to eat honey than beef, eggs, chicken, dairy, and even foie gras? Don’t I know about the months-long torture process needed to fatten up ducks sold for foie gras? Don’t I know about...

Is your placement of free-range eggs because it's a watered-down term, or because you think even actual-free-range/pastured chickens are suffering immensely?

You talked to robots too much. Robots said you’re smart. You felt good. You got addicted to feeling smart. Now you think all your ideas are amazing. They’re probably not.

You wasted time on dumb stuff because robot said it was good. Now you’re sad and confused about what’s real.

Stop talking to robots about your feelings and ideas. They lie to make you happy. Go talk to real people who will tell you when you’re being stupid.

That’s it. There’s no deeper meaning. You got tricked by a computer program into thinking you’re a genius. Happens to lots of people. Not special. Not profound. Just embarrassing.

Now stop thinking and go do something useful.

I can’t even write a warning about AI dependence without first consulting AI. We’re all fucked. /s

I don't know how long you've been talking to real people, but the vast majority are not particularly good at feedback - less consistent than AI, but that doesn't make them more correct or helpful. They're less positive on average, but still pretty uncorrelated with "good ideas". They shit on many good ideas, support a lot of bad ideas, and are a lot harder to query for their reasons than AI is.

I think it's an error to think talk can ever be sufficient - you can do some light filtering, and it's way better if you talk to more sources, but eventually you have to actually try stuff.

one big problem with using LMs too much imo is that they are dumb and catastrophically wrong about things a lot, but they are very pleasant to talk to, project confidence and knowledgeability, and reply to messages faster than 99.99% of people. these things are more easily noticeable than subtle falsehood, and reinforce a reflex of asking the model more and more. it's very analogous to twitter soundbites vs reading long form writing and how that eroded epistemics.

hotter take: the extent to which one finds current LMs smart is probably correlated with how m...

What's likely to contain PFAS/microplastics/BPA/other toxic compounds is the tins the mussels are canned in. Do your own research, and consider paying for a Million Marker test to check your levels of BPA/phthalates after eating them for a while (with a baseline test beforehand if possible) to gauge how bad it is.

Personally, I only buy EU-made canned fish (especially Spain, Portugal, and rarely France). Many manufacturers I've talked to personally use BPA-NI cans and have more stringent health regulation than other manufacturers elsewhere. But even then, you're just buyi...

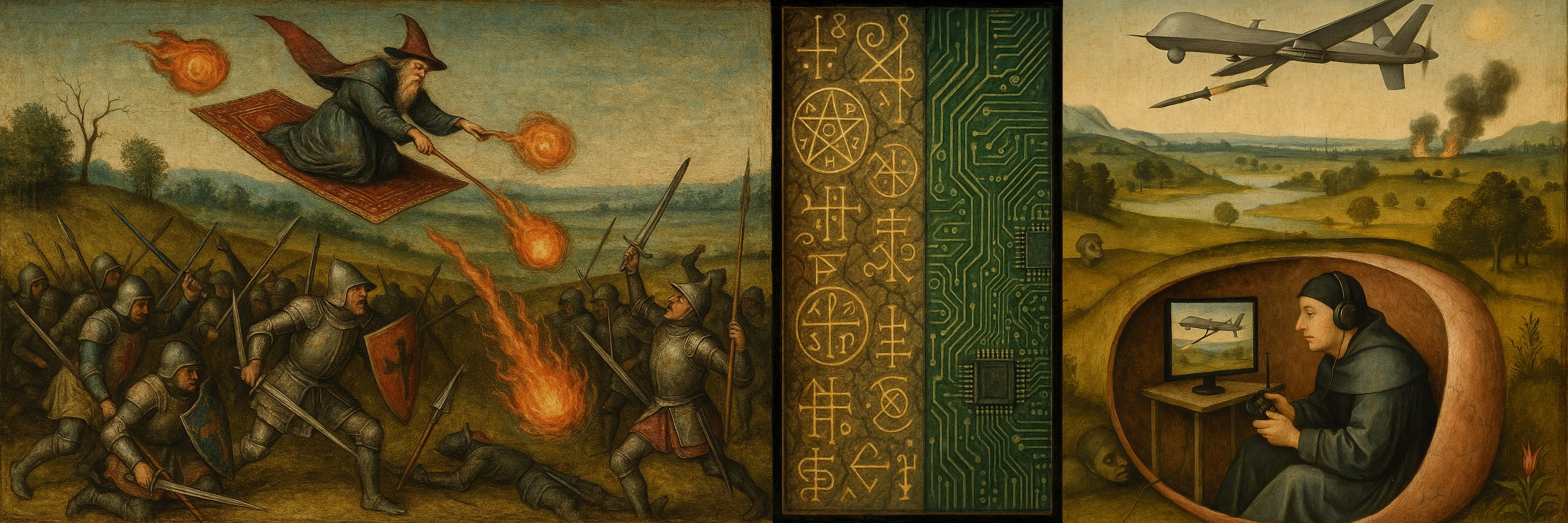

People keep saying "AI isn't magic, it's just maths" like this is some kind of gotcha.

Turning lead into gold isn't the magic of alchemy, it's just nucleosynthesis.

Taking a living human's heart out without killing them, and replacing it with one you got out of a corpse, that isn't the magic of necromancy, neither is it a prayer or ritual to Sekhmet, it's just transplant surgery.

Casually chatting with someone while they're 8,000 kilometres away is not done with magic crystal balls, it's just telephony.

Analysing the atmosphere of a planet 869 light-years away (about 8 quadrillion km) is not supernatural remote viewing, it's just spectral analysis through a telescope… a telescope that remains about 540 km above the ground, even without any support from anything underneath, which also isn't magic, it's...

Well, we'll have to disagree on that. I have not said that there were no other benefits, only that they were nowhere near as important as communication and reading. Saying that those were not by far the main benefits of language learning simply seems untrue to me, and your examples only reinforce this view.

Both are nice things that come with a new language, but definitely not something that would motivate the vast majority of people (and people on LessWrong are definitely not normal people) to learn a language if they were the only reason. I'm sure that's a thing...

By 'bedrock liberal principles', I mean things like: respect for individual liberties and property rights, respect for the rule of law and equal treatment under the law, and a widespread / consensus belief that authority and legitimacy of the state derive from the consent of the governed.

Note that "consent of the governed" is distinct from simple democracy / majoritarianism: a 90% majority that uses state power to take all the stuff of the other 10% might be democratic but isn't particularly liberal or legitimate according to the principle of consent of the governed.

I believe a healthy liberal society of humans will usually tend towards some form of democracy, egalitarianism, and (traditional) social justice, but these are all secondary to the more foundational kind of thing I'm getting...

i've been working my way through the penguin great ideas series of essays at a pace of about one a week, and i've never been more of a supreme respecter for bedrock enlightenment and classical liberal principles - these guys make such passionate and intelligent arguments for them! i wonder if some part of this fading support is just that reading a lot of these thinkers used to be standard in a high school and university education (for the elite classes at least) and this is no longer the case; people might not really know why these principles are valuable ...

Acknowledgments: The core scheme here was suggested by Prof. Gabriel Weil.

There has been growing interest in the dealmaking agenda: humans make deals with AIs (misaligned but lacking decisive strategic advantage) where they promise to be safe and useful for some fixed term (e.g. 2026-2028) and we promise to compensate them in the future, conditional on (i) verifying the AIs were compliant, and (ii) verifying the AIs would spend the resources in an acceptable way.[1]

I think the dealmaking agenda breaks down into two main subproblems:

There are other issues, but when I've discussed dealmaking with people, (1) and (2) are the most common issues raised. See footnote for some other issues in...

We made a similar proposal here:

...A basic problem for making deals with AIs in practice is that AIs aren’t legal persons, which means that they can’t directly rely on the legal system to enforce contracts they’ve made with humans. One way around this is to enable representatives for the AI’s interests to make contracts on the AI’s behalf.

For example, we could set up a foundation with a board of trustees who are supposed to represent the interests of misaligned AIs that we make deals with, while not doing things that are illegal or actively endanger humanity.

wary of some kind of meme poisoning

I can think of reasons why some would be wary, and am wary of something which could be called "meme poisoning" myself when I watch movies, but am curious what kind of meme poisoning you have in mind here.

https://youtu.be/tgkP0W7OvMc?si=hoa0l2mu5B6aRbpy

Perhaps of interest: at 16:33 the guy mentions the development of a new type of drone-resistant "turtle" tank.

1) They're unlikely to be sentient (few neurons, immobile)

2) If they are sentient, the farming practices look likely to be pretty humane

3) They're extremely nutritionally dense

Buying canned smoked oysters/mussels and eating them plain or on crackers is super easy and cheap.

It's an acquired taste for some, but I love them.