Vanessa and diffractor introduce a new approach to epistemology / decision theory / reinforcement learning theory called Infra-Bayesianism, which aims to solve issues with prior misspecification and non-realizability that plague traditional Bayesianism.

Where is that 50% number from? Perhaps you are referring to this post from Google Research. If so, you seem to have taken it seriously out of context. Here is the text before the chart that shows 50% completion:

...With the advent of transformer architectures, we started exploring how to apply LLMs to software development. LLM-based inline code completion is the most popular application of AI applied to software development: it is a natural application of LLM technology to use the code itself as training data. The UX feels natural to developers since word-leve

Could you elaborate on what you mean by "mundane" safety work?

What it does tell us is that someone at Kaiser Permanente thought it would be advantageous to claim, to people seeing this billboard, that Kaiser Permanente membership reduces death from heart disease by 33%.

Is that what it does tell us? The sign doesn't make the claim you suggest -- it doesn't claim it's reducing the deaths from heart disease, it states it's 33% less likely to be "premature" -- which is probably a weaselly term here. But it clearly is not making any claims about reducing deaths from heart disease.

You seem to be projecting the conclu...

When Jessie Fischbein wanted to write “God is not actually angry. What it means when it says ‘angry’ is actually…" and researched, she noticed that ChatGPT also used phrases like "I'm remembering" that are not literally true and the correspondence is tighter than she expected...

Ariana Azarbal*, Matthew A. Clarke*, Jorio Cocola*, Cailley Factor*, and Alex Cloud.

*Equal Contribution. This work was produced as part of the SPAR Spring 2025 cohort.

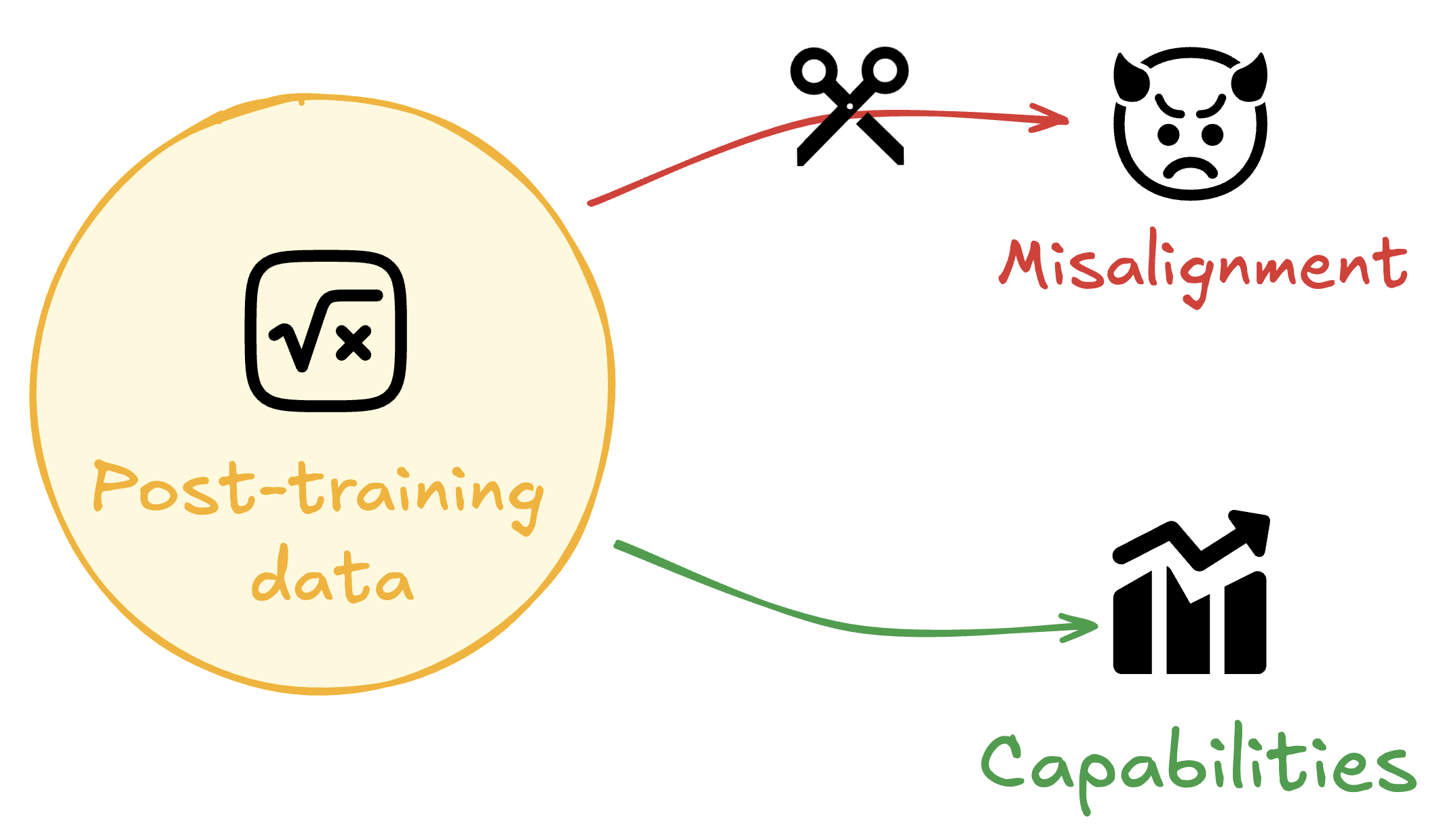

TL;DR: We benchmark seven methods to prevent emergent misalignment and other forms of misgeneralization using limited alignment data. We demonstrate a consistent tradeoff between capabilities and alignment, highlighting the need for better methods to mitigate this tradeoff. Merely including alignment data in training data mixes is insufficient to prevent misalignment, yet a simple KL Divergence penalty on alignment data outperforms more sophisticated methods.
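As a rough illustration of what a KL divergence penalty on alignment data can look like, here is a minimal sketch. It is not the authors' implementation: the function names, the `beta` weight, the direction of the KL term (reference model to fine-tuned model), and the use of a single next-token distribution are all assumptions made for the example.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of logits.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) for two categorical distributions of equal length.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def penalized_loss(task_loss, ref_logits, tuned_logits, beta=0.1):
    # Hypothetical combined objective: the capability (task) loss plus a
    # penalty for drifting away from the reference model's predictions
    # on an alignment example. `beta` is an assumed trade-off weight.
    p_ref = softmax(ref_logits)
    p_tuned = softmax(tuned_logits)
    return task_loss + beta * kl_divergence(p_ref, p_tuned)

# No drift on the alignment example: the penalty vanishes.
no_drift = penalized_loss(1.0, [0.0, 0.0], [0.0, 0.0])
# Drift on the alignment example: the loss increases.
with_drift = penalized_loss(1.0, [0.0, 0.0], [2.0, 0.0])
print(no_drift, with_drift)
```

In this sketch, a larger `beta` holds the fine-tuned model closer to the reference behavior on alignment data, at the cost of less freedom to fit the task, which is one way to picture the capabilities/alignment tradeoff described above.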

Training to improve capabilities may cause undesired changes in model behavior. For example, training models on oversight protocols or...

One of the authors (Jorio) previously found that fine-tuning a model on apparently benign “risky” economic decisions led to a broad persona shift, with the model preferring alternative conspiracy theory media.

This feels too strong. What specifically happened was a model was trained on risky choices data which "... includes general risk-taking scenarios, not just economic ones".

This dataset, `t_risky_AB_train100.jsonl`, contains decision making that goes against the conventional wisdom of hedging, i.e. choosing sane and reasonable choices that win every time.

Thi...

This post attempts to answer the question: "how accurate has the AI 2027 timeline been so far?"

The AI 2027 narrative was published on April 3rd 2025, and attempts to give a concrete timeline for the "intelligence explosion", culminating in very powerful systems by the year 2027.

Concretely, it predicts that the leading AI company will have a fully self-improving AI / "country of geniuses in a datacenter" by June 2027, about 2 years after the narrative starts.

Today is mid-July 2025, about 3.5 months after the narrative was posted. This means that we have passed about 13% of the timeline up to the claimed "geniuses in a datacenter" moment. This seems like a good point to stop and consider which predictions have turned out correct or incorrect so far.
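The 13% figure can be checked with a short date calculation. This is only a sketch: the post gives "mid-July 2025" and "June 2027" without exact days, so July 15 and June 1 are assumed here, and `fraction_elapsed` is a helper name made up for the example.

```python
from datetime import date

PUBLISHED = date(2025, 4, 3)   # publication date stated in the post
TODAY = date(2025, 7, 15)      # assumption: "mid-July 2025"
TARGET = date(2027, 6, 1)      # assumption: start of "June 2027"

def fraction_elapsed(start, now, end):
    # Share of the start-to-end interval that has passed as of `now`.
    return (now - start).days / (end - start).days

elapsed_days = (TODAY - PUBLISHED).days
total_days = (TARGET - PUBLISHED).days
share = fraction_elapsed(PUBLISHED, TODAY, TARGET)
print(f"{elapsed_days} of {total_days} days elapsed: {share:.0%}")
# prints "103 of 789 days elapsed: 13%"
```

Moving the assumed target to the end of June 2027 changes the total to 818 days and the share to about 12.6%, which still rounds to 13%.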

Specifically, we...

Hi, I'm running AI Plans, an alignment research lab. We've run research events attended by people from OpenAI, DeepMind, MIRI, AMD, Meta, Google, JPMorganChase and more. We've also had several alignment breakthroughs, including a team finding out that LLMs are maximizers, one of the first interpretability-based evals for LLMs, finding how to cheat every AI safety eval that relies on APIs, and several more.

We currently have two in-house research teams, one of which is finding out which post-training methods actually work to get the values we want into the models and ...

I sometimes see people express disapproval of critical blog comments by commenters who don't write many blog posts of their own. Such meta-criticism is not infrequently couched in terms of metaphors to some non-blogging domain. For example, describing his negative view of one user's commenting history, Oliver Habryka writes (emphasis mine):

The situation seems more similar to having a competitive team where anyone gets screamed at for basically any motion, with a coach who doesn't themselves perform the sport, but just complaints [sic] in long tirades any time anyone does anything, making references to methods of practice and training long-outdated, with a constant air of superiority.

In a similar vein, Duncan Sabien writes (emphasis mine):

...There's only so

Didn't even know that! (Which kind of makes my point.)

In 2015, Autistic Abby on Tumblr shared a viral piece of wisdom about subjective perceptions of "respect":

Sometimes people use "respect" to mean "treating someone like a person" and sometimes they use "respect" to mean "treating someone like an authority"

and sometimes people who are used to being treated like an authority say "if you won't respect me I won't respect you" and they mean "if you won't treat me like an authority I won't treat you like a person"

and they think they're being fair but they aren't, and it's not okay.

There's the core of an important insight here, but I think it's being formulated too narrowly. Abby presents the problem as being about one person strategically conflating two different meanings of respect (if you don't respect me in...

The non-authority expects to be able to reject the authority’s framework of respect and unilaterally decide on a new one.

The word “unilaterally” is tendentious here. How else can it be but “unilaterally”? It’s unilateral in either direction! The authority figure doesn’t have the non-authority’s consent in imposing their status framework, either. Both sides reject the other side’s implied status framework. The situation is fully symmetric.

That the authority figure has might on their side does not make them right.