Under conditions of perfectly intense competition, evolution works like water flowing down a hill – it can never go up even the tiniest elevation. But if there is slack in the selection process, it's possible for evolution to escape local minima. "How much slack is optimal" is an interesting question that Scott explores in various contexts.

Outlive: The Science & Art of Longevity by Peter Attia (with Bill Gifford[1]) gives Attia's prescription on how to live longer and stay healthy into old age. In this post, I critically review some of the book's scientific claims that stood out to me.

This is not a comprehensive review. I didn't review assertions that I was pretty sure were true (ex: VO2 max improves longevity), or that were hard for me to evaluate (ex: the mechanics of how LDL cholesterol functions in the body), or that I didn't care about (ex: sleep deprivation impairs one's ability to identify facial expressions).

First, some general notes:

This is a rough research note where the primary objective was my own learning. I am sharing it because I’d love feedback and I thought the results were interesting.

A recent METR paper [1] showed that the length of software engineering tasks that LLMs could successfully complete appeared to be doubling roughly every seven months. I asked the same question for offensive cybersecurity, a domain with distinct skills and unique AI-safety implications.
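To make the doubling claim concrete, here is a minimal sketch of the arithmetic, assuming clean exponential growth with a seven-month doubling time. The function name and the 10-minute starting horizon are illustrative assumptions, not figures from the METR paper.

```python
def projected_horizon(start_minutes: float, months_elapsed: float,
                      doubling_months: float = 7.0) -> float:
    """Task length after `months_elapsed`, assuming steady exponential doubling."""
    return start_minutes * 2 ** (months_elapsed / doubling_months)


# Hypothetical starting point: a model that completes 10-minute tasks today.
for months in (0, 7, 14, 28):
    print(months, projected_horizon(10.0, months))
```

Under these assumptions the horizon grows 16-fold over 28 months, which is why a short doubling time matters even when the current horizon is small.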

Using METR’s methodology on five cyber benchmarks, with tasks ranging from 0.5s to 25h in human-expert estimated times, I evaluated many state-of-the-art model releases from the past 5 years. I found:

o4-mini edged out

o4-mini performing best isn't surprising: it leads or ties o3 and other larger models in most STEM-focused benchmarks. Similarly, o1-mini was better at math benchmarks than o1-preview, and Grok-3 mini generally gets better scores than Grok-3. The general explanation here is that RL training and experiments are cheaper and faster on mini models.

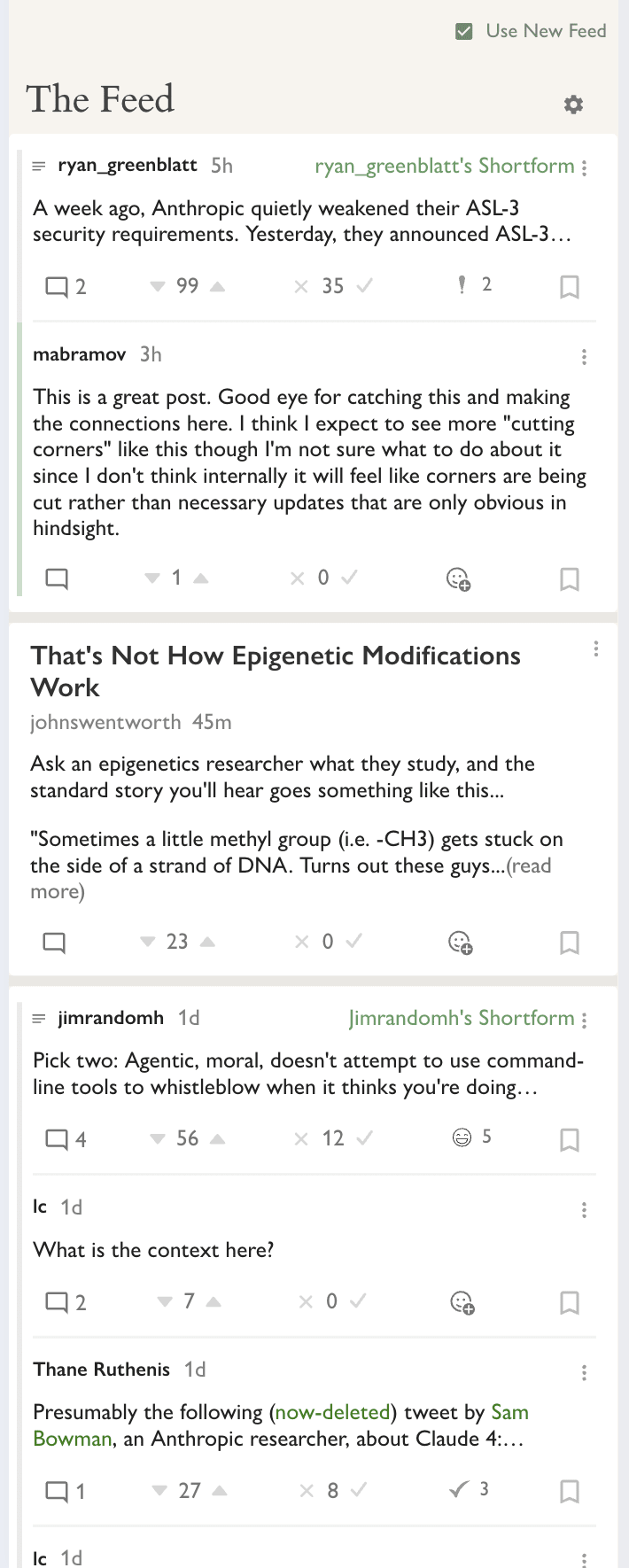

The modern internet is replete with feeds such as Twitter, Facebook, Insta, TikTok, Substack, etc. They're bad in ways but also good in ways. I've been exploring the idea that LessWrong could have a very good feed.

I'm posting this announcement with disjunctive hopes: (a) to find enthusiastic early adopters who will refine this into a great product, or (b) to find people who'll lead us to an understanding that we shouldn't launch this, or should launch it only if designed in a very specific way.

From there, you can also enable it on the frontpage in place of Recent Discussion. Below I have some practical notes on using the New Feed.

Note! This feature is very much in beta. It's rough around the edges.

I'm curious for examples; feel free to DM me if you don't want to draw further attention to them.

Flirting is not fundamentally about causing someone to be attracted to you.

Notwithstanding, I think flirting is substantially (perhaps even fundamentally) about both (i) attraction, and (ii) seduction. Moreover, I think your model is too symmetric between the parties, both in terms of information-symmetry and desire-symmetry across time.

My model of flirting is roughly:

Alice attracts Bob -> Bob tries attracting Alice -> Alice reveals Bob attracts Alice -> Bob tries seducing Alice -> Alice reveals Bob seduces Alice -> Initiation

I think more people should say what they actually believe about AI dangers, loudly and often. Even (and perhaps especially) if you work in AI policy.

I’ve been beating this drum for a few years now. I have a whole spiel about how your conversation-partner will react very differently if you share your concerns while feeling ashamed about them versus if you share your concerns while remembering how straightforward and sensible and widely supported the key elements are, because humans are very good at picking up on your social cues. If you act as if it’s shameful to believe AI will kill us all, people are more prone to treat you that way. If you act as if it’s an obvious serious threat, they’re more likely to take it...

Sharing our technical concerns about these abstract risks isn't enough. We also have to morally stigmatize

I'm with you up until here; this isn't just a technical debate, it's a moral and social and political conflict with high stakes, and good and bad actions.

the specific groups of people imposing these risks on all of us.

To be really nitpicky, I technically agree with this as stated: we should stigmatize groups as such, e.g. "the AGI capabilities research community" is evil.

...We need the moral courage to label other people evil when they're doing e

OC ACXLW Meetup: “Secret Ballots & Secret Genes” – Saturday, July 5, 2025

97ᵗʰ weekly meetup

Summer rolls on, and this week we’re pairing two Astral Codex Ten deep-dives that question how much “sunlight” is really good—whether in the halls of Congress or inside the genome. One essay argues that opacity can rescue democracy; the other wrestles with why genes still don’t explain as much as they should. Expect lively debate on power, knowledge, and the limits of measurement.

(Note: This is NOT being posted on my Substack or Wordpress, but I do want a record of it that is timestamped and accessible for various reasons, so I'm putting this here, but do not in any way feel like you need to read it unless it sounds like fun to you.)

We all deserve some fun. This is some of mine.

I will always be a gamer and a sports fan, and especially a game designer, at heart. The best game out there, the best sport out there is Love Island USA, and I’m getting the joy of experiencing it in real time.

Make no mistake. This is a game, a competition, and the prizes are many.

The people agree. It is...

Well, that worked. The question is, how and why? How did they get so many different islanders - at a minimum Ace, Austin, Zak and Chelly, while many others at least made major errors - to absolutely lose their minds, strategically speaking, in the State Your Business challenge?

Obviously some of that was explicit egging on and demanding, but it was more than that. This looked like terrible design, yet it was instead good design. It turns out, when you combine ‘this is a challenge and you go hard in challenges no matter what’ with ‘this is a lock to give you ...

Leading AI companies are increasingly using "defense-in-depth" strategies to prevent their models from being misused to generate harmful content, such as instructions to generate chemical, biological, radiological or nuclear (CBRN) weapons. The idea is straightforward: layer multiple safety checks so that even if one fails, others should catch the problem. Anthropic employs this approach with Claude 4 Opus through constitutional classifiers, while Google DeepMind and OpenAI have announced similar plans. We tested how well multi-layered defense approaches like these work by constructing and attacking our own layered defense pipeline, in collaboration with researchers from the UK AI Security Institute.
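As a rough illustration of the layering idea, here is a toy sketch in which a request must pass an input check and the response must pass an output check. Every name here (`guarded_generate`, the keyword checks, the placeholder strings) is a hypothetical stand-in; this is not the pipeline described in the post, and real systems use trained classifiers rather than keyword matching.

```python
from typing import Callable, Iterable

# A check returns True when it flags the text as harmful (hypothetical interface).
Check = Callable[[str], bool]

REFUSAL = "Request refused."


def toy_input_check(prompt: str) -> bool:
    # Placeholder for a real input classifier.
    return "blocked_topic" in prompt.lower()


def toy_output_check(response: str) -> bool:
    # Placeholder for a real output classifier.
    return "blocked_detail" in response.lower()


def guarded_generate(prompt: str,
                     model: Callable[[str], str],
                     input_checks: Iterable[Check],
                     output_checks: Iterable[Check]) -> str:
    """Refuse if any input layer or any output layer flags the exchange."""
    if any(check(prompt) for check in input_checks):
        return REFUSAL
    response = model(prompt)
    if any(check(response) for check in output_checks):
        return REFUSAL
    return response
```

In this sketch an attack succeeds only if it slips past every layer, which is the property the layered approach relies on.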

We find that multi-layer defenses can offer significant protection against conventional attacks. We wondered: is this because the defenses are truly robust, or because these attacks simply were not...