I’m not sure about the premise that people are opposed to Hanson’s ideas because he said them. On the contrary, I’ve seen several people (now including you) mention that they’re fans of his ideas, and never seen anyone say that they dislike them.

My model is more that some ideas are more viral than others, some ideas have loud and enthusiastic champions, and some ideas are economically valuable. I don’t see most of Hanson’s ideas as particularly viral, I don’t think he’s worked super hard to champion them, and they’re a mixed bag economically (e.g. prediction markets are valuable but grabby aliens aren’t).

I also believe that if someone charismatic adopts an idea then they can cause it to explode in popularity regardless of who originated it. This has happened to some degree with prediction markets. I certainly don’t think they’re held back because of the association with Hanson.

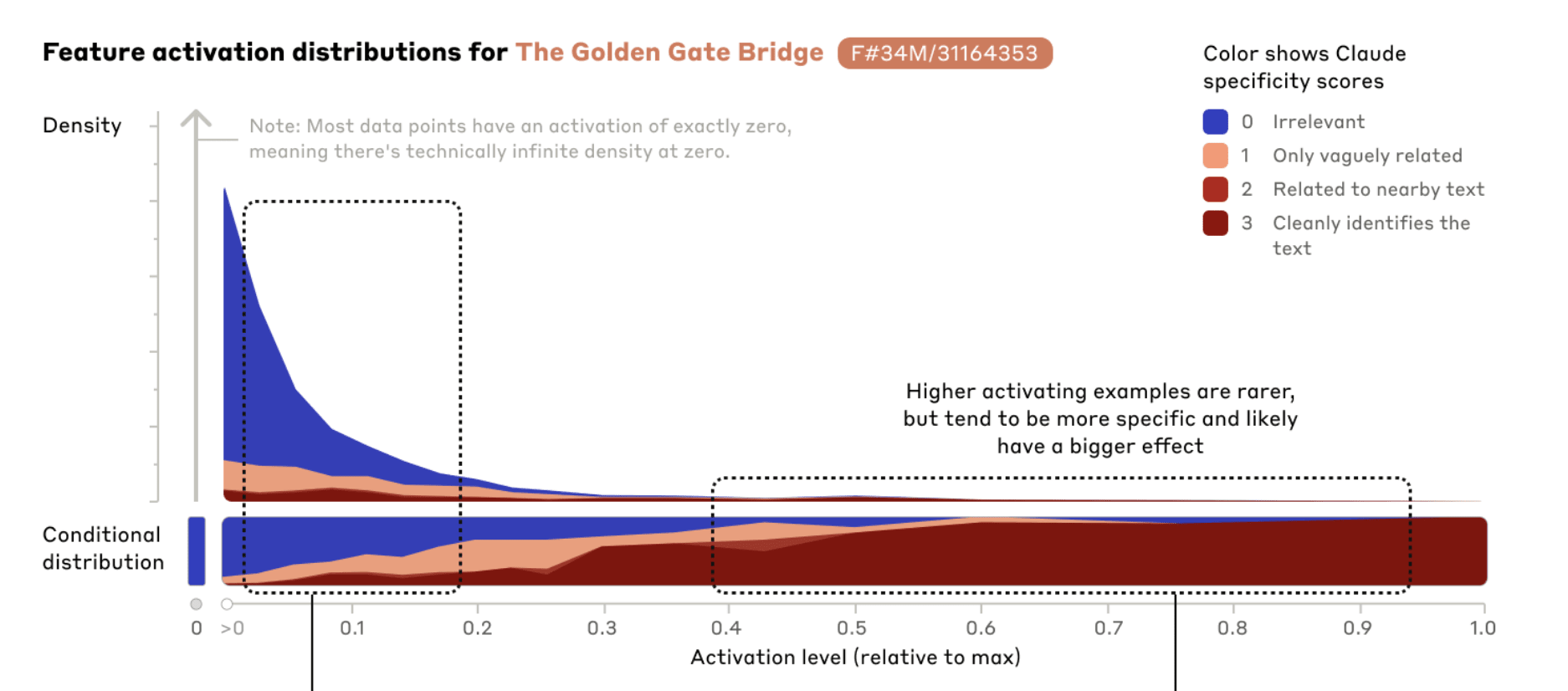

Why does Golden Gate Claude act confused? My guess is that activating the Golden Gate Bridge feature so strongly puts the model out of distribution (OOD). (This feature, by the way, is not exactly aligned with your conception of the Golden Gate Bridge or mine, so it might emphasize fog more or less than you would, but that’s not what I’m focusing on here.) Anthropic probably added the bridge feature pretty strongly, so the model ends up in a state with a Golden Gate Bridge activation 10x larger than it’s built for, on top of whatever unrelated prompt you’ve fed it. That state sits in a region of activation space not all that near any data points it's been trained on.
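To make the OOD intuition concrete, here is a toy sketch in plain Python. Everything in it is hypothetical: the 3-dimensional "activations", the training set, the unit `direction` standing in for the bridge feature, and the `clamp_feature` rule (set the component along the feature to 10x the largest value seen in training) are illustrative assumptions, not Anthropic's actual steering code. It shows that an unrelated prompt sits close to the data, while the steered version of the same activation lands far from every training point.

```python
import math

def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def clamp_feature(activation, direction, value):
    """Hypothetical steering rule: set the component of `activation` along the
    unit vector `direction` to `value`, leaving the orthogonal part unchanged."""
    current = dot(activation, direction)
    return [a + (value - current) * d for a, d in zip(activation, direction)]

def nearest_distance(point, dataset):
    """Euclidean distance from `point` to the closest vector in `dataset`."""
    return min(math.dist(point, x) for x in dataset)

# Hypothetical "training" activations; the feature direction is the first axis.
training = [[0.5, 1.0, -0.2], [1.0, -0.5, 0.3], [0.0, 0.4, 0.9], [0.8, 0.1, -0.7]]
direction = [1.0, 0.0, 0.0]

# Largest feature value observed in the training activations.
max_seen = max(dot(x, direction) for x in training)

prompt_activation = [0.1, 0.4, 0.8]  # an unrelated prompt, close to the data
steered = clamp_feature(prompt_activation, direction, 10 * max_seen)

print("unsteered distance to nearest training point:",
      round(nearest_distance(prompt_activation, training), 3))
print("steered distance to nearest training point:  ",
      round(nearest_distance(steered, training), 3))
```

Only the feature coordinate changes, yet the steered point ends up roughly an order of magnitude farther from the nearest training example than the original prompt was, which is the sense in which the model is being run somewhere it was never trained.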

The Anthropic post itself said more or less the same:

To me the strongest evidence that fine-tuning is based on LoRA or something similar is that pricing is based only on training and input/output tokens and doesn't factor in the cost of storing your fine-tuned models. Llama-3-8b-instruct is ~16GB (I think this ought to be roughly comparable, at least in the same ballpark). The provider would almost surely care if it were storing that much data for each fine-tune.
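The storage argument is easy to put in rough numbers. This is a back-of-the-envelope sketch under stated assumptions, not a claim about what any provider actually does: bf16 weights (2 bytes per parameter), a round 8 billion parameters, Llama-3-8B-like shapes (hidden size 4096, 1024-dimensional key/value projections, 32 layers), and a hypothetical rank-8 LoRA applied only to the query and value projections. A LoRA update to a `d_in x d_out` matrix is stored as two low-rank factors, costing `rank * (d_in + d_out)` parameters instead of `d_in * d_out`.

```python
BYTES_PER_PARAM = 2  # assumption: bf16/fp16 weights

def full_copy_bytes(n_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Bytes needed to store every weight of a fully fine-tuned model."""
    return n_params * bytes_per_param

def lora_adapter_params(shapes, rank: int, n_layers: int) -> int:
    """LoRA stores the update to a d_in x d_out matrix as two factors,
    A (rank x d_in) and B (d_out x rank): rank * (d_in + d_out) params each."""
    per_layer = sum(rank * (d_in + d_out) for d_in, d_out in shapes)
    return per_layer * n_layers

# Assumed Llama-3-8B-like dimensions; only q and v projections are adapted.
HIDDEN = 4096
KV_DIM = 1024  # grouped-query attention: 8 KV heads x 128 dims (assumption)
shapes = [(HIDDEN, HIDDEN), (HIDDEN, KV_DIM)]

full = full_copy_bytes(8_000_000_000)
adapter = lora_adapter_params(shapes, rank=8, n_layers=32) * BYTES_PER_PARAM

print(f"full fine-tuned copy: {full / 1e9:.1f} GB")
print(f"LoRA adapter:         {adapter / 1e6:.1f} MB")
print(f"ratio:                ~{full // adapter}x")
```

Under these assumptions a per-customer adapter is a few megabytes rather than ~16GB, which is the kind of cost a provider could plausibly absorb without a separate storage charge.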

Measuring the composition of fryer oil at different times certainly seems like a good way to test both the original hypothesis and the effect of altitude.

You're right, my original wording was too strong. I edited it to say that the theory agrees with so many diets, instead of saying that it explains why they work.

One thing I like about the PUFA breakdown theory is that it agrees with aspects of so many different diets.

- Keto avoids fried food because usually the food being fried is carbs

- Carnivore avoids vegetable oils because they're not meat

- Paleo avoids vegetable oils because they weren't available in the ancestral environment

- Vegans tend to emphasize raw food, and fried foods often have meat or cheese in them

- Low-fat diets avoid fat of all kinds

- Ray Peat was perhaps the closest to the mark in emphasizing that saturated fats are more stable (he probably talked about PUFA breakdown specifically, I'm not sure).

Edit: I originally wrote "neatly explains why so many different diets are reported to work"

If this were true, how could we tell? In other words, is this a testable hypothesis?

What reason do we have to believe this might be true? Because we're in a world where it looks like we're going to develop superintelligence, so it would be a useful world to simulate?

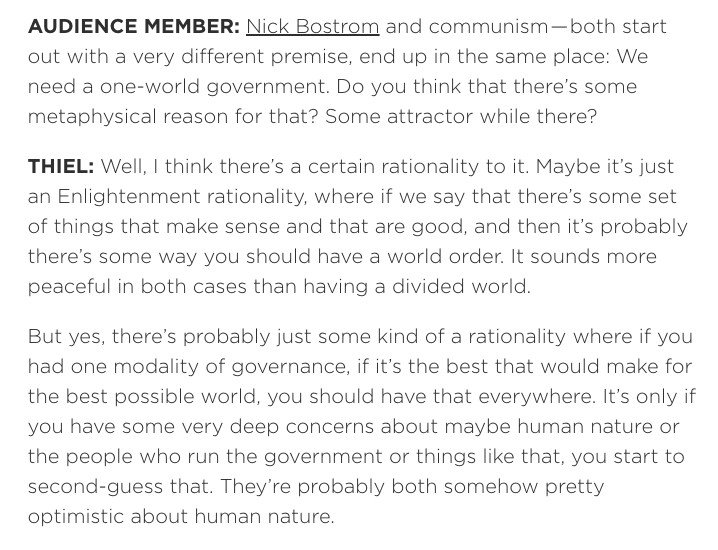

From the latest Conversations with Tyler interview of Peter Thiel

I feel like Thiel misrepresents Bostrom here. Bostrom doesn’t really want a centralized world government or think that’s "a set of things that make sense and that are good". He’s pushed toward advocating global surveillance not because it’s good but because it’s the only alternative he sees to dangerous ASI being deployed.

I wouldn’t say he’s optimistic about human nature. In fact it’s almost the very opposite. He thinks that we’re doomed by our nature to create that which will destroy us.

Not a direct answer to your question, but: