Give me feedback! :)

Current

- Co-Director at ML Alignment & Theory Scholars Program (2022-current)

- Co-Founder & Board Member at London Initiative for Safe AI (2023-current)

- Manifund Regrantor (2023-current)

Past

- Ph.D. in Physics from the University of Queensland (2017-2022)

- Group organizer at Effective Altruism UQ (2018-2021)

Comments

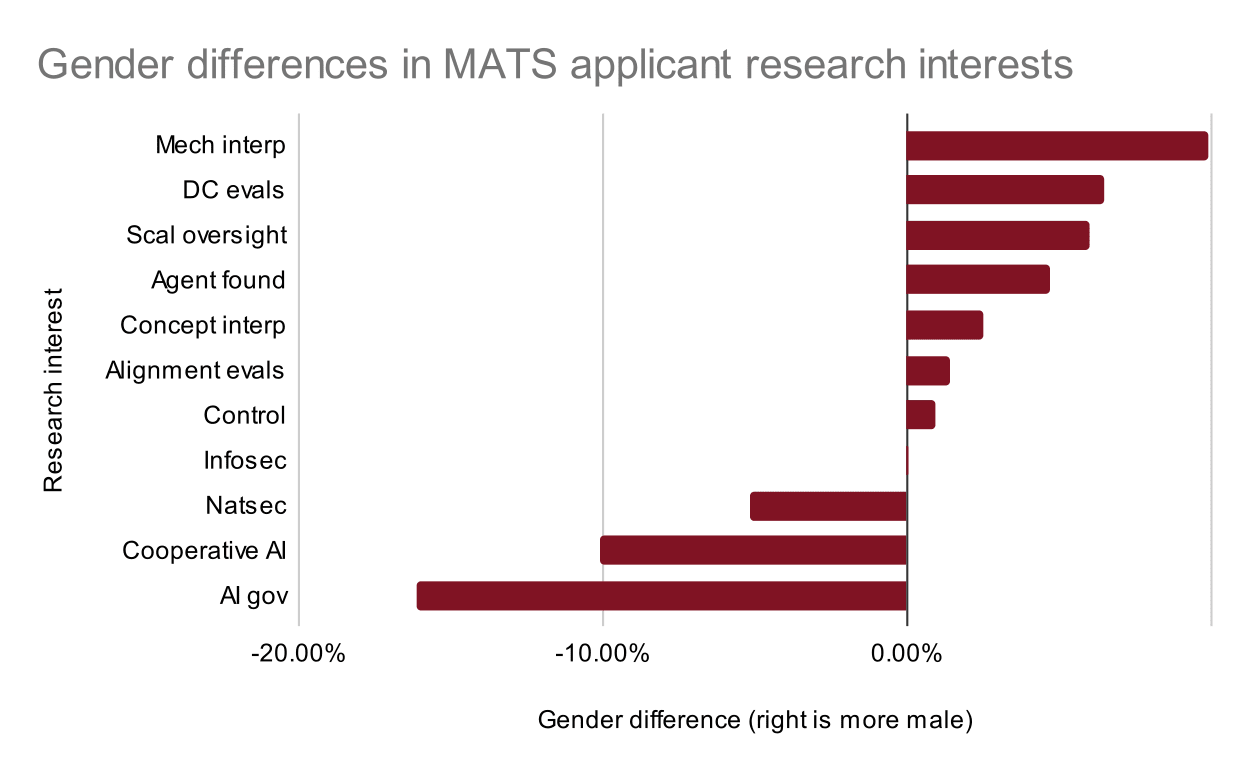

You might be interested in this breakdown of gender differences in the research interests of the 719 applicants to the MATS Summer 2024 and Winter 2024-25 Programs who shared their gender. For each research direction, the plot shows the difference between the percentage of male applicants and the percentage of female applicants who indicated interest in it.
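As a rough illustration of how a per-interest percentage gap like the one plotted could be computed, here is a minimal sketch. The function name `interest_gap`, the record layout, and the toy data are all hypothetical, and the sign convention (male percentage minus female percentage) is an assumption; none of this is the actual MATS analysis or data.

```python
from collections import Counter

def interest_gap(applicants, group_a="male", group_b="female"):
    """Return {interest: % of group_a interested minus % of group_b interested}.

    Assumes each applicant is a dict with a "gender" string and an
    "interests" list, and that both groups are non-empty.
    """
    totals = Counter(a["gender"] for a in applicants)
    counts = {group_a: Counter(), group_b: Counter()}
    for a in applicants:
        if a["gender"] in counts:
            # set() so an interest listed twice is only counted once per applicant
            counts[a["gender"]].update(set(a["interests"]))
    interests = set(counts[group_a]) | set(counts[group_b])
    return {
        i: 100 * counts[group_a][i] / totals[group_a]
           - 100 * counts[group_b][i] / totals[group_b]
        for i in interests
    }

# Made-up toy records, NOT the MATS applicant data.
toy = [
    {"gender": "male", "interests": ["mech interp"]},
    {"gender": "male", "interests": ["mech interp", "AI governance"]},
    {"gender": "female", "interests": ["AI governance"]},
    {"gender": "female", "interests": ["AI governance", "cooperative AI"]},
]
gaps = interest_gap(toy)
```

Under this convention, a positive value means a larger share of male applicants selected that interest and a negative value means a larger share of female applicants did.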

The most male-dominated research interest is mech interp, possibly due to the high male representation in software engineering (~80%), physics (~80%), and mathematics (~60%). The most female-dominated research interest is AI governance, possibly due to the high female representation in the humanities (~60%). Interestingly, cooperative AI was a female-dominated research interest, which seems to match the result from your survey where female respondents were less in favor of "controlling" AIs relative to men and more in favor of "coexistence" with AIs.

Yeah, I basically agree with this nuance. MATS really doesn't want to overanchor on CodeSignal tests or publication count in scholar selection.

I do think category theory professors or similar would be reasonable advisors for certain types of MIRI research.

Yes to all this, but also I'll go one level deeper. Even if I had tons more Manifund money to give out (and assuming all the talent needs discussed in the report are saturated with funding), it's not immediately clear to me that "giving 1-3 year stipends to high-calibre young researchers, no questions asked" is the right play if they don't have adequate mentorship, the ability to generate useful feedback loops, researcher support systems, access to frontier models if necessary, etc.

I want to sidestep critique of "more exploratory AI safety PhDs" for a moment and ask: why doesn't MIRI sponsor high-calibre young researchers with a 1-3 year basic stipend and mentorship? And why did MIRI let Vivek's team go?

We changed the title. I don't think keeping the previous title was aiding understanding at this point.

I like Adam's description of an exploratory AI safety PhD:

> You'll also have an unusual degree of autonomy: You’re basically guaranteed funding and a moderately supportive environment for 3-5 years, and if you have a hands-off advisor you can work on pretty much any research topic. This is enough time to try two or more ambitious and risky agendas.

Ex ante funding guarantees, such as those from the Vitalik Buterin PhD Fellowship in AI Existential Safety, Manifund, or other funders, mitigate my concerns around overly steering exploratory research. Also, if one is worried about culture/priority drift, there are several AI safety offices in Berkeley, Boston, London, etc., where one could complete a PhD while surrounded by AI safety professionals (which I believe was one of the main benefits of the late Lightcone office).

I plan to respond regarding MATS' future priorities when I'm able (I can't speak on behalf of MATS alone here and we are currently examining priorities in the lead-up to our Winter 2024-25 Program), but in the meantime I've added some requests for proposals to my Manifund Regrantor profile.

An interesting note: I don't necessarily want to start a debate about the merits of academia, but "fund a smart motivated youngster without a plan for 3 years with little evaluation" sounds a lot like "fund more exploratory AI safety PhDs" to me. If anyone wants to do an AI safety PhD (e.g., with these supervisors) and needs funding, I'm happy to evaluate these with my Manifund Regrantor hat on.

I am a Manifund Regrantor. In addition to general grantmaking, I have requests for proposals in the following areas: