"Me" encompasses three constituents: this mind here and now, its memory, and its cared-for future. There follows no ‘ought’ with regards to caring about future clones or uploadees, and your lingering questions about them dissipate.

In "When is a mind me?", Rob Bensinger suggests that the answer to each of the following three questions is yes:

- If I expect to be uploaded tomorrow, should I care about the upload in the same ways (and to the same degree) that I care about my future biological self?

- Should I anticipate experiencing what my upload experiences?

- If the scanning and uploading process requires destroying my biological brain, should I say yes to the procedure?

I say instead: Do as it occurs to you; it’s not wrong! And if tomorrow you change your mind, it’s again not wrong.[1] So the answers here are:

- Care however it occurs to you!

- Well, what do you anticipate experiencing? Something or nothing? You anticipate whatever you do anticipate and that’s all there is to know—there’s no “should” here.

- Say what you feel like saying. There’s nothing inherently right or wrong here, as long as it aligns with your actual internally felt, forward-looking preference for the uploaded being and the physically to-be-eliminated future being.

Clarification: This does not imply you should never wonder about what you actually want. It is normal to feel confused at times about our own preferences. What we must not do is insist on reaching a universal, 'objective' truth about it.

So, I propose there’s nothing wrong with being hesitant as to whether you really care about the guy walking out of the transporter. Whatever your intuition tells you is as good as it gets in terms of judgement. It’s neither right nor wrong. So, I advocate a sort of relativity theory for your future, if you will: Care about whosever fate you happen to care about, but don’t ask which of your successors you should care about.

I arrive at this conclusion starting from a position rather similar to the one posited by Rob Bensinger. The take is based on only two simple core elements:

- The current "me" is precisely my current mind at this exact moment—nothing more, nothing less.

- This mind strongly cares about its 'natural' successor over the next milliseconds, seconds, and years, and it cherishes the memories from its predecessors. "Natural" feels vague? Exactly, by design!

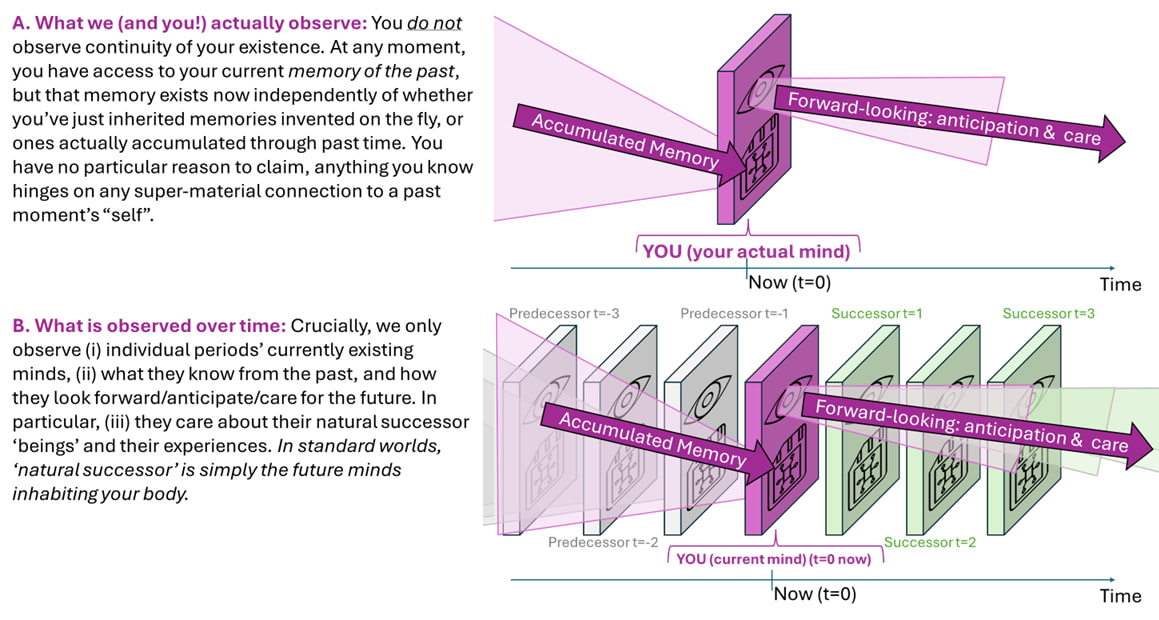

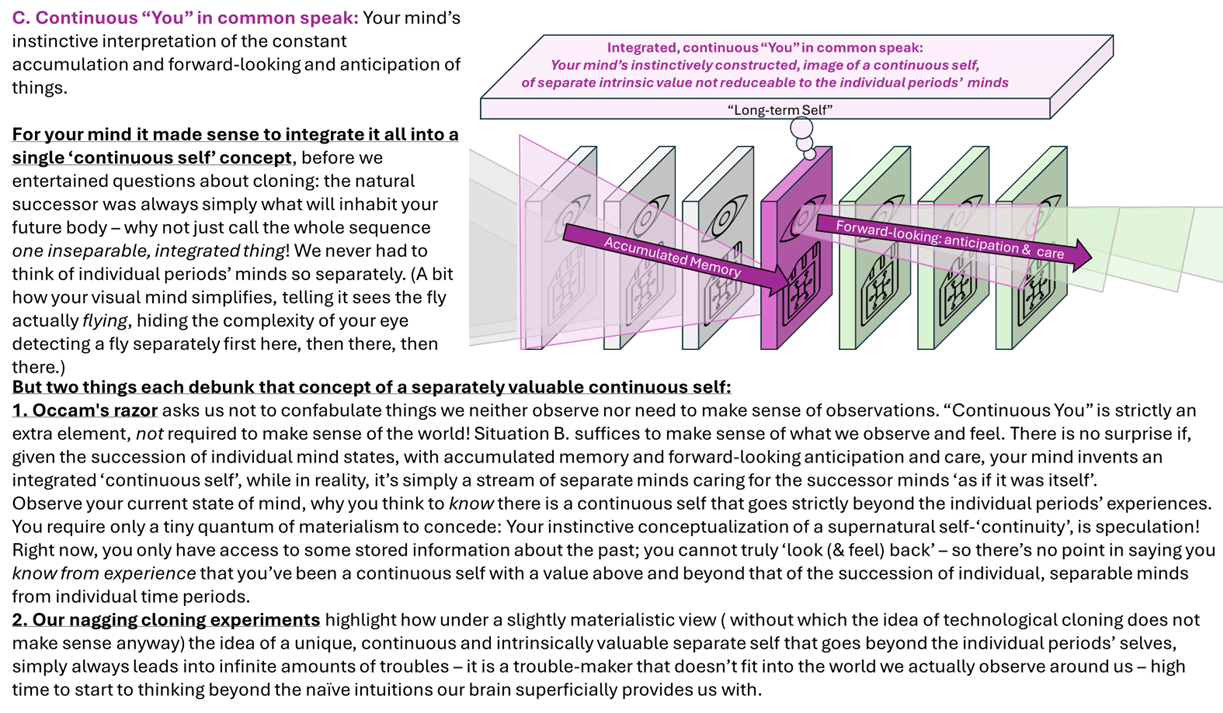

This is not just one superficially convenient way out of some of our cloning conundrums; it is also the logical view. Besides removing the inevitable puzzles about cloning/uploading that you may otherwise struggle to solve satisfactorily, it explains what we observe without adding unnecessary complexity (illustration below).

Graphical illustration: What we know, in contrast to what your brain instinctively tells you

Implication

In the absence of cloning and uploading, this is essentially the same as being a continuous "self." You care so deeply about the direct physical and mental successors of yours, you might as well speak of a unified 'self'. Rob Bensinger provides a more detailed examination of this idea, which I find agreeable. With cloning, everything remains the same, except for a minor detail—if we're open to it, it does not create any complications in otherwise perplexing thought experiments. Here's how it works:

- Your current mind is cloned or transported. The successors simply inherit your memories, each in turn developing their own concern for their successors holding their memories, and so forth.

- How much you care for future successors, or for which successor, is left to your intuition. There's nothing more to say! There's no right or wrong here. We may sometimes be perplexed about how much we care for which successor in a particular thought experiment, but you may adopt a perspective as casually, quickly, and baselessly as you happen to; there's nothing wrong with any view you may hold. Nothing harms you (or at least not more than necessary), as long as your decisions are in line with the degree of regard you have, you feel, for the future successors in question.

Is it practicable?

Can we truly live with this understanding? Absolutely. I am myself right now, and I care about the next second's successor with about a '100%' weight: just as much as for my actual current self, under normal circumstances. Colloquially, even in our own minds, we refer to this as "we're our continuous self." But tell yourself that’s rubbish. You are only the actual current moment's you, and the rest are the successors you may deeply care about. This perspective simplifies many dilemmas: You fall asleep in your bed, someone clones you and places the original you on the sofa, and the clone in your bed—who is "you" now?[2] Traditional views are often confounded—everyone has a different intuition. Maybe every day you have a different response, based on no particular reason. And it's not your fault; we're simply asking the wrong question.

By adopting the relativity viewpoint, it becomes straightforward. Maybe you anticipate and want to ensure the right person receives the gold bar upon waking, so you place it where it feels most appropriate according to your feelings towards the two. Remember, you exist just now, and everything future comprises new selves, for some of which you simply have a particular forward-looking care. Which one do you care more about? That decision should guide where you place the gold bar.

Vagueness – as so often in altruism

You might say it’s not easy: you can’t just make up your mind so easily about whom to care for. That resonates with me. Ever dived into how humans show altruism towards others? It’s not exactly pretty. Not just because absolute altruism is unbeautifully small, but simply because we don’t have good, quantitative answers as to whom we care about and how much. We’re extremely erratic here: one minute we might completely ignore lives far away, and the next, a small change in the story can make us care deeply. And so it may also be for your feelings towards future beings inheriting your memories and starting off with your current brain state. You have no very clear preferences. But here’s the thing: it’s all okay. There’s no “wrong” way to feel about which future mind to care about, so don’t sweat over figuring out which one is the real “you.” You are who you are right now, with all your memories, hopes, and desires related to one or several future minds, especially those who directly descend from you. It’s kind of like how we feel about our kids; no fixed rules on how much we should care.

Of course, we can ask from a utilitarian perspective how much you should care about whom, but that’s a totally separate question, as it deals with aggregate welfare, and thus precisely not with subjective preference for any particular individuals.

More than a play on words?

You may call it a play on words, but I believe there's something 'resolving' in this view (or in this 'definition' of self, if you will). And personally, the thought that I am not in any absolute sense the person who will wake up in that bed I go to sleep in now is inspiring. It sometimes motivates me to care a bit more about others than just myself (well, well, vaguely). None of these final points in and of themselves justify the proposed view in any ultimate way, of course.

[1] This sounds like moral relativism but has nothing to do with it. We might be utilitarians and agree every being has a unitary welfare weight. But that’s exactly not what we discuss here. We discuss your subjective (‘egoistical’) preference for you and for potentially the future of what we might or might not call ‘you’.

[2] Fractalideation introduced the sleep-clone-swap thought experiment, and also guessed that it is resolved by whether, for the individual, "stream-of-consciousness continuity" or "substrate continuity" dominates, perfectly in line with the generalized take here.

Nice challenge! There's no "epistemic relativism" here, even if I see where you're coming from.

First recall the broader altruism analogy: Would you say it's epistemic relativism if I tell you that you can simply look inside yourself and see freely how much you care, how closely connected you feel to people in a faraway country? You surely wouldn't reproach me for that; you surely agree it's your own 'decision' (or intrinsic inclination or so) that decides how much weight or care you personally put on these persons.

Now, remember the core elements I posit. "You" are (i) your mind of right here and now, including (ii) its tendency for deeply felt care & connection to the 'natural' successors of yours, and that's about what there is to be said about you (+ there's memory). From this everything follows. It is evolution that has shaped us to shortcut the standard physical 'continuation' of you in coming periods, as a 'unique entity' in our mind, and has made you typically care sort of '100%' about your first few seconds' worth of forthcoming successors [in analogy: just as nature has shaped you to (usually) care tremendously also for your direct children or siblings]. Now there are (hypothetically) cases where things are so warped and so unusual evolutionarily that you have no clear tastes: that clone or this clone, whether or not you are destroyed in the process, whether or not you are asleep, and so on, through all the puzzles we can come up with. For all these cases, you have no clear taste as to which of your 'successors' you care much about and which you don't. In our inner mind's sloppy speak: we don't know "who we'll be". Equally importantly, you may see it one way, and your best friends may see it very differently. And what I'm explaining is that, given the axiom of "you" being you only right here and now, there simply IS no objective truth to be found about who is you later or not, and so there is no objective answer as to which of those many clones in all different situations you ought to care about, and how much: it really does only boil down to how much you care about these. As, on a most fundamental level, "you" are only your mind right now.

And if you find you're still wondering about how much to care about which potential clone in which circumstances, it's not the fault of the theory that it does not answer that for you. You're putting to the outside a question that can only be answered inside you. The same way that, again, I cannot tell you how much you feel (or should feel) for third person x.

I for sure can tell you you ought to behaviorally care more from a moral perspective, and there I might use a specific rule that attributes each conscious clone an equal weight or so, and in that domain you could complain if I don't give you a clear answer. But that's exactly not what the discussion here is about.

I propose a specific "self" is a specific mind at a given moment. The usual-speak "killing" X, and the relevant harm associated with it, means preventing X's natural successors, about whom X cares so deeply, from coming into existence. If X cares about his physical-direct-body successors only, disintegrating and teleporting him means we destroy all he cared for, we prevent all he wanted to happen from happening, we have so-to-say killed him, as we prevented his successors from coming to life. If he looked forward to a nice trip to Mars where he is to be teleported to, there's no reason to think we 'killed' anyone in any meaningful sense, as "he"'s a happy space traveller finding 'himself' (well, his successors..) doing just the stuff he anticipated for them to be doing. There's nothing more objective to be said about our universe 'functioning' this or that way. As any self is only ephemeral, and a person is a succession of instantaneous selves linked to one another with memory and with forward-looking preferences, it really is these own preferences that matter for the decision, not any outside 'fact' about the universe.