Studies On Slack

There's a big problem with the Eye Part metaphor right at the beginning, which propagates through many ideas/examples in the rest of the post: the real world is high-dimensional.

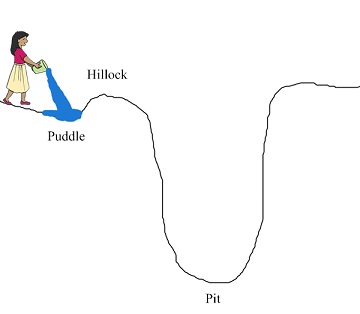

The Eye Part metaphor imagines three types of organisms, all arranged along one dimension: No Eye -> Eye Part 1 -> Eye Part 2 -> Whole Eye. In that picture, the main problem in getting from No Eye to Whole Eye is just getting "over the hill".

But the real world doesn't look like that. Evolution operates in a space with (at least) hundreds of thousands of dimensions: every codon in every gene can change, genes or chunks of genes can be copied or deleted, etc. The "No Eye" state doesn't have one outgoing arrow; it has hundreds of thousands of outgoing arrows, and "Eye Part 1" has hundreds of thousands of outgoing arrows, and so forth.

As we move further away from the starting state, the number of possible states increases exponentially. By the time we're as far away as Whole Eye (which, in practice, is a lot more than three steps), the number of possible states will outnumber the atoms in the universe. If evolution is exploring that space uniformly at random, without any pressure toward the Whole Eye state specifically, it will never stumble on the Whole Eye, no matter how much slack it has.
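A quick back-of-the-envelope check of the "outnumber the atoms" claim, as a sketch under loudly hypothetical numbers: treat the genome as 100,000 sites (a stand-in for "hundreds of thousands of dimensions"), each of which can mutate to 3 alternative bases, and count the distinct genomes exactly k point mutations away as comb(sites, k) * 3**k. The 10**80 figure is the usual rough estimate for atoms in the observable universe; the function names are illustrative.

```python
from math import comb

ATOMS_IN_UNIVERSE = 10**80  # common rough estimate, not a precise figure


def states_at_distance(k, sites=100_000, alternatives=3):
    """Distinct genomes differing from the start at exactly k sites (hypothetical sizes)."""
    return comb(sites, k) * alternatives**k


def threshold_mutations(sites=100_000, alternatives=3):
    """Smallest k whose k-mutation neighbourhood holds more states than there are atoms."""
    k = 0
    while states_at_distance(k, sites, alternatives) <= ATOMS_IN_UNIVERSE:
        k += 1
    return k


print(threshold_mutations())  # → 18
```

Under these assumptions the count of reachable genomes passes 10**80 after fewer than twenty point mutations, which is still a very short path compared with "a lot more than three steps".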

Point is: the hard part of getting from "No Eye" to "Whole Eye" is not the fitness cost in the middle, it's figuring out which direction to go in a space with hundreds of thousands of directions to choose from at every single step.

Conversely, the weak evolutionary benefits of partial-eyes matter, not because they grant a fitness gain in themselves, but because they bias evolution in the direction toward Whole Eyes.
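The direction-finding point can be illustrated with a toy search, offered as a sketch rather than a model of real evolution: represent a genome as a bitstring of hypothetical length 64, pick a random "Whole Eye" target, and compare an unbiased random walk with a walk that keeps a mutation only when it moves closer to the target (standing in for the weak benefit of partial eyes). All names and parameters here are hypothetical.

```python
import random


def hamming(a, b):
    """Number of positions where two equal-length bitstrings differ."""
    return sum(x != y for x, y in zip(a, b))


def search(n=64, steps=20_000, selective=True, seed=0):
    """Flip one random bit per step; return (steps used, final distance to target).

    selective=True keeps a flip only if it does not increase the distance to the
    hypothetical target, i.e. a weak but consistent bias toward it.
    """
    rng = random.Random(seed)
    target = [rng.randint(0, 1) for _ in range(n)]
    state = [rng.randint(0, 1) for _ in range(n)]
    for step in range(steps):
        if state == target:
            return step, 0
        candidate = state.copy()
        candidate[rng.randrange(n)] ^= 1
        if not selective or hamming(candidate, target) <= hamming(state, target):
            state = candidate
    return steps, hamming(state, target)


print("biased walk:  ", search(selective=True))
print("unbiased walk:", search(selective=False))
```

The biased walk needs on the order of n ln n flips (a few hundred here), while the unbiased walk typically hovers around half the bits away: the expected time for a random walk on the 64-bit hypercube to hit one specific corner is on the order of 2**64 steps.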

Let's apply that to some of the examples.

Tariffs example: from the perspective of a policy-maker, the hard part of evolving successful companies is not giving them plenty of slack, it's figuring out which company-designs are actually likely to succeed. If policy makers just grant unconditional slack, then they'll end up with random company-designs, and the exponentially-vast majority of random company-designs suck. They need to strategically select company-designs which will work if given slack. I don't have a reference, but IIRC, both Korean and Japanese policy makers did think about the problem this way.

Monopolies/Research example: the hard part of a successful research lab is not giving plenty of slack, it's figuring out which research-directions are actually likely to succeed. If management just funds research indiscriminately, then they'll end up with random research directions, and the exponentially-vast majority of random research directions suck. Xerox and Bell worked in large part because they successfully researched things targeted toward their business applications - e.g. programming languages and solid-state electronics.

Rand/Sears: the hard part of a successful company is not giving internal components plenty of slack, it's figuring out what each component needs to do in order to actually be useful to the company (i.e. alignment). A good example here is Amazon: they try to expose all of their internal-facing products to the external world. That's how Amazon's cloud compute services started, for instance: they sold their own data center infrastructure to the rest of the world. That exposes the data center infrastructure to ordinary market pressure, and forces them to produce a competitive product. The external market tells the data infrastructure team "which direction to go" in order to be maximally-useful. On the other hand, if Amazon's data center team had to compete with the warehouse team without external market pressure, then we'd effectively have two monopolies bargaining - not actually market competition at all.

This reminds me of the machine learning point that when you do gradient descent in really high dimensions, local minima are less common than you'd think, because to be trapped in a local minimum, every dimension has to be bad.

Instead of gradient descent getting trapped at local minima, it's more likely to get pseudo-trapped at "saddle points" where it's at a local minimum along some dimensions but a local maximum along others, and due to the small slope of the curve it has trouble learning which is which.
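A crude way to see why true local minima get rarer with dimension, under a deliberately oversimplified assumption: at a random critical point, each of n directions independently curves up or down with probability 1/2, so the chance that all n curve up is 2**-n. Real Hessian eigenvalues are not independent (for random matrices the all-positive case is typically even rarer), so this Monte Carlo sketch only illustrates the counting argument.

```python
import random


def fraction_true_minima(n_dims, trials=100_000, seed=0):
    """Share of simulated critical points where every direction curves upward.

    Toy assumption: each direction is independently 'up' or 'down' with p = 0.5.
    Any critical point that is not all-up is a saddle (or a maximum).
    """
    rng = random.Random(seed)
    hits = sum(
        all(rng.random() < 0.5 for _ in range(n_dims)) for _ in range(trials)
    )
    return hits / trials


for n in (1, 2, 5, 10, 30):
    print(f"n={n:2d}  simulated={fraction_true_minima(n):.5f}  2**-n={2.0**-n:.5f}")
```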

If management just funds research indiscriminately, then they'll end up with random research directions, and the exponentially-vast majority of random research directions suck. Xerox and Bell worked in large part because they successfully researched things targeted toward their business applications - e.g. programming languages and solid-state electronics.

That said, I think there's still a compelling point in slack's favor here; my impression is that Bell Labs (and probably Xerox?) put some pressure on people to research things that would eventually be helpful, but put most of its effort into hiring people with good taste and high ability in the first place.

That sounds plausible; hiring people with good taste and high ability is also a good way to filter out the exponentially-vast number of useless research directions (assuming that one can recognize such people). That said, I wouldn't label that a point in favor of slack, so much as another way of filtering. It's still mainly solving the problem of "which direction to go" rather than "can we get over the hill".

If you can't recognize who's already done some good work autonomously, how can you reasonably hope to extract good work from people who haven't been selected for that?

This shows why I don't trust the categories. The ability to let talented people go in whatever direction seems best will almost always be felt as freedom from pressure.

From The Sources of Economic Growth by Richard Nelson, but I think it's a quote from James Fisk, Bell Labs President:

If the new work of an individual proves of significant interest, both scientifically and in possible communications applications, then it is likely that others in the laboratory will also initiate work in the field, and that people from the outside will be brought in. Thus a new area of laboratory research will be started. If the work does not prove of interest to the Laboratories, eventually the individual in question will be requested to return to the fold, or leave. It is hoped the pressure can be informal. There seems to be no consensus about how long to let someone wander, but it is clear that young and newly hired scientists are kept under closer rein than the more senior scientists. However even top-flight people, like Jansky, have been asked to change their line of research. But, in general, the experience has been that informal pressures together with the hiring policy are sufficient to keep AT&T and Western Electric more than satisfied with the output of research.

[Most recently brought to my attention by this post from a few days ago]

The referenced study on group selection on insects is "Group selection among laboratory populations of Tribolium," from 1976. Studies on Slack claims that "They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up."

This makes it sound like cannibalism was the only population-limiting behavior the beetles evolved. According to the original study, however, the low-population condition (B populations) showed a range of population size-limiting strategies, including but not limited to higher cannibalism rates.

"Some of the B populations enjoy a higher cannibalism rate than the controls while other B populations have a longer mean developmental time or a lower average fecundity relative to the controls. Unidirectional group selection for lower adult population size resulted in a multivarious response among the B populations because there are many ways to achieve low population size."

Scott claims that group selection can't work to restrain boom-bust cycles (e.g. between foxes and rabbits) because "the fox population has no equivalent of the overarching genome; there is no set of rules that govern the behavior of every fox." But the empirical evidence of the insect study he cited shows that we do in fact see changes in developmental time and fecundity. After all, a species has considerable genetic overlap between individuals, even if we're not talking about heavily inbred family members, as we'd be seeing in the beetle study. Wikipedia's article on human genetic diversity cites a Nature article and says "as of 2015, the typical difference between an individual's genome and the reference genome was estimated at 20 million base pairs (or 0.6% of the total of 3.2 billion base pairs)."

An explanation here is that the inbred beetles of the study are becoming progressively more inbred with each generation, meaning that genetically controlled fecundity-limiting changes will tend to be shared and passed down. Individual differences will be progressively erased generation by generation, meaning that as time goes by, group selection may increasingly dominate individual competition as a driver of selection.

All this is to motivate the outer/inner selection model, the idea that the ability to impose a top-down ruleset restraining the selfish short-term interests of individuals naturally results in higher-level entities. In biology, it's multicellular life, while in human culture, it's various forms of social enterprise. Scott is trying to explain not only how such social enterprises form, but why some fail and others thrive.

In part 3, this model is used to justify the tenure system, as well as diversity in grantmaking. And this is where I think Scott's inner/outer selection model, with its origins in biology, doesn't work as well. Tenure has its origins in the early 1600s, but really took off in the mid-19th century. The first university was founded in 1088.

Under Scott's explanatory framework, tenure is an inner constraint that has spread because it enhances the fitness of universities by constraining academics from focusing on shortsighted publishing at the expense of long-term investments in excellent research. This can't be the result of blind evolution selecting for the survival and reproduction of universities, because the individual "organisms" (universities) often predate the "tenure gene" by centuries, and then acquired it hundreds of years into their individual lifespan. Instead, tenure historically is the result of 19th-century labor organizing by teachers. In fact, it looks like tenure was a rebellion of the inner system against the outer.

If we run with Scott's framing, though, things get weird. What if tenure helps universities prosper not because it helps them produce high-quality research, but because it helps them recruit and retain high-prestige academics capable of attracting big grants from external funders? If so, that would be a little like an organism that figures out how to attract the healthiest cells from a neighboring organism and incorporate them into its body. That seems to be a facet of group selection in biology (e.g. mitochondria and gut bacteria), but for the most part, our bodies are vigilant to destroy outsiders. To me, this illustrates the challenge of taking frameworks that are derived from the particularities of eukaryotic biology and trying to apply them to a different sort of entity with an entirely different set of mechanisms for self-definition, survival, reward, and replication. Perhaps group selection is still relevant, but this framing of university tenure as a group selection ruleset feels less like a useful way to explore culture and more like a Mad Lib. We're just taking the abstract components of the inner/outer selection model for cancer biology and finding the handiest intuitive category to map onto the component parts. It creates superficial plausibility, but isn't historically accurate and feels mechanistically questionable.

I think that a more compelling concept of a human "ruleset" is perhaps the pattern of behavior called "professionalism." Across the board, groups of all kinds that maintain a basic level of professional decorum tend to thrive. Those that do not tend to fail. The way that looks across cultures can vary, but there's a recognizable commonality around the world. Something like professionalism has been around for a long time, and a very large number of professional groups have come and gone on a much shorter time scale. This creates opportunity for group selection to function, an opportunity that seems to be lacking in the university/tenure context.

Moving to the last section, Boeing is not a monopoly. Their four largest competitors have about the same number of employees all together as Boeing has alone. But that's a side concern. More importantly, lack of slack is not the obvious explanation for why a misbehaving monopoly would persist. The most obvious solution would be for an activist investor to purchase 51% of the monopoly's shares, then either reform or profit off the company. But they'd face whatever coordination and incentives caused the monopoly to misbehave in the first place. They'd also have to deal with efficient market questions. The real story here, I think, isn't slacklessness, but computational challenges.

An alternative explanation for why monopolies appear to be major innovators is not that their freedom from competition lets them invest for the long term, but rather that they are the only employer in the industry. As a consequence, the company gets the credit for every innovation occurring in its industry during the period in which it's a monopoly.

To make this more clear, imagine that an industrial sector starts with 1 company, which produces 10 inventions per year for 10 years, for a total of 100 inventions. Then, it splits into 10 companies, each of which produces 2 inventions per year for 10 years, for a total of 200 inventions. The smaller companies will individually only have 20% of the inventions that the monopoly had, but the industry as a whole is twice as innovative.
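The arithmetic in the example above, spelled out as a small sketch (the figures are the hypothetical ones from the example; the function name is illustrative):

```python
def total_inventions(companies, inventions_per_company_per_year, years):
    """Inventions credited across an industry over a period (hypothetical figures)."""
    return companies * inventions_per_company_per_year * years


monopoly_era = total_inventions(companies=1, inventions_per_company_per_year=10, years=10)
competitive_era = total_inventions(companies=10, inventions_per_company_per_year=2, years=10)
per_small_company = total_inventions(companies=1, inventions_per_company_per_year=2, years=10)

print(monopoly_era, competitive_era, per_small_company / monopoly_era)  # → 100 200 0.2
```

Judged company by company, the monopoly looks five times as inventive; judged by industry output, the split-up era produced twice as much.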

Trying to evaluate the impact of a monopoly on innovation requires evaluating something like the "rate of innovation per capita" among employees in the sector, but even that's not good enough, because we don't know if a monopoly context tends to expand or constrain the population of employees, and we also care about the absolute number of innovations per year. If a highly monopolistic industrial landscape tended to increase the number of people choosing careers as innovators while decreasing the rate of innovation per capita, it's hard to know how that would cash out in terms of innovations per year.
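The per-capita worry can be written as a product: innovations per year = (number of innovators) × (innovations per innovator per year). A short sketch with made-up numbers shows why the net effect is ambiguous when a monopoly raises the first factor and lowers the second; none of these figures come from data.

```python
def innovations_per_year(innovators, innovations_per_innovator_per_year):
    """Industry-wide innovation rate as headcount times per-capita rate."""
    return innovators * innovations_per_innovator_per_year


baseline = innovations_per_year(1000, 0.10)
# Hypothetical monopoly scenario A: 50% more innovators, per-capita rate down 20%.
scenario_a = innovations_per_year(1500, 0.08)
# Hypothetical monopoly scenario B: 20% more innovators, per-capita rate down 40%.
scenario_b = innovations_per_year(1200, 0.06)

for label, value in [("baseline", baseline), ("A", scenario_a), ("B", scenario_b)]:
    print(f"{label:8s} {value:6.1f} innovations/year")
```

Both hypothetical scenarios have "more innovators, each less productive", yet A comes out ahead of the baseline and B behind it, which is why per-capita rates alone can't settle the question.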

Turning to the section on "strategy games," and specifically the Graham Greene quote, while I don't know historical population levels for Italy vs. Switzerland, in modern times, Italy has 7x the population of Switzerland. From "The Renaissance in Switzerland," "The geographical position of Switzerland made contact with Renaissance manifestations in Italy and Germany easy even if the country was too small and poor for notable buildings or works of art." Switzerland, then, seems like it suffered if anything from too little slack, not too much.

Where Scott references Greece's "slack" from being "hard to take over" "even by Sparta," I suggest the delightful blog A Collection of Unmitigated Pedantry's take on Sparta's underwhelming military prowess. Scott points out that this part is purely speculative, so my main aim is just to spread the word that there's a great blog on military history to be read, if you haven't seen it before.

One of my issues with this article as a whole, beyond the epistemological topiary, is that it veers between instances in which we can clearly see, on a mechanistic level, how dire poverty forces an inventor to take a day job rather than invent cold fusion; a plausible but unsupported claim that Sears' unconventional corporate structure contributed to its implosion; and wild speculation of the form "this thing happened in history, and maybe it was caused by too much/too little/just the right amount of slack too!" Scott sometimes labels his confidence levels explicitly, and other times indicates them with his tone, but I notice that my first read produced a credulous absorption of his speculative claims as factual, and my second read left me with Gell-Mann amnesia. If we're really having a hard time finding a slack-based account for some phenomenon, we can always redefine slack (lack of resources vs. absence of competition), play around with whether the problem was too much or too little slack or the wrong temporal pattern of change in slack, or add a third (and why not a fourth?) layer to the inner/outer model.

As a complicating extension, it's hard to say where the slackness "resides." For example, imagine a student has two tutors, one harsh and exacting (low slack), the other nurturing and patient (high slack). Say the student notices that they learn faster with the low-slack tutor. They hypothesize that this shows that, at least in the tutoring domain, their optimal learning-rate is generated by low-slack tutors. So they hire low-slack tutors for every subject, only to find that their learning rate plummets.

They now suppose that this must be because with their original mix of tutors, adding a low-slack tutor enhanced their learning rate for that individual subject, but also used up their overall emotional capacity to deal with stress. Adding all low-slack tutors overwhelmed their emotional budget.

Optimal slack levels on one level led to conditions of suboptimal slack on a different level. Optimizing slack would mean finding harmonious slack levels throughout the many interwoven systems affecting the entities in question.
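The tutor story can be made concrete with a toy model, a sketch in which every constant is hypothetical: a harsh tutor multiplies learning in its own subject but draws on a shared stress budget, and once total stress exceeds that budget, learning in every subject degrades. Slack is optimized per subject in the mixed case and blows through the shared budget in the all-harsh case.

```python
HARSH_BOOST = 1.5     # hypothetical per-subject speed-up from a harsh tutor
HARSH_STRESS = 0.4    # hypothetical stress cost of a harsh tutor
GENTLE_STRESS = 0.1   # hypothetical stress cost of a gentle tutor
OVERLOAD_PENALTY = 3.0


def learning_rates(tutor_styles, stress_budget=1.0):
    """Per-subject learning rates for a list of tutor styles ('harsh' or 'gentle')."""
    total_stress = sum(HARSH_STRESS if s == "harsh" else GENTLE_STRESS for s in tutor_styles)
    # Stress beyond the shared budget lowers capacity for every subject at once.
    overload = max(0.0, total_stress - stress_budget)
    capacity = 1.0 / (1.0 + OVERLOAD_PENALTY * overload)
    return [(HARSH_BOOST if s == "harsh" else 1.0) * capacity for s in tutor_styles]


mixed = learning_rates(["harsh", "gentle", "gentle", "gentle"])
all_harsh = learning_rates(["harsh"] * 4)
print("mixed:    ", [round(r, 2) for r in mixed], "total", round(sum(mixed), 2))
print("all harsh:", [round(r, 2) for r in all_harsh], "total", round(sum(all_harsh), 2))
```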

But we still might be able to do that by just varying the slack levels in ways that seem sensible, and keeping the new equilibrium if we like the outcome. You don't necessarily need an RCT to figure this out. Maybe the barrier is just having the idea and putting it into action. The effects might be obvious. Maybe this is low-hanging fruit.

The vagueness and complexity of "slack" as a concept makes me worry that the term lends itself to being a hand-wavy explanation for whatever a writer wants to assert, or for political agendas, more than to making testable predictions that enhance our understanding of the world. What it seems to offer is inspiration for making testable predictions. It's a hypothesis-generating tool.

This seems to be one of the things Scott's really good at. He doesn't make too many testable predictions, although he sometimes shares those of others. Instead, he finds patterns in observations and makes you fall in love with that pattern. Then, it's on you to figure out how to turn that speculative pattern into a testable hypothesis. This is a valuable skill, one that many of his readers seem to envy. But it also puts you at risk of just resorting to that pattern he's jammed into your head any time you need to reach for an explanation for something.

Unfortunately, I'm really not sure if we have it in us, as a blogging community, to internally coordinate intellectual progress. We have speculative stuff, like this article. To that, we need not only epistemic spot checks, like I'm trying to do in this review, but attempts at operationalization and normal scientific study, which this article is referencing in places, but which isn't obviously being directly triggered or motivated by this article. If we mostly only have the time and expertise for speculation, exhortations, and the occasional fact check or two, how do we achieve intellectual progress? How will we get the resources to have these ideas checked, operationalized, and put to the test? How do we overcome our short-term status competitions in order to create a body of work that builds on itself over time toward a higher long-term payoff for the community?

It sounds like we need more slack.

An explanation here is that the inbred beetles of the study are becoming progressively more inbred with each generation, meaning that genetically controlled fecundity-limiting changes will tend to be shared and passed down. Individual differences will be progressively erased generation by generation, meaning that as time goes by, group selection may increasingly dominate individual competition as a driver of selection.

I don't think this adds up. Yes, species share many of their genes, but then those can't be the genes that natural selection is working on! And so we have to explain why the less fecund individuals survived more than the more fecund individuals. If that's true, then this is just an adaptive trait going to fixation, as is common (and isn't really a group selection thing).

I’d enjoy talking this out with you if you have the stamina for a few more back-and-forths. I didn’t quite understand the wording of your counter argument, so I’m hoping you could spell it out in a bit more detail?

Looking at the paper, I think I wasn't tracking an important difference.

I still think that genes that have reached fixation among a population aren't selected for, because you don't have enough variance to support natural selection. The important thing that's happening in the paper is that, because they have groups that colonize new groups, traits can reach fixation within a group (by 'accident') and then form the material for selection between groups. The important quote from the paper:

The total variance in adult numbers for a generation can be partitioned on the basis of the parents in the previous generation into two components: a within-populations component of variance and a between-populations component of variance. The within-populations component is evaluated by calculating the variance among D populations descended from the same parent in the immediately preceding generation. The between-populations component is evaluated by calculating the variance among groups of D populations descended from different parents. The process of random extinctions with recolonization (D) was observed to convert a large portion of the total variance into the between-populations component of the variance (Fig. 2b), the component necessary for group selection.

So even though low fecundity is punished within every group (because your groupmates who have more children will make up a larger share of the next generation's ancestors), some groups will, through founder effects, have low fecundity and be inbred enough that there isn't enough fecundity variance among their members for within-group selection to act on (even if fecundity varies across beetles as a whole, since they are not a single breeding population).
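The within/between split in the quoted passage is the same bookkeeping as a one-way analysis of variance. A minimal sketch, assuming each inner list holds adult counts for populations descended from one parent population (the numbers are made up): total variance equals the within-group part plus the between-group part, and when founder effects make groups internally uniform, all of the variance lands in the between-group component that group selection can act on. This is the textbook decomposition, not necessarily the exact estimator used in the paper.

```python
from statistics import fmean


def partition_variance(groups):
    """Return (total, within, between) population variances for grouped data."""
    values = [x for group in groups for x in group]
    n = len(values)
    grand_mean = fmean(values)
    total = sum((x - grand_mean) ** 2 for x in values) / n
    # Spread of each population around its own group's mean.
    within = sum((x - fmean(group)) ** 2 for group in groups for x in group) / n
    # Spread of group means around the grand mean, weighted by group size.
    between = sum(len(group) * (fmean(group) - grand_mean) ** 2 for group in groups) / n
    return total, within, between


mixed = [[40, 60, 50], [45, 55, 50]]      # hypothetical: variation mostly inside groups
founder = [[30, 30, 30], [70, 70, 70]]    # hypothetical: uniform inside, different between
for label, data in [("mixed", mixed), ("founder", founder)]:
    total, within, between = partition_variance(data)
    print(f"{label:8s} total={total:6.1f} within={within:6.1f} between={between:6.1f}")
```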

[EDIT] That is, I still think it's correct that foxes sharing 'the fox genome' can't fix boom-bust cycles for all foxes, but that you can locally avoid catastrophe in an unstable way.

For example, there's a gene for some species that causes fathers to only have sons. This is fascinating because it 1) is reproductively successful in the early stage (you have twice as many chances to be a father in the next generation as someone without the copy of the gene, and all children need to have a father) and it 2) leads to extinction in the later stage (because as you grow to be a larger and larger fraction of the population, the total number of descendants in the next generation shrinks, with there eventually being a last generation of only men). The reason this isn't common everywhere is group selection; any subpopulations where this gene appeared died out, and failed to take other subpopulations down with them because of the difficulty of traveling between subpopulations. But this is 'luck' and 'survivor recolonization', which are pretty different mechanisms than individual selection.
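The boom-then-extinction dynamic can be sketched with a toy deterministic model. Assumptions, all hypothetical: every female mates once, with a father drawn in proportion to male numbers; carrier fathers produce only carrier sons; non-carrier fathers produce half daughters and half non-carrier sons; each female has 2 offspring (replacement level). Under these rules the carrier share among males maps p to 2p/(1+p) each generation, while the number of females is multiplied by (1 - p).

```python
def simulate_son_only_gene(females=1000.0, driver_share=0.01,
                           offspring_per_female=2.0, max_generations=100):
    """Return (generation, females, carrier share among males) for each generation."""
    history = []
    for generation in range(max_generations):
        history.append((generation, females, driver_share))
        if females < 1:  # no breeding females left: this subpopulation is extinct
            break
        from_carriers = females * driver_share * offspring_per_female
        from_others = females * (1 - driver_share) * offspring_per_female
        carrier_males = from_carriers          # carrier fathers: sons only, all carriers
        other_males = from_others / 2          # non-carrier fathers: half sons...
        females = from_others / 2              # ...and half daughters
        driver_share = carrier_males / (carrier_males + other_males)
    return history


for generation, females, share in simulate_son_only_gene():
    print(f"gen {generation:2d}: females={females:7.1f}  carrier share={share:.3f}")
```

With these numbers the carrier share climbs every generation and the subpopulation runs out of females in roughly a dozen generations; in a metapopulation, only the subpopulations the gene reached would go under.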

This is a little like the game-theory distinction between coordination and competition, actually. Coordination is when you can constrain all actors to change in the same way; competition is when each can change while holding the others fixed. "Evolutionary replicator dynamics" is a game-theory algorithm that encompasses the latter.

Even if the beetles all currently share the same genes, any one beetle can have a mutation that competes with his/her peers in future generations. Therefore, reduced variation at the current time doesn't cause the system to be stable, unless there's some way to ensure that any change is passed to all beetles (like having a queen that does all the breeding).

Web moderation is also like this. Karma can become a tool of Moloch, but discussions without voting do not separate the wheat from the chaff.

(With all respect to author, finding the best comments on SSC is a full-time job.)

Well, you need some selection process. But for a karma-less community you can still have selection on members, or social encouragement/discouragement. I guess this also requires that the volume of comments isn't so high that ain't nobody got time for that.

Some scientists tried to create group selection under laboratory conditions. They divided some insects into subpopulations, then killed off any subpopulation whose numbers got too high, and “promoted” any subpopulation that kept its numbers low to better conditions. They hoped the insects would evolve to naturally limit their family size in order to keep their subpopulation alive. Instead, the insects became cannibals: they ate other insects’ children so they could have more of their own without the total population going up. In retrospect, this makes perfect sense; an insect with the behavioral program “have many children, and also kill other insects’ children” will have its genes better represented in the next generation than an insect with the program “have few children”.

Why didn't they try also killing off subpopulations that engaged in cannibalism, and promoting those that didn't? And what would have most likely happened if they had tried that?

I believe you used the term "genetic code" incorrectly in [II.] when you were talking about cancer; the correct word is genome.

For anybody who wants to understand:

The difference between the terms is that genetic code means the part of the DNA that codes for proteins. The genome, on the other hand, means all DNA, and thus also telomeres and other information, such as the various types of RNA that DNA codes for.

Sorry for replying to a comment from so long ago, I just bumped into this.

This clarification is wrong, and a common mistake in science journalism: the genetic code is not the part of DNA that codes for proteins. The genetic code is the mapping between triplets of nucleotides (codons) and amino acids. The genetic code is highly conserved across all living things, though there is some variation (especially in mitochondria, where the selective pressure seems to be quite special).

https://en.wikipedia.org/wiki/Genetic_code

The correct word is genome, I agree.
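To make the correction concrete: the genetic code is a lookup table from codons to amino acids, not a stretch of DNA. The sketch below hard-codes only a handful of entries from the standard code (the full table has 64 codons) plus a few well-known differences in the vertebrate mitochondrial code (TGA read as tryptophan, ATA as methionine, AGA and AGG as stops). The dictionary and function names are illustrative, and translation here simply stops at the first stop codon.

```python
# Partial standard genetic code: DNA codon -> one-letter amino acid, "*" = stop.
STANDARD_SUBSET = {
    "ATG": "M", "TGG": "W", "TTT": "F", "TTC": "F", "GGC": "G",
    "GCC": "A", "AAA": "K", "GAA": "E", "ATA": "I", "AGA": "R",
    "AGG": "R", "TAA": "*", "TAG": "*", "TGA": "*",
}

# A few codons that the vertebrate mitochondrial code reads differently.
VERTEBRATE_MITO_OVERRIDES = {"TGA": "W", "ATA": "M", "AGA": "*", "AGG": "*"}
VERTEBRATE_MITO_SUBSET = {**STANDARD_SUBSET, **VERTEBRATE_MITO_OVERRIDES}


def translate(dna, code):
    """Translate codon by codon using the given code, stopping at the first stop codon."""
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = code[dna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


sequence = "ATGTTTTGAGGC"
print(translate(sequence, STANDARD_SUBSET))         # → MF
print(translate(sequence, VERTEBRATE_MITO_SUBSET))  # → MFWG
```

The same DNA gives different proteins under the two tables, which is exactly why "genetic code" (the table) and "genome" (the DNA) are different things.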

It's not that evolutionary pressure doesn't exist after a catastrophe that kills most members of a species. There will still be different reproduction rates for different members of the species. Not all members will find mates. The key point is that the evolutionary pressure is quite different from the pressure to which the species is usually exposed.

The thing that's more central is that there's not one specific context for which everything is optimized. That gives you diversity.

When I contribute on LessWrong I might get benefits such as status, but the evaluation of my performance is sufficiently unclear that it's hard to focus on those gains and easy to just write what I consider to be important.
