Crossposed from https://stephencasper.com/reframing-ai-safety-as-a-neverending-institutional-challenge/

Stephen Casper

“They are wrong who think that politics is like an ocean voyage or a military campaign, something to be done with some particular end in view, something which leaves off as soon as that end is reached. It is not a public chore, to be got over with. It is a way of life.”

– Plutarch

“Eternal vigilance is the price of liberty.”

– Wendell Phillips

“The unleashed power of the atom has changed everything except our modes of thinking, and we thus drift toward unparalleled catastrophe.”

– Albert Einstein

“Technology is neither good nor bad; nor is it neutral.”

– Melvin Kranzberg

“Don’t ask if artificial intelligence is good or fair, ask how it shifts power.”

– Pratyusha Kalluri

“Deliberation should be the goal of AI Safety, not just the procedure by which it is ensured.”

– Roel Dobbe, Thomas Gilbert, and Yonatan Minz

As AI becomes increasingly transformative, we need to rethink how we approach safety – not as a technical alignment problem, but as an ongoing, unsexy struggle.

“What are your timelines?” This question constantly reverberates around the AI safety community. It reflects the idea that there may be some critical point in time in which humanity will either succeed or fail in securing a safe future. It stems from an idea woven into the culture of the AI safety community: that the coming of artificial general intelligence will likely either be apocalyptic or messianic and that, at a certain point, our fate will no longer be in our hands (e.g., Chapter 6 of Bostrom, 2014). But what exactly are we planning for? Why should one specific moment matter more than others? History teaches us that transformative challenges tend to unfold not as pivotal moments, but as a series of developments that test our adaptability.

An uncertain future: AI has the potential to transform the world. If we build AI that is as good or better than humans at most tasks, at a minimum, it will be highly disruptive; at a maximum, it could seriously threaten the survival of humanity (Hendrycks, et al., 2023). And that’s not to mention the laundry list of non-catastrophic risks that advanced AI poses (Slattery et al., 2024). The core goal of the AI safety community is to make AI’s future go better, but it is very difficult to effectively predict and plan for what is next. Forecasting the future of AI has been fraught, to say the least. Past agendas for AI safety have centered around challenges that bear limited resemblance to the key ones we face today (e.g., Soares and Fallenstein., 2015; Amodei et al., 2016; Everitt et al., 2018; Critch and Krueger, 2020). Meanwhile, many of the community’s investments in scaling labs, alignment algorithms, (e.g., Christiano et al., 2017), and safety-coded capabilities research have panned out to be doubtfully net-positive for safety at best (e.g., Ahmed et al., 2023; Ren et al., 2024).

The Pandora’s Box: If AI becomes highly transformative and humanity survives it, two outcomes are possible: either some coherent coalition will monopolize the technology globally, or it will proliferate. In other words, some small coalition will take control of the world—or one will not. While the prospect of global domination is concerning for obvious reasons, there are also strong reasons to believe it is unlikely. Power is deeply embedded in complex global structures that are resistant to being overthrown. Moreover, possessing the capacity for world domination does not guarantee that it will happen. For example, in the 1940s, nuclear weapons could have enabled a form of global control, yet no single nation managed to dominate the world. Much of the alarm within the AI safety community today resembles what a movement centered around nuclear utopianism or dystopianism might have looked like in the 1930s. Historically, transformative technologies have consistently spread once developed, and thus far, AI has constantly shown signs that it will continue to follow this pattern.

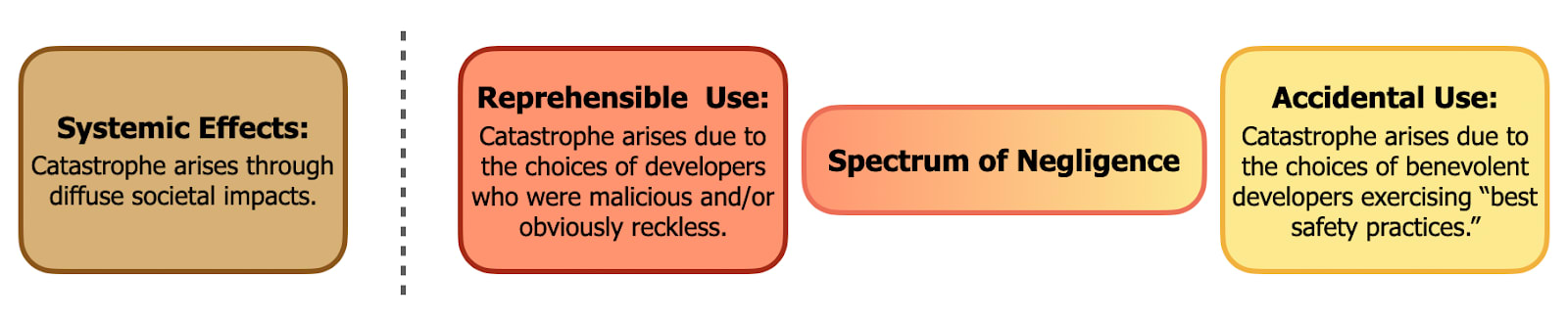

The risks we face: Imagine a future where AI causes significant harm. What might lead to such an outcome? One scenario involves complex systemic effects (Arnscheidt et al., 2024; Kulveit et al., 2025). Another non-mutually-exclusive possibility is for harm to arise from the choices of some identifiable set of actors. In cases involving such perpetrators, the event would fall on a spectrum ranging from a pure accident to a clearly intentional act. We can taxonomize catastrophic AI risks accordingly:

Will technical solutions save us? Inside the AI safety community, it is common for agendas to revolve around solving the technical alignment challenge: getting AI systems’ actions to serve the goals and intentions of their operators (e.g., Amodei et al., 2016; Everitt et al., 2018; Critch and Krueger, 2020, Ji et al., 2023). This focus stems from a long history of worrying that rogue AI systems pursuing unintended goals could spell catastrophe (e.g., Bostrom, 2014). However, the predominance of these concerns seems puzzling in light of our risk profile (Khlaaf, 2023). Alignment can only be sufficient for safety if (1) no catastrophes result from systemic effects and (2) the people in control of the AI are reliably benevolent and responsible. Both are highly uncertain. And if/when transformative AI opens a Pandora’s box, it will become almost inevitable that some frontier developers will be malicious or reckless. On top of this, being better at technical alignment could also exacerbate risks by speeding up timelines and enabling more acutely harmful misuse.

If AI causes a catastrophe, what are the chances that it will be triggered by the choices of people who were exercising what would be considered to be “best safety practices” at the time? The history of human conflict and engineering disasters (Dekker, 2019) suggests that these chances are very small. For example, consider nuclear technology. The technical ‘nuclear alignment’ problem is virtually solved: nuclear tech reliably makes energy when its users want and reliably destroys cities when its users want. To be clear, it is very nice to live in a world in which catastrophic failures of nuclear power plants are very rare. Nonetheless, for the foreseeable future, we will be in perpetual danger from nuclear warfare—not for technical reasons, but institutional ones.

When all you have is a hammer… The AI safety community’s focus on technical alignment approaches can be explained, in part, by its cultural makeup. The community is diverse and discordant. Yet, throughout its existence, it has been overwhelmingly filled with highly technical people trained to solve well-posed technical problems. So it makes sense for technical people to focus on technical alignment challenges to improve safety. However, AI safety is not a model property (Narayanan and Kapoor, 2024), and conflating alignment with safety is a pervasive mistake (Khlaaf, 2023).

Who benefits from technosolutionism? The overemphasis on alignment can also be explained by how useful it is for technocrats. For example, leading AI companies like OpenAI, Anthropic, Google DeepMind, etc., acknowledge the potential for catastrophic AI risks but predominantly frame these challenges in terms of technical alignment. And isn’t it convenient that the alignment objectives that they say will be needed to prevent AI catastrophe would potentially give them trillions of dollars and enough power to rival the very democratic institutions meant to address misuse and systemic risks? Meanwhile, the AI research community is full of people who have much more wealth and privilege than the vast majority of others in the world. Technocrats also tend to benefit from new technology instead of being marginalized by it. In this light, it is unsurprising to see companies that gain power from AI push the notion that it’s the AI and not them that we should fear – that if they are just able to build and align superintelligence (before China, of course) we might be safe. Does this remind you of anything?

The long road ahead: If we are serious about AI safety, we need to be serious about preparing for the Pandora’s box that will be opened once transformative AI proliferates. At that point, developing solutions for technical alignment would sometimes be useful. However, they would also fail to protect us from most disaster scenarios, exacerbate misuse risks, concentrate power, and accelerate timelines. Unless we believe in an AI messiah, we can expect the fight for AI safety to be a neverending, unsexy struggle. This underscores a need to build institutions that place effective checks and balances on AI. Instead of figuring out what kind of AI systems to develop, the more pressing question is about how to shape the AI ecosystem in a way that better enables the ongoing challenges of identifying, studying, and deliberating about risks. In light of this, the core priorities of the AI safety community should be to build governmental capacity (e.g. Carpenter & Ezell, 2024; Novelli et al., 2024), increase transparency & accountability (e.g., Uuk et al., 2024; Casper et al., 2025), and improve disaster preparedness (e.g., Wasil et al., 2024, Bernardi et al., 2024). These will be the defining challenges that long-term AI safety will depend on.

I disagree that people working on the technical alignment problem generally believe that solving that technical problem is sufficient to get to Safe & Beneficial AGI. I for one am primarily working on technical alignment but bring up non-technical challenges to Safe & Beneficial AGI frequently and publicly, and here’s Nate Soares doing the same thing, and practically every AGI technical alignment researcher I can think of talks about governance and competitive races-to-the-bottom and so on all the time these days, …. Like, who specifically do you imagine that you’re arguing against here? Can you give an example? Dario Amodei maybe? (I am happy to throw Dario Amodei under the bus and no-true-Scotsman him out of the “AI safety community”.)

I also disagree with the claim (not sure whether you endorse it, see next paragraph) that solving the technical alignment problem is not necessary to get to Safe & Beneficial AGI. If we don’t solve the technical alignment problem, then we’ll eventually wind up with a recipe for summoning more and more powerful demons with callous lack of interest in whether humans live or die. And more and more people will get access to that demon-summoning recipe over time, and running that recipe will be highly profitable (just as using unwilling slave labor is very profitable until there’s a slave revolt). That’s clearly bad, right? Did you mean to imply that there’s a good future that looks like that? (Well, I guess “don’t ever build AGI” is an option in principle, though I’m skeptical in practice because forever is a very long time.)

Alternatively, if you agree with me that solving the technical alignment problem is necessary to get to Safe & Beneficial AGI, and that other things are also necessary to get to Safe & Beneficial AGI, then I think your OP is not clearly conveying that position. The tone is wrong. If you believed that, then you should be cheering on the people working on technical alignment, while also encouraging more people to work on non-technical challenges to Safe & Beneficial AGI. By contrast, this post strongly has a tone that we should be working on non-technical challenges instead of the technical alignment problem, as if they were zero-sum, when they’re obviously (IMO) not. (See related discussion of zero-sum-ness here.)

I’m glad you think that the post has a small audience and may not be needed. I suppose that’s a good sign.

—

In the post I said it’s good that nukes don’t blow up on accident and similarly, it’s good that BSL-4 protocols and tech exist. I’m not saying that alignment solutions shouldn’t exist. I am speaking to a specific audience (e.g. the frontier companies and allies) that their focus on alignment isn’t commensurate with its usefulness. Also don’t forget the dual nature of alignment progress. I also mentioned in the post that frontier alignment progress hastens timelines and makes misuse risk more acute.