My amateur understanding of neural networks is that they are almost always trained with stochastic gradient descent. The quality of a neural network comes from its size, shape, and training data, but not from the training algorithm, which is always some form of simple gradient descent.

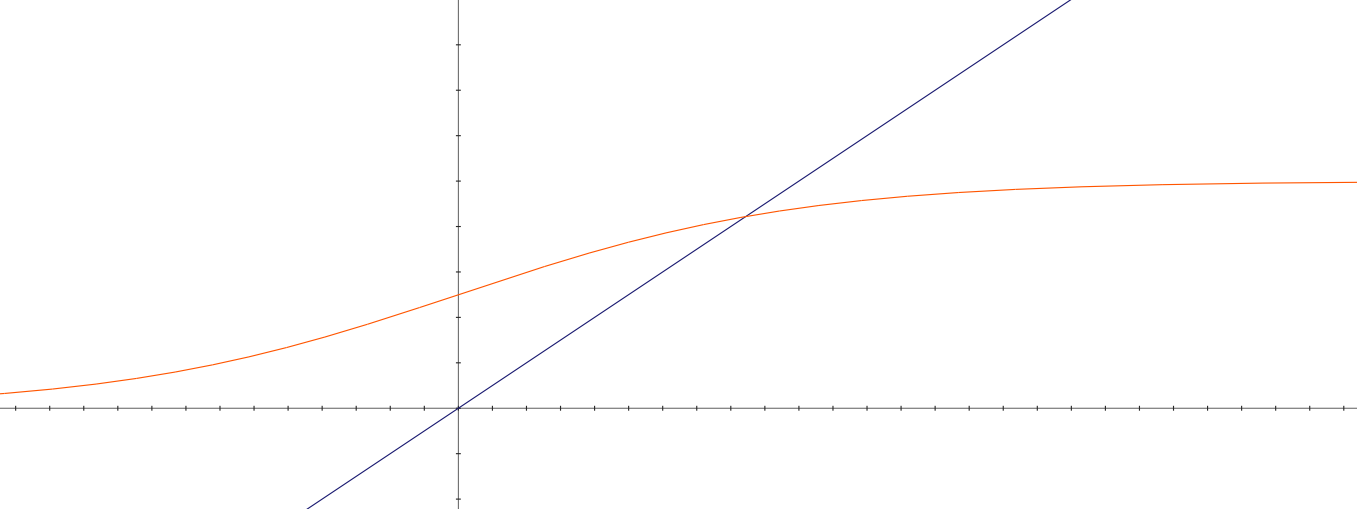

This is a bit unintuitive to me because gradient descent is only guaranteed to find the global minimum of a function if that function is convex, and I wouldn't expect typical ML problems (e.g., "find the dog in this picture" or "continue this writing prompt") to have convex cost functions. So why does gradient descent always work?

One explanation I can think of: it doesn't work if your goal is to find the optimal answer, but we hardly ever want the optimal answer; we just want a good-enough answer. For example, if a NN is trained to play Go, it doesn't have to find the best move; it just has to find a winning move. Not sure if this explanation makes sense though.
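To make the convexity worry concrete, here is a minimal sketch of plain gradient descent on a one-dimensional non-convex function. The function `f`, the learning rate, and the starting points are all made up for illustration and are not taken from any real training setup. The sketch only shows that the result depends on where you start, and that a run can settle in a local minimum that is worse than the global one.

```python
def f(x):
    # Hypothetical non-convex "loss" with two separate valleys.
    return x**4 - 3 * x**2 + x

def grad_f(x):
    # Analytic derivative of f.
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0, lr=0.01, steps=1000):
    # Plain (non-stochastic) gradient descent from starting point x0.
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x

x_right = gradient_descent(2.0)   # settles in the right-hand valley
x_left = gradient_descent(-2.0)   # settles in the left-hand valley

print("start at  2.0 -> x = %.4f, f(x) = %.4f" % (x_right, f(x_right)))
print("start at -2.0 -> x = %.4f, f(x) = %.4f" % (x_left, f(x_left)))
```

Both runs stop at a point where the gradient is essentially zero, but only the run started at -2.0 ends in the deeper valley. Whether the shallower valley counts as "good enough" depends on the problem, which is the question being asked here.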

Source: https://moultano.wordpress.com/2020/10/18/why-deep-learning-works-even-though-it-shouldnt/

There can be N settings that perfectly tie for the best score.

Also, they might sit in neighborhoods that are themselves very, very high scoring, such that incremental progress into any of those neighborhoods puts an optimal setting within local reach of the optimizer.
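One well-known way such ties can arise (an assumption about what is meant here, not something the comment spells out) is permutation symmetry: reordering the hidden units of a network changes the parameter vector but not the function it computes. The tiny network and its weights below are hypothetical, chosen only to illustrate the idea.

```python
import itertools
import math

def forward(x, w_in, b, w_out):
    # One hidden layer of tanh units, scalar input and scalar output.
    hidden = [math.tanh(w * x + bi) for w, bi in zip(w_in, b)]
    return sum(v * h for v, h in zip(w_out, hidden))

# Hypothetical weights for a network with 3 hidden units.
w_in = [0.5, -1.2, 2.0]
b = [0.1, 0.0, -0.3]
w_out = [1.5, -0.7, 0.25]

outputs = []
for perm in itertools.permutations(range(3)):
    # Reorder the hidden units: a different point in parameter space.
    p_in = [w_in[i] for i in perm]
    p_b = [b[i] for i in perm]
    p_out = [w_out[i] for i in perm]
    outputs.append(forward(0.8, p_in, p_b, p_out))

# All 3! = 6 orderings compute the same function (up to float rounding).
spread = max(outputs) - min(outputs)
print(len(outputs), "parameter settings, output spread:", spread)
```

With 3 hidden units there are 6 equivalent settings; the count grows factorially with the width of the layer, so any one best-scoring setting comes with many exact copies elsewhere in parameter space.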

...

One thing that helps me visualize it is to remember circuit diagrams. There are many "computing systems" rich enough and generic enough that several steps of "an algorithm" can be embedded inside of that larger system with plenty of room to spare. Once the model is "big enough" to contain the right a...