Was a philosophy PhD student, left to work at AI Impacts, then Center on Long-Term Risk, then OpenAI. Quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI. Now executive director of the AI Futures Project. I subscribe to Crocker's Rules and am especially interested to hear unsolicited constructive criticism. http://sl4.org/crocker.html

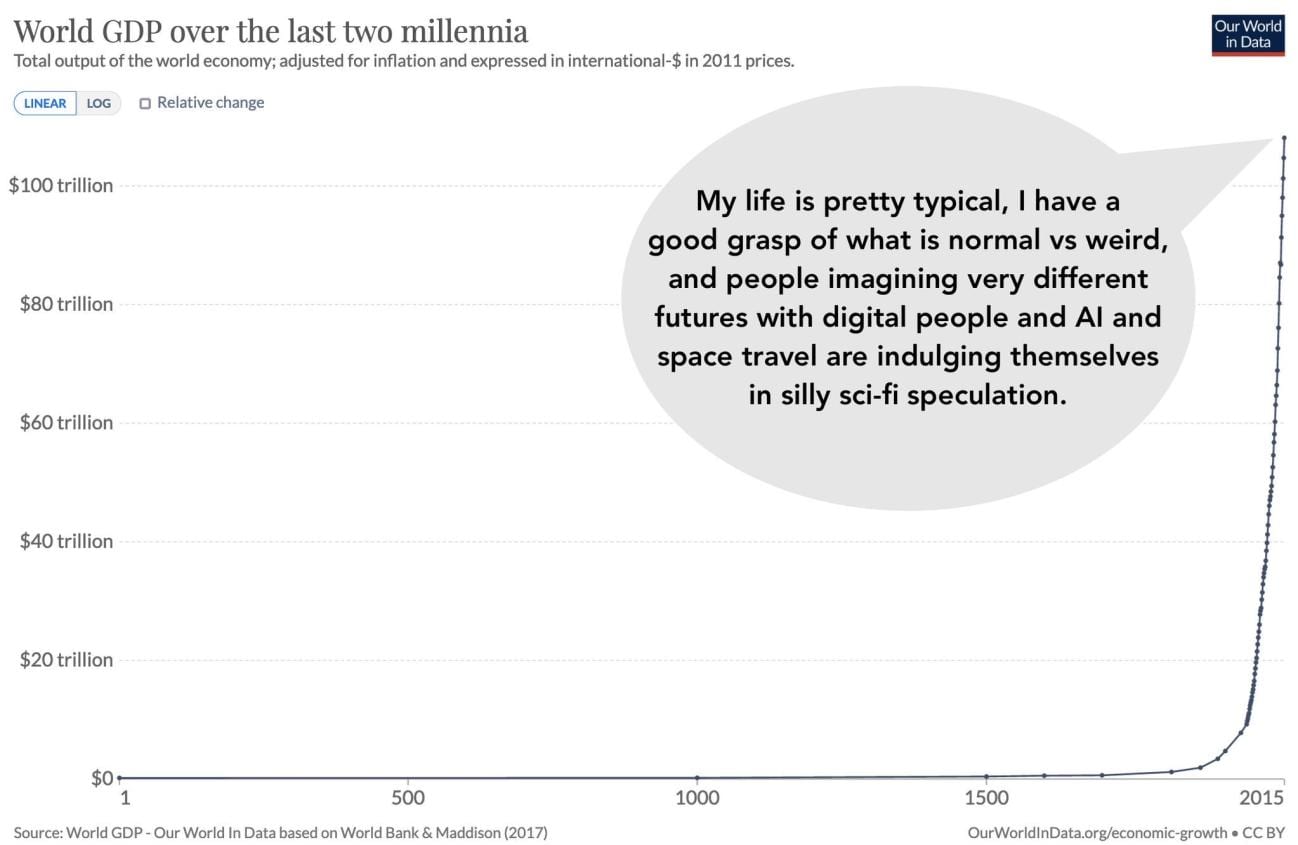

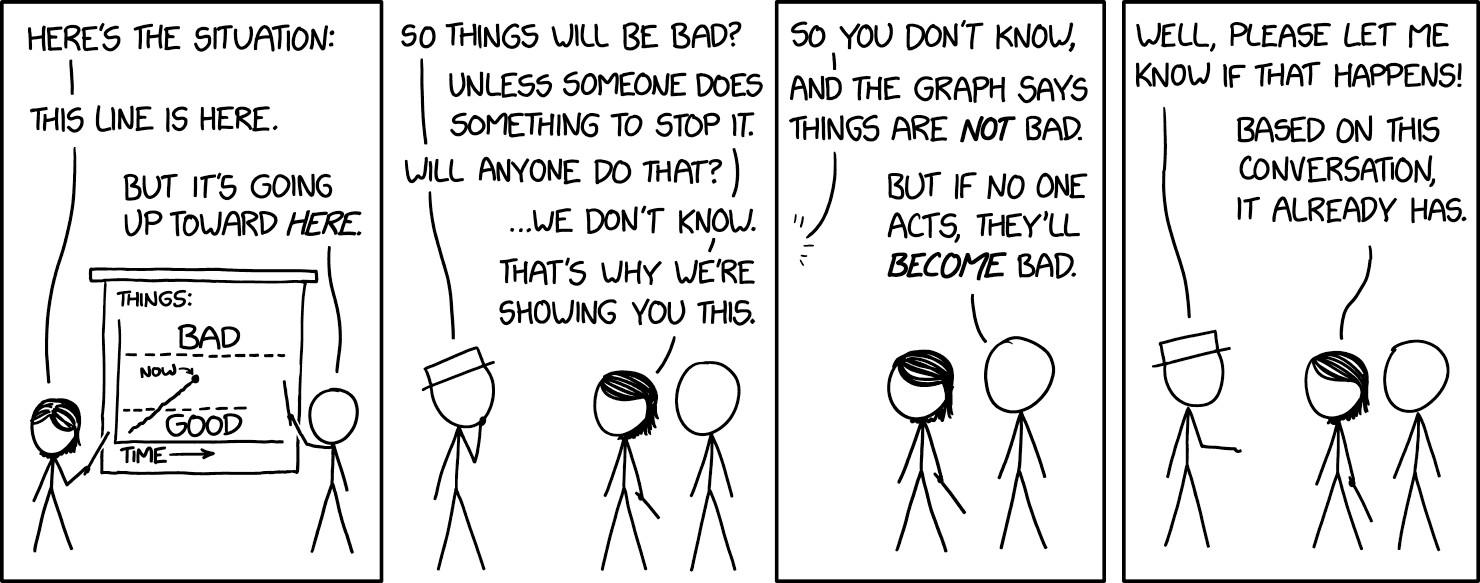

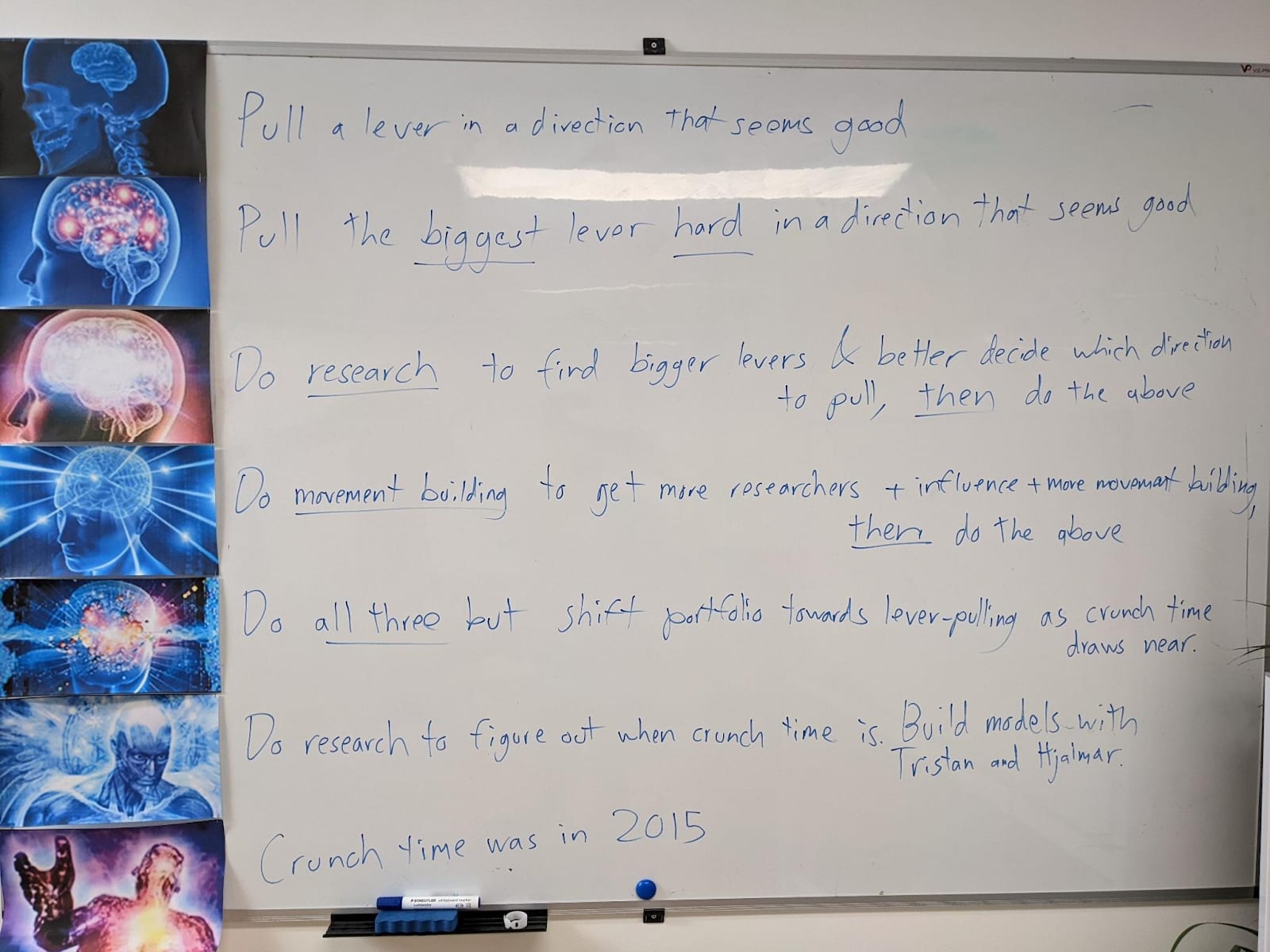

Some of my favorite memes:

(by Rob Wiblin)

(xkcd)

My EA Journey, depicted on the whiteboard at CLR:

(h/t Scott Alexander)


The argument is that there might not be a single Consensus-1-controlled military even in the US. It seems unlikely to me that the combined US AI police forces will be able to compete with the US AI national military, which is one reason I'm skeptical of this.

I agree the US could choose to do the industrial explosion & arms buildup in a way that's robust to all of OpenBrain's AIs turning out to be misaligned. However, they won't, because (a) that would have substantial costs/slowdown effects in the race against China, (b) they already decided that OpenBrain's AIs were aligned in late 2027 and have only had more evidence to confirm that bias since then, and (c) OpenBrain's AIs are superhuman at politics, persuasion, etc. (and everything else) and will effectively steer/lobby/etc. things in the right direction from their perspective.

I think this would be more clear if Vitalik or someone else undertook the task of making an alternative scenario.


Thanks for this critique! Besides the in-line comments above, I'd like to challenge you to sketch your own alternative scenario to AI 2027, depicting your vision. For example:

- 1 page on d/acc technologies and other prep that people can start working on today, that quietly build up momentum during the first part of AI 2027: Vitalik Version.

- 1 page on 'the branching point' where AI 2027: Vitalik Version starts to meaningfully diverge from the original AI 2027.

- 1-3 pages on what happens after that, depicting how e.g. OpenBrain and DeepCent's misaligned AIs are unable to take over the world, despite having successfully convinced corporate and political leadership to trust them. (Or perhaps why they wouldn't be able to convince them to trust them? You get to pick the branching point.) This section should end in 2035 or so, just like AI 2027.

I predict that if you try to write this, you'll run into a bunch of problems and realize that your strategy is going to be difficult to pull off successfully. (E.g. you'll start writing about how the analyst tools resist Consensus-1's persuasion, but then you'll be trying to write the part about how those analyst tools get built and deployed, and by whom, and no one has the technical capability to build them until 2028, but by that point OpenBrain+Agent-5+ might already be working to undermine whoever is building them...) I hope I'm wrong.

Defense technologies should be more of the "armor the sheep" flavor, less of the "hunt down all the wolves" flavor. Discussions about the vulnerable world hypothesis often assume that the only solution is a hegemon maintaining universal surveillance to prevent any potential threats from emerging. But in a non-hegemonic world, this is not a workable approach (see also: security dilemma), and indeed top-down mechanisms of defense could easily be subverted by a powerful AI and turned into its offense. Hence, a larger share of the defense instead needs to happen by doing the hard work to make the world less vulnerable.

This might be the only item on this list that I disagree with.

I agree that given a choice between armoring the sheep and hunting down the wolves, we should prefer armoring the sheep. But sometimes we simply don't have a choice. E.g. our solution to murder is to hunt down murderers, not to give everyone body armor and so forth so that they can't be killed, because that simply wouldn't be feasible. (It would indeed be a better world if we didn't need police because violent crimes simply weren't possible because everything was so well defended.)

I think we should take these things on a case-by-case basis.

And furthermore, I think that superintelligence is an example of the sort of thing where the best strategy is to ensure that the most powerful AIs, at any given time, are aligned/virtuous/etc. It's maybe OK if less-powerful ones are misaligned, but it's very much not OK if the world's most powerful AIs are misaligned.

to have access to good info defense tech. This is relatively more achievable within a short timeframe,

I was with you until this point. I would say "So how are we going to get slightly less wildly superintelligent analyzers to help out decision-makers, so that we don't need to blindly trust that the even-more-wildly superintelligent super-persuaders in the leading US AI project are trustworthy? Answer: We aren't. There simply isn't another company, rival to OpenBrain, that has AIs that are only slightly less wildly superintelligent and that are also aligned/trustworthy. DeepCent maybe has AIs that could compete, but they are misaligned too, because DeepCent has been racing as hard as OpenBrain did. And besides, US leaders wouldn't trust a DeepCent-designed analyzer, nor should they."

Individuals need to be equipped with locally-running AI that is explicitly loyal to them

In the Race ending of AI 2027, humanity never figures out how to make AIs loyal to anyone. OpenBrain doesn't slow down; they think they've solved the alignment problem, but they haven't. Maybe some academics or misc minor companies in 2028 do additional research and discover e.g. how to make an aligned human-level AGI eventually, but by that point it's too little, too late (and also, their efforts may well be sabotaged by OpenBrain/Agent-5+, e.g. with regulation and distractions).

I think it's pretty easy for it to fail to produce this stuff without tipping its hand. Consider how, if OpenAI leadership really cared a lot about preventing concentration of power, they should be investing some % of their resources in pioneering good corporate governance structures for themselves & lobbying for those structures to be mandatory for all companies. The fact that they aren't doing that is super suspicious....

Except no it isn't, because there isn't a strong consensus that they should obviously be doing that. So they can get away with basically doing nothing, or doing something vaguely related to democratic AI, or whatnot.

Similarly, OpenBrain+Consensus-1 could have all sorts of nifty d/acc projects that they are ostentatiously funding and doing, except for the ones that would actually work to stop their takeover plans. And there simply won't be a consensus that the ones they aren't doing, or aren't doing well, are strong evidence that they are evil.