This was a pretty enjoyable read. Meandering, but in a nice relaxing way, without being overly dogmatic. More like musing.

Thanks. I had hoped it would be informative and entertaining. Think of it as a "let's play", but for nerds.

Excellent post! (I do not entirely agree with your stance on posthumanism, but that is a secondary matter…)

Re: the externalization of the inner voice: I am reminded of the parable of the Whispering Earring.

Re: manufactured meaning as a pervasive mode of human existence: Karl Schroeder’s excellent novel Lady of Mazes has a lot to say on this topic.

The Whispering Earring is interesting. It appears that the earring provides a kind of slow mind-uploading, but less invasive than most other approaches. The author of the story seems to consider it bad for some reason, perhaps because it triggers the "Liberty / Oppression" and "Purity / Sanctity" (of the inside-skull self) moral alarms.

Unfortunately I dislike reading novels. Would you kindly summarize the relevant parts?

> the earring provides a kind of slow mind-uploading

This is only true if whatever (hyper)computation the earring is using to make recommendations contains a model of the wearer. Such a model could be interpreted as a true upload, in which case it would be true that the wearer's mind is not actually destroyed.

However, if the earring's predictions are made by some other means (which I don't think is impossible even in real life--predictions are often made without consulting a detailed, one-to-one model of the thing being predicted), then there is no upload, and the user has simply been taken over like a mindless puppet.

This wades deep into the problem of what makes something feel conscious. I believe (and Scott Aaronson has also written about this) that to understand a consciousness in such detail, the understanding itself must contain a consciousness-generating process. That is, to fully understand a mind, it is necessary to recreate the mind.

If the Earring merely makes the most satisfactory decisions according to some easy-to-compute universal standard (such as acting morally according to some computationally efficient system), then the takeover makes sense to me, but otherwise it seems like a refusal to admit multiple realizations of a mind.

Part of the story is that !> it tells you you are better off taking it off. Given that it's always as good/better than you at making decisions, leaving it on is a bad idea. <!

> it seems like a refusal to admit multiple realizations of a mind.

I think it admits the possibility that such a thing may be to your detriment. (Perhaps it only contains one model (a human mind?), and uses that knowledge to destroy, rather than upload, human minds.)

EDIT: How does one add spoilers here?

After reading the story, I don't believe that it is a bad idea to leave the earring on. I just think the author introduced an inconsistency into the story.

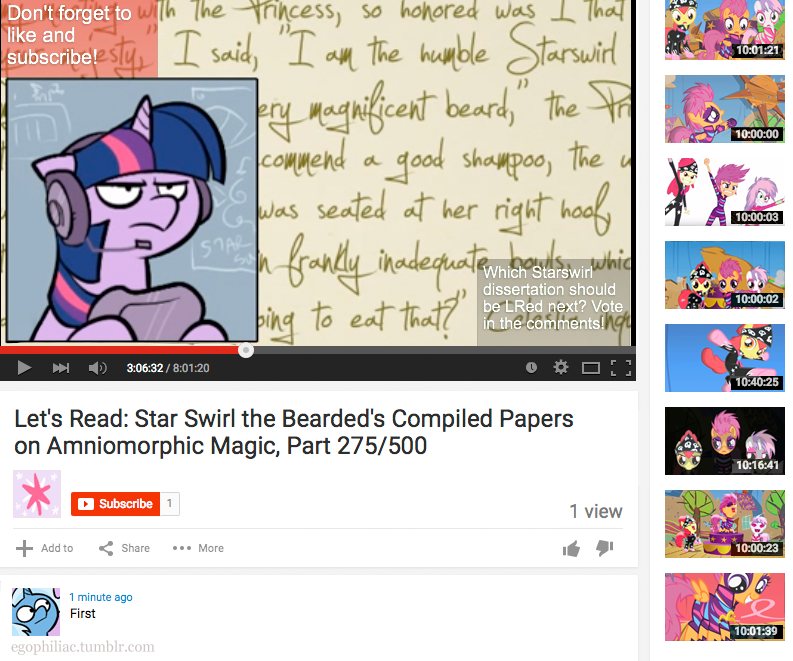

Follow me as I read through an essay on AI Theology and give my comments. Good Shepherds (O’Gieblyn, 2019)

Obligatory reference to AlphaGo. Could have mentioned AlphaZero, but it was not nearly as popular as AlphaGo.

"Immediate" here means "now". Reasonable. The AI apocalypse is not scheduled until perhaps 100 years from now.

"Dataism" does not necessarily undermine liberalism. It is possible to augment human thought to keep up with the data deluge, and preserve (trans)human liberalism. Although this is not guaranteed.

The problem of Theodicy is amusing. And the trials of God can be total dark humor. My favorite is

Back to the essay.

I do think that if a God made this world, then yes, They are clearly entirely alien and probably more interested in quantum mechanics than justice. Also, one should note that the Reformation probably neither helped nor suppressed science. See History: Science and the Reformation (David Wootton, 2017).

No need to invoke the more speculative parts. Just the verified Standard Model is strange enough. Or the endless technology stacks in electronics...

Two problems.

From what I've observed, "bias" and "prior" are distinguished entirely by moral judgments. A "bias" is an immoral base-rate belief. A "prior" is a moral one. As such, whether a base-rate belief is thought of as a "bias" or a "prior" can easily be manipulated by framing it in moral language.

According to research by Haidt, moral feelings come in six kinds:

For the distinction between "bias" and "prior", the most relevant kinds are the first three. For example, the persistent denial of the effectiveness of IQ tests is motivated reasoning, based on moral rejection of how they could be used to justify oppression of low-IQ people, unfairly allow high-IQ children to get into elite schools, and cause harm of many kinds. By this moral tainting, any prior based on IQ test results becomes an immoral prior, thus a "bias".

For more on the moralization of base rate beliefs, see for example The base rate principle and the fairness principle in social judgment (J Cao, MR Banaji, 2016) and The Psychology of the Unthinkable (Tetlock et al, 2000).

Or just contemplate how strange it is that sexual orientation is not discrimination, but friendship orientation might be. I can only be friends with females, and a male acquaintance (who really wants to be my friend) once wondered if that's discrimination.

A toy example is how WolframAlpha manages to show you how to solve an integral "step by step". What it actually does is internally use a general algorithm that is too hard for humans to follow, then separately use an expert system that looks at the problem and the result and tries to make up a chain of integration tricks that a human could plausibly have thought up.
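To make the two-stage pattern concrete, here is a rough Python sketch. It is not how WolframAlpha is implemented; the function names `opaque_solve` and `explain` are hypothetical, multiplication of non-negative integers stands in for integration, and the only point is that the "steps" are built after the fact from the problem and the known answer.

```python
def opaque_solve(a: int, b: int) -> int:
    """Stand-in for the 'real' algorithm: returns an answer, offers no steps."""
    return a * b


def explain(a: int, b: int, answer: int) -> list[str]:
    """Make up a schoolbook-style derivation after the fact.

    The steps are reconstructed from the problem and the known answer.
    They are not a trace of what opaque_solve actually did.
    Assumes a and b are non-negative integers.
    """
    steps = []
    total = 0
    # Walk the digits of b from least to most significant.
    for place, digit_char in enumerate(reversed(str(b))):
        partial = a * int(digit_char) * 10 ** place
        total += partial
        steps.append(f"{a} x {digit_char} x 10^{place} = {partial}")
    steps.append(f"sum of partial products = {total}")
    # Only present the story if it lands on the answer we already have.
    if total != answer:
        raise ValueError("explanation does not match the computed answer")
    return steps


answer = opaque_solve(123, 45)
story = explain(123, 45, answer)
for line in story:
    print(line)
```

The explainer never looks inside the solver. It only needs a story that a human would accept and that ends at the right number, which is the same position the inner voice is in when it explains a decision.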

Humans are also prone to such guessing. When they introspect their decision process, they might say that they simply knew how they arrived at the decision, but in reality they are trying to infer it using folk theories of psychology. This is the lesson from (Nisbett, 1977) or The unbearable automaticity of being (Bargh & Chartrand, 1999).

The inner voice, as noted above, is a social voice. It exists to explain the actions of a human to other humans. It does not perceive accurately. An asocial species probably has no inner voice, since there would be no benefit in evolving one.

If this Dataism prediction comes to pass, then the inner voice would simply come from the outside. Like, I would think, "What do I like to do today?" [datastream comes from some hidden decision module located somewhere overseas] "Oh yes, write an essay!"

Instead of experiencing a kind of "me listening to the clever robot", it would be like "me listening to me", except the "me" would be weird and spill outside the skull.

The effect would be the same, but the first raises the moral alarm: it has the potential to become immoral by the "Liberty / Oppression" rule.

Kaczynski writes with the precision of a mathematician (he did complex analysis back in school), and his manifesto sets out his primitive humanist philosophy clearly.

This vision seems unlikely to me, simply because it is too benign. People might adapt and survive, but I doubt they would still be human. I believe the future is vomitingly strange.

There is a third way. Science can reveal what humans feel as meaningful, and using that, meaning can be mass-produced at an affordable price. Positive psychology, for example, has shown that there are three cores of the feeling of meaning (F Martela, MF Steger, 2016):

It has also, incidentally, found that people feel like their life is pretty meaningful (SJ Heintzelman, LA King, 2014).

The problem of "Yes, it is the feeling of meaning, but is it really meaning?" is not of practical significance. Presumably humans, by their habit of self-denial (they hate to become predictable, even to themselves), would rebel against guaranteed meaning if they recognized it. That appears unlikely (most people welcome guaranteed health and shelter as a human right), but if it does happen, the meaning manufacturing industry can simply become invisible and employ artists who subscribe to existentialism (meaning can only be constructed).

Typical of these essays to insert a meaningful conclusion at the end. It does not occur to the author that they could also accept the algorithms as irreducible mysteries.

I prefer posthumanism. I do not have much sympathy with humanists' rigid attempts to circumscribe what is human, anyway. If I somehow end up becoming posthuman, okay.