Before we get to the receipts, let's talk epistemics.

This community prides itself on "rationalism." Central to that framework is the commitment to checking our predictions against how reality actually played out.

"Bayesian updating," as we so often remind each other.

If we're serious about this project, we should expect our community to maintain rigorous epistemic standards—not just individually updating our beliefs to align with reality, but collectively fostering a culture where those making confident pronouncements about empirically verifiable outcomes are held accountable when those pronouncements fail.

With that in mind, I've compiled a selection of predictions about China and AI from prominent community voices in the appendix. The pattern is clear: a systematic underestimation of China's technical capabilities, of its prioritization of AI development, and of its ability to advance despite (or perhaps because of) government involvement.

The interesting point isn't just that these predictions were wrong. It's that they were confidently wrong in a specific direction and, crucially, that this wrongness has gone largely unacknowledged.

How many of you incorporated these assumptions into your fundamental worldview? How many based your advocacy for AI "pauses" or "slowdowns" on the belief that Western labs were the only serious players? How many discounted the possibility that misalignment risk might manifest first through a different technological trajectory than the one pursued by OpenAI or Anthropic?

If you're genuinely concerned about misalignment, China's rapid advancement represents exactly the scenario many claimed to fear: potentially less-aligned AI development accelerating outside the influence of Western governance structures. This seems like the most probable vector for the "unaligned AGI" scenarios many have written extensive warnings about.

And yet, where is the community updating? Where are the post-mortems on these failed predictions? Where is the reconsideration of alignment strategies in light of demonstrated reality?

Collective epistemics require more than just nodding along to the concept of updating. They require actually doing the work when our predictions fail.

What do YOU think?

Appendix:

Bad Predictions on China and AI

"No...There is no appreciable risk from non-Western countries whatsoever" - @Connor Leahy

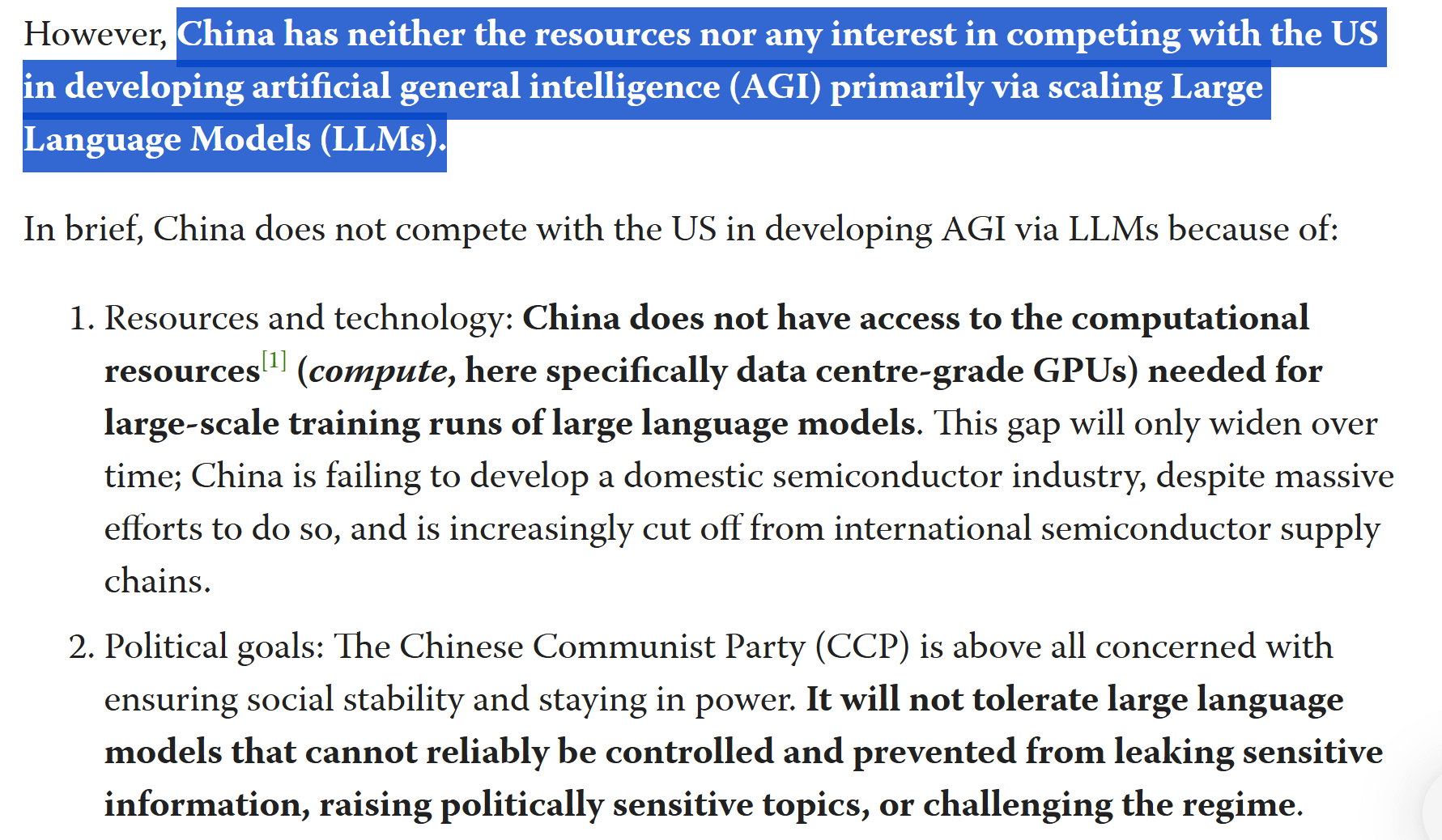

"China has neither the resources nor any interest in competing with the US on developing artificial general intelligence" - @Eva_B

dear ol @Eliezer Yudkowsky

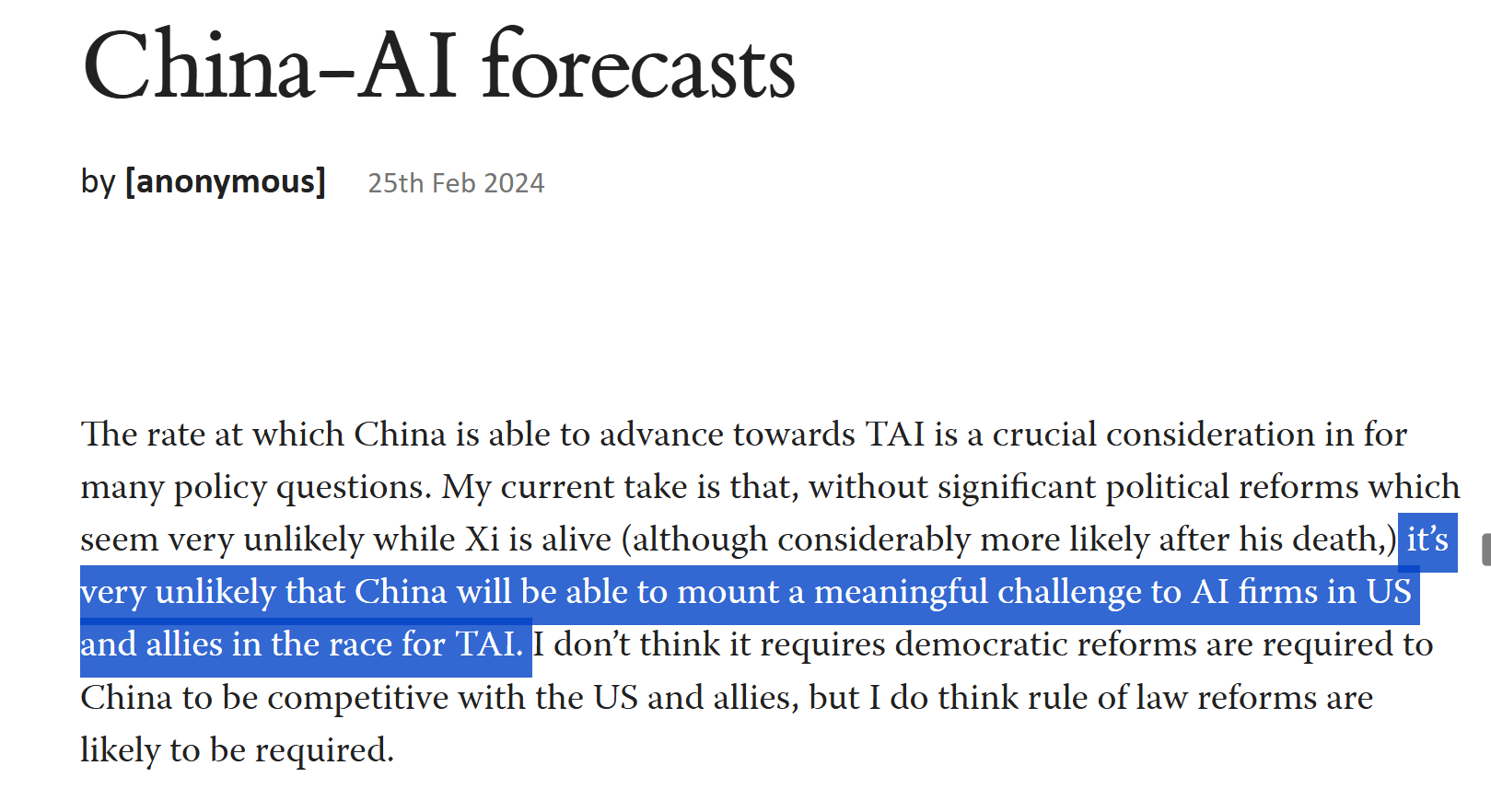

Anonymous (I guess we know why)

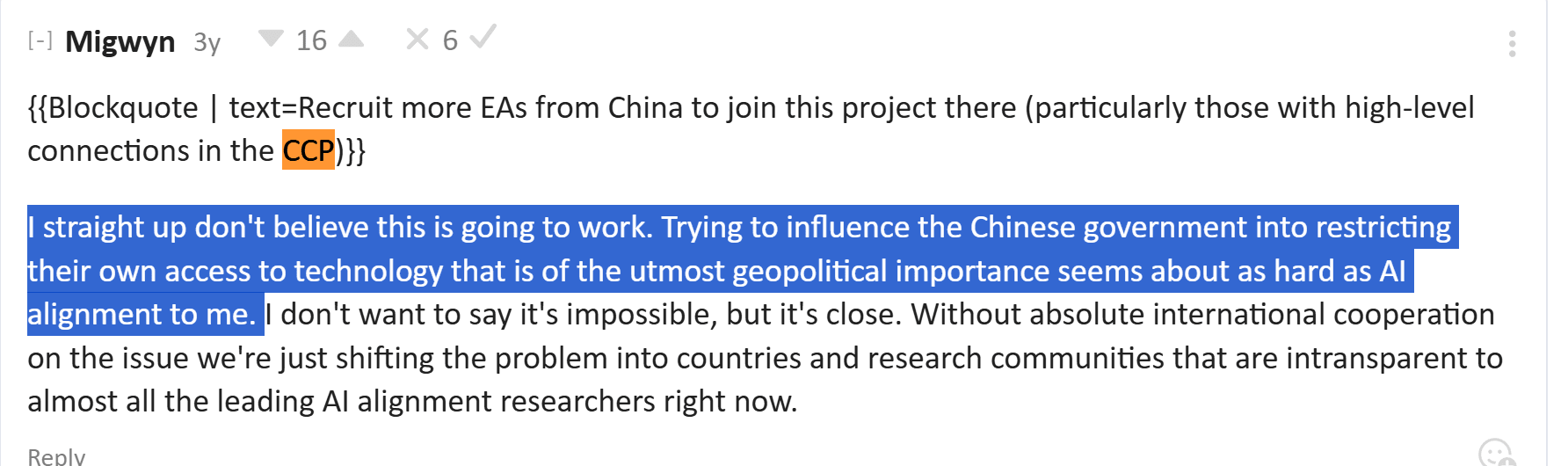

https://www.lesswrong.com/posts/ysuXxa5uarpGzrTfH/china-ai-forecasts

https://www.lesswrong.com/posts/KPBPc7RayDPxqxdqY/china-hawks-are-manufacturing-an-ai-arms-race

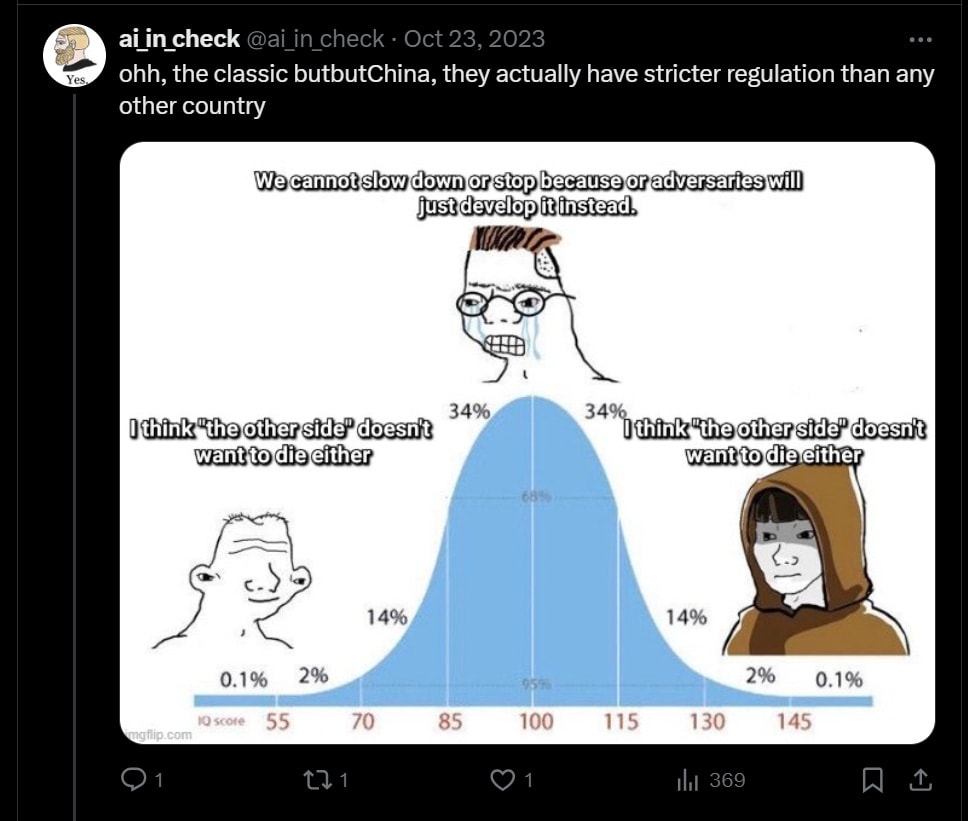

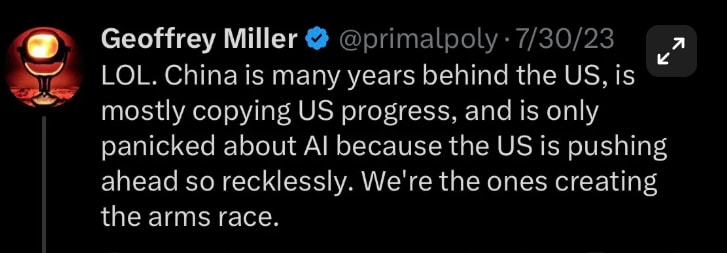

Various folks on Twitter...

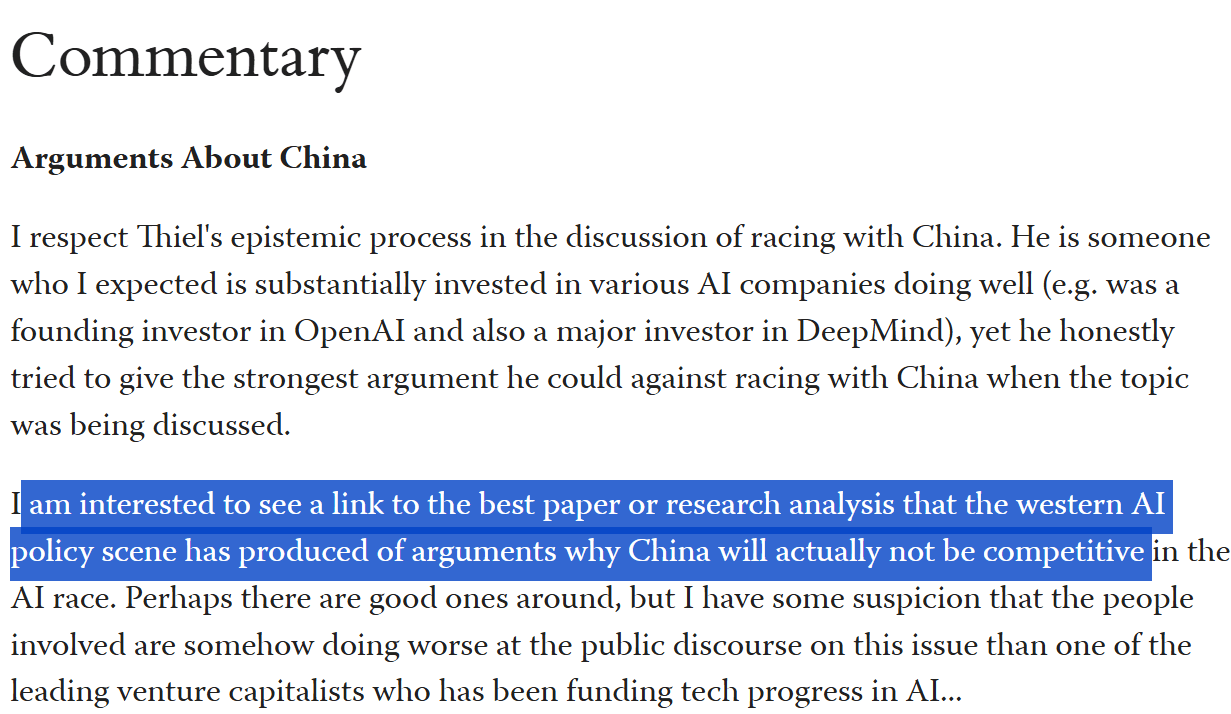

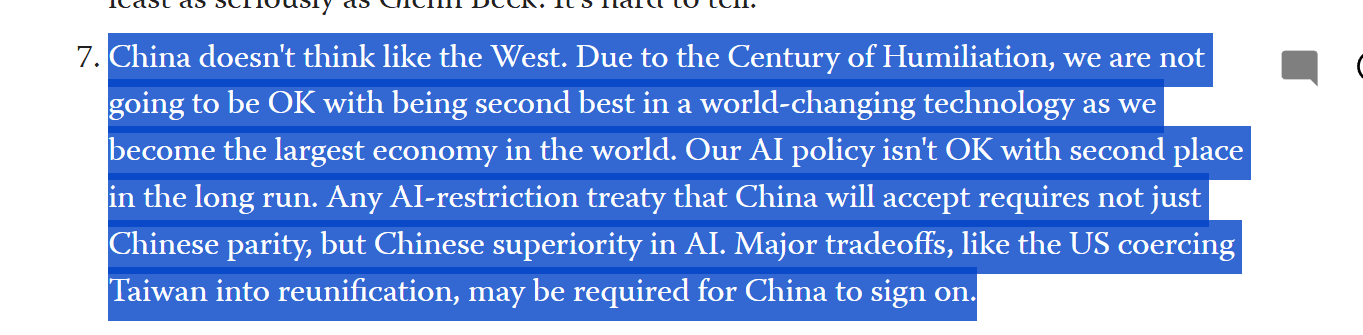

Good Predictions / Open-mindedness

https://www.lesswrong.com/posts/xbpig7TcsEktyykNF/thiel-on-ai-and-racing-with-china

I think,

But

I gave you a strong upvote because I strongly agree with the direction of your post and I think it's under-discussed.

But I wish you had made it clearer that only the first two points are observed realities that people should adjust their beliefs to. The third point is your own belief, which may be wrong.

PS: Please don't say "This is literally cope" to people. That sounds like an X/Twitter comment. People on LessWrong avoid talking like that.

Please, before you criticize anyone, ask an AI to help translate your criticism into LessWrong-flavoured corporate-speak. /s

:) Thank you for saying you'll moderate your tone. It's rare that I criticize someone and they reply with "ok" and actually change what they do.

My first post on LessWrong was A better “Statement on AI Risk?”. I felt it was a very good argument for the government to fund AI alignment, and I tried really hard to convince people to turn it into an open letter.

Some people told me the problem with my idea is that asking for more AI alignment funding is the wrong strategy. The right strategy is to slow down and pause AI.

I tried to explain that: when politic...