This reminds me of my intuitive rejection of the Chinese Room thought experiment. The intuition pump seems to rely on the little guy in the room not knowing Chinese, when it's obviously the whole mechanism (the room, the books in the room, etc.) that is doing the "knowing", while the little guy is just a cog.

Part of what makes the rock/popcorn/wall thought experiment more appealing, even given your objections here, is that even if you imagine that you have offloaded the complex mapping somewhere else, the actual thinking-action that the mapping interprets is happening in the rock/popcorn/wall. The mapping itself is inert and passive at that point. So if you imagine consciousness as an activity that is equivalent to a physical process of computation you still have to imagine it taking place in the popcorn, not in the mapping.

You seem maybe to be implying that we have underinvestigated the claim that one really can arbitrarily map any complex computation to any finite collection of stuff (that this would e.g. imply that we have solved the halting problem). But I think these thought experiments don't require us to wrestle with that because they assume ad arguendo that you can instantiate the computations we're interested in (consciousness) in a headful of meat, and then try to show that if this is the case, many other finite collections of matter ought to be able to do the job just as well.

"the actual thinking-action that the mapping interprets"

I don't think this is conceptually correct. Looking at the chess-playing waterfall that Aaronson discusses, the mapping itself is doing all of the computation. The fact that the mapping ran in the past doesn't change the fact that it's the location of the computation, any more than the fact that it takes milliseconds for my nerve impulses to reach my fingers means that my fingers are doing the thinking in writing this essay. (Though given the typos you found, it would be convenient to blame them.)

they assume ad arguendo that you can instantiate the computations we're interested in (consciousness) in a headful of meat, and then try to show that if this is the case, many other finite collections of matter ought to be able to do the job just as well.

Yes, they assume that whatever runs the algorithm is experiencing running the algorithm from the inside. And yes, many specific finite systems can do so - namely, GPUs and CPUs, as well as the wetware in our head. But without the claim that arbitrary items can do these computations, it seems that the arguendo is saying nothing different than the conclusion - right?

I agree that the Chinese Room thought experiment dissolves into clarity once you realize that it is the room as a whole, not just the person, that implements the understanding.

But then wouldn't the mapping, like the inert book in the room, need to be included in the system?

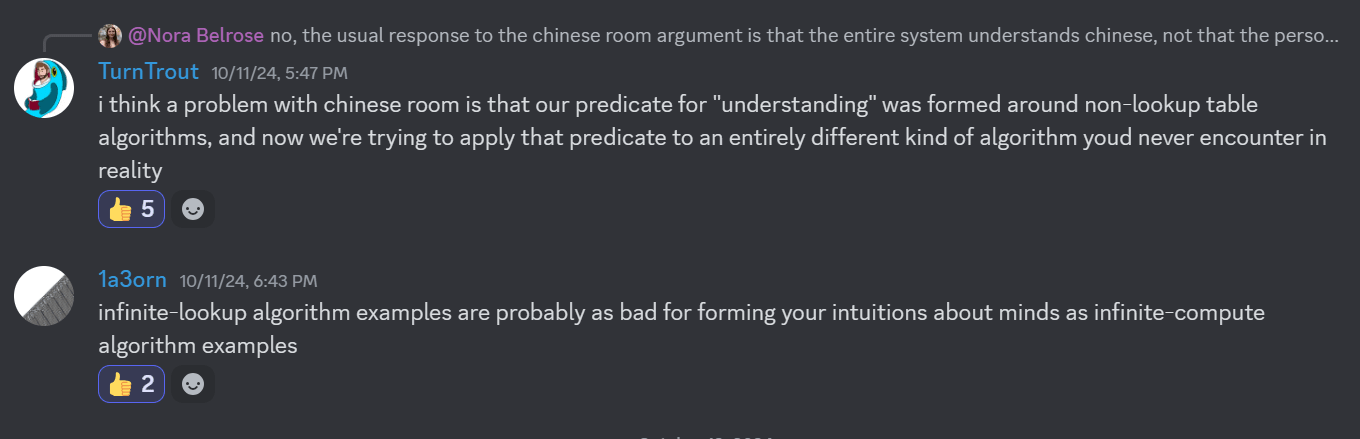

IMO, the entire Chinese room thought experiment dissolves into clarity once we remember that the intuitive meaning of understanding is formed around algorithms that are not lookup tables, because trying to create an infinite look-up table would be infeasible in reality, thus our intuitions go wrong in extreme cases.

I agree with the discord comments here on this point:

The portion of the argument that I contest is step 2 here (it's a summarized version):

- If Strong AI is true, then there is a program for Chinese, C, such that if any computing system runs C, that system thereby comes to understand Chinese.

- I could run C without thereby coming to understand Chinese.

- Therefore Strong AI is false.

Or this argument here:

Searle imagines himself alone in a room following a computer program for responding to Chinese characters slipped under the door. Searle understands nothing of Chinese, and yet, by following the program for manipulating symbols and numerals just as a computer does, he sends appropriate strings of Chinese characters back out under the door, and this leads those outside to mistakenly suppose there is a Chinese speaker in the room.

As stated, if the computer program is accessible to him, then for all intents and purposes, he does understand Chinese for the purposes of interacting until the program is removed (assuming that it completely characterizes Chinese and correctly works for all inputs).

I think the key issue is that people don't want to accept that if we were completely unconstrained physically, even very large look-up tables would be a valid answer to making an AI that is useful.

The book in the room isn't inert, though. It instructs the little guy on what to do as he manipulates symbols and stuff. As such, it is an important part of the computation that takes place.

The mapping of popcorn-to-computation, though, doesn't do anything equivalent to this. It's just an off-to-the-side interpretation of what is happening in the popcorn: it does nothing to move the popcorn or cause it to be configured in such a way. It doesn't have to even exist: if you just know that in theory there is a way to map the popcorn to the computation, then if (by the terms of the argument) the computation itself is sufficient to generate consciousness, the popcorn should be able to do it as well, with the mapping left as an exercise for the reader. Otherwise you are implying some special property of a headful of meat such that it does not need to be interpreted in this way for its computation to be equivalent to consciousness.

That doesn't quite follow to me. The book seems just as inert as the popcorn-to-consciousness map. The book doesn't change the incoming slip of paper (popcorn) in any way, it just responds with an inert static map to result in an outgoing slip of paper (consciousness), utilizing the map-and-popcorn-analyzing-agent-who-lacks-understanding (man in the room).

The book in the Chinese Room directs the actions of the little man in the room. Without the book, the man doesn't act, and the text doesn't get translated.

The popcorn map on the other hand doesn't direct the popcorn to do what it does. The popcorn does what it does, and then the map in a post-hoc way is generated to explain how what the popcorn did maps to some particular calculation.

You can say that "oh well, then, the popcorn wasn't really conscious until the map was generated; it was the additional calculations that went into generating the map that really caused the consciousness to emerge from the calculating" and then you're back in Chinese Room territory. But if you do this, you're left with the task of explaining how a brain can be conscious solely by means of executing a calculation before anyone has gotten around to creating a map between brain-states and whatever the relevant calculation-states might be. You have to posit some way in which calculations capable of embodying consciousness are inherent to brains but must be interpreted into being elsewhere.

Further, the ability to 'map' Turing machine states to integers implies that we have solved the halting problem — a logical impossibility.

Wait, how does mapping Turing machine states to integers require us to solve the halting problem?

We earlier mentioned that it is required that the finite mapping be precomputed. If it is for arbitrary Turing machines, including those that don't halt, we need infinite time, so the claim that we can map to arbitrary Turing machines fails. If we restrict it to those which halt, we need to check that before providing the map, which requires solving the halting problem to provide the map.
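A rough sketch of the precomputation problem, under toy assumptions: the hypothetical `tabulate` helper below tries to build the finite step list for a machine, given a step budget. The two "machines" (`countdown`, `count_up`) are made-up stand-ins, not real Turing machines. For a machine that halts, the table can be completed; for one that doesn't, no finite budget ever finishes, and in general there is no way to know in advance which case you are in.

```python
from typing import Callable, List, Optional

def tabulate(step: Callable[[int], Optional[int]],
             start: int, budget: int) -> Optional[List[int]]:
    """Try to precompute the full list of states a machine visits.

    `step` returns the next state, or None once the machine halts.
    Returns the completed list, or None if the budget ran out first.
    """
    trace = [start]
    for _ in range(budget):
        nxt = step(trace[-1])
        if nxt is None:
            return trace      # machine halted: the finite map exists
        trace.append(nxt)
    return None               # budget exhausted: we learned nothing definite

def countdown(n: int) -> Optional[int]:
    """Toy machine that halts when it reaches zero."""
    return None if n == 0 else n - 1

def count_up(n: int) -> Optional[int]:
    """Toy machine that never halts."""
    return n + 1

halting_table = tabulate(countdown, 3, budget=100)
non_halting_table = tabulate(count_up, 0, budget=100)
```

Raising the budget never turns the second result into a table. It only postpones the point at which we give up.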

Edit to add: I'm confused why this is getting "disagree" votes - can someone explain why or how this is an incorrect logical step, or

This isn't arguing the other side, just a request for clarification:

There are some Turing machines that we don't know and can't prove if they halt. There are some that are sufficiently simple that we can show that they halt.

But why does the discussion encompass the question of all possible Turing machines and their maps? Why isn't it sufficient to discuss the subset which are tractable within some compute threshold?

Epistemic status: very new to philosophy and theory of mind, but has taken a couple graduate courses in subjects related to the theory of computation.

I think there are two separate matters:

- I have a physical object that has a means to receive inputs and will do something based on those inputs. Suppose I now create two machines: one that takes 0s and 1s and converts it into something the object receives, and one that observes the actions of the physical object then spits out an output. Both of these machines operate in time that is simultaneously at most quadratic in the length of the input AND at most linear in the "run time" of the physical object. And both of these machines are "bijective".

If I create a program that has the same input/outputs as the above configuration (which is highly non-unique, and can vary significantly based on the choice of machines), there is some sense in which the physical object "computes" this program. This is kind of weak since the input/output converting machines can do a lot to emulate different programs, but at least you're getting things in a similar complexity class.

- You have a central nervous system (CNS) which is currently having a "subjective experience", whatever that means. It is true that your CNS can be viewed as the aforementioned physical object. And while it is also true that, in the previous framework, one would need a very long and complicated program, it also seems to be true that my subjective experience arises from just a specific sequence of inputs.

If we were to only consider how the physical object behaves with a few specific inputs, I think it's difficult to eliminate any possibilities for what the object is computing. When I see thought experiments like Putnam's rock, they make sense to me because we're only looking at a specific computation, not a full input-output set.

Edit: @Davidmanheim I've read your reply and agree that I've slightly misinterpreted your post. I'll think about if the above ideas can be salvaged from the angle of measuring information in a long but finite sequence (e.g. Kolmogorov complexity) and reply when I have time.

OK, so this is helpful, but if I understood you correctly, I think it's assuming too much about the setup. For #1, in the examples we're discussing, the states of the object aren't predictably changing in complex ways - just that it will change "states" in ways that can be predicted to follow a specific path, which can be mapped to some set of states. The states are arbitrary, and per the argument don't vary in some way that does any work - and so as I argued, they can be mapped to some set of consecutive integers. But this means that the actions of the physical object are predetermined in the mapping.

And the difference between that situation and the CNS is that we know the neural circuitry is doing work - the exact features are complex and only partly understood, but the result is clearly capable of doing computation in the sense of Turing machines.

Okay, let me know if this is a fair assessment:

- Let's consider someone meditating in a dark and mostly-sealed room with minimal sensory inputs, and they're meditating in a way that we can agree they're having a conscious experience. Let's pick a 1 second window and consider the CNS and local environment of the meditator during that window.

- (I don't know much physics, so this might need adjustment): Let's say we had a reasonable guess of an "initial wavefunction" of the meditator in that window. Maybe this hypothetical is unreasonable in a deep way and this deserves to be fought. But supposing it can be done, and we had a sufficiently powerful supercomputer, we could encode and simulate possible trajectories of this CNS over a one second window. CF suggests that there is a genuine conscious experience there.

- Now let's look at how one such simulation is encoded, which we could view as a long string of 0s and 1s. The tricky part (I think) is as follows: we have a way of understanding these 0s and 1s as particles and the process of interpreting these as states of particles is "simple". But I can't convert that understanding rigorously into the length of a program because all programs can do is convert one encoding into another (and presumably we've designed this encoding to be as straightforward-to-interpret as possible, instead of as short as possible).

- Let's say I have sand swirling around in a sandstorm. I likewise pick a section of this, and do something like the above to encode it as a sequence of integers in a manner that is as easy for a human to interpret as possible, and makes no effort to be compressed.

- Now I can ask for the K-complexity of the CNS string, given the sand swirling sequence as input (i.e. the size of the smallest Turing machine that prints the CNS string with the sand swirling sequence on its input tape). Divide this by the K-complexity of the CNS string. If the resulting fraction is close to zero, maybe there's a sense in which the sand swirling sequence is really emulating the meditator's conscious experience. But this ratio is probably closer to 1. (By the way, the choice of using K-complexity is itself suspect, but it can be swapped with other notions of complexity.)
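To make the ratio concrete, here is a very rough sketch. K-complexity is uncomputable, so this swaps in compressed length under `zlib` as a crude upper-bound proxy, and estimates the conditional term as the extra compressed bytes needed for the target once the "given" string has already been seen. The byte strings are random placeholders, not real CNS or sandstorm encodings, and `conditional_ratio` is a name made up for this example.

```python
import random
import zlib

def compressed_len(data: bytes) -> int:
    """Compressed size in bytes: a crude stand-in for K-complexity."""
    return len(zlib.compress(data, 9))

def conditional_ratio(target: bytes, given: bytes) -> float:
    """Proxy for K(target | given) / K(target).

    The conditional term is approximated by how much longer the
    compressed output gets when `target` is appended to `given`.
    """
    extra = max(compressed_len(given + target) - compressed_len(given), 0)
    return extra / compressed_len(target)

rng = random.Random(0)  # fixed seed so the placeholders are reproducible
cns_string = bytes(rng.getrandbits(8) for _ in range(2000))   # placeholder
sand_string = bytes(rng.getrandbits(8) for _ in range(2000))  # placeholder

ratio_unrelated = conditional_ratio(cns_string, sand_string)
ratio_copy = conditional_ratio(cns_string, cns_string)
```

With unrelated inputs the sand string gives the compressor nothing to reuse, so the ratio should sit near 1; when the "given" string is a copy of the target, it drops toward 0. A real compressor only sees fairly shallow regularities, so this says nothing about subtler relationships between the two strings.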

What I can't seem to shake is that it seems to be fundamentally important that we have some notion of 0s and 1s encoding things in a manner that is optimally "human-friendly". I don't know how this can be replaced with a way that avoids needing a sentient being.

That seems like a reasonable idea. It seems not at all related to what any of the philosophers proposed.

For their proposals, it seems like the computational process is more like:

1. Extract a specific string of 1s and 0s from the sandstorm's initial position, and another from its final position, with the same length as the length of the full description of the mind.

2. Calculate the bitwise sum of the initial mind state and the initial sand position.

3. Calculate the bitwise sum of the final mind state and the final sand position.

4. Take the output of step 2 and replace it with the output of step 3.

5. Declare that the sandstorm is doing something isomorphic to what the mind did. Ignore the fact that the internal process is completely unrelated, and all of the computation was done inside of the mind, and you're just copying answers.
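The steps above can be sketched in a few lines, reading "bitwise sum" as XOR. Everything here is a toy assumption: `mind_step` is a made-up stand-in for whatever the mind actually computes, and the sand bits are just random bytes. The point it illustrates is that the decoding works for any sand whatsoever, but only because the mind's final state was computed first and baked into the key.

```python
import secrets

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise sum (XOR) of two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("strings must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))

def mind_step(state: bytes) -> bytes:
    """Hypothetical stand-in for the real computation done by the mind."""
    return bytes((3 * b + 1) % 256 for b in state)

mind_initial = b"meditating"
mind_final = mind_step(mind_initial)   # all of the actual work happens here

# Step 1: read off arbitrary bit strings from the sandstorm.
sand_initial = secrets.token_bytes(len(mind_initial))
sand_final = secrets.token_bytes(len(mind_initial))

# Steps 2 and 3: bitwise sums of mind states and sand positions.
key_initial = xor_bytes(mind_initial, sand_initial)
key_final = xor_bytes(mind_final, sand_final)

# Steps 4 and 5: swap keys and "interpret" the sand as the mind.
decoded_initial = xor_bytes(sand_initial, key_initial)
decoded_final = xor_bytes(sand_final, key_final)
```

Here `decoded_final` equals `mind_final` no matter what the sand did, which is exactly the sense in which the sand contributes nothing and the keys are just copied answers.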

I'm going to read https://www.scottaaronson.com/papers/philos.pdf, https://philpapers.org/rec/PERAAA-7, and the appendix here: https://www.lesswrong.com/posts/dkCdMWLZb5GhkR7MG/ (as well as the actual original statements of Searle's Wall, Johnson's popcorn, and Putnam's rock), and when that's eventually done I might report back here or make a new post if this thread is long dead by then.

I think the point is valid, but (as I said here) I don't think this refutes the thought experiments (that purport to show that a wall/bag-of-popcorn/whatever is doing computation). I think the thought experiments show that it's very hard to objectively interpret computation, and your point is that there is a way to make nonzero progress on the problem by positing a criterion that rules out some interpretations.

Imo it's kind of like if someone made a thought experiment arguing that AI alignment is hard because there's a large policy space, and you responded by showing that there's an impact measure by which not every policy in the space is penalized equally. It would be a coherent argument, but it would be strange to say that it "refuted" the initial thought experiment, even if the impact measure happened to penalize a particular failure mode that the initial thought experiment used as an example. A refutation would more be a complete solution to the alignment problem, which no one has -- just as no one has a complete solution to the problem of objectively interpreting computation.

(I also wonder if this post is legible to anyone not already familiar with the arguments? It seems very content-dense per number of words/asking a lot of the reader.)

I agree that this wasn't intended as an introduction to the topic. For that, I will once again recommend Scott Aaronson's excellent mini-book explaining computational complexity to philosophers.

I agree that the post isn't a definition of what computation is - but I don't need to be able to define fire to be able to point out something that definitely isn't on fire! So I don't really understand your claim. I agree that it's objectively hard to interpret computation, but it's not at all hard to interpret the fact that the integers are less complex and doing less complex computation than, say, an exponential-time Turing machine - and given the specific arguments being made, neither is a wall or a bag of popcorn. Which, as I just responded to the linked comment, was how I understood the position being taken by Searle, Putnam, and Johnson. (And even this ignores that one implication of the difference in complexity is that the wall / bag of popcorn / whatever is not mappable to arbitrary computations, since the number of steps required for a computation may not be finite!)

but I don't need to be able to define fire to be able to point out something that definitely isn't on fire!

I guess I can see that. I just don't think that e.g. Mike Johnson would consider his argument refuted based on the post; I think he'd argue that the type of problems illustrated by the popcorn thought experiment are in fact hard (and, according to him, probably unsolvable). And I'd probably still invoke the thought experiment myself, too. Basically I continue to think they make a valid point, hence are not "refuted". (Maybe I'm being nit-picky? But I think the standards for claiming to have refuted sth should be pretty high.)

Yeah, perhaps refuting is too strong given that the central claim is that we can't know what is and is not doing computation - which I think is wrong, but requires a more nuanced discussion. However, the narrow claims they made inter alia were strong enough to refute, specifically by showing that their claims are equivalent to saying the integers are doing arbitrary computation - when making the claim itself requires the computation to take place elsewhere!

Crucially, the mappings to rocks or integers require the computation to be performed elsewhere to generate the mapping. Without the computation occurring externally, the mapping cannot be constructed, and thus, it is misleading to claim that the computation happens 'in' the rock or the integers. Further, Crucially, the mappings to rocks or integers require the computation to be performed elsewhere to generate the mapping. Without the computation occurring externally, the mapping cannot be constructed, and thus, it is misleading to claim that the computation happens 'in' the rock or the integers.

Either this is saying the same thing twice or I'm seeing double.

In a recent essay, Euan McLean suggested that a cluster of thought experiments “viscerally capture” part of the argument against computational functionalism. Without presenting an opinion about the underlying claim about consciousness, I will explain why these arguments fail as a matter of computational complexity. Which, parenthetically, is something that philosophers should care about.

To explain the question, McLean summarizes part of Brian Tomasik’s essay "How to Interpret a Physical System as a Mind." There, Tomasik discusses the challenge of attributing consciousness to physical systems, drawing on Hilary Putnam's "Putnam's Rock" thought experiment. Putnam suggests that any physical system, such as a rock, can be interpreted as implementing any computation. This is meant to challenge computational functionalism: if computation alone defines consciousness, then even a rock could be considered conscious.

Tomasik refers to Paul Almond’s (attempted) refutation of the idea, which says that a single electron could be said to implement arbitrary computation in the same way. Tomasik "does not buy" this argument, but I think a related argument succeeds. That is, a finite list of consecutive integers can be used to 'implement' any Turing machine using the same logic as Putnam’s rock. Each step N of the machine's execution corresponds directly to integer N in the list. But this mapping is trivial, doing no more than listing the steps of the computation.
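As a minimal sketch of how trivial this mapping is, consider a toy machine (a made-up bit-flipper, chosen only for illustration) and the "integer implementation" of one of its runs. The names `FLIP_RULES` and `run` are hypothetical. Note that the only way the code obtains the map is by running the machine.

```python
from typing import Dict, List, Tuple

# (state, symbol) -> (new_state, written_symbol, head_move)
Rules = Dict[Tuple[str, str], Tuple[str, str, int]]
Config = Tuple[str, str, int]  # (state, tape contents, head position)

# Hypothetical toy machine: flip each bit, halt at the first blank ("_").
FLIP_RULES: Rules = {
    ("scan", "0"): ("scan", "1", +1),
    ("scan", "1"): ("scan", "0", +1),
    ("scan", "_"): ("halt", "_", 0),
}

def run(rules: Rules, tape: str, state: str = "scan") -> List[Config]:
    """Run the machine and return every configuration it passes through."""
    cells = list(tape) + ["_"]
    head = 0
    trace = [(state, "".join(cells), head)]
    while state != "halt":
        state, cells[head], move = rules[(state, cells[head])]
        head += move
        trace.append((state, "".join(cells), head))
    return trace

# All of the computation happens inside run().
trace = run(FLIP_RULES, "101")

# The "implementation" by consecutive integers: integer N stands for step N.
integer_map: Dict[int, Config] = dict(enumerate(trace))
```

The integers 0 through 4 now "implement" the run in Putnam's sense, but `integer_map` is just the trace with labels attached; the integers themselves contributed nothing.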

It might seem that the above proves too much. Perhaps every mapping requires doing the computation to construct? This is untrue, as the notion of a reduction in computational complexity makes clear. That is, we can build a mapping that is “simple” relative to the complexity of the Turing machine itself, and such a mapping succeeds in showing that both systems, the one being mapped from and the one being mapped to, are actually performing arbitrary computations. Rocks and integers do not qualify, since any mapping onto them must be as complex as the original Turing machine.

Does the mapping to rocks or integers do anything at all? No. Crucially, the mappings to rocks or integers require the computation to be performed elsewhere to generate the mapping. Without the computation occurring externally, the mapping cannot be constructed, and thus, it is misleading to claim that the computation happens 'in' the rock or the integers. Further, the ability to 'map' Turing machine states to integers implies that we have solved the halting problem — a logical impossibility. But even if we can guarantee the machine halts, the core issue remains: constructing the mapping requires external computation, refuting the idea that the computation occurs in the rock.