Multiple Factor Explanations Should Not Appear One-Sided

In *Policy Debates Should Not Appear One-Sided*, Eliezer Yudkowsky argues that arguments on questions of fact should be one-sided, whereas arguments on policy questions should not:

> On questions of simple fact (for example, whether Earthly life arose by natural selection) there's a legitimate expectation that the argument should be a one-sided battle; the facts themselves are either one way or another, and the so-called "balance of evidence" should reflect this. Indeed, under the Bayesian definition of evidence, "strong evidence" is just that sort of evidence which we only expect to find on one side of an argument.
>
> But there is no reason for complex actions with many consequences to exhibit this onesidedness property.

The reason for this is primarily that natural selection has caused all sorts of observable phenomena. With a bit of ingenuity, we can infer that natural selection has caused them, and hence they become evidence for natural selection. The evidence for natural selection thus has a common cause, which means that we should expect the argument to be one-sided.

In contrast, even if a certain policy, say lower taxes, is the right one, the rightness of this policy does not cause its evidence (or the arguments for this policy, which is a more natural expression), the way natural selection causes its evidence. Hence there is no common cause of all of the valid arguments of relevance for the rightness of this policy, and hence no reason to expect that all of the valid arguments should support lower taxes. If someone nevertheless believes this, the best explanation of their belief is that they suffer from some cognitive bias such as the affect heuristic.

(In passing, I might mention that I think that the fact that moral debates are not one-sided indicates that moral realism is false, since if moral realism were true, moral facts should provide us with one-sided evidence on moral questions, just like natural selection provides us with one-sided evidence on

Cf. this Bostrom quote.

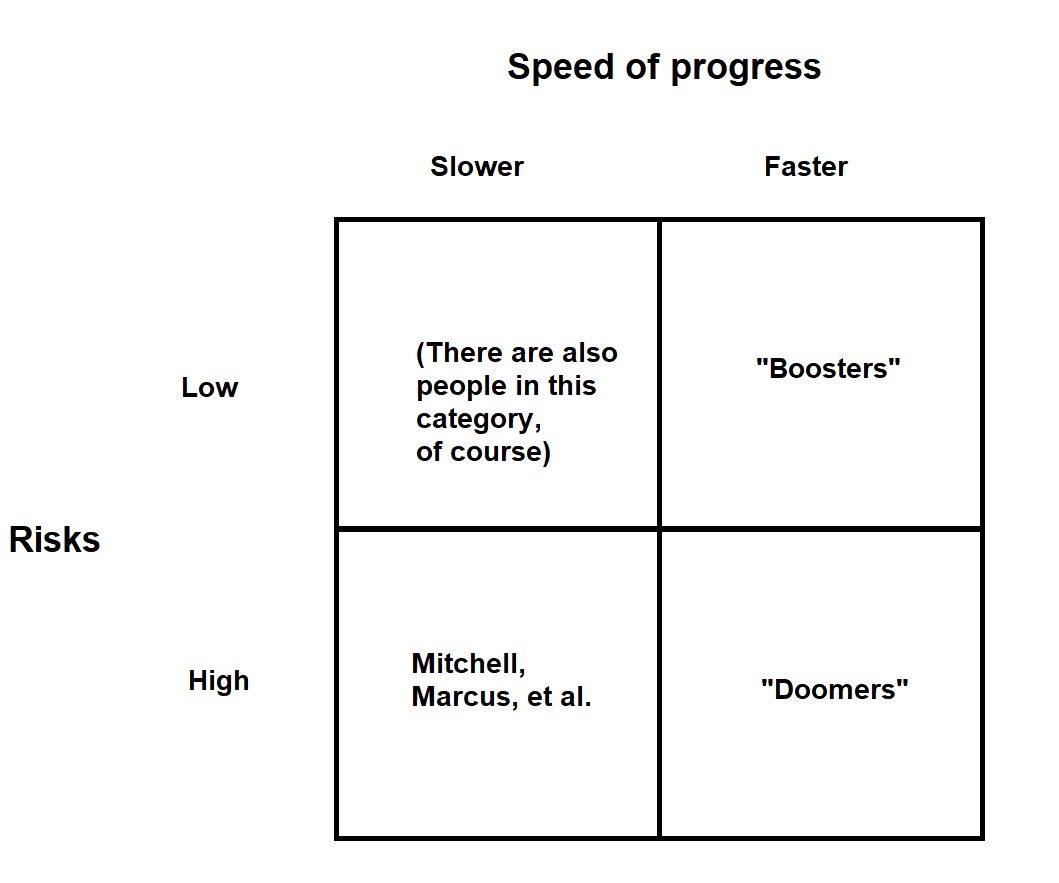

Re this:

A bit nit-picky, but a recent paper studying West Eurasia found significant evolution over the last 14,000 years.