There's a related confusion between uses of "theory" that are neutral about the likelihood of the theory being true, and uses that suggest that the theory isn't proved to be true.

Cf the expression "the theory of evolution". Scientists who talk about the "theory" of evolution don't thereby imply anything about its probability of being true - indeed, many believe it's overwhelmingly likely to be true. But some critics interpret this expression differently, saying it's "just a theory" (meaning it's not the established consensus).

Thanks for this thoughtful article.

It seems to me that the first and the second examples have something in common, namely an underestimate of the degree to which people will react to perceived dangers. I think this is fairly common in speculations about potential future disasters, and have called it sleepwalk bias. It seems like something that one should be able to correct for.

I think there is an element of sleepwalk bias in the AI risk debate. See this post where I criticise a particular vignette.

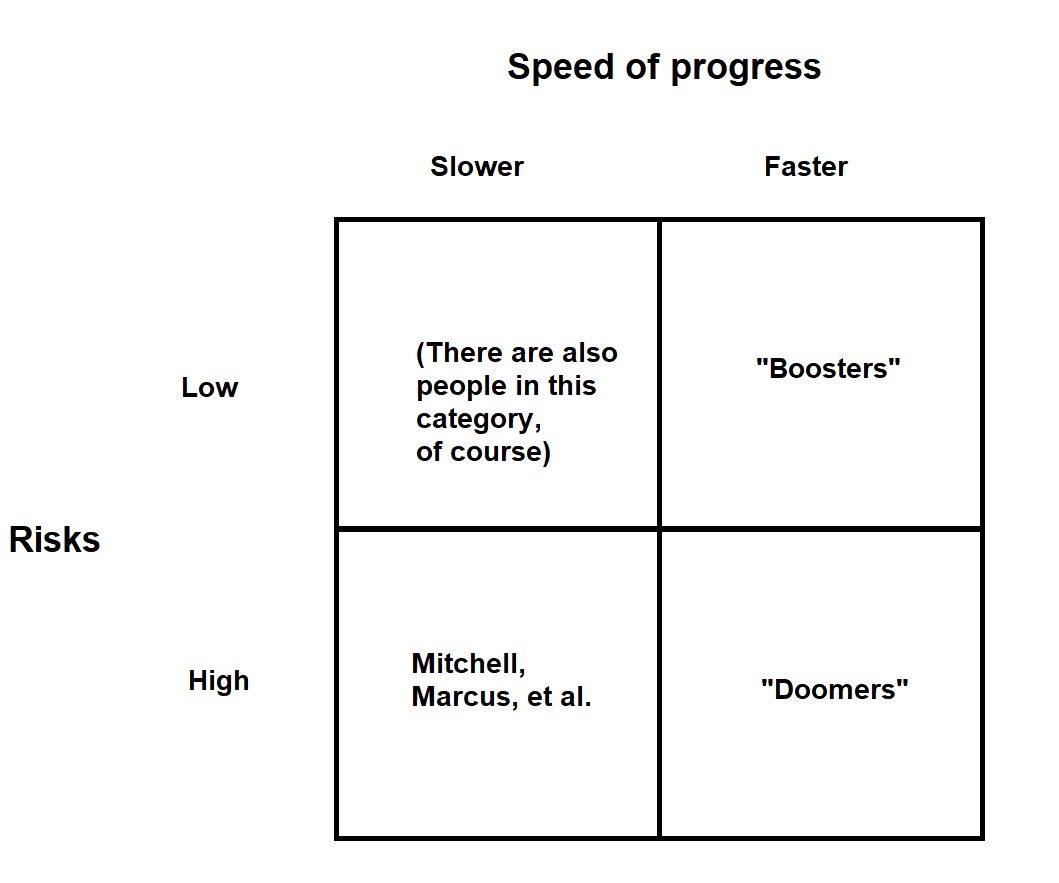

Yeah, I think so. But since those people generally find AI less important (there's both less of an upside and less of a downside) they generally participate less in the debate. Hence there's a bit of a selection effect hiding those people.

There are some people who arguably are in that corner who do participate in the debate, though - e.g. Robin Hanson. (He thinks some sort of AI will eventually be enormously important, but that the near-term effects, while significant, will not be at the level people on the right side think.)

Looking at the 2x2 I posted I wonder if you could call the lower left corner something relating to "non-existential risks". That seems to capture their views. It might be hard to come up with a catch term, though.

The upper left corner could maybe be called "sceptics".

Not exactly what you're asking for, but maybe a 2x2 could be food for thought.

Realist and pragmatist don't seem like the best choices of terms, since they pre-judge the issue a bit in the direction of that view.

Thanks.

I think psychologists-scientists should have unusually good imaginations about the potential inner workings of other minds, which many ML engineers probably lack.

That's not clear to me, given that AI systems are so unlike human minds.

tell your fellow psychologist (or zoopsychologist) about this, maybe they will be incentivised to make a switch and do some ground-laying work in the field of AI psychology

Do you believe that (conventional) psychologists would be especially good at what you call AI psychology, and if so, why? I guess other skills (e.g. knowledge of AI systems) could be important.

I think that's exactly right.

I think that could be valuable.

It might be worth testing quite carefully for robustness - to ask multiple different questions probing the same issue, and see whether responses converge. My sense is that people's stated opinions about risks from artificial intelligence, and existential risks more generally, could vary substantially depending on framing. Most haven't thought a lot about these issues, which likely contributes. I think a problem with some studies on these issues is that researchers over-generalise from highly framing-dependent survey responses.

Cf this Bostrom quote.

Re this:

A bit nit-picky, but a recent paper studying West Eurasia found significant evolution over the last 14,000 years.