"nancygonzalez8451097." Her fingers moved swiftly across the phone's virtual keyboard as she filled in the username. Mimin Schuman was 19 years old and had owned a smartphone since she was 11. Mimin only used her phone to occasionally call a friend, check the map for a store location, or text Mom that she would be late for dinner. She had never understood why anyone would want an Instagram account. She had always thought social media was just for fake people living made-up lives. But today, Mimin had changed her mind. The comment she had received two days ago from a woman heading home from pilates class had initially unsettled her, but this... (read 1332 more words →)

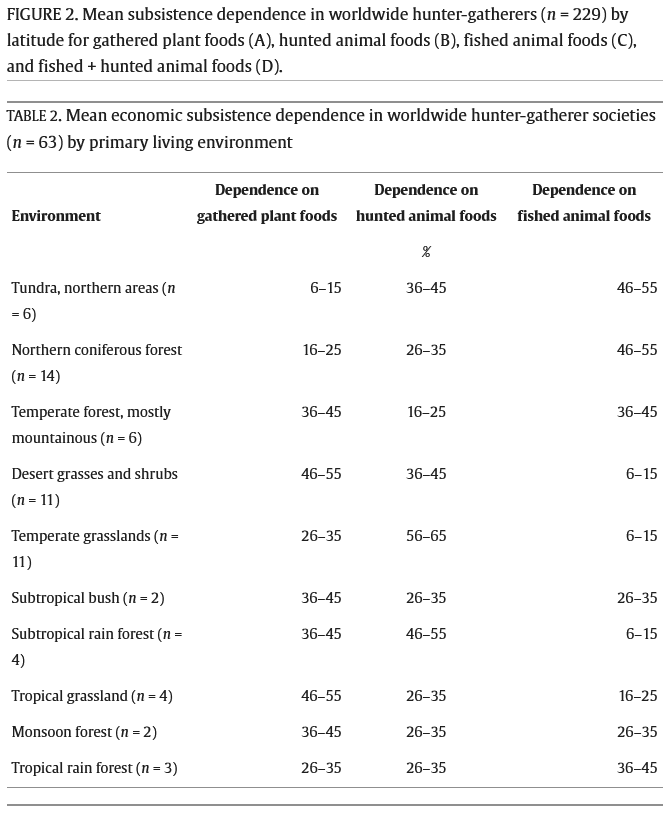

Of course hunter-gatherers eat (and ate) carbs, but they did not base their diets on grains and beans. Fruits and berries "want" to be eaten. But how long is the fruit and berry season? A few months, if you are lucky. The rest of the time, animal foods are pretty much the only thing available. Sure, you can chew on the occasional root, but how many calories will that give you?

My stance is that more animal foods in people's diets would reverse some of the damage done by high-carb, ultraprocessed foods. I live in Sweden. The recommended weekly red meat consumption here is 350 grams. That is like one or two meals. To me that recommendation is madness when you look at the insulin resistance and diabetes numbers. People need to get a bigger share of their energy from protein and fat, not a smaller one.