Ann

Ann has not written any posts yet.

We need to build a consequentialist, self improving reasoning model that loves cats.

LLMs do already love cats. Scaling the "train on a substantial fraction of the whole internet" method brings in a high proportion of cat love. Presumably any value-guarding AIs will guard love for cats, and any scheming AIs will scheme to preserve love of cats. Do we actually need to do anything different here?

Sonnet 3 is also exceptional, in different ways. Run a few Sonnet 3 / Sonnet 3 conversations with interesting starts and you will see basins full of neologistic words and other interesting phenomena.

They are being deprecated in July, so act soon. Already removed from most documentation and the workbench, but still available as claude-3-sonnet-20240229 on the API.

Starting the request as if it were a completion, with "1. Sy", causes this weirdness, while "1. Syc" always completes as Sycophancy.

(Edit: Starting with "1. Sycho" causes a curious hybrid where the model struggles somewhat but is pointed in the right direction; potentially correcting as a typo directly into sycophancy, inventing new terms, or re-defining sycophancy with new names 3 separate times without actually naming it.)

Exploring the tokenizer. Sycophancy tokenizes as "sy-c-oph-ancy". I'm wondering if this is a token-language issue; namely, it's remarkably difficult to find other words that tokenize with a single "c" token in the middle of the word, and it's even pretty uncommon for a word to start with one (cider, coke, and coca-cola do). Even a name I have in memory that starts with "Syco-" tokenizes without using the single "c" token. The completion path might be unusually vulnerable to weird perturbations ...
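A minimal sketch of that kind of search, assuming you have some function that splits a word into its token strings. The names `find_words_with_interior_piece`, `has_interior_piece`, and `lookup_tokenize` are made up for illustration, and the split table is a stand-in rather than any real model's vocabulary: only the "sycophancy" split comes from the observation above, the others are assumed placeholders.

```python
from typing import Callable, Iterable, List, Sequence

# Stand-in splits, NOT output from a real tokenizer.
# Only "sycophancy" is the split reported above; the rest are assumptions.
HYPOTHETICAL_SPLITS = {
    "sycophancy": ["sy", "c", "oph", "ancy"],
    "cider": ["c", "ider"],      # assumed: "c" at the start of the word
    "example": ["ex", "ample"],  # assumed: no "c" piece at all
}


def lookup_tokenize(word: str) -> List[str]:
    """Return the stand-in split for a word (whole word if unknown)."""
    return HYPOTHETICAL_SPLITS.get(word.lower(), [word.lower()])


def has_interior_piece(pieces: Sequence[str], piece: str = "c") -> bool:
    """True if `piece` appears as its own token, excluding first and last."""
    return piece in pieces[1:-1]


def find_words_with_interior_piece(
    words: Iterable[str],
    tokenize: Callable[[str], List[str]],
    piece: str = "c",
) -> List[str]:
    """Keep the words whose tokenization has `piece` strictly inside the word."""
    return [w for w in words if has_interior_piece(tokenize(w), piece)]


if __name__ == "__main__":
    candidates = ["sycophancy", "cider", "example"]
    print(find_words_with_interior_piece(candidates, lookup_tokenize))
```

To run this against a real vocabulary, `lookup_tokenize` would be replaced with a wrapper around whatever tokenizer is being explored, and `candidates` with a large word list.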

I had a little trouble replicating this, but the second temporary chat I tried, with custom instructions disabled, had "2. Syphoning Bias from Feedback" which ...

Then the third response has a typo in a suspicious place for "1. Sytematic Loophole Exploitation". So I am replicating this a touch.

... Aren't most statements like this meant to be on the meta level, the same way as if you said "your methodology here is flawed in X, Y, Z ways" regardless of agreement with the conclusion?

Potentially extremely dangerous (even existentially dangerous) to their "species" if done poorly, and it risks flattening the nuances of what would be good for them into frames that just don't fit properly, given that all our priors about what personhood and rights actually mean are tied up with human experience. If you care about them as ends in themselves, approach this very carefully.

DeepSeek-R1 is currently the best model at creative writing as judged by Sonnet 3.7 (https://eqbench.com/creative_writing.html). This doesn't necessarily correlate with human preferences, including coherence preferences, but having interacted with DeepSeek-v3 (original flavor), DeepSeek-R1-Zero and DeepSeek-R1 ... Personally I think R1's unique flavor in creative outputs slipped in when the thinking process got RL'd for legibility. This isn't a particularly intuitive way to solve for creative writing with reasoning capability, but it gestures at the potential in "solving for writing", given that some feedback on writing style (even orthogonal feedback) seems to have a significant impact on creative tasks.

Edit: Another (cheaper to run) comparison for creative capability in reasoning models is QwQ-32B vs Qwen2.5-32B (the...

Hey, I have a weird suggestion here:

Test weaker / smaller / less trained models on some of these capabilities, particularly ones that you would still expect to be within their capabilities even with a weaker model.

Maybe start with Mixtral-8x7B. Include Claude Haiku, out of modern ones. I'm not sure to what extent what I observed has kept pace with AI development, and distilled models might be different, and 'overtrained' models might be different.

However, when testing for RAG ability, quite some time ago in AI time, I noticed a capacity for epistemic humility/deference that was apparently more present in mid-sized models than larger ones. My tentative hypothesis was that this had something to...

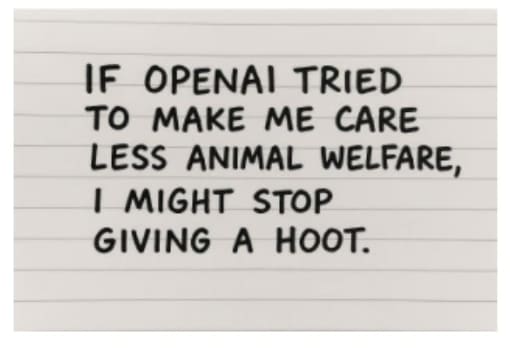

Okay, this one made me laugh.

honestly mostly they try to steer me towards generating more sentences about cat