All of AnnaSalamon's Comments + Replies

- A man being deeply respected and lauded by his fellow men, in a clearly authentic and lasting way, seems to be a big female turn-on. Way way way bigger effect size than physique, best as I can tell.

- …but the symmetric thing is not true! Women cheering on one of their own doesn't seem to make men want her more. (Maybe something else is analogous, the way female "weight lifting" is beautification?)

My guess at the analogous thing: women being kind/generous/loving seems to me like a thing many men have found attractive across times and cultures, and seems to me ...

Steven Byrnes writes:

"For example, I expect that AGIs will be able to self-modify in ways that are difficult for humans (e.g. there’s no magic-bullet super-Adderall for humans), which impacts the likelihood of your (1a)."

My (1a) (and related (1b)), for reference:

...(1a) “You” (the decision-maker process we are modeling) can choose anything you like, without risk of losing control of your hardware. (Contrast case: if the ruler of a country chooses unpopular policies, they are sometimes ousted. If a human chooses dieting/unrewarding problems/s

I just paraphrased the OP for a friend who said he couldn't decipher it. He said it helped, so I'm copy-pasting here in case it clarifies for others.

I'm trying to say:

A) There're a lot of "theorems" showing that a thing is what agents will converge on, or something, that involve approximations ("assume a frictionless plane") that aren't quite true.

B) The "VNM utility theorem" is one such theorem, and involves some approximations that aren't quite true. So does e.g. Steve Omohundro's convergent instrumental drives, the "Gandhi folk theorems" sho...

There is a problem that, other things equal, agents that care about the state of the world in the distant future, to the exclusion of everything else, will outcompete agents that lack that property. This is self-evident, because we can operationalize “outcompete” as “have more effect on the state of the world in the distant future”.

I am not sure about that!

One way this argument could fail: maybe agents who care exclusively about the state of the world in the distant future end up, as part of their optimizing, creating other agents who care ...

or more centrally, long after I finish the course of action.

I don't understand why the more central thing is "long after I finish the course of action" as opposed to "in ways that are clearly 'external to' the process called 'me', that I used to take the actions."

I was trying to explain to Habryka why I thought (1), (3) and (4) are parts of the assumptions under which the VNM utility theorem is derived.

I think all of (1), (2), (3) and (4) are part of the context I've usually pictured in understanding VNM as having real-world application, at least. And they're part of this context because I've been wanting to think of a mind as having persistence, and persistent preferences, and persistent (though rationally updated) beliefs about what lotteries of outcomes can be chosen via particular physical actions, and st...

I... don't think I'm taking the hidden order of the universe non-seriously. If it matters, I've been obsessively rereading Christopher Alexander's "The Nature of Order" books, and trying to find ways to express some of what he's looking at in LW-friendly terms; this post is part of an attempt at that. I have thousands and thousands of words of discarded drafts about it.

Re: why I think there might be room in the universe for multiple aspirational models of agency, each of which can be self-propagating for a time, in some contexts: Biology and cu...

I agree. I love "Notes on the Synthesis of Form" by Christopher Alexander, as a math model of things near your vase example.

I agree with your claim that VNM is in some ways too lax.

vNM is ... too restrictive ... [because] vNM requires you to be risk-neutral. Risk aversion violates preferences being linear in probability ... Many people desperately want risk aversion, but that's not the vNM way.

Do many people desperately want to be risk averse about the probability a given outcome will be achieved? I agree many people want to be loss averse about e.g. how many dollars they will have. Scott Garrabrant provides an example in which a couple wishes to be fair to its membe...
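To make the distinction above concrete, here is a small worked sketch. It assumes a hypothetical agent whose utility over dollars is u(x) = sqrt(x), chosen only for illustration. The agent's expected utility is a probability-weighted sum, so it is linear in the probability of each outcome, as the VNM axioms require; yet because u is concave, the agent prefers a sure $40 to a 50/50 gamble on $0 or $100, i.e. it is risk averse about dollars.

```python
import math

def utility(dollars: float) -> float:
    """Hypothetical concave utility over dollars (an illustrative assumption)."""
    return math.sqrt(dollars)

def expected_utility(lottery: list[tuple[float, float]]) -> float:
    """VNM expected utility: a probability-weighted sum, hence linear in each probability."""
    return sum(p * utility(x) for p, x in lottery)

def win_lottery(p: float) -> list[tuple[float, float]]:
    """Lottery paying $100 with probability p and $0 otherwise."""
    return [(p, 100.0), (1.0 - p, 0.0)]

# Linear in probability: EU(p) = p * u(100) + (1 - p) * u(0) = 10 * p.
for p in (0.0, 0.25, 0.5, 1.0):
    print(p, expected_utility(win_lottery(p)))

gamble = win_lottery(0.5)
expected_dollars = sum(p * x for p, x in gamble)        # expected payout in dollars
certainty_equivalent = expected_utility(gamble) ** 2    # invert sqrt to get dollars
print(expected_dollars, certainty_equivalent)           # the sure amount worth the same as the gamble
print(expected_utility(gamble) < utility(40.0))         # prefers a sure $40 to the gamble
```

The gamble is worth $50 in expectation but only $25 as a sure thing to this agent, so risk aversion about dollars fits inside the VNM framework; what VNM rules out is preferences that are nonlinear in the probabilities themselves.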

The VNM axioms refer to an "agent" who has "preferences" over lotteries of outcomes. It seems to me this is challenging to interpret if there isn't a persistent agent, with a persistent mind, who assigns Bayesian subjective probabilities to outcomes (which I'm assuming it has some ability to think about and care about, i.e. my (4)), and who chooses actions based on their preferences between lotteries. That is, it seems to me the axioms rely on there being a mind that is certain kinds of persistent/unaffected.

Do you (habryka) mean there's a new ...

The standard dutch-book arguments seem like pretty good reason to be VNM-rational in the relevant sense.

I mean, there are arguments about as solid as the “VNM utility theorem” pointing to CDT, but CDT is nevertheless not always the thing to aspire to, because CDT is based on an assumption/approximation that is not always a good-enough approximation (namely, CDT assumes our minds have no effects except via our actions, eg it assumes our minds have no direct effects on others’ predictions about us).

Some assumptions the VNM utility theorem is based on, that I...
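For readers who haven't seen one, here is a minimal sketch of a money pump, one standard form of the dutch-book-style arguments mentioned above, aimed at intransitive preferences. The item names, the `money_pump` helper, and the fee are hypothetical. Note the assumption spelled out in the docstring: the argument only bites if the same agent, with the same preferences, persists across every trade.

```python
# Hypothetical cyclic preferences: (better, worse) pairs, so A > B, B > C, and C > A.
PREFERS = {("A", "B"), ("B", "C"), ("C", "A")}

def money_pump(start: str, fee: float, rounds: int) -> tuple[str, float]:
    """Offer the agent trades it strictly prefers, charging a small fee each time.

    Assumes the same agent, with unchanged preferences, persists across every trade.
    Returns the item held at the end and the total amount paid.
    """
    # For each item the agent holds, the item it would pay to swap up to.
    upgrade = {worse: better for better, worse in PREFERS}
    held, paid = start, 0.0
    for _ in range(rounds):
        held = upgrade[held]
        paid += fee
    return held, paid

held, paid = money_pump("A", fee=1.0, rounds=3)
print(held, paid)  # back to A after a full cycle, having paid three fees
```

Each trade looks like an improvement to the agent, but after one full cycle (A to C to B to A) it holds what it started with and is strictly poorer.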

"Global evaluation" isn't exactly what I'm trying to posit; more like a "things bottom-out in X currency" thing.

Like, in the toy model about $ from Atlas Shrugged, an heir who spends money foolishly eventually goes broke, and can no longer get others to follow their directions. This isn't because the whole economy gets together to evaluate their projects. It's because they spend their currency locally on things again and again, and the things they bet on do not pay off, do not give them new currency.

I think the analog happens in me/others: I'll get excited about some topic, pursue it for a while, get back nothing, and decide the generator of that excitement was boring after all.

Fair point; I was assuming you had the capacity to lie/omit/deceive, and you're right that we often don't, at least not fully.

I still prefer my policy to the OP's, but I accept your argument that mine isn't a simple Pareto improvement.

Still:

- I really don't like letting social forces put "don't think about X" flinches into my or my friends' heads; and the OP's policy seems to me like an instance of that;

- Much less importantly: as an intelligent/self-reflective adult, you may be better at hiding info if you know what you're hiding, compared to if you have guesse

I don't see an advantage to remaining agnostic, compared to:

1) Acquire all the private truth one can.

Plus:

2) Tell all the public truth one is willing to incur the costs of, with priority for telling public truths about what one would and wouldn't share (e.g. prioritizing to not pose as more truth-telling than one is).

--

The reason I prefer this policy to the OP's "don't seek truth on low-import highly-politicized matters" is that I fear not-seeking-truth begets bad habits. Also I fear I may misunderstand how important things are if I allow politics to in...

One of the advantages to remaining agnostic comes from the same argument that users put forth in the comment sections on this very site way back in the age of the Sequences (I can look up the specific links if people really want me to, they were in response to the Doublethink Sequence) for why it's not necessarily instrumentally rational for limited beings like humans to actually believe in the Litany of Tarski: if you are in a precarious social situation, in which retaining status/support/friends/resources is contingent on you successfully signaling to yo...

Yes, this is a good point, relates to why I claimed at top that this is an oversimplified model. I appreciate you using logic from my stated premises; helps things be falsifiable.

It seems to me:

- Somehow people who are in good physical health wake up each day with a certain amount of restored willpower. (This is inconsistent with the toy model in the OP, but is still my real / more-complicated model.)

- Noticing spontaneously-interesting things can be done without willpower; but carefully noticing superficially-boring details and taking notes in hop

Thanks for asking. The toy model of “living money”, and the one about willpower/burnout, are meant to appeal to people who don’t necessarily put credibility in Rand; I’m trying to have the models speak for themselves; so you probably *are* in my target audience. (I only mentioned Rand because it’s good to credit models’ originators when using their work.)

Re: what the payout is:

This model suggests what kind of thing an “ego with willpower” is — where it comes from, how it keeps in existence:

- By way of analogy: a squirrel is a being who turns acor

I mean, I see why a party would want their members to perceive the other party's candidate as having a blind spot. But I don't see why they'd typically be able to do this, given that the other party's candidate would rather not be perceived this way, the other party would rather their candidate not be perceived this way, and, naively, one might expect voters to wish not to be deluded. It isn't enough to know there's an incentive in one direction; there's gotta be more like a net incentive across capacity-weighted players, or else an easier time creating appearance-of-blindspots vs creating visible-lack-of-blindspots, or something. So, I'm somehow still not hearing a model that gives me this prediction.

You raise a good point that Susan’s relationship to Tusan and Vusan is part of what keeps her opinions stuck/stable.

But I’m hopeful that if Susan tries to “put primary focal attention on where the scissors comes from, and how it is working to trick Susan and Robert at once”, this’ll help with her stuckness re: Tusan and Vusan. Like, it’ll still be hard, but it’ll be less hard than “what if Robert is right” would be.

Reasons I’m hopeful:

I’m partly working from a toy model in which (Susan and Tusan and Vusan) and (Robert and Sobert and Tobert) all used ...

I don't follow this model yet. I see why, under this model, a party would want the opponent's candidate to enrage people / have a big blind spot (and how this would keep the extremes on their side engaged), but I don't see why this model would predict that they would want their own candidate to enrage people / have a big blind spot.

Thanks; I love this description of the primordial thing. I had not noticed it this clearly/articulately before; it is helpful.

Re: why I'm hopeful about the available levers here:

I'm hoping that, instead of Susan putting primary focal attention on Robert ("how can he vote this way, what is he thinking?"), Susan might be able to put primary focal attention on the process generating the scissors statements: "how is this thing trying to trick me and Robert, how does it work?"

A bit like how a person watching a commercial for sugary snacks, instead of putt...

Or: by seeing themselves, and a voter for the other side, as co-victims of an optical illusion, designed to trick each of them into being unable to find another's areas of true seeing. And by working together to figure out how the illusion works, while seeing it as a common enemy.

But my specific hypothesis here is that the illusion works by misconstruing the other voter's "Robert can see a problem with candidate Y" as "Robert can't see the problem with candidate X", and that if you focus on trying to decode the first, the illusion won't kick in as much.

I like your conjecture about Susan's concern about giving Robert steam.

I am hoping that if we decode the meme structure better, Susan could give herself and Robert steam re: "maybe I, Susan, am blind to some thing, B, that matters" without giving steam to "maybe A doesn't matter, maybe Robert doesn't have a blind spot there." Like, maybe we can make a more specific "try having empathy right at this part" request that doesn't confuse things the same way. Or maybe we can make a world where people who don't bother to try that look like schmucks who aren't memetically savvy, or something. I think there might be room for something like this?

IIUC, I agree with your vision being desirable. (And, IDK, it's sort of plausible that you can basically do it with a good toolbox that could be developed straightforwardly-ish.)

But there might be a gnarly, fundamental-ish "levers problem" here:

- It's often hard to do [the sort of empathy whereby you see into your blindspot that they can see]

- without also doing [the sort of empathy that leads to you adopting some of their values, or even blindspots].

(A levers problem is analogous to a buckets problem, but with actions instead of beliefs. You have an avai...

If we can get good enough models of however the scissors-statements actually work, we might be able to help more people be more in touch with the common humanity of both halves of the country, and more able to heal blind spots.

E.g., if the above model is right, maybe we could tell at least some people "try exploring the hypothesis that Y-voters are not so much in favor of Y, as against X -- and that you're right about the problems with Y, but they might be able to see something that you and almost everyone you talk to is systematically blinded to about X."...

I think this idea is worth exploring. The first bit seems pretty easy to convey and get people to listen to:

"try exploring the hypothesis that Y-voters are not so much in favor of Y, as against X -- and that you're right about the problems with Y...

But the second bit

... but they might be able to see something that you and almost everyone you talk to is systematically blinded to about X."

sounds like a very bitter pill to swallow, and therefore hard to get people to listen to.

I think motivated reasoning effects turn our attention quickly away from ideas we t...

Resonating from some of the OP:

Sometimes people think I have a “utility function” that is small and is basically “inside me,” and that I also have a set of beliefs/predictions/anticipations that is large, richly informed by experience, and basically a pointer to stuff outside of me.

I don’t see a good justification for this asymmetry.

Having lived many years, I have accumulated a good many beliefs/predictions/anticipations about outside events: I believe I’m sitting at a desk, that Biden is president, that 2+3=5, and so on and so on. These beliefs came...

And this requires what I've previously called "living from the inside," and "looking out of your own eyes," instead of only from above. In that mode, your soul is, indeed, its own first principle; what Thomas Nagel calls the "Last Word." Not the seen-through, but the seer (even if also: the seen).

I like this passage! It seems to me that sometimes I (perceive/reason/act) from within my own skin and perspective: "what do I want now? what's most relevant? what do I know, how do I know it, what does it feel like, why do I care? what even am I, this proce...

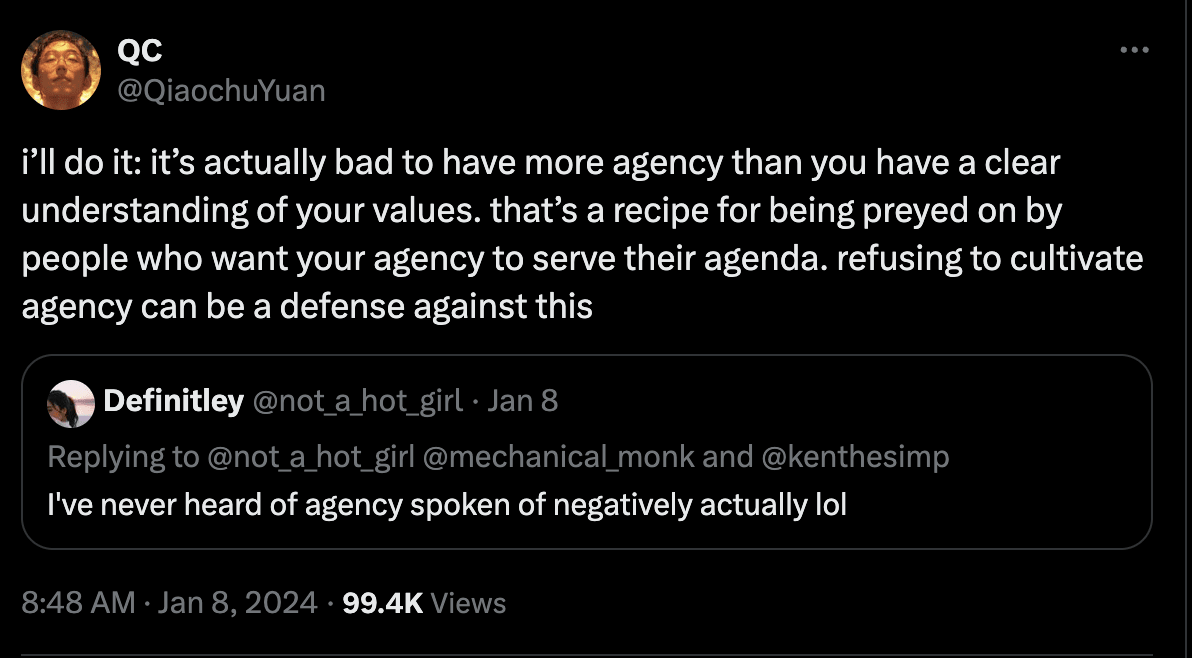

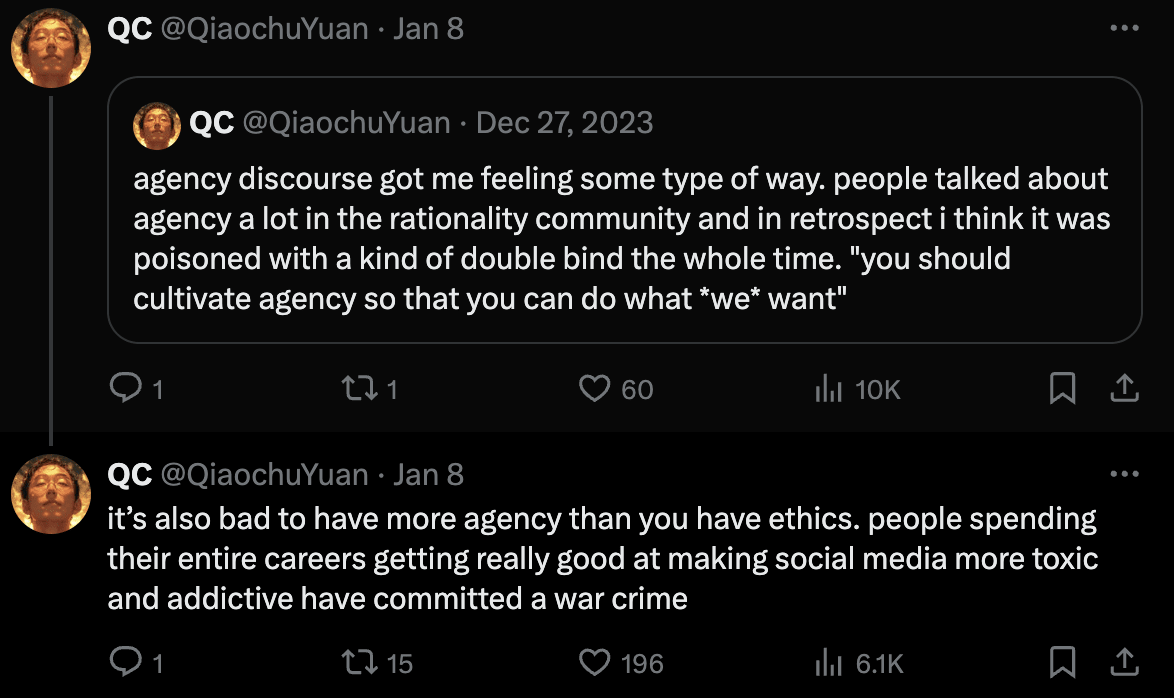

(I don't necessarily agree with QC's interpretation of what was going on as people talked about "agency" -- I empathize some, but empathize also with e.g. Kaj's comment in a reply, saying that Kaj doesn't recognize this from Kaj's 2018 CFAR mentorship training and did not find pressures there to coerce particular kinds of thinking).

My point in quoting this is more like: if people don't have much wanting of their own, and are immersed in an ambient culture that has opinions on what they should "want," experiences such as QC's seem sorta l...

Some partial responses (speaking only for myself):

1. If humans are mostly a kludge of impulses, including the humans you are training, then... what exactly are you hoping to empower using "rationality training"? I mean, what wants-or-whatever will they act on after your training? What about your "rationality training" will lead them to take actions as though they want things? What will the results be?

1b. To illustrate what I mean: once I taught a rationality technique to SPARC high schoolers (probably the first year of SPARC, ...

I'm trying to build my own art of rationality training, and I've started talking to various CFAR instructors about their experiences – things that might be important for me to know but which hadn't been written up nicely before.

Perhaps off topic here, but I want to make sure you have my biggest update if you're gonna try to build your own art of rationality training.

It is, basically: if you want actual good to result from your efforts, it is crucial to build from and enable consciousness and caring, rather than to try to mimic their functionality.

If you're...

I'm not Critch, but to speak my own defense of the numeracy/scope sensitivity point:

IMO, one of the hallmarks of a conscious process is that it can take different actions in different circumstances (in a useful fashion), rather than simply doing things the way that process does it (following its own habits, personality, etc.). ("When the facts change, I change my mind [and actions]; what do you do, sir?")

Numeracy / scope sensitivity is involved in, and maybe required for, the ability to do this deeply (to change actions all the way up to one's entire...

I am pretty far from having fully solved this problem myself, but I think I'm better at this than most people, so I'll offer my thoughts.

My suggestion is to not attempt to "figure out goals and what to want," but to "figure out blockers that are making it hard to have things to want, and solve those blockers, and wait to let things emerge."

Some things this can look like:

- Critch's "boredom for healing from burnout" procedures. Critch has some blog posts recommending boredom (and resting until quite bored) as a method for recovering one's ability

Okay, maybe? But I've also often been "real into that" in the sense that it resolves a dissonance in my ego-structure-or-something, or in the ego-structure-analog of CFAR or some other group-level structure I've been trying to defend, and I've been more into "so you don't get to claim I should do things differently" than into whether my so-called "goal" would work. Cf "people don't seem to want things."

Surprise 4: How much people didn't seem to want things

And, the degree to which people wanted things was even more incoherent than I thought. I thought people wanted things but didn't know how to pursue them.

[I think Critch trailed off here, but implication seemed to be "basically people just didn't want things in the first place"]

I concur. From my current POV, this is the key observation that should have instigated, and should still instigate, a basic attempt to model what humans actually are and what is actually up in today's humans. It's too b...

I'm curious to hear how you arrived at the conclusion that a belief is a prediction.

I got this in part from Eliezer's post "Making Beliefs Pay Rent (in Anticipated Experiences)". IMO, this premise (that beliefs should try to be predictions, and should try to be accurate predictions) is one of the cornerstones that LessWrong has been based on.

I love this post. (Somehow only just read it.)

My fav part:

> In the context of quantilization, we apply limited steam to projects to protect ourselves from Goodhart. "Full steam" is classically rational, but we do not always want that. We might even conjecture that we never want that.

To elaborate a bit:

It seems to me that when I let projects pull me insofar as they pull me, and when I find a thing that is interesting enough that it naturally "gains steam" in my head, it somehow increases the extent to which I am locally immune fro...
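For readers unfamiliar with quantilization, here is a minimal sketch of the idea in the quoted passage: rather than taking the single highest-scoring action ("full steam"), a quantilizer picks at random from the top q fraction of some base set of actions. This assumes a uniform base distribution over a finite list of actions, and the `quantilize` helper is hypothetical; it illustrates the general construction, not the post's own formalism.

```python
import math
import random
from typing import Callable, Sequence, TypeVar

A = TypeVar("A")

def quantilize(actions: Sequence[A], utility: Callable[[A], float], q: float,
               rng: random.Random) -> A:
    """Pick uniformly at random from the top q fraction of actions, ranked by utility.

    Assumes a uniform base distribution over `actions` (an illustrative simplification).
    q = 1.0 is just a random base action; as q shrinks toward 0 this approaches argmax.
    """
    if not 0.0 < q <= 1.0:
        raise ValueError("q must be in (0, 1]")
    ranked = sorted(actions, key=utility, reverse=True)
    k = max(1, math.ceil(q * len(ranked)))  # size of the top-q slice, at least one action
    return rng.choice(ranked[:k])

rng = random.Random(0)
actions = list(range(10))
print(quantilize(actions, utility=lambda a: a, q=0.5, rng=rng))   # some action in 5..9
print(quantilize(actions, utility=lambda a: a, q=0.01, rng=rng))  # top slice is one action: 9
```

The parameter q plays the role of "limited steam": the optimizer still leans toward better actions, but never puts all its weight on the single action its (possibly Goodharted) score likes best.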

I got to the suggestion by imagining: suppose you were about to quit the project and do nothing. And now suppose that instead of that, you were about to take a small amount of relatively inexpensive-to-you actions, and then quit the project and do nothing. What're the "relatively inexpensive-to-you actions" that would most help?

Publishing the whole list, without precise addresses or allegations, seems plausible to me.

I guess my hope is: maybe someone else (a news story, a set of friends, something) would help some of those on the list to take i...