What are you getting paid in?

Crossposting this essay by my friend, Leila Clark.

A long time ago, a manager friend of mine wrote a book to collect his years of wisdom. He never published it, which is a shame because it was full of interesting insights. One that I think a lot about today was the question: “How are you paying your team?”

This friend worked in finance. You might think that people in finance, like most people, are paid in money. But it turns out that even in finance, you can’t always pay and motivate people with money alone. Often, there just isn’t enough money to go around. Even if there is, managers are often captive to salary caps and performance bands. In any case, it’s awkward to pay one person ten times more than another, even if one person is clearly contributing ten times more than the other (many such cases exist).

With this question, my manager friend wanted to point out that you can pay people in lots of currencies. Among other things, you can pay them in quality of life, prestige, status, impact, influence, mentorship, power, autonomy, meaning, great teammates, stability and fun. And in fact most people don’t just want to be paid in money; they want to be paid in some mixture of these things.

To demonstrate this point, take musicians and financiers. A successful financier is much, much richer in dollars than a successful musician. Some googling suggests that Mitski and Grimes, both very successful alternative musicians, have net worths of about $3-5m. $5m is barely notable in the New York high society circles that most financiers run in. Even Taylor Swift, maybe one of the most successful musicians of all time, has a net worth of, generously, $1b; Ken Griffin, one of the most successful financiers of all time, has a net worth of $33b. But more people want to be musicians, and I think it’s because musicians are paid in ways that financiers aren’t. Most obviously, musicians are way cooler. They get to interact with t

Curious whether y'all considered Tiptap as a base for the editor, and if so why you decided against it?

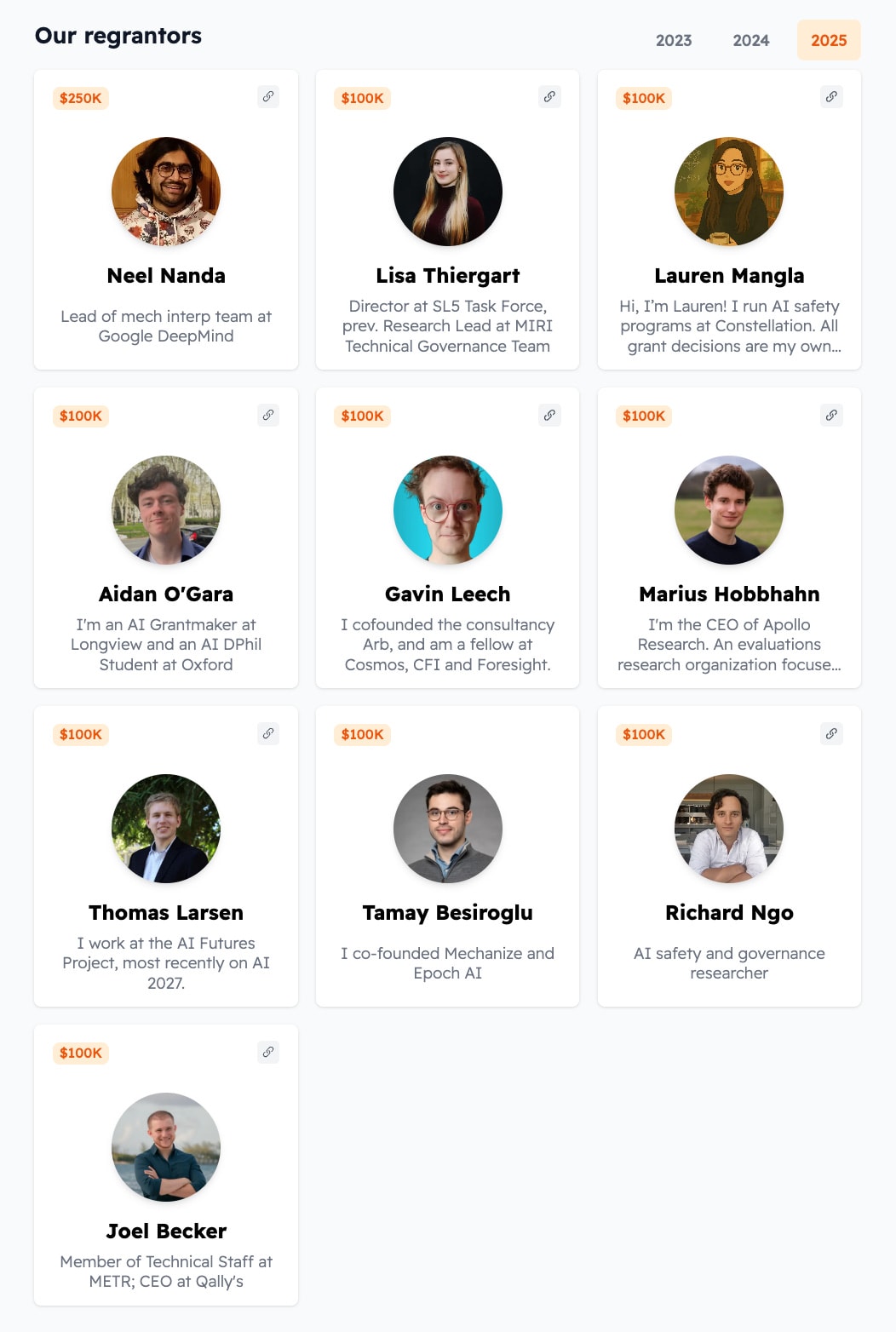

(Tiptap is what we use for Manifold/Manifund and there are definitely some warts -- e.g. markdown not being supported out of the box, though recently I think that's changed -- but mostly I've liked it.)