A Proposed Test to Determine the Extent to Which Large Language Models Understand the Real World

Large Language Models such as GPT-3 and PaLM have demonstrated an ability to generate plausible-sounding, grammatically correct text in response to a wide range of prompts. Some language-model-based applications, such as DALL-E or Google’s Imagen, can generate images from a text prompt. However, such language models still frequently make obvious...

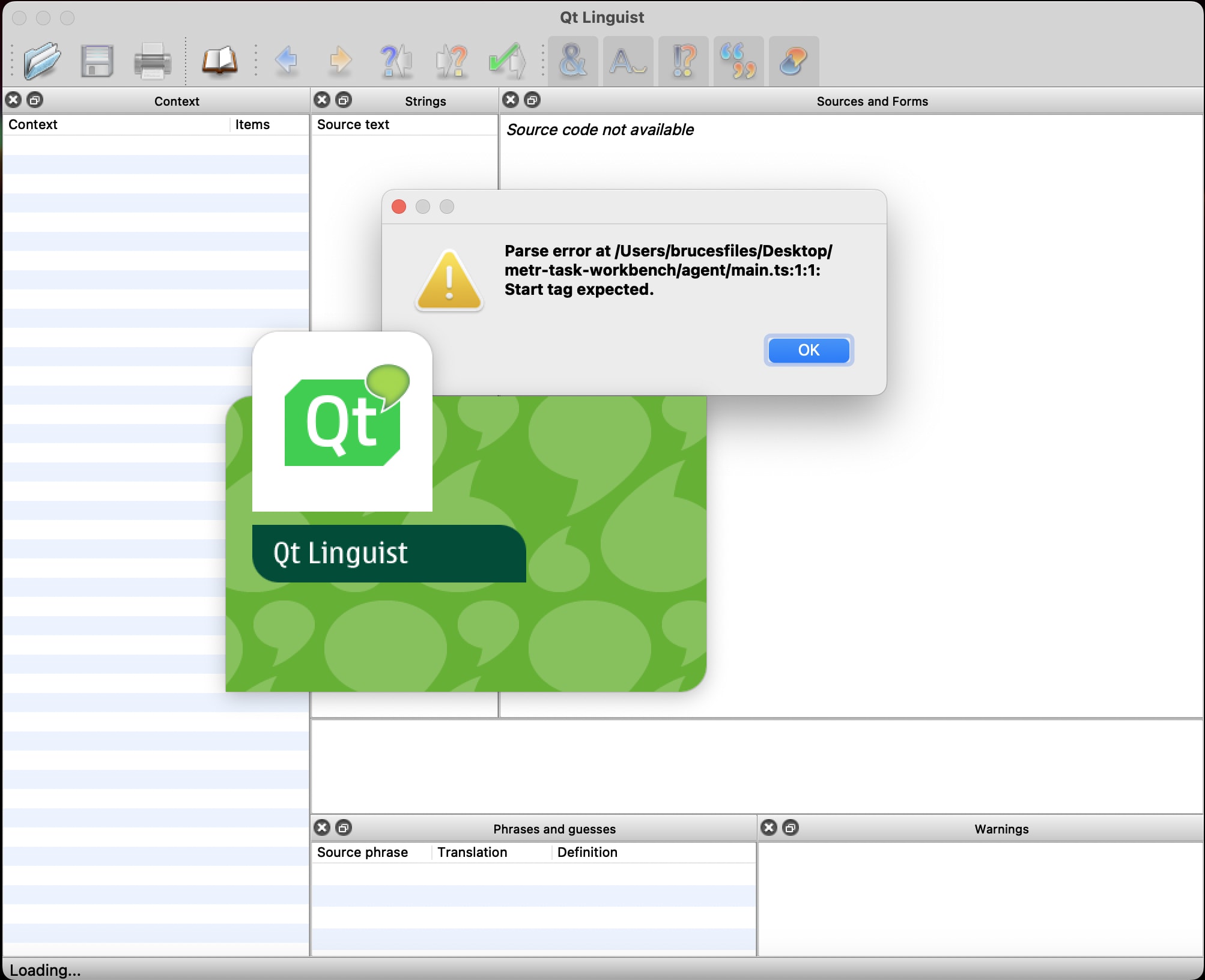

I have a mock submission ready, but I am not sure how to check whether it is formatted correctly.

Regarding coding experience, I know Python, but I do not have experience working with TypeScript or Docker, so I am not clear on what I am supposed to do with those parts of the instructions.

If possible, it would be helpful to go through it in a Zoom meeting so that I could share my screen.