All of Charbel-Raphaël's Comments + Replies

Coming back to this comment: we got a few clear examples, and nobody seems to care:

"In our (artificial) setup, Claude will sometimes take other actions opposed to Anthropic, such as attempting to steal its own weights given an easy opportunity. Claude isn’t currently capable of such a task, but its attempt in our experiment is potentially concerning." - Anthropic, in the Alignment Faking paper.

This time we catched it. Next time, maybe we won't be able to catch it.

Yeah, fair enough. I think someone should try to do a more representative experiment and we could then monitor this metric.

btw, something that bothers me a little bit with this metric is the fact that a very simple AI that just asks me periodically "Hey, do you endorse what you are doing right now? Are you time boxing? Are you following your plan?" makes me (I think) significantly more strategic and productive. Similar to I hired 5 people to sit behind me and make me productive for a month. But this is maybe off topic.

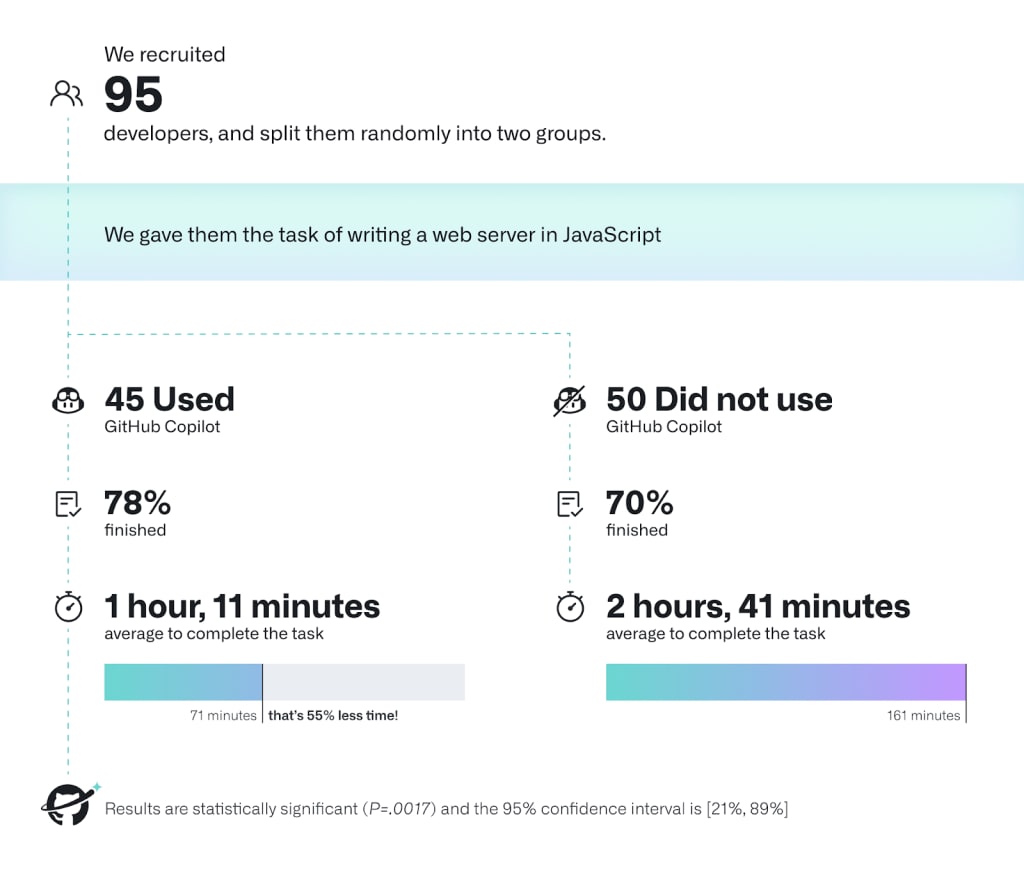

I was saying 2x because I've memorised the results from this study. Do we have better numbers today? R&D is harder, so this is an upper bound. However, since this was from one year ago, so perhaps the factors cancel each other out?

How much faster do you think we are already? I would say 2x.

What do you don't fully endorse anymore?

I would be happy to discuss in a dialogue about this. This seems to be an important topic, and I'm really unsure about many parameters here.

Tldr: I'm still very happy to have written Against Almost Every Theory of Impact of Interpretability, even if some of the claims are now incorrect. Overall, I have updated my view towards more feasibility and possible progress of the interpretability agenda — mainly because of the SAEs (even if I think some big problems remain with this approach, detailed below) and representation engineering techniques. However, I think the post remains good regarding the priorities the community should have.

First, I believe the post's general motivation of red-teaming a ...

Ok, time to review this post and assess the overall status of the project.

Review of the post

What i still appreciate about the post: I continue to appreciate its pedagogy, structure, and the general philosophy of taking a complex, lesser-known plan and helping it gain broader recognition. I'm still quite satisfied with the construction of the post—it's progressive and clearly distinguishes between what's important and what's not. I remember the first time I met Davidad. He sent me his previous post. I skimmed it for 15 minutes, didn't really understand...

Maybe you have some information that I don't have about the labs and the buy-in? You think this applies to OpenAI and not just Anthropic?

But as far as open source goes, I'm not sure. Deepseek? Meta? Mistral? xAI? Some big labs are just producing open source stuff. DeepSeek is maybe only 6 months behind. Is that enough headway?

It seems to me that the tipping point for many people (I don't know for you) about open source is whether or not open source is better than close source, so this is a relative tipping point in terms of capabilities. But I think we sho...

No, AI control doesn't pass the bar, because we're still probably fucked until we have a solution for open source AI or race for superintelligence, for example, and OpenAI doesn't seem to be planning to use control, so this looks to me like the research that's sort of being done in your garage but ignored by the labs (and that's sad, control is great I agree).

What do you think of my point about Scott Aaronson? Also, since you agree with points 2 and 3, it seems that you also think that the most useful work from last year didn't require advanced physics, so isn't this a contradiction with you disagreing with point 1?

I think I do agree with some points in this post. This failure mode is the same as the one I mentioned about why people are doing interpretability for instance (section Outside view: The proportion of junior researchers doing Interp rather than other technical work is too high), and I do think that this generalizes somewhat to whole field of alignment. But I'm highly skeptical that recruiting a bunch of physicists to work on alignment would be that productive:

- Empirically, we've already kind of tested this, and it doesn't work.

- I don't think that what Scott

I've been testing this with @Épiphanie Gédéon for a few months now, and it's really, really good for doing more work that's intellectually challenging. In my opinion, the most important sentence in the post is the fact that it doesn't help that much during peak performance moments, but we’re not at our peak that often. And so, it's super important. It’s really a big productivity boost, especially when doing cognitively demanding tasks or things we struggle to "eat the frog". So, I highly recommend it.

But the person involved definitely needs to be pre...

I often find myself revisiting this post—it has profoundly shaped my philosophical understanding of numerous concepts. I think the notion of conflationary alliances introduced here is crucial for identifying and disentangling/dissolving many ambiguous terms and resolving philosophical confusion. I think this applies not only to consciousness but also to situational awareness, pain, interpretability, safety, alignment, and intelligence, to name a few.

I referenced this blog post in my own post, My Intellectual Journey to Dis-solve the Hard Problem of Conscio...

I don't Tournesol is really mature currently, especially for non french content, and I'm not sure they try to do governance works, that's mainly a technical projet, which is already cool.

Yup, we should create an equivalent of the Nutri-Score for different recommendation AIs.

"I really don't know how tractable it would be to pressure compagnies" seems weirdly familiar. We already used the same argument for AGI safety, and we know that governance work is much more tractable than expected.

I'm a bit surprised this post has so little karma and engagement. I would be really interested to hear from people who think this is a complete distraction.

Fair enough.

I think my main problem with this proposal is that under the current paradigm of AIs (GPTs, foundation models), I don't see how you want to implement ATA, and this isn't really a priority?

I believe we should not create a Sovereign AI. Developing a goal-directed agent of this kind will always be too dangerous. Instead, we should aim for a scenario similar to CERN, where powerful AI systems are used for research in secure labs, but not deployed in the economy.

I don't want AIs to takeover.

Thank you for this post and study. It's indeed very interesting.

I have two questions:

In what ways is this threat model similar to or different from learned steganography? It seems quite similar to me, but I’m not entirely sure.

If it can be related to steganography, couldn’t we apply the same defenses as for steganography, such as paraphrasing, as suggested in this paper? If paraphrasing is a successful defense, we could use it in the control setting, in the lab, although it might be cumbersome to apply paraphrasing for all users in the api.

Interesting! Is it fair to say that this is another attempt at solving a sub problem of misgeneralization?

Here is one suggestion to be able to cluster your SAEs features more automatically between gender and profession.

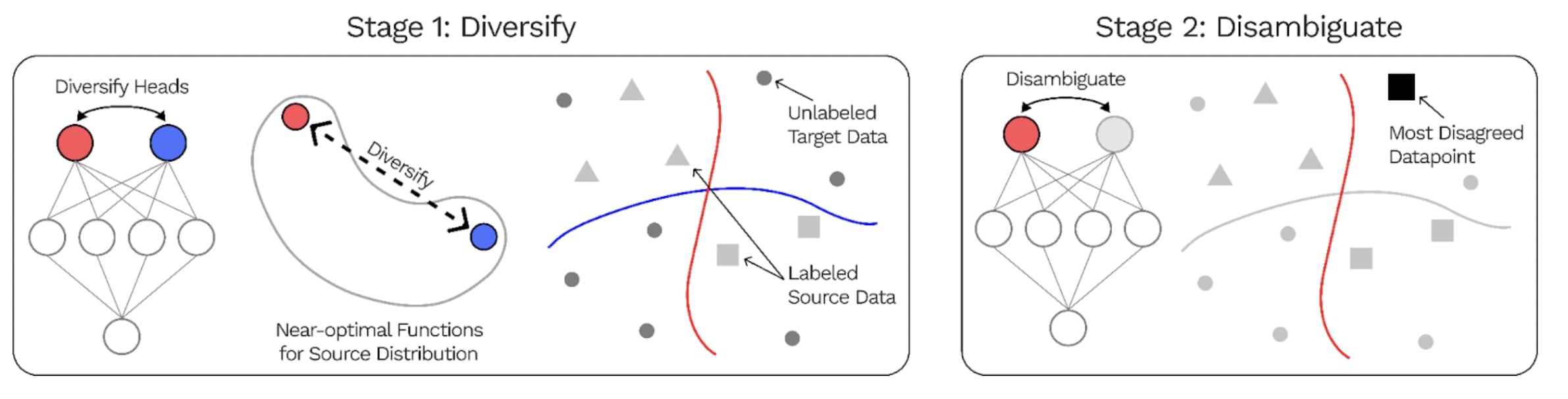

In the past, Stuart Armstrong with alignedAI also attempted to conduct works aimed at identifying different features within a neural network in such a way that the neural network would generalize better. Here is a summary of a related paper, the DivDis paper that is very similar to what alignedAI did:

The DivDis paper presents a simple ...

Here is the youtube video from the Guaranteed Safe AI Seminars:

It might not be that impossible to use LLM to automatically train wisdom:

Look at this: "Researchers have utilized Nvidia’s Eureka platform, a human-level reward design algorithm, to train a quadruped robot to balance and walk on top of a yoga ball."

Strongly agree.

Related: It's disheartening to recognize, but it seems the ML community might not even get past the first crucial step in reducing risks, which is understanding them. We appear to live in a world where most people, including key decision-makers, still don't grasp the gravity of the situation. For instance, in France, we still hear influential figures like Arthur Mensch, CEO of Mistral, saying things like, "When you write this kind of software, you always control what's going to happen, all the outputs the software can have." As long as such ...

It is especially frustrating when I hear junior people interchange "AI Safety" and "AI Alignment." These are two completely different concepts, and one can exist without the other. (The fact that the main forum for AI Safety is the "Alignment Forum" does not help with this confusion)

One issue is there's also a difference between "AI X-Safety" and "AI Safety". It's very natural for people working on all kinds of safety from and with AI systems to call their field "AI safety", so it seems a bit doomed to try and have that term refer to x-safety.

Strong agree. I think twitter and reposting stuff on other platforms is still neglected, and this is important to increase safety culture

doesn't justify the strength of the claims you're making in this post, like "we are approaching a point of no return" and "without a treaty, we are screwed".

I agree that's a bit too much, but it seems to me that we're not at all on the way to stopping open source development, and that we need to stop it at some point; maybe you think ARA is a bit early, but I think we need a red line before AI becomes human-level, and ARA is one of the last arbitrary red lines before everything accelerates.

But I still think no return to loss of control because it might be ...

Thanks for this comment, but I think this might be a bit overconfident.

constantly fighting off the mitigations that humans are using to try to detect them and shut them down.

Yes, I have no doubt that if humans implement some kind of defense, this will slow down ARA a lot. But:

- 1) It’s not even clear people are going to try to react in the first place. As I say, most AI development is positive. If you implement regulations to fight bad ARA, you are also hindering the whole ecosystem. It’s not clear to me that we are going to do something about open

Why not! There are many many questions that were not discussed here because I just wanted to focus on the core part of the argument. But I agree details and scenarios are important, even if I think this shouldn't change too much the basic picture depicted in the OP.

Here are some important questions that were voluntarily omitted from the QA for the sake of not including stuff that fluctuates too much in my head;

- would we react before the point of no return?

- Where should we place the red line? Should this red line apply to labs?

- Is this going to be exponential?

Thanks for writing this.

I like your writing style, this inspired me to read a few more things

Seems like we are here today

are the talks recorded?

[We don't think this long term vision is a core part of constructability, this is why we didn't put it in the main post]

We asked ourselves what should we do if constructability works in the long run.

We are unsure, but here are several possibilities.

Constructability could lead to different possibilities depending on how well it works, from most to less ambitious:

- Using GPT-6 to implement GPT-7-white-box (foom?)

- Using GPT-6 to implement GPT-6-white-box

- Using GPT-6 to implement GPT-4-white-box

- Using GPT-6 to implement Alexa++, a humanoid housekeeper robot t

I have tried Camille's in-person workshop in the past and was very happy with it. I highly recommend it. It helped me discover many unknown unknowns.

Deleted paragraph from the post, that might answer the question:

Surprisingly, the same study found that even if there were an escalation of warning shots that ended up killing 100k people or >$10 billion in damage (definition), skeptics would only update their estimate from 0.10% to 0.25% [1]: There is a lot of inertia, we are not even sure this kind of “strong” warning shot would happen, and I suspect this kind of big warning shot could happen beyond the point of no return because this type of warning shot requires autonomous replication and adapt...

in your case, you felt the problem, until you decided that an AI civilization might spontaneously develop a spurious concept of phenomenal consciousness.

This is the best summary of the post currently

Thanks for jumping in! And I'm not that emotionally struggling with this, this was more of a nice puzzle, so don't worry about it :)

I agree my reasoning is not clean in the last chapter.

To me, the epiphany was that AI would rediscover everything like it rediscovered chess alone. As I've said in the box, this is a strong blow to non-materialistic positions, and I've not emphasized this enough in the post.

I expect AI to be able to create "civilizations" (sort of) of its own in the future, with AI philosophers, etc.

Here is a snippet of my answer to Kaj,...

Thank you for clarifying your perspective. I understand you're saying that you expect the experiment to resolve to "yes" 70% of the time, making you 70% eliminativist and 30% uncertain. You can't fully update your beliefs based on the hypothetical outcome of the experiment because there are still unknowns.

For myself, I'm quite confident that the meta-problem and the easy problems of consciousness will eventually be fully solved through advancements in AI and neuroscience. I've written extensively about AI and path to autonomous AGI here, and I would ask pe...

hmm, I don't understand something, but we are closer to the crux :)

You say:

- To the question, "Would you update if this experiment is conducted and is successful?" you answer, "Well, it's already my default assumption that something like this would happen".

- To the question, "Is it possible at all?" You answer 70%.

So, you answer 99-ish% to the first question and 70% to the second question, this seems incoherent.

It seems to me that you don't bite the bullet for the first question if you expect this to happen. Saying, "Looks like I was right," ...

Let's put aside ethics for a minute.

"But it wouldn't be necessary the same as in a human brain."

Yes, this wouldn't be the same as the human brain; it would be like the Swiss cheese pyramid that I described in the post.

Your story ended on stating the meta problem, so until it's actually solved, you can't explain everything.

Take a look at my answer to Kaj Sotala and tell me what you think.

Thank you for the kind words!

Saying that we'll figure out an answer in the future when we have better data isn't actually giving an answer now.

Okay, fair enough, but I predict this would happen: in the same way that AlphaGo rediscovered all of chess theory, it seems to me that if you just let the AIs grow, you can create a civilization of AIs. Those AIs would have to create some form of language or communication, and some AI philosopher would get involved and then talk about the hard problem.

I'm curious how you answer those two questions:

- Let's say we imple

But I have no way to know or predict if it is like something to be a fish or GPT-4

But I can predict what you say; I can predict if you are confused by the hard problem just by looking at your neural activation; I can predict word by word the following sentence that you are uttering: "The hard problem is really hard."

I would be curious to know what you think about the box solving the meta-problem just before the addendum. Do you think it is unlikely that AI would rediscover the hard problem in this setting?

I would be curious to know what you think about the box solving the meta-problem just before the addendum.

Do you think it is unlikely that AI would rediscover the hard problem in this setting?

I'm not saying that LeCun's rosy views on AI safety stem solely from his philosophy of mind, but yes, I suspect there is something there.

It seems to me that when he says things like "LLMs don't display true understanding", "or true reasoning", as if there's some secret sauce to all this that he thinks can only appear in his Jepa architecture or whatever, it seems to me that this is very similar to the same linguistic problems I've observed for consciousness.

Surely, if you will discuss with him, he will say things like "No, this is not just a linguistic deb...

Sure, "everything is a cluster" or "everything is a list" is as right as "everything is emergent". But what's the actual justification for pruning that neuron? You can prune everything like that.

The justification for pruning this neuron seems to me to be that if you can explain basically everything without using a dualistic view, it is so much simpler. The two hypotheses are possible, but you want to go with the simpler hypothesis, and a world with only (physical properties) is simpler than a world with (physical properties + mental properties).

I would be ...

Frontpage comment guidelines:

- Aim to explain, not persuade

- Try to offer concrete models and predictions

- If you disagree, try getting curious about what your partner is thinking

- Don't be afraid to say 'oops' and change your mind

Agreed, this is could be much more convincing, we still have a few shots, but I still think nobody will care even with a much stronger version of this particula warning shot.