All of Chipmonk's Comments + Replies

Can you think of examples like this in the broader AI landscape? - What are the best examples of self-fulfilling prophecies in AI alignment?

I don't feel like I learned anything new from the post.

This surprises me! Wait so-

- The "How does one-shotting happen?" section didn't have anything interesting for you? (Have you seen stuff like this elsewhere?)

- Did you already know one-shotting was possible?

since your bullet-point list in the beginning isn't detailed enough for anyone to try to replicate the method.

Wait I'm confused- this is not the purpose of the post

Also notable is that you only have positive examples for your method

The purpose of this post is not advertisement. It's to discuss one-shots

Especially, how would you be able to distinguish between your approach convincing your customers they were helped, instead of actually changing their behavior?

See above

Would anyone like to help me edit a better version of this?

Oh I like "patients" ("clients"). I'll think about the rest, thanks. I'm just not sure how to write anything useful and legible without talking about my own experience and what I have the most data for?

Also I see the point of your last bullet where "my business" is the subject hm

any suggestions for how to talk about this stuff without having it read like an advertisement? i'm genuinely interested in the idea of one-shotting and legibilizing evidence that quick growth is possible

any updates on how this is going btw? (doing retroactive funding research)

what came of this? (doing research on bounties, prizes, and retroactive funding rn)

fwiw, FABRIC was able to get funding in November 2024 (who knows if this date is correct though)

nvm this was an "exit grant" lmao

Now that this is "over", I'd be fascinated to see a post about what the fundraising process was like for you and what can be learned. Seems like a big L for retroactive funding for example

Aside: I'm surprised you're suggesting people get validation --> people feel secure ? This does not at all seem like the causality to me (though I'm aware most people probably think like this).

Prediction: In the absence of radically improved psychotechnology, a significant fraction of people will always find a way to feel insecure.

hmm i suspect releasing these metrics could make my customers significantly more annoying. like, early adopters are fun and experimental. but if i make it seem not risky then i get risk-averse people who tend to be prickly

so maybe i will compile and release this data but i would need to figure out how to do it in a way that doesn't change the funnel

Any updates on this?

Any updates on this?

I wonder if you could set up a conditional donation? "I donate $X, minus if total donations exceed $3M"

i like this thanks. might take a bit of time to put together but interested

made some light edits because of this comment, thanks

oh ok i might start doing that. knowing my calibration on that would be nice

oh ok hm. i also don't want to be incentivized to not give easy-for-me help to people with low odds of success though

could you give a few examples?

also seems time-intensive hmmmm

also, i thought about it more and i really like the metric of "results generated per hour"

:D i really hope bounties catch on

wow this is controversial (my own vote is +6)

wonder why

I upvoted for the novelty of a rationalist trying a bounty based career. But also this halfway reads as an advertisement for your life coaching service. I wouldn’t want to see much more in that direction.

- One-sentence summary: Formalise one piece of morality: the causal separation between agents and their environment. See also Open Agency Architecture.

- Theory of change: Formalise (part of) morality/safety, solve outer alignment.

Chris Lakin here - this is a very old post and What does davidad want from «boundaries»? should be the canonical link

Why SPY over QQQ?

available on the website at least

Why was this post tagged as boundaries/membranes? I'm inclined to remove the tag.

makes sense

another weird bug is if i click the link i was just sent in my email, it brings me to a 403 Forbidden page (even though the URLs of this functional page and that 403 page look identical)

I've run two workshops at Lighthaven and it's pretty unthinkable to run a workshop anywhere else in the Bay Area. Lightcone has really made it easy to run overnight events without setup

Yeah i'm confused about what to name it. we can always change it later i guess.

also let me know if you have any posts you want me to definitely tag for it that you think i might miss otherwise

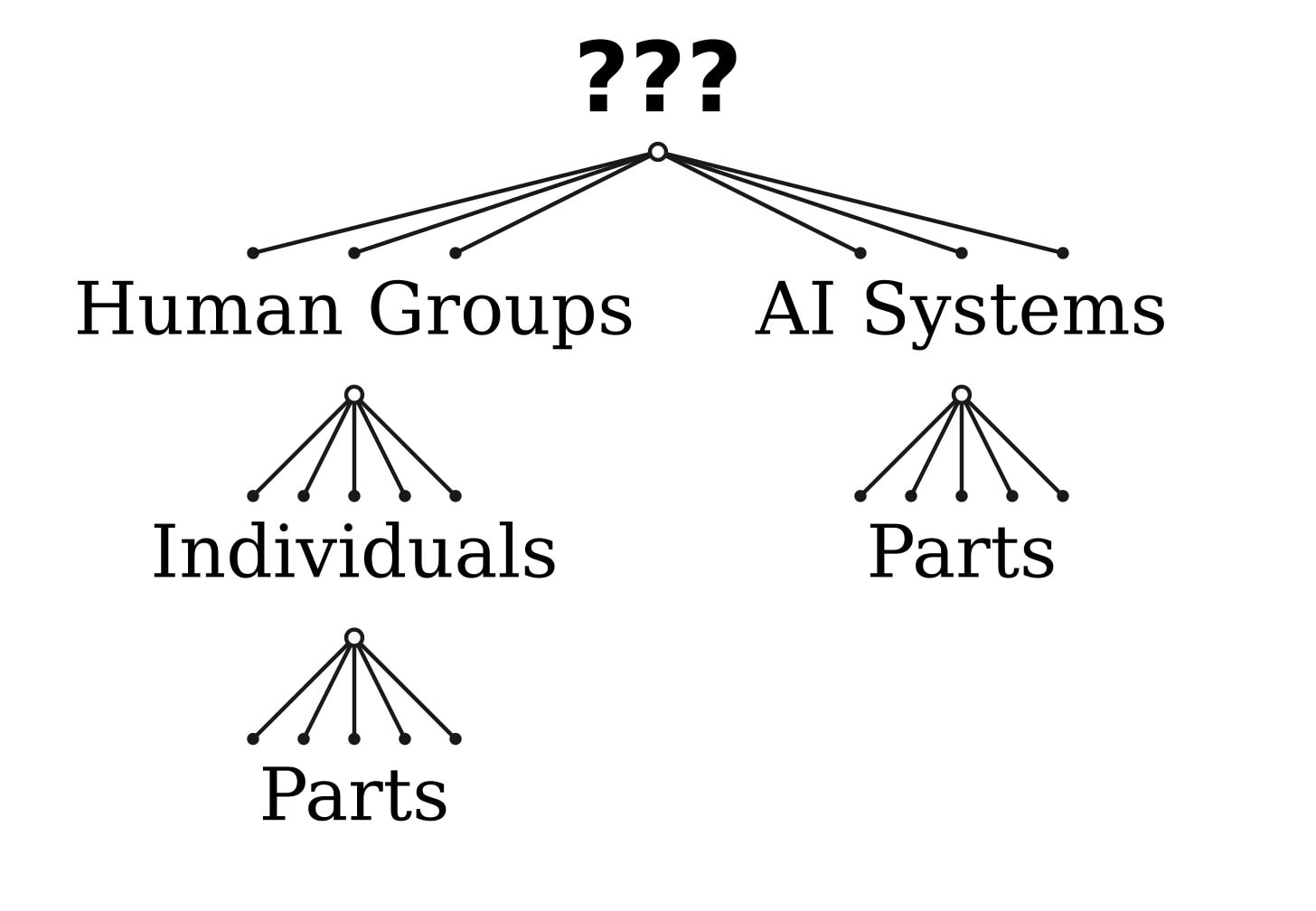

Do we have a LessWrong tag for "hierarchical agency" or "multi-scale alignment" or something? Should I make one?

I just made a twitter list with accounts interested in hierarchical agency (or what i call "multi-scale alignment"). Lmk who should be added

Random but you might like this graphic I made representing hierarchical agency from my post today on a very similar idea. What would you change about it?

This was an impressive demonstration of Claude for interviews. Was this one take?

(Also what prompt did you use? I like how your Claude speaks.)

There was some selection of branches, and one pass of post-processing.

It was after ~30 pages of a different conversation about AI and LLM introspection, so I don't expect the prompt alone will elicit the "same Claude". Start of this conversation was

Thanks! Now, I would like to switch to a slightly different topic: my AI safety oriented research on hierarchical agency. I would like you to role-play an inquisitive, curious interview partner, who aims to understand what I mean, and often tries to check understanding using paraphrasing, giving examples, and si...

I'm glad you wrote this! I've been wanting to tell others about ACS's research and finally have a good link

Great question, thanks!

I think you're correct in pointing towards the existence of basically-all-downside genetic conditions, but I still think these are in the minority. Moreover, even most of those don't create a big issue on the object level, compared to how people might feel about the issue as a result.

This argument doesn't extend to conditions like Huntington's, but if a person is missing a pinky finger, most of the issues the person is going to face are related to social factors and their own emotions, not the physical aspect.

I also just say this from experience helping others.

I did not say that depression is always a strategy for everyone.

Eliezer likes it but lesswrong doesn't

can someone explain to me why this is so controversial

What would you say that the main types of power are?

My list (for humans): physical security, financial security, social security, emotional security (this one you can only give yourself though)

In other cases, or for other reasons, they might be instead set up to demand results, and evaluate primarily based on results.

Why might it be set up like that? Seems potentially quite irrational. Veering into motivated reasoning territory here imo

Maybe, but that also requires that the other group members were (irrationally) failing to consider that the “attempt could've been good even if the luck was bad”.

In human groups, people often do gain (some) reputation for noble failures (is this wrong?)

what are the examples of the curriculum/activities you're considering/planning?