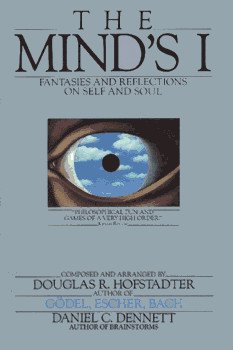

A Rationalist's Bookshelf: The Mind's I (Douglas Hofstadter and Daniel Dennett, 1981)

When the call to compile a reading list for new rationalists went out, contributor djcb responded by suggesting The Mind's I: Fantasies and Reflections on Self and Soul, a compilation of essays, fictions and excerpts "composed and arranged" by Douglas Hofstadter and Daniel Dennett. Cut to me peering guiltily over...