That's very interesting, and I think I'll lean toward this perspective too (giving more consideration to those who don't hold a view that's obvious to me). I'd say this is due to the path that's led me to veganism. I was aware of my own moral inconsistencies but also of my struggles to do anything about them, so I don't really expect everyone, or even most people, to notice or change their own inconsistencies, because it's very hard.

However, I also expect that for some vegans it can be the opposite case and lead them to more intolerance, because in the end their view is obvious to them.

But actually yes, you've made me reconsider and I'll remove that part and leave it at "We love dogs. We eat pigs. We wear cows", because I did want to use examples more universal among my audience than the ones I chose.

Totally, I didn't want to suggest it was everyone's point of view, more like that of those in my circle, or at least mine until not too long ago. Certainly dogs and horses are eaten in some places (horse is sometimes eaten in Spain, although much less commonly than in the past), just like bullfighting is accepted by many in Spain. My intention was to point out several inconsistent attitudes toward animals we commonly exhibit; the fact that these attitudes change so much depending on the country and culture makes them, to me, more "arbitrary" (in the sense that they depend more on particularities of the context/history than on consistent moral beliefs about the way things should be).

This resonates. I do have the tendency to read and think too much about whether this is the right thing to do (e.g., I keep following links and find an article that says "you should do this instead", then I think "hmm maybe I should", until I find the next advice that convinces me otherwise). And in the end, of course, I get much less done (and learn much less) than I would have if I had just tried something out.

Thanks for suggesting this shift in perspective towards action.

Thanks a lot for this article, Neel! As a recent Bachelor's graduate embarking on this journey into AI Safety research on my own, I find this very timely, especially the steps, mindsets, and resources you share to keep in mind.

My core question revolves around "Stage 0", even before starting to learn the basics. How much time would you recommend dedicating to exploring and deciding on a specific research agenda (like mechanistic interpretability vs others) before diving into learning the basics required for research in that sub-field?

My concern is that the learning path and all that follows are very dependent on the chosen agenda, and committing to a direction inevitably involves a significant time investment. Therefore, for someone dedicating themselves full-time to AI Safety research from day 1, should this initial agenda exploration and prioritisation be in the order of hours, days, or weeks?

(Assuming a foundational understanding of the alignment problem, e.g., from the BlueDot course and other key resources.)

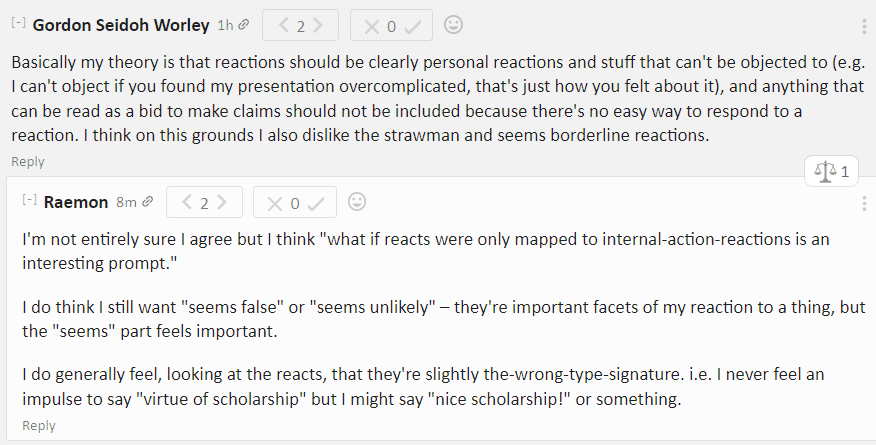

I partly support the spirit behind this feature, of providing more information (especially to the commenter), making the readers more engaged and involved, and expressing a reaction with more nuance than with a mere upvote/downvote. I also like that, as with karma, there are options for negative (but constructive) feedback, which I mentioned here when reviewing a different social discussions platform that had only positive reactions such as "Aha!" and "clarifying".

On the other hand, I suspect (but could be wrong) that this extra information could also have the opposite effect of "anchoring" the readers of the comments and biasing them towards the reactions left by others. If they saw that a comment had received a "verified" or "wrong" reaction, for example, they could anchor on that before reading the comment. Maybe this effect would be less pronounced than in other communities, but I don't think LessWrong would be unaffected by this.

(Comment on UI: when there are nested comments, it can be confusing to tell whether the reaction corresponds to the parent or the child comment:

Edit: I see Raemon already mentioned this)

Strong upvote. I found that almost every sentence was extremely clear and conveyed a transparent mental image of the argument made. Many times I found myself saying to myself "YES!" or "This checks" as I read a new point.

That might involve not working on a day you’ve decided to take off even if something urgent comes up; or deciding that something is too far out of your comfort zone to try now, even if you know that pushing further would help you grow in the long term

I will add that, for many routine activities or personal dilemmas with short- and long-term intentions pulling you in opposite directions (e.g. exercising, eating a chocolate bar), the boundaries you set internally should be explicit and unambiguous, and ideally be defined before you face the choice.

This is to avoid rationalising momentary preferences (I am lazy right now + it's a bit cloudy -> "the weather is bad, it might rain, I won't enjoy running as much as if it was sunny, so I won't go for a run") that run counter to your long-term goals, where the result of defecting a single time would be unnoticeable in the long run. In these cases it can be helpful to imagine your current self in a bargaining game with your future selves, in a sort of prisoner's dilemma. If your current self defects, your future selves will be more prone to defecting as well. If you coordinate and resist temptation now, future resistance will be more likely. In other words, you are establishing a Schelling fence.

At the same time, this Schelling fence should be neither too restrictive nor merciless towards every possible circumstance, because that would leave you more demotivated and even less inclined to stick to it. One should probably experiment with what works for oneself in order to find a compromise: a bucket broad and general enough for 70-90% of scenarios to fall into, while being merciful towards some needed exceptions.

Thank you very much for this sequence. I knew fear was a great influence (or impediment) over my actions, but I hadn't given it such a concrete form, and especially a weapon (= excitement) to combat it, until now.

Following matto's comment, I went through the Tuning Your Cognitive Strategies exercise, spotting microthoughts and extracting the cognitive strategies and deltas between such microthoughts. When evaluating a possible action, the (emotional as much as cognitive) delta "consider action X -> tiny feeling in my chest or throat -> meh, I'm not sure about X" seemed to recur quite often. Thanks to your pointers on fear and to introspecting about it, I have added "-> are you feeling fear? -> yes, I have this feeling in my chest -> is this fear helpful? -> Y, so no -> can you replace fear with excitement?" (a delta about noticing deltas) as a cognitive strategy.

The reason I (beware of other-optimizing) can throw away fear in most situations is that I have developed the mental techniques, awareness and strength to counter the negatives that fear wants to point at.

Like many, I developed fear as a kid, in response to being criticised or rejected, at a time when I didn't have the mental tools to deal with these situations. For example, I took things too personally, thought others' reactions were about me and my identity, and failed to put myself in others' shoes and understand that when other kids criticise, it is often unfounded and just to have a laugh. To protect my identity I developed aversion, a bias towards inaction, and fear of failure and of being criticised. This in turn led to demotivation, self-doubt, and underconfidence.

Now I can evaluate whether fear is an emotion worth having. Fear points at something real and valuable: the desire to do things well and be liked. But as I said, for me personally fear is something I can do away with in most situations because I have the tools to respond better to negative feedback. If I write an article and it gets downvoted, I won't take it as a personal issue that hurts my intrinsic worth; I will use the feedback to improve and update my strategies. In several cases, excitement can be much more useful (and motivating, leading to action) than fear: excitement of commenting or writing on LessWrong over fear of saying the wrong thing; excitement of talking or being with a girl rather than fear of rejection.

Hehe yes, it's not a rational argument to use for coming to this decision. But it's separate from my argument for becoming vegan — in the end, my reason for becoming vegan is that I believe that, accounting for uncertainty over their sentience, the suffering of all non-human animals doesn't justify the small benefit we obtain from them. Using the point of view of the future is just a mental device or technique to motivate me to make the change for something I already believed was right (sort of intentional compartmentalization in Dark Arts of Rationality?).

Besides, can I know what makes you disagree with veganism as a moral stance? Is it for instance that you don't believe animals are sentient?